Vol. 10 No. 2, January 2005


Introduction. Knowing the credibility of information about genetically modified food on the Internet is critical to the everyday life information seeking of consumers as they form opinions about this nascent agricultural technology. The Website Quality Evaluation Tool (WQET) is a valuable instrument that can be used to determine the credibility of Websites on any topic.

Method. This study sought to use the WQET to determine the quality of Websites in the context of biotechnology or genetically modified food and to seek one or more easily identified characteristics, such as bias, commitment, use of meta-tags and site update-access interval (length of time between last update of the site and the date reviewed) that might be used as a quick discriminator of a Website's quality.

Analysis. Using SPSS, analysis of variance and regression analyses were performed with the website variables of a population of one hundred Websites about genetically modified food.

Results. Only the site update-access interval was found to be a shortcut quality indicator with an inverse relationship. The longer the interval the lower the quality score.

Conclusion. The study established a model for Website quality evaluation. The update-access interval proved to be the single clear-cut indicator to judge Website quality in everyday information seeking.

Everyday life information seeking, as described by Savolainen, is a model for understanding how the ordinary person obtains information sources to preserve coherence in her way of life (Savolainen 1995). Many aspects of everyday life information seeking have been studied subsequently, especially as they relate to health-related information seeking (e.g., Johnson 2001; Wikgren 2001); however, many other aspects remain unexplored. Of particular interest is the constellation of questions surrounding the purchase of consumer goods, a primary component of maintaining a way of life for anyone in industrialized societies, such as the United States and Europe. How does information seeking play a role in the shaping of consumer decisions?

The use of genetic engineering techniques in the production of agricultural commodities has grown and developed in the past decade. In 2003 it was estimated that 145 million acres worldwide were planted with genetically engineered plants compared with no acreage in 1994, the year that the first genetically engineered crop was approved by the US government regulatory agencies (Pew Initiative... 2003b). In addition, approximately 80% of the processed foods sold in supermarkets are made from genetically engineered crops either through the use of processed oil, such as canola, or from a corn derivative, such as corn syrup (Hallman et al. 2003). Nonetheless, consumer acceptance of this new technology as an appropriate method of producing food for human consumption in the United States is still an open question. Recent surveys have shown that between 33% and 51% of American consumers are opposed to these products and would not buy them (Hallman et al. 2003; Pew Initiative... 2003a). This percentage has remained fairly consistent despite the fact that most consumers lack substantive information about what these products are and how they might be harmful or helpful. In a recent survey, Hallman et al. (2003) found that only 52% of those surveyed knew that genetically engineered foods are already in the supermarkets, while the Pew Initiative on Food and Biotechnology poll (2003) showed that only 34% of their sample had even heard of genetically modified food.

This lack of knowledge seems to reflect a lack of available information. If information is scarce on the topic, the questions are: What is the quality of the information available to the US public? How much is available? and What is the credible content that the many media channels, newspapers, magazines, television, and the Internet are carrying about this important subject? A multidisciplinary study undertaken by the Food Policy Institute of Rutgers, the State University of New Jersey is examining the information sources that are available for people seeking to learn more. By understanding the nature of information that consumers have been exposed to about this new technology and how they are exposed to it, the study hopes to generate a knowledge base necessary for understanding how consumers form opinions about food biotechnology and how to communicate more effectively with the public about biotechnology-related issues. This project is being conducted by an international team of researchers (McInerney et al. 2004). The Information Access part of the project, conducted by researchers at Rutgers University, first looked at the number of newspaper and magazine stories written in the decade between 1992 and 2002 (McInerney et al. 2004). The project continues to examine other channels for what some authors have called the 'public understanding of science' (Wynne 1995), including television coverage and the Internet.

As Wilson (1997) noted, the characteristics of the information being sought can pose a significant barrier to information seeking behaviour. Both the understanding of channels available to everyday life information seekers and the credibility of the information carried there are important to understanding the process that they might use to create knowledge. The characterization of what is carried in the many media channels that people are exposed to in their daily lives is a first step in studying what they might know about a topic, especially one where opinions are as mixed as in the case of genetically modified foods (Spink & Cole 2001).

The present work investigated the quality of Internet Websites as sources of information about genetically modified food. The Internet is now an accessible information channel for a majority of Americans; the PEW survey on Internet use in the United States completed in 2002 found that 59% of households in the United States have direct access to the Internet (Horrigan 2003) and there are estimates that 70.4% of the U.S. population uses this medium in some way (Internet World Stats. 2004). Recent research has shown that people use the Internet extensively to gather information (Flanagin & Metzger 2001), and they are more likely to give credence to the information they find on the Internet than they did in the past (Johnson & Kaye 2002). Johnson & Kaye found that readers using political Websites in the 2000 election found them more believable than during the 1996 election. In this environment of computer mediated information retrieval, it becomes critical to identify quality sites with substantive information, especially about a controversial topic.

Assessing the quality of an Internet Website is not as easy as it might seem. The question of Website quality has been defined by different disciplines in three distinct ways:

Each definition of quality leads to lists of criteria about what constitutes a quality site. McInerney (2000a) looked at all of these criteria from multiple studies on Web quality to form a comprehensive tool for evaluating the quality of a Website that would serve to assess its trustworthiness. Her guiding principle was that 'if information can pass a test of quality, it is most likely to prove trustworthy' (McInerney 2000a: 3) and therefore, have higher credibility. The Website Quality Evaluation Tool (WQET) that resulted from the analysis and synthesis of multiple Web quality studies is an interdisciplinary assessment instrument.

The tool requires an investment of time and careful consideration. It takes at least one half-hour to examine a Website thoroughly and apply quality criteria. This time commitment may be available to information professionals, but the public may not be willing to invest the same amount of time. Thus, the challenge is to devise a method that will lead the Internet user to the same conclusion as the WQET without the heavy time investment. Using a different assessment tool to assess the content of diabetes health sites, Seidman looked at domain as one such possible quality indicator, but no statistical correlation was found (Seidman 2003). Different results were obtained by Triese et al. (2003) when they compared readers' responses to different science Websites. Readers in that study rated the .gov sites as more credible than the .com sites.

The present study sought to connect other meta-characteristics of a site to the results obtained from using the WQET. There were three guiding research questions:

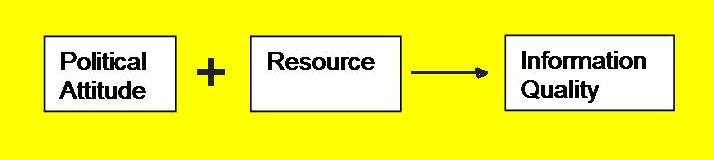

The model in Figure 1 shows the conceptual relationship among these questions. The proposed model shows that political attitudes combined with the level of resources available to an organization could affect the quality of the information on any given Website. The concepts political attitudes (that is, bias or stance) and resources have been operationalized for the present study by examining several variables. By bias we mean how the Website's sponsor views genetically modified (GM) crops or food and how strong the attitude might be regarding the benefits or disadvantages of growing genetically modified crops and using genetically modified food. A bias score was assigned to a Website using one of five levels that range from 'Very Opposed' to 'Very Favorable' towards genetically modified (GM) food; for example, Friends of the Earth, an environmental organization, is extremely opposed to genetically modified food so their Website received a rating of Very Opposed for bias.

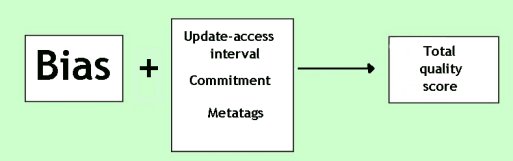

Resource levels were assessed by looking at three different factors: commitment, update-access interval, and meta-tags. The first factor, the amount of commitment that the organization shows towards genetically modified food, was measured by the amount of space on the site devoted to the topic. The second resource factor, site update-access interval, was measured by the length of time between the last time the Website was updated and the actual date of the review of the site. The third resource indicator, the presence of meta-tags, was used for keyword identification of the site's contents. Meta-tags are used in the HTML coding to describe the contents of the site. The rationale behind using these factors is that organizations that care about the quality of information on their site will include substantial amounts of information, will update their sites regularly to reflect new information and will assign keyword meta-tags in order to help information seekers locate information. Overall quality was measured by the score on the Website Quality Evaluation Tool (McInerney, 2000b). The empirical model of these variables is shown in Figure 2.
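The keyword meta-tags described above sit in the head of a page's HTML, so their presence can be checked mechanically. The following is a minimal sketch using only Python's standard library; the class name `KeywordMetaParser` and the function `extract_keywords` are illustrative names, not part of the study's procedure, and judging whether the keywords are used responsibly (that is, whether they describe the actual content) would still require a human reviewer.

```python
from html.parser import HTMLParser


class KeywordMetaParser(HTMLParser):
    """Collect the terms listed in <meta name="keywords" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.keywords = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None when an attribute has no value.
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "keywords":
            terms = [t.strip() for t in attr_map.get("content", "").split(",")]
            self.keywords.extend(t for t in terms if t)


def extract_keywords(html_text):
    """Return the list of keyword terms found in the page (empty if none)."""
    parser = KeywordMetaParser()
    parser.feed(html_text)
    return parser.keywords
```

An empty result corresponds to a site that does not use keyword meta-tags.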

Working with these models generated four research hypotheses, which were tested using the Website as a unit of analysis. In each case the null hypothesis is that the effect cannot be found. The four hypotheses are:

The primary objective of this research is to study the quality of Websites that provide information about genetically engineered foods. The first step in this process was to identify the sites using a purposive sampling technique. The second step was to determine the quality score for each identified site using the Website Quality Evaluation Tool. Coding was done over a six-month period by evaluating all the variables related to Web quality as articulated in the Website Quality Evaluation Tool and entering the score for each variable into a Microsoft Access database built expressly for this project. The database allowed for a total quality score to be calculated from a composite of all the variables, some of which were weighted according to their importance. The resulting data were exported to an SPSS 11.0 spreadsheet for analysis.

Purposive sampling techniques were used to gather subjects, that is, Websites for the study. The unit of analysis was a Website that had some information about agricultural biotechnology, genetically modified food, genetically engineered food or some variant of these terms. Individual pages about genetically modified food were the focal point of the analysis although the entire Website was analysed. In practice, this meant that information about the last date of update, authority, links, style and graphics were obtained from all of the pages on a Website. Content, authority, and coverage were primarily gleaned only from the most pertinent pages devoted to genetically engineered food.

An initial set of sixty-five Web sites was taken from a checked list compiled by a Rutgers University scholar who studied rhetorical strategies used on Websites by proponents and opponents of biotechnology (Quaglione 2002). Additional sites were identified using the top three search engines as rated by Greg Notess's authoritative Search Engine Showdown (Notess 2003). At the time of the research in 2003, Notess rated Google, WiseNut, and Altavista as the top three search engines. Three search phrases (genetically engineered food, genetically modified food, and genetically engineered crop) had previously been identified as the most popularly used terms for biotechnology food, and consequently these phrases were used to search for Websites in the search engines (McInerney et al. 2004). The top thirty hits on each search engine were compared against the already identified list. Unique items were flagged for review. Some sites were rejected for analysis based on three factors:

Identified sites were analysed using McInerney's Website Quality Evaluation Tool (McInerney 2000b). A score for characteristics of each site was recorded. In addition to the eight different quality characteristics and the meta-tag score that make up the total quality score, the bias of the site, the amount of space on the site devoted to the topic of genetically engineered food, or commitment, and the dates that the Website was accessed and updated were noted. These dates were used to determine an update-access interval score for the site.
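The update-access interval is simply the number of days between the last update of a site and the date it was reviewed. A minimal sketch of that arithmetic follows; the function name and the use of `None` for sites with no discoverable update date are assumptions made for illustration.

```python
from datetime import date


def update_access_interval(last_updated, accessed):
    """Days elapsed between a site's last update and the review date.

    Returns None when no update date could be found for the site.
    """
    if last_updated is None:
        return None
    return (accessed - last_updated).days


# Hypothetical dates, for illustration only.
interval = update_access_interval(date(2003, 3, 1), date(2003, 3, 31))
```

A larger value means the site had gone longer without maintenance at the time of review.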

A single coder was used for the bulk of the project. However, a preliminary sample was first coded by both the primary coder and the author of the WQET, and inter-coder reliability was calculated. The results showed a Spearman's rho correlation of only .775 between the two coders. Since this was below the usual .80 standard, further training was done and the correlation rose to .985. This high reliability between the authors of this paper, one of whom was the developer of the Website Quality Evaluation Tool, implied that the best course of action would be for all sites to be analysed by one of the authors.
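Spearman's rho, the reliability measure used here, is the Pearson correlation computed on the ranks of the two coders' scores. The sketch below shows that calculation in plain Python, with tied scores given their average rank; the function names are illustrative, and the study itself reports using SPSS rather than code of this kind.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman_rho(scores_a, scores_b):
    """Spearman's rho between two coders' scores for the same sites."""
    return pearson(average_ranks(scores_a), average_ranks(scores_b))
```

Each list holds one coder's scores, in the same site order; a result at or above .80 would meet the threshold mentioned above.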

Answers to a series of questions about each Website were recorded on paper forms (see Appendix). A score for each of eight criteria was determined from these answers by a single coder. After a subtotal was computed by the Access software, the presence of meta-tags was noted. Five points were awarded for use of the meta-tag field for keywords that were used responsibly, i.e., were descriptive of the actual content on the site. The rationale for this weighting is based on the care and engagement shown by the Web staff when they assign meta-tags. In addition, the bias of the site, the amount of space on the site devoted to the topic of genetically engineered food, or commitment, and two dates (the date the Website was accessed and the last date the Website was updated) were noted. The results were entered into the Access database. At present, 100 Websites have been completely analysed and form the basis of this paper.

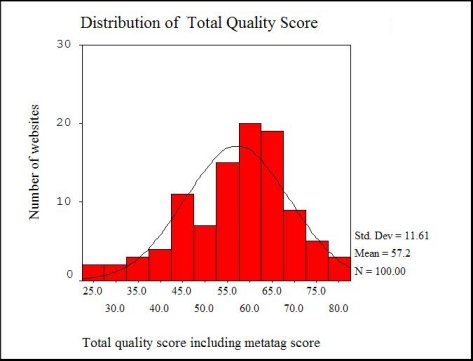

The primary dependent variable in the analysis is qualscor, the total quality score, as derived from the Website Quality Evaluation Tool. This score is made up of nine criteria: content, functionality, authority, currency, links, graphics, coverage, style, and use of meta-tags. The first eight are each rated on a scale of 1-7 based on the presence of various subcriteria; the ninth, use of meta-tags, adds five points. The first three criteria (content, functionality, and authority) are weighted by doubling their scores. In this case content refers to the intellectual content of the site, its accuracy and clarity; functionality means ease of use and navigation; and authority refers to the credibility of the Website author or organizational sponsor. The weighting of these factors reflects the importance that Website quality experts assign to the qualities (Beck 1997; Ciolek 1996; Dragulanescu 2002; Lynch & Horton 1999; Tillman 2003; Wehmeyer 1997, etc.). The highest possible score for a site is eighty-two with no necessary minimum. Figure 3 shows the number of Websites in the sample that received certain total quality scores.
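The maximum of eighty-two follows from the weighting: three doubled criteria at 7 points each give 42, five unweighted criteria give 35, and meta-tag use adds 5. The sketch below reproduces that arithmetic; the dictionary layout and function name are illustrative assumptions, since the study computed the score in a Microsoft Access database.

```python
# Weights as described in the text: the first three criteria are doubled.
WEIGHTS = {
    "content": 2,
    "functionality": 2,
    "authority": 2,
    "currency": 1,
    "links": 1,
    "graphics": 1,
    "coverage": 1,
    "style": 1,
}
META_TAG_POINTS = 5  # awarded when keyword meta-tags are used responsibly


def total_quality_score(ratings, uses_meta_tags):
    """Weighted sum of the eight criterion ratings plus the meta-tag points."""
    base = sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)
    return base + (META_TAG_POINTS if uses_meta_tags else 0)


# A site rated 7 on every criterion that also uses meta-tags.
perfect = {name: 7 for name in WEIGHTS}
best_possible = total_quality_score(perfect, uses_meta_tags=True)
```

Calling the function with `uses_meta_tags=False` gives the score before the meta-tag points are added.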

Three independent variables were assessed and recorded in the Access database. Bias was judged on a five-point scale from 'Very Favorable' (five points) towards genetically engineered foods to 'Very Opposed' to these foods (one point). To aid the analysis, an ordinal-level numerical variable was created from this scale and labelled biasnum. Commitment was defined as the percentage of the site directory sections that were devoted to this topic. The amount of commitment to the topic of genetically modified food was initially evaluated on a ten-point scale; for example, a site could be scored from 0-10% to 90-100% depending on what proportion of the Website's pages discussed genetically modified food. In order to make statistical analysis easier, the scale was transformed into a numerical variable called commit. However, very few sites were found to devote between 40 and 70% of their site space to the topic, so the variable was collapsed into three levels: low (0-29% commitment), medium (30-69% commitment), and high (70-100% commitment); the revised variable was labelled commit2.
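The collapse of commitment into three levels can be written as a simple threshold rule. The sketch below uses the cut-points given in the text; the function name and the string labels are illustrative, not the coding actually stored in the commit2 variable.

```python
def commitment_level(percent):
    """Map the share of a site devoted to the topic onto three levels."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    if percent < 30:
        return "low"      # 0-29% commitment
    if percent < 70:
        return "medium"   # 30-69% commitment
    return "high"         # 70-100% commitment
```

For example, a site with 15% of its sections on the topic would be coded low, and one with 85% would be coded high.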

Two variables were created from the gathered evaluation data: basequal, the quality score without the five points for the presence of meta-tags that were added after the evaluation; and update-access interval. Update-access interval can also be thought of as a measure of how recently the site has been maintained. Since meta-tag usage was an addition to the total quality score, it was desirable to create a new variable, metapres, that could be used in an independent samples t-test. Rather than an addition to the score, metapres was a dichotomous, nominal variable created by transforming the cases where five points were added into a yes or no for the use of meta-tags on the site.

Table 1 provides an overview of the Website characteristics that demonstrate Website quality and the number of sites that received specific evaluation ratings. Bias is fairly evenly distributed, although the Very Opposed group is the largest. Overall, more Websites demonstrated that the sponsors were either somewhat opposed or very opposed to genetically modified food (n=46) than were somewhat in favour or very favourably inclined towards it (n=38). Sixteen of the sites gave an even-handed treatment of genetically modified food, taking a position that was neither for nor against. Commitment results were bimodal, with only five sites showing a medium level (30-69% of the site) devoted to the topic. Approximately half of those examined showed a low commitment (n=46), and half had a strong or high commitment to the topic on their site (n=49). Meta-tag usage was fairly evenly split in the sample; about half the sites used meta-tags (n=54) and half did not (n=46), and their mean quality scores were quite close. The mean update-access interval of the sites was over one-half year, with a large standard deviation of almost one year. Ninety-four of the sites were examined for this characteristic because six sites had no dates associated with them. The mean total quality score was 57.2, which would classify the average site as 'Good' on the Website Quality Evaluation Tool rating scale.

| Characteristics | Number (N=100) |
|---|---|
| Bias | |
| Very opposed | 37 |
| Somewhat opposed | 9 |
| Neutral | 16 |
| Somewhat in favour | 18 |
| Very favourable | 20 |
| Commitment | |
| Low (0-29% of site) | 46 |
| Medium (30-69%) | 5 |
| High (70-100%) | 49 |
| Meta-tags | No. (Mean basequal score) |
| Meta-tags used | 54 (55.39) |
| Meta-tags not used | 46 (53.46) |
| Update-access interval (n=94) | Mean no. of days (Std.Dev.) |
| | 193 (309) |
| Total quality score (max. =82) (n=100) | Mean score (Std.Dev.) |
| | 57.2 (11.6) |
| Quality rating | No. of sites |
| Excellent site | 9 |
| Very good | 25 |
| Good | 17 |
| Fair | 17 |
| Problematic | 25 |

This study proposed a model that showed how resources, as measured by meta-tag use, commitment of Website, and update-access interval of the site combined with politics (as measured by bias) would predict the quality of a Website. Quality was defined by variables measured on the Website Quality Evaluation Tool. Using meta-tags for keywords describing a site is one of the indicators of the level of resources in an organization because it implies that the organization has access to a Webmaster with some sophistication and with an interest in accurate information retrieval. It also might indicate that the organization cares about getting its message out to Internet users.

Although meta-tags have lost their ascendancy as a source of evidence for search engine relevancy decisions (Sullivan 2002), they are still widely used and may be an indicator of the quality of the Website. The first hypothesis, then, was that an independent samples t-test would find a significant difference in the mean base quality score (basequal) between sites that used meta-tags and those that did not. The basequal variable was designed specifically to be used in this analysis to capture the other criteria scores without including the five-point reward for using meta-tags. In addition, the metapres variable was used because it was a simple, nominal variable. The results show that, in fact, the means of the two groups of sites, those that use meta-tags and those that do not, are almost equal: 55.39 and 53.46 (see Table 1). The equal variances were confirmed by Levene's test, which was shown to have a significant F=.643 at p <.001. With t=.865, p >.05, there is no statistically significant difference between the Websites that used meta-tags and those that did not, and, therefore, the null hypothesis cannot be rejected. Hypothesis 1, that the use of meta-tags is a good indicator of overall quality of a Website, is not supported.

A second measure of resource use by an organization is its level of commitment, which in this study was operationalized as the proportion of the Website devoted to the topic of genetically engineered food. It was found that Total Quality Score did not vary with levels of commitment as had been predicted. An analysis of variance (ANOVA) showed that the three commitment levels (commit2) had mean quality scores within two points of one another. The results of the analysis showed no support (F(2, 97)=1.451, p >.05) for H2, that such a difference would be found between sites that devote quite a bit of their space to genetically modified food and those that have a small section of the site on the topic. The bimodal split of either high or low commitment levels may be a confounding factor in this analysis attributable to the purposive sampling technique that was used. Since the Websites analysed had by definition substantive amounts of information about biotechnology food, there was a high probability that these sites would have greater commitment to this topic. It might also be observed that, as in other contexts, quantity does not necessarily equal quality.
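For readers unfamiliar with the test, a one-way ANOVA compares the variation between group means with the variation inside the groups. With three commitment levels and one hundred sites, the degrees of freedom are 3 - 1 = 2 and 100 - 3 = 97. The sketch below computes the F statistic in plain Python; the function name is illustrative, any scores passed to it here are hypothetical, and the study's own analysis was run in SPSS.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

The p-value is then read from the F distribution with those degrees of freedom, which a statistics package supplies.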

Bias as a measure of the politics involved in this topic was also not a sufficient predictor of the quality of a Website. Although bias was widely distributed among the Websites (see Table 1 for details), bias did not correlate with the level of quality a Website exhibited. A simple regression analysis (r =-.111, p >.05) provided evidence that bias had no effect on the total quality score. The null hypothesis for H3 (that strong bias affects quality) failed to be rejected and no support was given to bias as a quick quality measure as indicated on the model.

The final hypothesis looked at the impact of two of the resource variables (commitment and update-access interval of the site) and bias on the total quality score using a multiple regression analysis. Although bias had been rejected as a single predictor of Website quality, a multiple regression might reveal interactions among the variables that would lend support to the total model. This was not the case since only site update-access interval had a significant relationship to the score (beta=-.465, p≤.001 with an adjusted r-squared=0.206). Neither bias nor commitment correlated with the total quality score. H4, that there was a relationship between the resource indicators, commitment and update-access interval and the mean quality score, was partially supported with this evidence although the null hypothesis cannot be completely rejected.

A separate linear regression analysis confirmed the strength of the correlation between update-access interval and quality, and its direction (r= -.474, p <.001 with r-squared=.216). The negative direction of this correlation indicates that a longer interval predicts a lower score on the Website Quality Evaluation Tool. In this new single factor model, update-access interval of the site was found to explain 21.6% of the variance. In other words, the greater the amount of time between when a Website is updated and when it is viewed by the user, the lower the site's quality; infrequent updating does indicate lesser quality. The remaining share of the variance shows that a large amount of the variation in Website quality is still unexplained.

The search for quality Websites is an endeavour that occupies many different fields (Seidman 2003; Wikgren 2001). The Website Quality Evaluation Tool is useful for evaluating the credibility of Websites; however, it can be time consuming, taking approximately thirty minutes to evaluate each site. Consequently, the tool may be more useful to information professionals than to the public at large. Certainly, for sites that will be linked on an organizational portal or a library's Webography, the investment of time in using the tool is worthwhile. Consumers might benefit indirectly if they use library or research centre sites that have been examined and vetted using the criteria in the tool.

It would be valuable to be able to use some meta-characteristic rather than the entire WQET, such as the update-access interval of the site, that might help differentiate Websites that do stand up to the quality test. This study looked for such a distinguishing feature(s). It examined political position as measured by bias and resource use as indicated by space, commitment, meta-tag use, and update-access interval of the site as possible discriminators.

It was determined that bias as a measure of political stance was not a useful discriminator. There was no significant correlation between bias and the WQET score for the sample population. High quality sites were produced by both proponents and opponents of genetically modified food.

The second possible discriminator, resources, was actually a set of factors. It was thought that the level of resources would be indicated by commitment, or the amount of the Website that was devoted to the topic of interest, the use of meta-tags, and the update-access interval. It was thought that correlations would exist that would follow the empirical model provided in Figure 2. However, strong statistical evidence for the model was not found in the sample of Websites that was analysed. The group of Websites that used meta-tags was no higher in quality than the group that did not; the basequal scores were almost identical. In addition, commitment was not significantly correlated with the total quality score. Those sites that devoted many pages to the topic of genetically modified food fared equally well on the WQET as those that dedicated only a few to the topic.

The update-access interval was shown to account for over 20% of the variance in the quality score. This may indicate that the amount of updating that a site undergoes overwhelms all other considerations of resources that an organization commits to an endeavour such as a Web presence on the Internet. However, this result may be complicated by the number of sites with no easily determined update date and by those that use automatic dating techniques. Many Websites now pull in the date automatically from their server host. This serves to obscure the length of time since it was last updated. It is especially crucial in a science domain such as genetically modified food to know where current information exists and where outdated research may be. Identifying sites with older information could be critically important for research, for education, for library purposes, and for general learning by interested parties. It may be useful to identify and then segregate those sites that do use an automatic dater by looking carefully at the source code for javascript-supplied date routines. After differentiating the automatically dated sites from the sites where dates were manually applied, the analysis could then be revised.
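Screening source code for script-supplied dates could be partly automated. The sketch below is a rough heuristic only: it flags pages whose inline scripts refer to `document.lastModified` (a standard browser property) or construct a `Date` object. The pattern list and the function name are assumptions chosen for illustration, and a script may create a `Date` for reasons unrelated to the displayed update date, so flagged pages would still need manual inspection.

```python
import re

# Illustrative patterns for script-generated dates; not an exhaustive list.
AUTO_DATE_PATTERNS = [
    re.compile(r"document\.lastModified"),
    re.compile(r"new\s+Date\s*\("),
]

SCRIPT_BODY = re.compile(r"<script\b[^>]*>(.*?)</script>", re.IGNORECASE | re.DOTALL)


def uses_script_date(html_source):
    """Return True if any inline script matches a date-routine pattern."""
    script_bodies = SCRIPT_BODY.findall(html_source)
    return any(
        pattern.search(body)
        for body in script_bodies
        for pattern in AUTO_DATE_PATTERNS
    )
```

Pages that return `True` would be set aside as candidates for automatic dating before the interval analysis is repeated.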

As more and more people have access to the Internet and use Websites for gathering information, it becomes necessary to identify high quality and trustworthy sites. The WQET is a useful instrument for the information professional to use in accomplishing this important task. For everyday information seekers, however, it examines too many factors. Update-access interval of the site appears to be one valid shorthand criterion for assessing Website quality despite the many problems with determining the date a Website has been updated. Still, a large proportion of the variance in the scores for Websites remains unexplained.

Information on the Internet is commonly known to differ dramatically in quality: some Websites are constructed by students who are compelled to do so for a class assignment, others by ideologues with an axe to grind, and others by reputable organizations with a mission to inform or advocate. It is therefore useful to be able to assess the quality of the information, functionality, and the architectural details of such sites to find solid information resources in a time-effective way. Like traditional efforts to establish authority in research reports and publishing, knowing how to assess Websites to assist information seekers in particular context areas is an emerging concern for library and information professionals. The Website Quality Evaluation Tool was built on aggregate research on information and Web quality, so it provides one step towards understanding Website evaluation. This paper explained how the WQET was used in the context of genetically modified agriculture and food. Further studies are planned to examine content factors more closely in an effort to determine more definitive quality measures and to look at how users themselves find information on genetically modified foods on the Web and how they incorporate what they find into their way of life.

The authors gratefully acknowledge the assistance offered by Dawn Filan, Kelly Grimmett, and Chris Scott who helped design and implement the Access Database used to record quality factors and total quality scores. The authors are also grateful to the researchers at Rutgers University Food Policy Institute, in particular, Bill Hallman and Helen Aquino for their help on the project.

Research described here was supported by a grant provided to the Rutgers Food Policy Institute by the U.S. Department of Agriculture (USDA), under the Initiative for the Future of Agricultural Food Systems (IFAFS) grant #2002-52100-11203 Evaluating Consumer Acceptance of Food Biotechnology in the United States, Dr. William K. Hallman, Principal Investigator. The opinions expressed in the article are those of the authors and do not necessarily reflect official positions or policies of the USDA, The Food Policy Institute, or Rutgers University.

This tool is for Web developers, Web teams, or information professionals who are interested in rating Web sites for trustworthiness and quality. Evaluate the site according to each quality criterion. Rate each category, using a scale of 1-7 (see below), and then give the Web site an overall score based on the calculations and total.

Web site URL:___________________________________________________________

Web site Title:___________________________________________________________

Author:_________________________________________________________________

Sponsor:________________________________________________________________

Rating Scale: 1 2 Poor 3 4 Average 5 6 Good 7 Excellent

Score:

[1-7] Are the grammar and spelling correct?

Are statistics and research findings cited and documented?

Are biases clearly stated?

Is the audience or objective of the site clearly identified?

Is language appropriate for the subject matter?

Is there a theme, or are different topics tied together conceptually?

Is this a personal Web page or an institutional one?

Is there evidence that the information is accurate?

[1-7] How easy is it to navigate through the site?

Is there a menu bar that makes sense?

Is there a site map, index, or directory?

Is information presented in a clear and uncluttered manner?

Does the site load quickly or are there delays?

Is there an understandable conceptual plan?

[1-7] Is the material up to date?

What is the lasting power potential of this site?

Are pages dated to indicate date of creation or revision date?

Have there been frequent updates indicating that there is ongoing site maintenance?

[1-7] Do internal links lead user to a logical outcome?

Have external links been carefully selected?

Are connections live and reliable?

[1-7] Do the graphics enhance the information and understanding of the site material?

Are there gratuitous animated items that do little to enhance the information?

Are the colors pleasing and harmonious?

Are the fonts readable and consistent?

Is the design scheme consistent throughout the site?

Are frames annoying or are they functional (e.g., can the user print a frame and escape from a frame)?

[1-7] Is the author clearly identified with background, resume, CV, or biography?

Is contact information, including postal address, phone, and e-mail available?

Is e-mail address linked for easy communication?

What is the domain type of the sponsor?

Is the sponsor trying to sell something or advocate a cause? (Selling and advocating are not necessarily negative characteristics, but either activity should be clearly stated.)

[1-7] Is there a clearly stated goal for the site's information scope?

Does the site's information meet the clearly stated objectives of the site?

Is the information comprehensive or are there large gaps in coverage?

[1-7] Does the site demonstrate a consistent, clear style?

Does the style enhance the content or detract from it?

Is the style appropriate for the content?

Does the style demonstrate creativity or innovation?

______ Award 5 extra points if site uses meta-tags responsibly for subject cataloging

A (multiply x 2) = ______ A _______ E

B (multiply x 2) = ______ B _______ F

C (multiply x 2) = ______ C _______ G

_______ D _______ H

______Total

Coding results:

71 - 82 = Excellent Site, trust it

64 - 70 = Very Good, bookmark it

57 - 63 = Good, but proceed with caution

50 - 56 = May offer something, but don't trust information without investigation

Below 50 = Use the site information with a grain of salt
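The scoring arithmetic on the form can be sketched in a few lines of Python. This is only an illustrative sketch, not part of the original tool: the function and constant names are hypothetical, and it assumes that the letters A to H stand for the eight rated categories in the order they appear on the form, each rated from 1 to 7. The weights (A, B, and C doubled), the 5-point meta-tag bonus, and the score bands are taken from the form itself.

```python
# Illustrative sketch of the WQET scoring arithmetic (names are hypothetical).
# Weights, bonus, and bands come from the form; the mapping of letters A-H to
# the eight rated categories, in order, is an assumption.

WEIGHTS = {"A": 2, "B": 2, "C": 2, "D": 1, "E": 1, "F": 1, "G": 1, "H": 1}
META_TAG_BONUS = 5  # awarded if the site uses meta-tags responsibly

# (lower bound, interpretation), checked from the highest band down
BANDS = [
    (71, "Excellent Site, trust it"),
    (64, "Very Good, bookmark it"),
    (57, "Good, but proceed with caution"),
    (50, "May offer something, but don't trust information without investigation"),
]
LOWEST_BAND = "Use the site information with a grain of salt"


def wqet_total(ratings, uses_meta_tags=False):
    """Return the total score for a dict of category ratings keyed 'A'..'H'."""
    if set(ratings) != set(WEIGHTS):
        raise ValueError("ratings must contain exactly the categories A to H")
    for category, rating in ratings.items():
        if not 1 <= rating <= 7:
            raise ValueError(f"rating for {category} must be between 1 and 7")
    total = sum(WEIGHTS[category] * rating for category, rating in ratings.items())
    return total + (META_TAG_BONUS if uses_meta_tags else 0)


def wqet_band(total):
    """Map a total score to the interpretation given under 'Coding results'."""
    for lower_bound, label in BANDS:
        if total >= lower_bound:
            return label
    return LOWEST_BAND


if __name__ == "__main__":
    example = {"A": 6, "B": 5, "C": 7, "D": 6, "E": 4, "F": 6, "G": 5, "H": 5}
    score = wqet_total(example, uses_meta_tags=True)
    print(score, "=", wqet_band(score))
```

Under these assumptions the maximum total is 3 × 14 + 5 × 7 + 5 = 82, which agrees with the upper limit of the top band on the form.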

© 2000 Claire McInerney cmcinerney@aol.com


© the authors, 2005. Last updated: 4 December, 2004