Information Research

Vol. 28 No. 3 2023

Comparison of accessibility and usability of digital libraries on mobile platforms: blind and visually impaired users' assessment

DOI: https://doi.org/10.47989/ir283337

Abstract

Introduction. This study compares thirty blind and visually impaired users' assessment of accessibility and usability of the two mobile platforms (mobile app and mobile website) of a digital library.

Method. Triangulation of data collection was applied, including think-aloud protocols, transaction logs, post-platform questionnaires, post-platform interviews, and post-search interviews.

Analysis. Two steps of analysis were used. First, quantitative analysis was applied to compare assessments of participants towards two mobile platforms' accessibility and usability. Second, qualitative data were analysed to identify types of design factors.

Results. Mobile app performs significantly better in all accessibility and usability variables except accessing information/objects. Most importantly, nine types of design factors are revealed in relation to blind and visually impaired users' assessment of accessibility and usability for the two mobile platforms. Furthermore, the design problems of the mobile website are associated with a responsive design that adjusts the digital library interface to a mobile device, the complexity of digital library structure and formats, and a sight-centred design that excludes blind and visually impaired users' unique information-seeking behaviours.

Conclusion. Mobile platforms of digital libraries, especially mobile websites, need to improve their designs. Design implications for mobile websites are further discussed.

Introduction

Accessibility and usability are critical research topics in developing information retrieval systems for all types of users (Guerreiro et al., 2019; Kane, 2007; Leuthold et al., 2008; Zhang et al., 2017). Blind and visually impaired users are one of the main user groups who experience accessibility and usability problems using information retrieval systems because of the sight-centred design (Babu, 2013; Khan and Khusro, 2019; Xie et al., 2020). Consequently, most research on blind and visually impaired users has focused on their problems with inaccessible information retrieval systems (Berget and MacFarlane, 2020; Craven, 2003; Xie et al., 2015; Xie, Wang, et al., 2021). Moreover, the number of people with disabilities, of which the majority are blind and visually impaired users, utilizing mobile devices via screen readers has increased dramatically from 12% in 2009 to 90% in 2021 (WebAIM, 2021). Unfortunately, they face more difficulties on mobile devices than on desktop computers.

Previous research on mobile devices found that blind and visually impaired users experience some of the same problems as they do in the desktop environment, including difficulty accessing images or multimedia content without alt text, difficulty locating information, difficulty understanding labels, and inadequate feedback (Carvalho et al., 2018; Oh et al., 2021; Wentz and Tressler, 2017). Additionally, they also face unique problems due to the mobile interfaces of information retrieval systems (Khan and Khusro, 2019). For example, they encounter difficulty adapting to new input methods in the mobile environment and difficulty using features that require applying gestures on a smartphone's touchscreen (Carvalho et al., 2018; Rodrigues et al., 2020).

Researchers have investigated the issues associated with designing blind and visually impaired-friendly mobile platforms: mobile app and mobile website. A mobile app provides web content without browsers, is developed for a specific operating system, and requires a user to download the specific app on a mobile device. In contrast, a mobile website offers web content via mobile browsers, using either responsive design that automatically supports multiple screen resolutions or mobile-dedicated design that only supports a specific screen resolution (Guerreiro et al., 2019; Marcotte, 2014; Zhang et al., 2017). Previous studies have examined the differences between accessibility and usability for the two platforms of online systems focusing on sighted users; their results showed that the mobile app had better performance and was preferred by sighted users (Jobe, 2013; Morrison et al., 2018; Othman, 2021).

Few studies have concentrated on mobile platforms of digital libraries. It is critical to investigate blind and visually impaired users' accessibility and usability issues when using mobile platforms of digital libraries. Unfortunately, existing literature has not adequately examined the current digital libraries' mobile interfaces for blind and visually impaired users. More importantly, there is a lack of research examining these users' assessments regarding accessibility and usability variables, comparing mobile websites and mobile apps, and identifying associated design factors in the digital library environment. Therefore, this study aims to test whether blind and visually impaired users consider a digital library's mobile app and mobile web interfaces to be equally accessible and usable and to identify related design factors with the platforms.

In this paper, key terms and concepts are defined as follows: according to the World Wide Web Consortium (W3C), ‘Web accessibility means that websites, tools, and technologies are designed and developed so that people with disabilities can use them’ (2022, Introduction to Web Accessibility section). Usability is defined as ‘a quality attribute that assesses how easy user interfaces are to use’ (Nielsen, 2012, Usability 101: Introduction to Usability section). Blind and visually impaired users refer to those who do not have the necessary sight to see information on a screen and therefore rely on screen readers to interact with computers and mobile devices non-visually when using information retrieval systems (Xie et al., 2020). A digital library is an interactive information retrieval system with an online collection of digitized or born-digital items, supporting diverse users to find desired information (Xie and Matusiak, 2016).

Literature review

Accessibility and associated design factors in the mobile environment

Research on accessibility has focused on four types of variables: accessing a system, accessing a navigation component, accessing a feature, and accessing information/objects. Accessing a system is the extent to which a system can be accessed via an app or a browser on mobile devices (Jobe, 2013; Othman, 2021). Most studies on mobile devices have concentrated on enhancing the accessibility of a system or making it compatible with assistive technology (Khan et al., 2018; Rodrigues et al., 2020). On desktops, sighted and blind and visually impaired users generally access a system via browsers. On mobile devices, users can access a system via a mobile app or a mobile website. Othman (2021) surveyed users’ preferences between the two platforms. In the study, some participants preferred the mobile app because they could access a site with only one click, but others preferred the mobile website because they did not want to install an app. However, no study has investigated how blind and visually impaired users access a digital library through both mobile apps and mobile websites.

Accessing a navigation component is the extent to which a system’s main elements (such as shortcuts, headings, landmarks, etc.) can be accessed, enabling a user to move among them on mobile devices (Rodrigues et al., 2017; Zhang et al., 2017). Blind and visually impaired users interact with mobile devices via screen reader software which reads a webpage linearly and sequentially (Ross et al., 2017). Navigation in the mobile environment relies on a touchscreen; blind and visually impaired users touch and swipe on the screen using pre-loaded screen readers of mobile devices instead of keyboard commands in the desktop environment (Rodrigues et al., 2017). Mobile apps can hinder access for these users due to inconsistent navigation layout (Rodrigues et al., 2017). Likewise, some navigational structures of responsive design, such as narrower and deeper structure, can create additional problems for them (Hochheiser and Lazar, 2010). Specifically, Nogueira et al. (2019) revealed these structures caused more problems for blind and visually impaired users than shallower and wider structures because they needed to scroll down and click more to access specific content and they often got lost along the way. Simultaneously, mobile apps may omit some functions or have a different layout than desktop websites, and users may get confused by the differences. Rodrigues et al. (2017) also found that inconsistent page design and a greater variety of required gestures put a heavy burden on blind and visually impaired users. In addition, Ross et al. (2017) discovered that mobile interface design elements, such as floating action buttons in smartphones, triggered unexpected interruptions by screen readers, causing users to lose their focus.

Accessing a feature is the extent to which features of a system can be accessed via a mobile app or a mobile website (Guerreiro et al., 2019; Vigo and Harper, 2014). Mi et al. (2014) pointed out that blind and visually impaired users experience significant difficulty knowing where and how they can type text on mobile devices. Unlike the desktop environment, the lack of physical buttons on touchscreen devices and problems with screen readers recognizing some buttons could cause accessibility problems (Carvalho et al., 2018; Rodrigues et al., 2020). Moreover, difficulty inferring the existence or unawareness of features’ functionality could lead to accessibility issues due to inadequate feedback on controls, forms, and features (Abraham et al., 2021). Carvalho et al. (2018) pointed out that blind and visually impaired users did not know the specific features of a system’s mobile website because the mobile website did not provide any feedback when they clicked a feature button. In addition, improper labelling or missing labels prevented them from accessing specific features on mobile platforms (Wentz and Tressler, 2017).

Accessing information/objects is the extent to which information/objects of a system can be accessed via a mobile app or a mobile website (Jobe, 2013; Rodrigues et al., 2020). It is a crucial attribute of accessibility because users cannot make relevance judgments of these items without accessing them. Content providers should try to increase the accessibility levels of content on mobile devices. The page layouts of mobile websites and mobile apps adjust their resolution because of the small screen size in the mobile environment, but mobile platforms often keep a desktop version of web design elements or contents. For example, Guerreiro et al. (2019) noted that non-accessible web design elements from desktop platforms could still exist on mobile platforms due to responsive design. They also stressed that keeping a desktop version layout in the mobile interface sometimes caused excessive scrolling to access a relevant object. In addition, visual items, such as images and PDFs, hindered blind and visually impaired users from accessing information/objects when no alt text was provided (Menzi-Cetin et al., 2017). Alajarmeh (2022) and Carvalho et al. (2018) found that these users had difficulty reading items on both platforms because images lacked alt text.

Usability and associated design factors in the mobile environment

While there are several usability criteria used to evaluate digital libraries, this study focuses on ease of learning, ease of use, efficiency, and satisfaction, which are the most frequently applied criteria in digital library usability and mobile usability studies (Firozjah et al., 2019; Jeng, 2011; Pant, 2015; Xie et al., 2018). Ease of learning is the degree of easiness a user perceives when learning to use a system (Weichbroth, 2020; Wilson, 2009). Researchers have investigated system learning in the mobile environment, and they emphasized that shortening the learning process in the mobile environment was one of the critical issues for blind and visually impaired users’ usability enhancement (Grussenmeyer and Folmer, 2017; Rodrigues et al., 2020). They found that these users had a longer learning curve in interacting with a smartphone because they had to learn to use the input method. Specifically, they experienced problems when editing their previously entered text in an edit box because they did not know how to check spelling errors and modify text without deleting all search terms (Grussenmeyer and Folmer, 2017). Moreover, participants reported that a new app’s unfamiliar layout and structure caused them to frequently lose their location (Rodrigues et al., 2020).

Ease of use is the degree of easiness a user perceives when using a system to achieve their search goals (Jeng, 2011; Sbaffi and Zhao, 2020; Weichbroth, 2020). Ease of use has been considered as one of the main attributes in evaluating the usability of a digital library (Omotayo and Haliru, 2020; Xu and Du, 2018). Some researchers examined the types of design factors affecting ease of use in the mobile environment. In particular, Khan and Khusro (2019) discovered that a blind-friendly mobile app email interface, including the ordering of layout and menus, reduced the cognitive overload of blind and visually impaired users and increased ease of use. Also, Zhang et al. (2017) tested different designs of mobile websites for both blind and visually impaired and sighted users; the results showed that for both groups, hierarchical linear website design had a more positive impact on participants’ performance than desktop-oriented website design.

Efficiency is the degree of effort a user perceives when using a system to achieve their search goals (Ahmad et al., 2021; Weichbroth, 2020). Efficiency issues can be caused by user types, user preferences, and system design problems (Interaction Design Foundation, 2020). It can also be affected by capacity, layout, or performance of a system to present relevant results that meet users’ needs (Alonso-Ríos et al., 2009; Zhang et al., 2017). On mobile platforms, a mobile app is considered to perform better for both blind and visually impaired and sighted users because it is customized for a specific mobile operating system and presents a simplified interface (Guerreiro et al., 2019; Jobe, 2013). In addition, researchers found that blind and visually impaired users have difficulty using a keyboard on mobile devices due to the touchscreen and varying screen sizes (Zhang et al., 2017). Therefore, their speed of interaction with a smartphone is slower than sighted users, and eventually, they spend more time learning and completing a task (Grussenmeyer and Folmer, 2017; Rodrigues et al., 2020).

Satisfaction is the degree of contentment a user perceives with a system's overall interface design, such as structure and layout (Belkin and Vickery, 1985; Jeng, 2011; Weichbroth, 2020). Satisfaction is one of the top usability variables for evaluating users’ overall perception of information retrieval systems (Sbaffi and Zhao, 2020; Xie et al., 2018; Xu and Du, 2018). In the desktop environment, Aqle et al. (2020) created a search interface for blind and visually impaired users with enhanced accessibility and usability functions and compared it with the Google interface. The results demonstrated that this interface reduced search time and increased user satisfaction compared with Google. In addition, Qureshi and Wong (2020) compared blind users’ satisfaction with adaptive and non-adaptive features of a mobile app and found that adaptive features had a higher satisfaction score than non-adaptive features of the app.

Comparison between mobile platforms

Some researchers have investigated the differences between accessibility and usability for the two platforms of online systems for sighted users. Othman (2021) compared users’ preferences between mobile apps and mobile websites on a smartphone. The results showed that the majority of the participants preferred using mobile apps because those were faster and easier to use than mobile websites due to the tailored screen. However, some participants preferred mobile websites because those used the same familiar layout as the desktop version. Tupikovskaja-Omovie et al. (2015) studied users’ eye-tracking behaviours on a mobile website and a mobile app of a fashion shopping website. They discovered that the mobile app did not guarantee higher satisfaction than the mobile website due to the small size of pictures and fonts in search results and its different layout compared with the desktop version. On the contrary, Jobe (2013) concluded that mobile apps performed better than mobile websites because they were customized for a specific mobile operating system. Similarly, Morrison et al. (2018) emphasized that mobile app users visited more frequently and used online health systems more efficiently than mobile website users due to design differences, such as navigation, length, and volume of content.

Nevertheless, few studies have compared the two mobile platforms for blind and visually impaired users. Carvalho et al. (2018) investigated several problems encountered by blind and visually impaired and sighted users in mobile websites and mobile apps of two governmental services and two e-commerce services. The results showed that blind and visually impaired users experienced more issues than sighted users; mobile apps had fewer usability and accessibility problems than mobile websites in both user groups. Alajarmeh (2022) recently identified accessibility problems by examining blind and visually impaired users’ utilization of mobile apps and mobile websites for commercial sites. Focusing on mobile apps, the results showed that both platforms shared the same design problems, such as conflicting and complex gestures, labelling problems, dynamic content, and visual media. There were also design problems unique to mobile apps, such as inoperative controls or inappropriate use of controls and features, accessibility settings, and missing search options.

Research questions and associated hypotheses

Although previous studies have investigated online systems’ accessibility and usability issues in users’ interaction with mobile websites and mobile apps, no studies were found to have compared a mobile website and a mobile app of a digital library used by blind and visually impaired users. Therefore, this study is the first to concentrate on blind and visually impaired users’ experience and perceptions of mobile apps and mobile websites in the digital library environment. This paper addresses the following research questions and associated null hypotheses:

Do blind and visually impaired users perceive the mobile app and the mobile website of a digital library to be equally accessible? What are the design factors associated with users’ perceptions?

H01: There is no significant difference in perceived levels of accessing a digital library (H01:1), accessing a navigation component (H01:2), accessing a feature (H01:3), and accessing information/objects (H01:4) between the mobile app and the mobile website of a digital library.

Do blind and visually impaired users perceive the mobile app and the mobile website of a digital library to be equally usable? What are the design factors associated with users’ perceptions?

H02: There is no significant difference in perceived levels of ease of learning (H02:1), ease of use (H02:2), efficiency (H02:3), and satisfaction (H02:4) between the mobile app and the mobile website of a digital library.

Methods

Sampling

Thirty blind and visually impaired participants were recruited throughout the United States by distributing the recruitment flyer to the National Federation of the Blind (NFB) listserv. Prior to joining the study, potential participants received a brief pre-screening questionnaire and an informed consent form. Participants needed to meet the following requirements: (1) using iPhone 6S (or newer) with iOS 11 (or later); (2) using iPhone non-visually by listening to VoiceOver; (3) having at least three years of experience searching for information on the Internet via iPhone; (4) feeling comfortable verbalizing thoughts in English; and (5) being willing to install the Microsoft Teams software and the Library of Congress Digital Collections app. iPhone users were chosen because iOS devices (Apple iPhone, iPad, or iPod) are the most widely used mobile devices (71.9%) by people with disabilities (WebAIM, 2021).

Table 1 presents the demographic characteristics of the participants representing diverse backgrounds. A 7-point Likert scale represents the frequency of using the Internet via iPhone, with 1 indicating never use and 7 use all the time. A 7-point Likert scale was used to rate their familiarity with VoiceOver on iPhone, with 1 indicating not familiar at all and 7 extremely familiar. Participants used their iPhones to access the Internet very often (M = 6.5) and were quite familiar (M = 6.6) with VoiceOver - the screen reader used on iPhone. On average, participants had used iPhone devices to search for information for 8.8 years.

| Category | Sub-category | Number (percentage) |
|---|---|---|
| Age | 18-29 | 5 (17%) |
| | 30-39 | 9 (30%) |
| | 40-49 | 8 (27%) |
| | 50-59 | 4 (13%) |
| | >59 | 4 (13%) |
| Sex | Male | 15 (50%) |
| | Female | 15 (50%) |
| Information search skills | Beginner | 0 (0%) |
| | Intermediate | 3 (10%) |
| | Advanced | 19 (63%) |
| | Expert | 8 (27%) |
| Vision condition | Blind | 24 (80%) |
| | Visually impaired | 6 (20%) |

Table 1: Demographic information of participants (N = 30)

Mobile platforms and tasks for participants

The Library of Congress Digital Collections was selected for this study since it is one of the few digital libraries providing both a mobile website (https://www.loc.gov/collections/) and a mobile app (https://apps.apple.com/us/app/loc-collections/id1446790792). As a national-level digital library, the Library of Congress Digital Collections consists of multiple digital collections covering a wide range of topics of interest to blind and visually impaired users. Participants were asked to use Safari to access the mobile website because it is the mobile browser most commonly used by blind and visually impaired users (WebAIM, 2021).

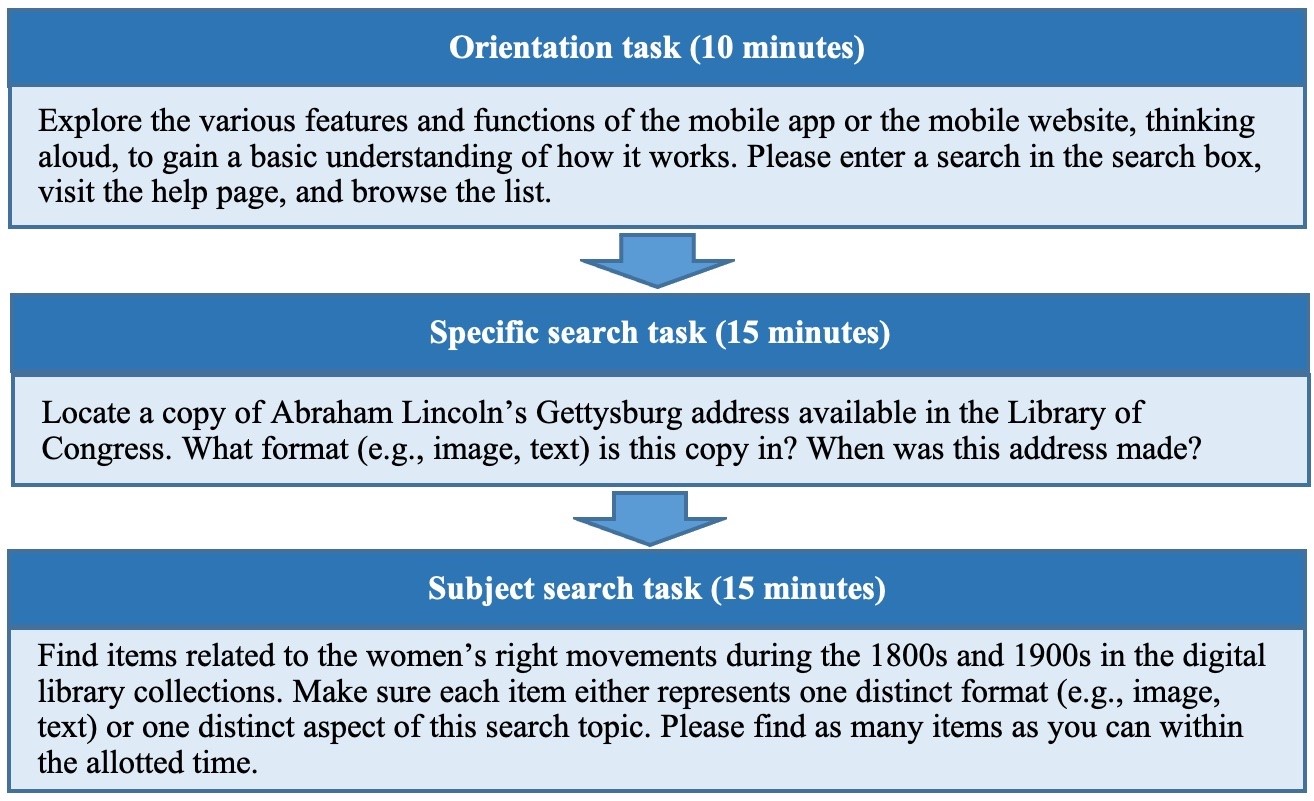

Thirty participants were recruited to use both the mobile app and the mobile website. Because the study used a within-subjects design, the order of platforms was counterbalanced following the Latin Square design: the first participant (P1) was assigned to use the mobile app first, and the second participant (P2) was assigned to the mobile website first. The remaining participants were assigned a starting platform following the same scheme. For each platform, participants performed one orientation task, one specific search task, and one subject search task (Figure 1). After successfully installing the required app, each participant spent around 3.5 hours completing all the study activities. Microsoft Teams was employed to collect data. Required materials and forms about the study were submitted to the Institutional Review Board of a state university, and ethical approval was obtained. Each participant received a $100 electronic gift card as a token of appreciation for participating in the study.
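The counterbalancing described above can be sketched as follows. This is only an illustration: the text states the starting platform for P1 and P2, and the sketch assumes that the remaining participants simply alternated by participant number. The function name `starting_order` is hypothetical.

```python
# Minimal sketch of counterbalanced platform order for a two-condition,
# within-subjects design. ASSUMPTION: odd-numbered participants start with
# the mobile app and even-numbered participants start with the mobile
# website; the paper confirms this only for P1 and P2.

PLATFORMS = ("mobile app", "mobile website")


def starting_order(participant_number: int) -> tuple[str, str]:
    """Return the (first, second) platform for a participant numbered from 1."""
    if participant_number < 1:
        raise ValueError("participant numbers start at 1")
    if participant_number % 2 == 1:
        return PLATFORMS
    return PLATFORMS[::-1]


if __name__ == "__main__":
    for p in range(1, 5):
        first, second = starting_order(p)
        print(f"P{p}: {first} -> {second}")
```

Under this assumption, half of the thirty participants would begin with each platform, which balances possible order effects across the two conditions.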

Data collection

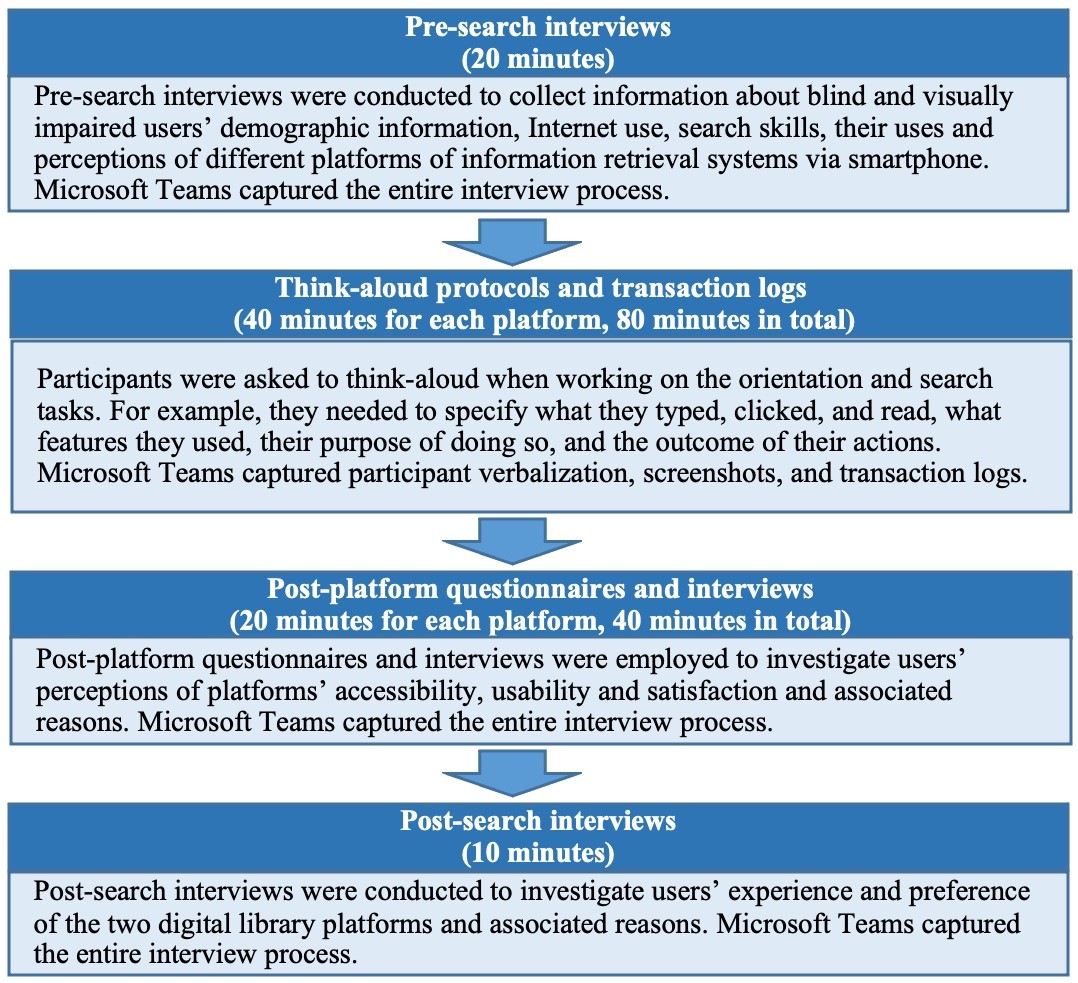

Multiple data collection methods (Figure 2) were used, including pre-search interviews, think-aloud protocols and transaction logs, post-platform questionnaires, post-platform interviews, and post-search interviews. Think-aloud protocols and transaction logs were applied to capture participants’ thoughts and movements when working on the tasks (Kinley et al., 2014; Xie, Babu, et al., 2021). All recorded audio and video files were later transcribed verbatim for further analysis. For each platform, a questionnaire was employed to help the researchers measure participants’ perceptions of each platform’s accessibility and usability as well as their satisfaction with the platform, using a 7-point Likert scale with 1 indicating not at all and 7 extremely. For example, participants rated their perceptions in response to questions such as ‘On a scale of 1 to 7, how would you rate the ease of access to the features of the DL when using the mobile app?’ Different types of interviews, including post-platform interviews and post-search interviews, allowed the researchers to record participants’ responses related to the research questions. During post-platform interviews, participants provided the reasons for their ratings of accessibility and usability of each platform as well as suggestions for how to enhance the digital library on mobile platforms. For example, participants were asked, ‘What made the mobile site of digital library easy or difficult to learn?’ and ‘What made the digital library mobile app easy or difficult to use?’ In the post-search interviews, participants provided their assessment regarding the comparison of the two platforms of the digital library and their final thoughts.

A pilot study was conducted to test the data collection instruments and procedures.

Figure 1: Types of tasks

Figure 2: Data collection methods and procedure

Data analysis

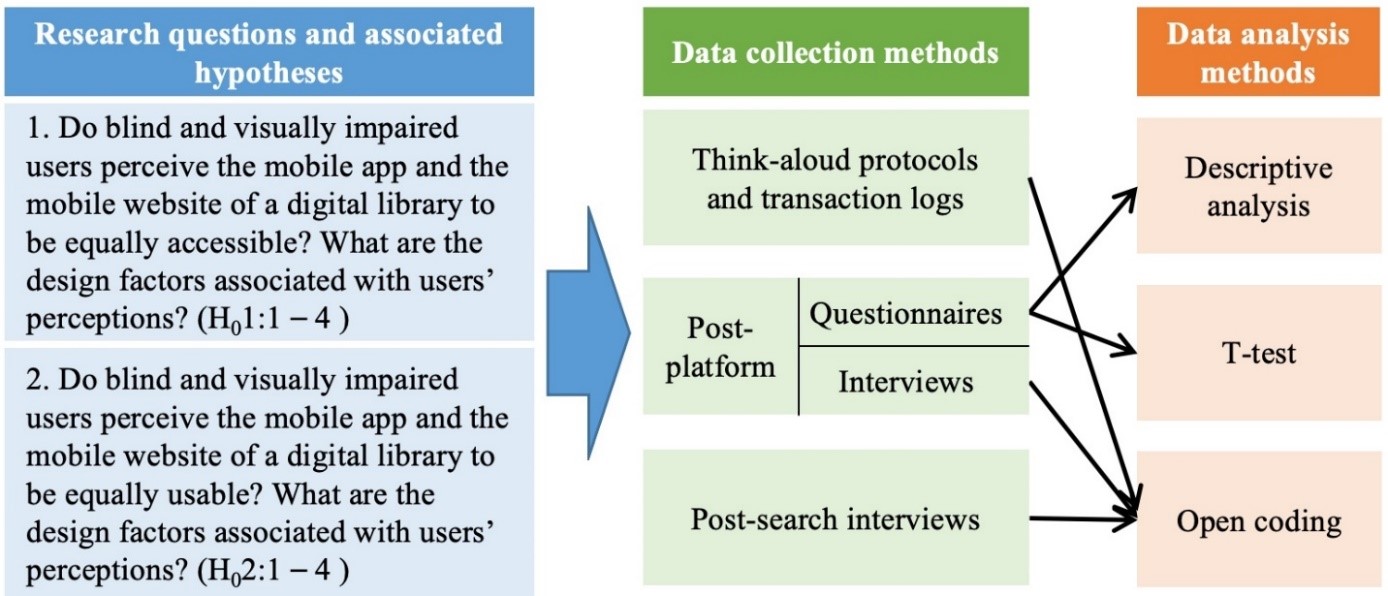

Figure 3 presents the research questions, associated data collection methods, and data analysis methods. There are two steps of analysis. First, quantitative analysis, mainly the t-test, was applied to examine the null hypotheses associated with the research questions. Quantitative data from the questionnaires were used for this analysis. Before performing a t-test, we checked the normality of the Likert scale data collected from the questionnaires. Specifically, skewness was used to check for normality of the data related to accessing a digital library, accessing a navigation component, accessing a feature, accessing information/objects, ease of learning, ease of use, efficiency, and satisfaction of the two digital library platforms. Data with a skewness value between -2 and +2 are considered to be normally distributed (George and Mallery, 2019). The collected data had skewness values between -2 and +2, indicating normal distribution, so a t-test was applied to test the null hypotheses associated with the two research questions. Second, after the statistical analysis, interview transcripts, think-aloud protocols, and transaction logs were analysed as supporting evidence for the similarities and differences of variables between the mobile app and the mobile website. Qualitative data were examined to identify types of design factors corresponding to the two research questions. Open coding, which is the process of analysing and categorizing the unstructured textual transcripts into meaningful concepts (Flick, 2018), was utilized for the analysis of design factors. Nine types of design factors were identified. Two coders coded the data independently, and the inter-coder reliability was 0.94 based on Holsti’s (1969) method. The codes were discussed within the research team until an agreement was reached, and disagreements or questions were resolved by group discussions to ensure the reliability of data analysis.

Table 2 presents the coding scheme of design factors for the two research questions with definitions. Examples of design factors are presented in the Results section.
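The skewness screening described in the data analysis can be illustrated with a short sketch. It assumes the adjusted Fisher-Pearson sample skewness (the form reported by common statistical packages); the paper does not state which estimator was used, and the ratings below are hypothetical, not study data.

```python
from math import sqrt


def sample_skewness(values):
    """Adjusted Fisher-Pearson sample skewness (an assumed estimator)."""
    n = len(values)
    if n < 3:
        raise ValueError("at least three observations are required")
    mean = sum(values) / n
    # Sample standard deviation with the n - 1 denominator.
    sd = sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    if sd == 0:
        raise ValueError("skewness is undefined for constant data")
    cubed = sum(((v - mean) / sd) ** 3 for v in values)
    return n / ((n - 1) * (n - 2)) * cubed


def within_skewness_rule(values, limit=2.0):
    """Apply the -2 to +2 rule of thumb cited in the text."""
    return -limit < sample_skewness(values) < limit


if __name__ == "__main__":
    # HYPOTHETICAL 7-point Likert ratings, for illustration only.
    ratings = [7, 7, 6, 7, 5, 6, 7, 4, 7, 6]
    print(round(sample_skewness(ratings), 2), within_skewness_rule(ratings))
```

A variable that passes this check would then be carried forward to the t-test; one that fails would call for a different test or a transformation, a decision the sketch leaves to the analyst.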

Figure 3: Research questions and associated data collection and data analysis methods

| Design Factor | Definition |
|---|---|
| Adequacy of alt text (AAT) | Whether alt text associated with an image item or the text extraction from a scanned document is discernible and meaningful |
| Adequacy of a feature label (AFL) | Whether a label of a feature is discernible and understandable |
| Adequacy of item metadata and collection description (AMD) | Whether the metadata associated with an item, or the summary of a collection is discernible and identifiable |
| Availability of contextual help information (ACH) | Whether a digital library provides instructions or tips to use a feature |
| Availability of navigation shortcut (ANS) | Whether a digital library offers a shortcut to reach a desired location from current location |
| Logical location of a feature (LLF) | Whether a digital library presents a desired feature or places the cursor focus at a relevant location |
| Logic of structure and simplicity of layout (LSL) | Whether a digital library follows a straightforward navigation structure and an uncluttered layout in content organization |
| Simplicity of installation and access (SIA) | Whether the installation of an app is easy and launching of a digital library requires registration |
| Stability of keyboard focus (SKF) | Whether an input cursor stays within an input field that is being used |

Table 2: Coding scheme of design factors
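The inter-coder reliability reported for this coding scheme is based on Holsti's method, which is commonly given as CR = 2M / (N1 + N2), where M is the number of coding decisions on which the two coders agree and N1 and N2 are the numbers of decisions made by each coder. The sketch below assumes both coders coded the same units in the same order; the function name and the example decisions are hypothetical, with only the factor abbreviations taken from Table 2.

```python
def holsti_reliability(coder_a, coder_b):
    """Holsti's coefficient of reliability, CR = 2M / (N1 + N2).

    ASSUMPTION: both lists cover the same units in the same order, so the
    number of agreements M is the count of positions with identical codes.
    """
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same units")
    if not coder_a:
        raise ValueError("no coding decisions supplied")
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    return 2 * agreements / (len(coder_a) + len(coder_b))


if __name__ == "__main__":
    # HYPOTHETICAL coding decisions for four excerpts, for illustration only.
    coder_1 = ["AFL", "SKF", "AAT", "LSL"]
    coder_2 = ["AFL", "SKF", "AAT", "ANS"]
    print(holsti_reliability(coder_1, coder_2))
```

Holsti's coefficient is a simple percentage-agreement measure and does not correct for chance agreement, which is worth keeping in mind when reading a single reliability figure.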

Results

Accessibility and associated design factors

As for accessibility (Research question 1), the results (Table 3) showed that there were significant differences between the mobile app and the mobile website for all perceived variable levels except accessing information/objects.

| Perception | Group | N | Mean | SD | t(df); p-value |
|---|---|---|---|---|---|
| Accessing a digital library | Mobile app | 30 | 6.5 | 0.9 | 3.30(58); 0.00 |
| | Mobile website | 30 | 5.4 | 1.6 | |
| Accessing a navigation component | Mobile app | 30 | 5.9 | 1.3 | 3.71(58); 0.00 |
| | Mobile website | 30 | 4.4 | 1.7 | |
| Accessing a feature | Mobile app | 30 | 6.0 | 1.2 | 4.74(58); 0.00 |
| | Mobile website | 30 | 4.4 | 1.4 | |
| Accessing information/objects | Mobile app | 30 | 4.2 | 2.1 | 0.97(58); 0.34 |
| | Mobile website | 30 | 3.7 | 1.9 | |

Table 3: T-test results of accessibility variables

Null hypotheses H01:1 - 3 (no significant difference between the mobile app and the mobile website of a digital library) were rejected. An independent-samples t-test was applied to compare the perceived levels of accessing a digital library, accessing a navigation component, and accessing a feature between the mobile app and the mobile website. The perceived level of accessing a digital library for the mobile app (M = 6.5, SD = 0.9) was significantly higher than for the mobile website (M = 5.4, SD = 1.6); t(58) = 3.30, p < .05. The perceived level of accessing a navigation component for the mobile app (M = 5.9, SD = 1.3) was significantly higher than for the mobile website (M = 4.4, SD = 1.7); t(58) = 3.71, p < .05. The perceived level of accessing a feature for the mobile app (M = 6.0, SD = 1.2) was significantly higher than for the mobile website (M = 4.4, SD = 1.4); t(58) = 4.74, p < .05.

Null hypothesis H01:4 (no significant difference between the mobile app and the mobile website of a digital library) was not rejected. An independent-samples t-test was applied to compare the perceived levels of accessing information/objects between the mobile app and the mobile website. The perceived level of accessing information/objects for the mobile app (M = 4.2, SD = 2.1) was not significantly higher than that for the mobile website (M = 3.7, SD = 1.9); t(58) = 0.97, p > .05.
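As a rough check on how the reported statistics relate to the summary values, the following minimal sketch recomputes an independent-samples t statistic from the means and standard deviations in Table 3. It assumes a pooled-variance t-test with 30 ratings per platform; the function names and the critical value constant are illustrative and not taken from the study. Because the table values are rounded to one decimal place, the recomputed statistics only approximate the reported ones.

```python
import math

# Summary statistics (mean, SD) copied from Table 3: (mobile app, mobile website).
TABLE_3 = {
    "Accessing a digital library": ((6.5, 0.9), (5.4, 1.6)),
    "Accessing a navigation component": ((5.9, 1.3), (4.4, 1.7)),
    "Accessing a feature": ((6.0, 1.2), (4.4, 1.4)),
    "Accessing information/objects": ((4.2, 2.1), (3.7, 1.9)),
}
N = 30  # ratings per platform

# Assumption: approximate two-tailed critical value of Student's t for
# df = 58 at alpha = .05, taken from standard t tables (not from the paper).
T_CRITICAL = 2.002


def pooled_t(mean1, sd1, mean2, sd2, n1=N, n2=N):
    """Return (t, df) for an independent-samples t-test with pooled variance."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


def summarize(table):
    """Recompute t for each variable and sort by mean difference, largest first."""
    rows = []
    for variable, ((m_app, sd_app), (m_web, sd_web)) in table.items():
        t, df = pooled_t(m_app, sd_app, m_web, sd_web)
        rows.append({
            "variable": variable,
            "mean_diff": round(m_app - m_web, 1),
            "t": t,
            "df": df,
            "significant": abs(t) > T_CRITICAL,
        })
    return sorted(rows, key=lambda row: row["mean_diff"], reverse=True)


if __name__ == "__main__":
    for row in summarize(TABLE_3):
        print(f"{row['variable']}: diff = {row['mean_diff']}, "
              f"t({row['df']}) = {row['t']:.2f}, significant = {row['significant']}")
```

Sorting by mean difference reproduces the ordering of the accessibility variables in Table 3, with accessing a feature showing the largest gap and accessing information/objects the smallest.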

The design factors identified through open coding analysis are presented with associated examples. In the data type labels, TA, PS, and PI stand for think-aloud protocols and transaction logs, post-search interviews, and post-platform interviews, respectively.

Accessing a feature shows the largest mean difference between the mobile app and the mobile website. The design factors notably associated with the different perceptions on accessing a feature are adequacy of a feature label and stability of keyboard focus. Some participants noted that accessing a feature is related to how they are labelled. For example, on the mobile app, P23 stated that she could access all features without problems due to its proper labelling:

‘Everything was labelled properly. I never struggled to get through anything I was navigating or working with’ (P23-M.App-PI).

On the mobile website, by contrast, P9 was confused by the ambiguous label used for the search feature. The search field was labelled as a pop-up button rather than a text field, which was not what P9 expected.

‘I’m hitting the search toggle. [opens search box] And I’m searching the digital content...where’s the text field, guys? There it is. So, it’s interesting they call that a pop-up button rather than a text field. That would be very confusing for...yeah, pop up button’ (P9-M.Web-TA).

Additionally, the results showed that shifting the input cursor influenced accessing features in the mobile website. P29 experienced difficulty accessing a search feature because the keyboard focus would move away from the intended placement, forcing him to access the feature multiple times to execute a search.

‘[opens search box] … [types ‘Berg address’. Moves out of search box] Oops. [closes search box] Looks like the search kind of moved. The keyboard disappeared on me [types ‘berg’. Focus indicator moves out of search box] Every time I’m trying to search, right, one thing that’s happening is it’s saying that there are some results available as I’m typing and I’m trying to access those results and every time I try to click on the suggestions or navigate towards the suggestions, the keyboard and search overall just moves away, shifts away, and closes itself. I don’t know if that’s a bug or that’s something that I’m doing’ (P29-M.Web-TA).

Following accessing a feature, accessing a navigation component shows the second-largest mean difference between the mobile app and the mobile website. For this variable, logic of structure and simplicity of layout and availability of navigation shortcut were the design factors behind their perceptions of the two platforms. The participants expressed their preferences about the two platforms’ structure and the layout. For example, P12 praised the mobile app for being uncluttered and straightforward:

‘It was similar to other apps I’ve used. It was simple. It was uncluttered. It was straightforward. It was everything that an app should be’ (P12-M.App-PI).

P1 also noted the simplicity of the mobile app but disapproved of the mobile website for being complex, making it difficult for him to recognize a navigation component to move from one place to another.

‘Mobile application was cleaner and easier to navigate around but with this [M. Web], there’s a lot more hurdles to get to one point that you want so this seems more complex to know exactly what you want or figure out how to navigate through this’ (P1-M.Web-TA).

Furthermore, the participants complained that the mobile website lacked shortcuts, requiring them to expend more effort in navigating within a digital library page. While P9 stated that the mobile app design enabled users to move around with a few swipes, P26 stressed that the mobile website needed to assign more headings or landmarks so that users could reach the desired sections on the page. This shortcoming negatively impacted the rating of the mobile website.

‘And then the search results are just in a list form so swiping. Once you’re in the result, it’s pretty simple, like the audio recording was just four or five swipes to get to that … Just really basic. No complexity’ (P9-M.App-PI).

‘the headings do get you from section to section and the links do take you through the links you need to find. … Navigating it would be easier if this website had more headings in it, for example. And more landmarks’ (P26-M.Web-PI).

Accessing information/objects shows the lowest mean difference between the mobile app and the mobile website. The participants encountered difficulty accessing information/objects such as photos, manuscripts, and newspapers in accomplishing their tasks for both the mobile app and the mobile website. Adequacy of alt text and adequacy of item metadata and collection description were mentioned in the participants’ explanations. P6 and P19 complained about the lack of alt text of an image item in both platforms.

‘Out of many items I looked at, only one had accessible text. So, the text of the items is largely inaccessible. There’s a lot of pictures without descriptive alt text, a lot of pictures without any real text. It’s terrible’ (P19-M.App-PI).

‘even the Lincoln Papers, those are all images. They’re text but they’re images of text and they’re unreadable’ (P6-M.Web-PI).

Furthermore, participants noted the absence of a meaningful title or descriptions needed for understanding the presented item. For example, P8 faced difficulty accessing the image description given to her, and she wasn’t sure whether the periodical existed in image or text format.

‘While it did give me the general title of the item, once I clicked on it, I didn’t know where to find the description anymore…And then it often would say it was a periodical, but I don’t know if that means it’s going to be an image file, a text file… So, yeah, I was a little disappointed by reading about items more and knowing what I’m getting myself into before needing to load and click things’ (P8-M.App-PI).

Similarly, for P10, the lack of uniqueness of titles hampered her ability to distinguish one item from another.

‘A lot of the items, they all kind of sound alike so I can’t really tell. I see a whole bunch of them are 1850 to 1890s or something, but I cannot tell the difference. The only one I can tell the difference is this one has 70 something images and this one has 15 or whatever so it doesn’t seem like it’s a comprehensive description of what they really are…’ (P10-M.Web-PI).

Usability and associated design factors

As to usability (Research question 2), the results (Table 4) showed there were significant differences in perceived levels of all variables between the mobile app and the mobile website.

| Perception | Group | N | Mean | SD | t(df); p-value |
|---|---|---|---|---|---|
| Ease of learning | Mobile app | 30 | 6.4 | 0.6 | 4.68(58); 0.00 |
| | Mobile website | 30 | 4.8 | 1.7 | |
| Ease of use | Mobile app | 30 | 5.9 | 1.4 | 5.01(58); 0.00 |
| | Mobile website | 30 | 3.9 | 1.7 | |
| Efficiency | Mobile app | 30 | 5.1 | 1.4 | 4.92(58); 0.00 |
| | Mobile website | 30 | 3.1 | 1.8 | |
| Satisfaction | Mobile app | 30 | 4.7 | 1.6 | 2.42(58); 0.02 |
| | Mobile website | 30 | 3.6 | 2.0 | |

Table 4: T-test results of usability variables

Null hypotheses H02:1 - 4 (no significant difference between the mobile app and the mobile website of a digital library) were rejected. An independent-samples t-test was applied to compare the perceived levels of ease of learning, ease of use, efficiency, and satisfaction between the mobile app and the mobile website. The perceived level of ease of learning for the mobile app (M = 6.4, SD = 0.6) was significantly higher than that for the mobile website (M = 4.8, SD = 1.7); t(58) = 4.68, p < .05. The perceived level of ease of use for the mobile app (M = 5.9, SD = 1.4) was significantly higher than that for the mobile website (M = 3.9, SD = 1.7); t(58) = 5.01, p < .05. The perceived level of efficiency for the mobile app (M = 5.1, SD = 1.4) was significantly higher than that for the mobile website (M = 3.1, SD = 1.8); t(58) = 4.92, p < .05. The perceived level of satisfaction for the mobile app (M = 4.7, SD = 1.6) was significantly higher than that for the mobile website (M = 3.6, SD = 2.0); t(58) = 2.42, p < .05.

Efficiency shows the largest mean difference between the mobile app and the mobile website in fulfilling their tasks. The participants mentioned that logic of structure and simplicity of layout and logical location of a feature affected their ratings for perceived efficiency. For example, P30 commented that the digital library’s mobile web structure had too many layers to reach the desired item of a search.

‘I’m looking for Abraham Lincoln, but it took a bit of a mental leap to know, how do I get Abraham Lincoln Papers to the Gettysburg Address specifically. That was not very clear. There was just not enough info, or too many layers of something that didn’t say...I don’t know. I just didn’t know how to get there…’ (P30-M.Web-PI).

The structure of the mobile website was not clear for her to locate the wanted information, which hampered her search efficiency in the digital library. In particular, some participants emphasized that the location of a feature hindered efficiency while using the mobile website. P6 stated that the location of the filter feature was not apparent, and he could not find a way to filter to a specific format quickly. Since the search filter was situated at the end of the mobile web page after the search result list, he faced difficulty recognizing the existence of such features.

‘I feel like it was well-organized and inefficiently as far as not a way to select for content. Like if I just wanted to look for audio, I should be able to do that and there’s not an obvious way to do that. I think that that’s an inefficiency that they could fix rather easily. If you can, I couldn’t find it’ (P6-M.Web-PI).

Ease of use shows the second-largest mean difference among usability variables. Logic of structure and simplicity of layout, availability of contextual help information, and availability of navigation shortcut were identified as design factors causing the different ratings for ease of use between the mobile app and the mobile website. As with other variables, the digital library structure and layout played a role in participants’ perceived ease of use. The comments on the mobile app were relatively positive; for example, P2 pointed out that the mobile app was easy to use because it had fewer elements and less clutter.

‘It’s easy to use because there’s just way less clutter on there. The results were just each a single element on the screen so with VoiceOver you just flick through and every time you flick you’re just getting a new result and so it’s faster to click through, to be able to get a sense of what results you actually have in front of you’ (P2-M.App-PI).

For the mobile website, however, P8 was overwhelmed by the results structure due to multiple layers to reach individual items. The complex result structure caused difficulty in using the search features of the digital library to retrieve the desired item.

‘It tells you, here’s a collection of 20,000 items. But what if I just want one item? I have to go in there and search again … It’s daunting that there’s so many layers to search through’ (P8-M.Web-PI).

Furthermore, perceived ease of use was related to the existence of contextual help information. P11 noted that the clear and self-explanatory design of the mobile app assisted her in using the digital library with ease.

‘Most of the onscreen items … were self-explanatory in terms of search or browse. And I had a basic idea of what those terms generally mean. When it says search that means the keyboard comes up and when the mention of browse, that means that there’s a list of some things’ (P11-M.App-PI).

On the other hand, P4 commented on the need for the mobile website to guide him to get started using the digital library:

‘the lack of a clear way to orient yourself to the page and figure out where you should go to find information or where to go on the page...’ (P4-M. Web-PI).

In addition, availability of navigation shortcut impacted blind and visually impaired users’ ratings for ease of use. P28 suggested that the mobile website needed more headings or landmarks for blind and visually impaired users to use the digital library with ease.

‘finding things is not easy and I think part of that has to do with how they lay the website out. Navigating it would be easier if this website had more headings in it, for example. And more landmarks’ (P28-M.Web-PI).

Satisfaction shows the lowest mean difference between the mobile app and the mobile website. Logic of structure and simplicity of layout explains the difference in the ratings for the two platforms, while adequacy of alt text illustrates the low mean gap between the two. P22 compared the two platforms, crediting the simple and straightforward design of the mobile app and the cluttered layout of the mobile website for her preference of the mobile app:

‘The app is so streamlined and so compact and everything is there. No clutter…The website was kind of cumbersome and cluttered and you didn’t know necessarily where you were’ (P22-PS).

In contrast, the insufficient alt text was a negative factor when explaining their perceived satisfaction with both platforms. P14 indicated that a deficiency of both platforms was the lack of descriptive alt text in accessing and comprehending items:

‘They both have a lot of good information and a huge amount of information... but I think they both involve the same issue of having things for screen readers that there’s no description there’ (P14-PS).

Discussion

Highlights of the key findings

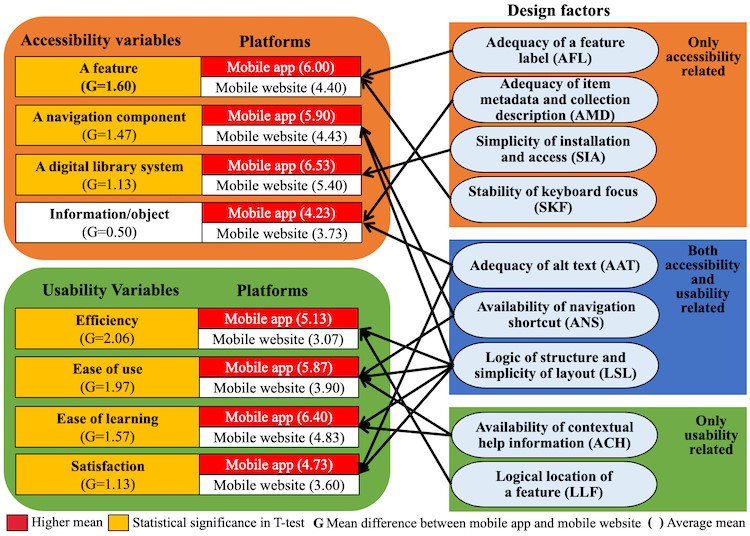

Since no prior studies have investigated blind and visually impaired users’ interactions with mobile platforms in the digital library environment, this research presents some unique findings (Figure 4). This study shows that the mobile app was rated significantly better than the mobile website for all accessibility and usability variables except accessing information/objects. Even though the mobile app is more accessible and usable for blind and visually impaired users because it is specifically designed for mobile devices (Carvalho et al., 2018), mobile web versions of digital libraries are more often used by them since they rarely install digital library apps on their mobile devices. Therefore, while most digital libraries are accessed through mobile websites by blind and visually impaired users, these findings reveal that the sight-centred design of digital library mobile web interfaces includes many of the accessibility and usability design problems that hinder them from effectively interacting with a digital library. While design factors are neutral to the accessibility and usability of the two platforms, design problems highlight the issues associated with some design factors.

Figure 4: Relationships between design factors and accessibility and usability variables

This study indicates that the design problems of the mobile website are caused by three primary issues: 1) the responsive design that adjusts the web-based interface to fit a mobile device (Guerreiro et al., 2019; Nogueira et al., 2019), 2) the complexity of digital library structure and formats (Xie and Matusiak, 2016), and 3) the sight-centred design that does not consider blind and visually impaired users’ unique information-seeking behaviours, such as going through a page in a linear process (Berget and MacFarlane, 2020; Carvalho et al., 2018). Additionally, some issues are inherited from a system’s desktop version (Guerreiro et al., 2019). While previous research only examines one of the above aspects, this paper holistically discusses all the aspects that affect accessibility and usability problems that blind and visually impaired users face.

Digital library accessibility and related design problems

Among accessibility variables, accessing a feature and accessing a navigation component show the largest gaps between the two mobile platforms. In terms of accessing a feature, the primary type of design factor is adequacy of a feature label. While previous research identified the problem of improper labels for different features in both platforms (Alajarmeh, 2022; Wentz and Tressler, 2017), this study reveals that the problem of improper labels is more pronounced for the mobile website than the mobile app, mainly because the design of the mobile website neglects the needs of blind and visually impaired users. For example, the search toggle at the top of the mobile website does not indicate that it opens a text field, and the search field is initially hidden, so these users are confused about the function of the toggle, whereas sighted users can recognize the search icon at first glance.

In terms of accessing a navigation component, logic of structure and simplicity of layout is the main design factor that highlights the difference between the two mobile platforms. Even though the findings of this study echo previous research, confirming that the mobile website has the problem of system structure and layout, previous studies have focused on inconsistent navigation layout and structure (Guerreiro et al., 2019; Rodrigues et al., 2017). This study highlights the complexity of digital library design in the mobile website, representing multiple layers and types of information: digital library structure, search results structure, and item/object structure. The complicated structure and layout of digital libraries cause more challenges for blind and visually impaired users who go through a linear process of exploring or inspecting a page using screen readers. Unlike previous works, this study further discovers that the mobile website of a digital library lacks the adequate navigation shortcuts that blind and visually impaired users need to move between different sections and within the same section.

Among accessibility variables, there is no significant difference between the two mobile platforms in accessing information/objects. Both have the same design factors: adequacy of alt text and adequacy of item metadata and collection description. Not surprisingly, the lack of alt text is also found in other types of online systems; previous researchers have pointed out that the lack of alt text contributes to problems for blind and visually impaired users in understanding an information object or item (Alajarmeh, 2022; Carvalho et al., 2018). The collections in a digital library add another layer of confusion for blind and visually impaired users that they do not encounter in other types of online systems. In this study, many users complained about the inadequacy of item metadata and collection descriptions within the digital library. In particular, in the mobile website, the digital library only presents part of the descriptions of digital collections because of space limitations. Moreover, in the result lists, both platforms present similar item titles, so blind and visually impaired users could not differentiate one from another. At the same time, blind and visually impaired users would like to inspect the key metadata of an item before they click the item. Both platforms present each item with only the title, date, and creators/contributors, whereas many users would like to know the format of an item to determine whether they are able to access it.

Digital library’s usability and related design problems

This study identified more usability-related design factors than accessibility-related design factors on both platforms of the digital library. Simultaneously, the mobile website presented more design problems for usability variables. Among usability variables, efficiency and ease of use show the biggest gap. In terms of efficiency, the main types of design factors are logical location of a feature and logic of structure and simplicity of layout. Logical location of a feature is one key design factor behind efficiency. No previous research has identified the same issue. This study reveals that the mobile website’s sight-centred design causes issues for blind and visually impaired users who interact with a digital library through a linear process. For example, the filter feature of the digital library in the mobile website is at the bottom of the page, making it difficult for these users to find. While the mobile app of the digital library puts “sort by” and “filter” together, the mobile website separates these two features at different locations, which also leads to more confusion.

Logic of structure and simplicity of layout is the associated design factor that indicates the differences between the two platforms for both efficiency and ease of use. While Khan and Khusro (2019) pointed out that the ordering of layout and the ordering of menus are critical for blind and visually impaired users to easily use an email mobile app, this study shows that logic of structure and simplicity of layout is much more imperative for them to use a complex digital library. A digital library consists of multiple layers of structure, multiple access points, various collections, diverse formats of items, specific metadata, etc. The mobile website of the Library of Congress Digital Collections is very cluttered, with overwhelming information and various access points, hindering blind and visually impaired users from efficiently and easily using the digital library. Availability of navigation shortcut is another design factor related to ease of use. This study is in agreement with Morrison et al.’s (2018) and Othman’s (2021) results that a mobile app is easier to use than a mobile website because of its straightforward navigation components, even though these two studies involve two different types of users: blind and visually impaired users and sighted users. This study further points out that the availability of navigation shortcuts is essential for blind and visually impaired users to interact easily with a digital library. The lack of navigation shortcuts for the mobile website makes it difficult for them to move from one element to another. Availability of contextual help information is the third design factor associated with ease of use. While the mobile app offers contextual help information when blind and visually impaired users need assistance, the mobile website disorients them when exploring digital library pages.

Blind and visually impaired users’ satisfaction with both platforms of the digital library is low, and many design factors impact them. Among them, the most critical design factors are adequacy of alt text and logic of structure and simplicity of layout. The lack of descriptive alt text on both platforms prevents them from effectively accessing and understanding a digital library’s items or elements. Again, the complexity of a digital library structure condensed to a mobile web interface lowers their overall satisfaction level.

Design implications

Among all the design factors, logic of structure and simplicity of layout, availability of navigation shortcut, and adequacy of alt text influenced both accessibility and usability of the mobile app and the mobile website. This section concentrates on the design implications for the mobile website because it has many more problems than the mobile app of the digital library. Logic of structure and simplicity of layout is the design factor affecting five of the accessibility and usability variables. For blind and visually impaired users, a shallower and wider navigational structure is the best option (Hochheiser and Lazar, 2010; Nogueira et al., 2019). Considering the complex design of a mobile website, it would be helpful to first provide an overview of the digital library structure and give blind and visually impaired users an idea of the digital library’s organization, such as options for finding and accessing relevant collections/items as well as how to use Help. Second, an overview of search results is critical for these users to understand the structure of search results. Possible structures include 1) two levels of search results consisting of collection-level and item-level, 2) only item-level, or 3) mixed results. Moreover, users should be able to turn these overviews on and off so that experienced blind and visually impaired users and sighted users can skip them.
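The search results overview described above could be sketched as follows. This is only an illustration under stated assumptions: the `SearchResult` type, the `results_overview` function, the wording of the announcement, and the sample records are all hypothetical and do not describe the interface of the digital library examined in this study. The sketch builds a short text summary that a screen reader could announce before the result list and lets the user switch it off.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SearchResult:
    """Hypothetical record for one entry in a result list."""
    title: str
    level: str      # "collection" or "item"
    fmt: str = ""   # item format such as "audio" or "image"; empty for collections


def results_overview(results, enabled=True):
    """Build a short overview for a screen reader to announce.

    Returns an empty string when the user has switched the overview off.
    """
    if not enabled:
        return ""
    collections = sum(1 for r in results if r.level == "collection")
    items = [r for r in results if r.level == "item"]
    overview = (f"{len(results)} results: {collections} collection-level, "
                f"{len(items)} item-level.")
    # Report item formats so users can judge accessibility before opening an item.
    formats = Counter(r.fmt for r in items if r.fmt)
    if formats:
        listed = ", ".join(f"{name}: {count}" for name, count in sorted(formats.items()))
        overview += f" Item formats: {listed}."
    return overview


# Hypothetical sample data for illustration only.
SAMPLE_RESULTS = [
    SearchResult("Abraham Lincoln Papers", "collection"),
    SearchResult("Gettysburg Address", "item", "image"),
    SearchResult("Sample manuscript page", "item", "image"),
    SearchResult("Sample audio recording", "item", "audio"),
]

if __name__ == "__main__":
    print(results_overview(SAMPLE_RESULTS))
    print(repr(results_overview(SAMPLE_RESULTS, enabled=False)))
```

Including item formats in the overview reflects the participants' wish to know whether an item is an image, text, or audio file before opening it.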

Third, it would be helpful for a mobile website to present the main functions of a digital library that users most frequently use rather than an alphabetical list of the collections. Examples of recommended functions include Search digital collections, Browse digital collections, About the digital library, etc. Fourth, the digital library layout must be adaptable without losing structure and information, ensuring that blind and visually impaired users can perform two-dimensional navigation. As to availability of navigation shortcut, creating shortcuts, such as assigning headings and/or landmarks, is an effective approach for blind and visually impaired users to jump from one page/section to another. In addition, assigning more headings and/or landmarks, in particular in the results section, enables them to orient themselves to a page and to navigate easily to various sections of a page. In terms of adequacy of alt text, alt text is needed for visual items, and more importantly, descriptive alt text helps blind and visually impaired users differentiate one collection/item from another. As mobile devices hide some content because of the screen size, it is difficult for them to select a collection/item from multiple collections/items with similar titles. Furthermore, alt text needs to provide both content and format of a collection/item.
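One way a developer might check a page against the heading, landmark, and alt text recommendations is a small audit script. The sketch below uses Python's standard `html.parser` module; the class name, the sets of tags and roles treated as landmarks, and the sample markup with its file names are assumptions made for illustration, not part of the study. It counts headings and landmarks and lists images that lack a non-empty `alt` attribute.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
# Assumption: a simplified set of HTML elements and ARIA roles that screen
# readers commonly expose as landmarks.
LANDMARK_TAGS = {"main", "nav", "header", "footer", "aside"}
LANDMARK_ROLES = {"main", "navigation", "banner", "contentinfo",
                  "complementary", "search", "region"}


class NavigationAudit(HTMLParser):
    """Collect headings, landmarks, and images without alt text."""

    def __init__(self):
        super().__init__()
        self.headings = 0
        self.landmarks = 0
        self.images_without_alt = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in HEADING_TAGS:
            self.headings += 1
        if tag in LANDMARK_TAGS or attributes.get("role") in LANDMARK_ROLES:
            self.landmarks += 1
        # An empty alt is legitimate for decorative images, so flagged images
        # need human review rather than automatic correction.
        if tag == "img" and not (attributes.get("alt") or "").strip():
            self.images_without_alt.append(attributes.get("src", "(no src)"))


def audit(html):
    """Parse an HTML string and return the populated audit object."""
    parser = NavigationAudit()
    parser.feed(html)
    parser.close()
    return parser


# Hypothetical markup for illustration only.
SAMPLE_PAGE = """
<header><h1>Digital Collections</h1></header>
<nav><a href="/search">Search digital collections</a></nav>
<main>
  <h2>Search results</h2>
  <div role="search"><input type="text" aria-label="Search digital collections"></div>
  <img src="lincoln-letter.jpg">
  <img src="map.jpg" alt="Hand-drawn map, image format">
</main>
"""

if __name__ == "__main__":
    report = audit(SAMPLE_PAGE)
    print("headings:", report.headings)
    print("landmarks:", report.landmarks)
    print("images without alt text:", report.images_without_alt)
```

An automated count like this can only show that shortcuts and alt text exist; whether a label or description is meaningful still has to be judged by people, ideally screen reader users.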

Conclusion

As a pioneering work in comparing accessibility and usability of the two mobile platforms of a digital library, this study shows that blind and visually impaired users cannot effectively access and use digital libraries in mobile contexts due to their sight-centred designs. The findings of the study help policymakers, researchers, and practitioners understand digital library design problems and enable developers to enhance digital libraries for universal access. This study demonstrates that the mobile app performs better in supporting blind and visually impaired users when accessing and using a digital library through mobile devices. It also discovers some unique design factors that affect their perceptions in evaluating the two mobile platforms of a digital library. This study helps researchers understand how the design of the mobile platforms supports or hinders blind and visually impaired users when accessing and using a digital library. As a result, mobile platforms, especially mobile websites, need to improve their designs. Offering an overview of digital library structure, presenting its main functions, and designing an adaptable layout for two-dimensional navigation are essential to enhance the accessibility and usability of mobile websites.

This study also has limitations. First, examining blind and visually impaired users’ interaction with one digital library cannot generate results applicable to other digital libraries. Second, 30 blind and visually impaired users cannot represent all users of this group. Third, only iPhone users were recruited to participate in the study because iOS devices represent the majority of mobile device usage for blind and visually impaired users. To generalize the results, further research needs to expand the number of participants and consider diverse digital library types. Moreover, the recruitment of participants needs to extend to users of other types of iOS devices and Android devices so researchers can better understand blind and visually impaired users’ accessibility and usability issues on diverse types of mobile devices. Finally, future research can also further investigate the relationships between types of accessibility and usability variables when blind and visually impaired users interact with mobile platforms of digital libraries.

Acknowledgements

The authors thank University of Wisconsin-Milwaukee Discovery and Innovation Grant and IMLS Leadership Grants for Libraries for funding for this project.

About the authors

Iris Xie is a Professor at the School of Information Studies, University of Wisconsin-Milwaukee. She can be contacted at: hiris@uwm.edu

Tae Hee Lee is a clinical assistant professor at the College of Information, University of North Texas. He can be contacted at: Taehee.Lee@unt.edu

Hyun Seung Lee is a PhD student at the School of Information Studies, University of Wisconsin-Milwaukee. She can be contacted at: lee649@uwm.edu

Shengang Wang is a PhD student at the School of Information Studies, University of Wisconsin-Milwaukee. He can be contacted at: shengang@uwm.edu

Rakesh Babu is a Lead Accessibility Scientist at Envision, Inc. He can be contacted at: rakesh.babu@envisionus.com

References

Abraham, C. H., Boadi-Kusi, B., Morny, E. K. A., & Agyekum, P. (2021). Smartphone usage among people living with severe visual impairment and blindness. Assistive Technology, 34(5), 611-618. https://doi.org/10.1080/10400435.2021.1907485

Ahmad, N. A. N., Hamid, N. I. M., & Lokman, A. M. (2021). Performing usability evaluation on multi-platform based application for efficiency, effectiveness and satisfaction enhancement. International Journal of Interactive Mobile Technologies, 15(10), 103-117.

Alajarmeh, N. (2022). The extent of mobile accessibility coverage in WCAG 2.1: sufficiency of success criteria and appropriateness of relevant conformance levels pertaining to accessibility problems encountered by users who are visually impaired. Universal Access in the Information Society, 21(2), 507–532. https://doi.org/10.1007/s10209-020-00785-w

Alonso-Ríos, D., Vázquez-García, A., Mosqueira-Rey, E., & Moret-Bonillo, V. (2009). Usability: a critical analysis and a taxonomy. International Journal of Human-Computer Interaction, 26(1), 53-74. https://doi.org/10.1080/10447310903025552

Aqle, A., Al-Thani, D., & Jaoua, A. (2020). Can search result summaries enhance the web search efficiency and experiences of the visually impaired users?. Universal Access in the Information Society, 21(1), 171-192. https://doi.org/10.1007/s10209-020-00777-w

Babu, R. (2013). Understanding challenges in non-visual interaction with travel sites: an exploratory field study with blind users. First Monday, 18(12). https://doi.org/10.5210/fm.v18i12.4808 http://firstmonday.org/ojs/index.php/fm/article/view/4808. (Internet Archive)

Belkin, N. J., & Vickery, A. (1985). Interaction in Information Systems: A review of research from document retrieval to knowledge-based systems. British Library.

Berget, G., & MacFarlane, A. (2020). What is known about the impact of impairments on information seeking and searching? Journal of the Association for Information Science and Technology, 71(5), 596-611. https://doi.org/10.1002/asi.24256

Carvalho, M. C. N., Dias, F. S., Reis, A. G. S., & Freire, A. P. (2018). Accessibility and usability problems encountered on websites and applications in mobile devices by blind and normal-vision users. Proceedings of the 33rd Annual ACM Symposium on Applied Computing, Pau, France, April 9-April 13, 2018. (pp. 2022-2029). https://doi.org/10.1145/3167132.3167349

Craven, J. (2003). Access to electronic resources by visually impaired people. Information Research, 8(4). Paper 156. http://informationr.net/ir/8-4/paper156.html (Internet Archive)

Firozjah, H. A., Dizaji, A. J., & Hafezi, M. A. (2019). Usability evaluation of digital libraries in Tehran public universities. International Journal of Information Science and Management (IJISM), 17(2), 71-83.

Flick, U. (2018). An introduction to qualitative research. Sage.

George, D., & Mallery, P. (2019). IBM SPSS statistics 26 step by step: A simple guide and reference. Routledge.

Grussenmeyer, W., & Folmer, E. (2017). Accessible touchscreen technology for people with visual impairments: a survey. ACM Transactions on Accessible Computing (TACCESS), 9(2), 1-31.

Guerreiro, T., Carriço, L., & Rodrigues, A. (2019). Mobile Web. In Y. Yesilada & S. Harper (Eds.), Web Accessibility: A Foundation for Research (pp. 737-754). Springer.

Hochheiser, H., & Lazar, J. (2010). Revisiting breadth vs. depth in menu structures for blind users of screen readers. Interacting with Computers, 22(5), 389-398.

Holsti, O. R. (1969). Content analysis for the social sciences and humanities. Addison-Wesley.

Interaction Design Foundation. (2020). An introduction to usability. https://www.interaction-design.org/literature/article/an-introduction-to-usability (Internet Archive)

Jeng, J. (2011). Evaluation of information systems. In C. H. Davis & D. Shaw (Eds.), Introduction to information science and technology (pp. 129-142). American Society for Information Science and Technology.

Jobe, W. (2013). Native apps vs. mobile web apps. International Journal of Interactive Mobile Technologies, 7(4), 27-32. http://dx.doi.org/10.3991/ijim.v7i4.3226

Kane, S. K. (2007). Everyday inclusive web design: an activity perspective. Information Research, 12(3). Paper 309. http://informationr.net/ir/12-3/paper309.html (Internet Archive)

Khan, A., Khusro, S., & Alam, I. (2018). Blindsense: an accessibility-inclusive universal user interface for blind people. Engineering, Technology & Applied Science Research, 8(2), 2775-2784. https://doi.org/10.48084/etasr.1895

Khan, A., & Khusro, S. (2019). Blind-friendly user interfaces–a pilot study on improving the accessibility of touchscreen interfaces. Multimedia Tools and Applications, 78(13), 17495-17519. https://doi.org/10.1007/s11042-018-7094-y

Kinley, K., Tjondronegoro, D., Partridge, H., & Edwards, S. (2014). Modeling users' web search behavior and their cognitive styles. Journal of the Association for Information Science and Technology, 65(6), 1107-1123. https://doi.org/10.1002/asi.23053

Leuthold, S., Bargas-Avila, J. A., & Opwis, K. (2008). Beyond web content accessibility guidelines: design of enhanced text user interfaces for blind internet users. International Journal of Human-Computer Studies, 66(4), 257-270. https://doi.org/10.1016/j.ijhcs.2007.10.006

Marcotte, E. (2014). Responsive web design. A Book Apart.

Menzi-Cetin, N., Alemdağ, E., Tüzün, H., & Yıldız, M. (2017). Evaluation of a university website's usability for visually impaired students. Universal Access in the Information Society, 16, 151-160. https://doi.org/10.1007/s10209-015-0430-3

Mi, N., Cavuoto, L. A., Benson, K., Smith-Jackson, T., & Nussbaum, M. A. (2014). A heuristic checklist for an accessible smartphone interface design. Universal Access in the Information Society, 13, 351-365. https://doi.org/10.1007/s10209-013-0321-4

Morrison, L. G., Geraghty, A. W., Lloyd, S., Goodman, N., Michaelides, D. T., Hargood, C., & Yardley, L. (2018). Comparing usage of a web and app stress management intervention: an observational study. Internet Interventions, 12, 74-82. https://doi.org/10.1016/j.invent.2018.03.006

Nielsen, J. (2012). Usability 101: Introduction to Usability. Nielsen Norman Group. https://www.nngroup.com/articles/usability-101-introduction-to-usability/ (Internet Archive)

Nogueira, T. C., Ferreira, D. J., Carvalho, S. T., Berretta, L. O., & Guntijo, M. R. (2019). Comparing sighted and blind users task performance in responsive and non-responsive web design. Knowledge and Information Systems, 58(2), 319-339. https://doi.org/10.1007/s10115-018-1188-8

Oh, U., Joh, H., & Lee, Y. (2021). Image accessibility for screen reader users: a systematic review and a road map. Electronics, 10(8), 953. https://doi.org/10.3390/electronics10080953

Omotayo, F. O., & Haliru, A. (2020). Perception of task-technology fit of digital library among undergraduates in selected universities in Nigeria. The Journal of Academic Librarianship, 46(1), 102097. https://doi.org/10.1016/j.acalib.2019.102097

Othman, S. (2021). Investigation of smartphone usage mobile applications versus mobile websites. The International Journal of Engineering and Information Technology, 7(2), 101-106.

Pant, A. (2015). Usability evaluation of an academic library website: experience with the Central Science Library, University of Delhi. The Electronic Library, 33(5), 896-915. https://doi.org/10.1108/EL-04-2014-0067

Qureshi, H. H., & Wong, D. H. T. (2020). Usability analysis of adaptive characteristics in mobile phones for visually impaired people. Proceedings of the 2020 International Conference on Computer Communication and Information Systems, Ho Chi Minh City, Viet Nam, August 1-August 3, 2020. (pp. 63-67). Association for Computing Machinery (ACM). https://doi.org/10.1145/3418994.3419005

Rodrigues, A., Nicolau, H., Montague, K., Guerreiro, J., & Guerreiro, T. (2020). Open challenges of blind people using smartphones. International Journal of Human–Computer Interaction, 36(17), 1605-1622. https://doi.org/10.1080/10447318.2020.1768672

Rodrigues, A., Santos, A., Montague, K., & Guerreiro, T. (2017). Improving smartphone accessibility with personalizable static overlays. Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility, Maryland, USA, October 20-November 1, 2017. (pp. 37-41). Association for Computing Machinery (ACM). https://doi.org/10.1145/3132525.3132558

Ross, A. S., Zhang, X., Fogarty, J., & Wobbrock, J. O. (2017). Epidemiology as a framework for large-scale mobile application accessibility assessment. Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility, Maryland, USA, October 20-November 1, 2017. (pp. 2-11). Association for Computing Machinery (ACM). https://doi.org/10.1145/3132525.3132547

Sbaffi, L., & Zhao, C. (2020). Modeling the online health information seeking process: Information channel selection among university students. Journal of the Association for Information Science and Technology, 71(2), 196-207. https://doi.org/10.1002/asi.24230

Tupikovskaja-Omovie, Z., Tyler, D. J., Dhanapala, S., & Hayes, S. (2015). Mobile app versus website: a comparative eye-tracking case study of Topshop. International Journal of Social, Behavioral, Educational, Economic, Business and Industrial Engineering, 9(10), 3251-3258.

Vigo, M., & Harper, S. (2014). A snapshot of the first encounters of visually disabled users with the web. Computers in Human Behavior, 34, 203-212. https://doi.org/10.1016/j.chb.2014.01.045

WebAIM. (2021). Screen reader user survey #9. Retrieved December 15, 2021, from https://webaim.org/projects/screenreadersurvey9/#mobilebrowsers (Internet Archive)

Weichbroth, P. (2020). Usability of mobile applications: a systematic literature study. IEEE Access, 8, 55563-55577. https://doi.org/10.1109/ACCESS.2020.2981892

Wentz, B., & Tressler, K. (2017). Exploring the accessibility of banking and finance systems for blind users. First Monday, 22(3). https://doi.org/10.5210/fm.v22i3.7036

Wilson, C. (2009). User experience re-mastered: your guide to getting the right design. Morgan Kaufmann.

World Wide Web Consortium. (2022). Introduction to Web Accessibility. https://www.w3.org/WAI/fundamentals/accessibility-intro/ (Internet Archive)

Xie, I., Babu, R., Joo, S., & Fuller, P. (2015). Using digital libraries non-visually: understanding the help seeking situations of blind users. Information Research, 20(2). Paper 673. http://InformationR.net/ir/20-2/paper673.html (Internet Archive)

Xie, I., Babu, R., Lee, H. S., Wang, S., & Lee, T. H. (2021). Orientation tactics and associated factors in the digital library environment: comparison between blind and sighted users. Journal of the Association for Information Science and Technology, 72(8), 995-1010. https://doi.org/10.1002/asi.24469

Xie, I., Babu, R., Lee, T. H., Castillo, M. D., You, S., & Hanlon, A. M. (2020). Enhancing usability of digital libraries: Designing help features to support blind and visually impaired users. Information Processing & Management, 57(3), 102110. https://doi.org/10.1016/j.ipm.2019.102110

Xie, I., Joo, S., & Matusiak, K. (2018). Multifaceted evaluation criteria of digital libraries in academic settings: similarities and differences from different stakeholders. The Journal of Academic Librarianship, 44(6), 854-863. https://doi.org/10.1016/j.acalib.2018.09.002

Xie, I., & Matusiak, K. (2016). Discover digital libraries: Theory and practice. Elsevier.

Xie, I., Wang, S., & Saba, M. (2021). Studies on blind and visually impaired users in LIS literature: a review of research methods. Library & Information Science Research, 43(3), 101109. https://doi.org/10.1016/j.lisr.2021.101109

Xu, F., & Du, J. T. (2018). Factors influencing users’ satisfaction and loyalty to digital libraries in Chinese universities. Computers in Human Behavior, 83, 64-72. https://doi.org/10.1016/j.chb.2018.01.029

Zhang, D., Zhou, L., Uchidiuno, J. O., & Kilic, I. Y. (2017). Personalized assistive web for improving mobile web browsing and accessibility for visually impaired users. ACM Transactions on Accessible Computing (TACCESS), 10(2), 1-22. https://doi.org/10.1145/3053733