Information Research

Vol. 29 No. 1 2024

Who is using ChatGPT and why? Extending the Unified Theory of Acceptance and Use of Technology (UTAUT) model

DOI: https://doi.org/10.47989/ir291647

Abstract

Introduction. Since its public launch, ChatGPT has gained the world's attention, demonstrating the immense potential of artificial intelligence.

Method. To explore factors influencing the adoption of ChatGPT, we ran structural equation modelling to test the unified theory of acceptance and use of technology model while incorporating relative risk (vs. benefit) perception and emotional factors into its original form to gain a better understanding of the process.

Analysis. This study utilized partial least squares–structural equation modelling (PLS-SEM) with SmartPLS4.

Results. The findings revealed that in addition to individuals' technology-specific perceptions (i.e., performance expectancy, effort expectancy, social influence, and facilitating conditions), relative risk perception and emotional factors play significant roles in predicting favourable attitudes and behavioural intentions towards ChatGPT.

Conclusion. Our extended model fits the data well, suggesting that it is not merely a matter of convenience but also of people's reservations, expectations, and emotions toward technology, which significantly influence their willingness to adopt ChatGPT.

Introduction

Generative artificial intelligence represents a revolutionary milestone, enabling unprecedented advances in the creation and interpretation of complex data. This technology has given rise to an array of systems, notably OpenAI's ChatGPT, Google’s Bard, and several others. Within this innovative landscape, ChatGPT, since its public debut in November 2022, has emerged as a particularly significant advance, showcasing the capabilities and transformative potential of artificial intelligence. ChatGPT is a versatile language model that can compose music, write news articles, debug computer code, and answer exam questions in response to prompts. Its arrival caused a global sensation: ChatGPT set a record for the fastest-growing application in history when it reached 100 million active users just two months after its launch. It had more than thirteen million daily visitors as of January 2023 and was predicted to surpass one billion users by the end of 2023 (Ruby, 2023).

Accordingly, there has been excitement, as well as concern, over how ChatGPT will change our lives. Many anticipate that ChatGPT will transform workplaces by enhancing efficiency and productivity. For instance, a recent study indicates that white-collar employees who used ChatGPT were 37% more efficient than those relying on manual work methods (Noy and Zhang, 2023). On the other hand, experts warn against the potential misuse of ChatGPT by e-mail scammers, bots, stalkers, and hackers, raising the question of whether we should be optimistic or cautious about this emerging technology (Goldstein et al., 2023). Such mixed signals of hope and fear make it challenging to predict public acceptance of ChatGPT.

Previous research on public acceptance of new technology has largely focused on individuals' perceived convenience factors, such as performance expectancy, effort expectancy, and facilitating conditions (Khechine et al., 2016; Venkatesh et al., 2003). Given the significant societal implications of ChatGPT, however, how people weigh the potential benefits and risks of this transformative technology, in addition to convenience factors, is likely to have a great impact on their willingness to adopt it. For example, nearly 600 years of technology history show that the general public often resists new technologies when the benefits accrue only to small sections of society while the risks are more widely distributed, regardless of convenience (Juma, 2016). Furthermore, given the extensive body of research highlighting the role of emotions in shaping public attitudes and intentions in various contexts (e.g., Nabi et al., 2018; Petty and Briñol, 2014), it is plausible that people's positive or negative emotions towards ChatGPT also influence their perceptions and adoption of it. However, how these factors shape public acceptance of ChatGPT has yet to be examined empirically.

Against this backdrop, the current study aims to contribute to the growing body of ChatGPT research in two ways. First, drawing on the unified theory of acceptance and use of technology, it empirically examines which factors predict public attitudes toward ChatGPT and how those attitudes shape the public's intentions to use it. Second, we incorporate relative risk perception and emotional factors (e.g., enthusiasm, worry, concern, anxiety) into the original model to gain a better understanding of the psychological process underlying public acceptance of ChatGPT. As the development and deployment of ChatGPT continue, considering the multifaceted nature of societal and emotional factors will help ensure that ChatGPT is integrated into society in an ethical and responsible way.

Unified theory of acceptance and use of technology (UTAUT)

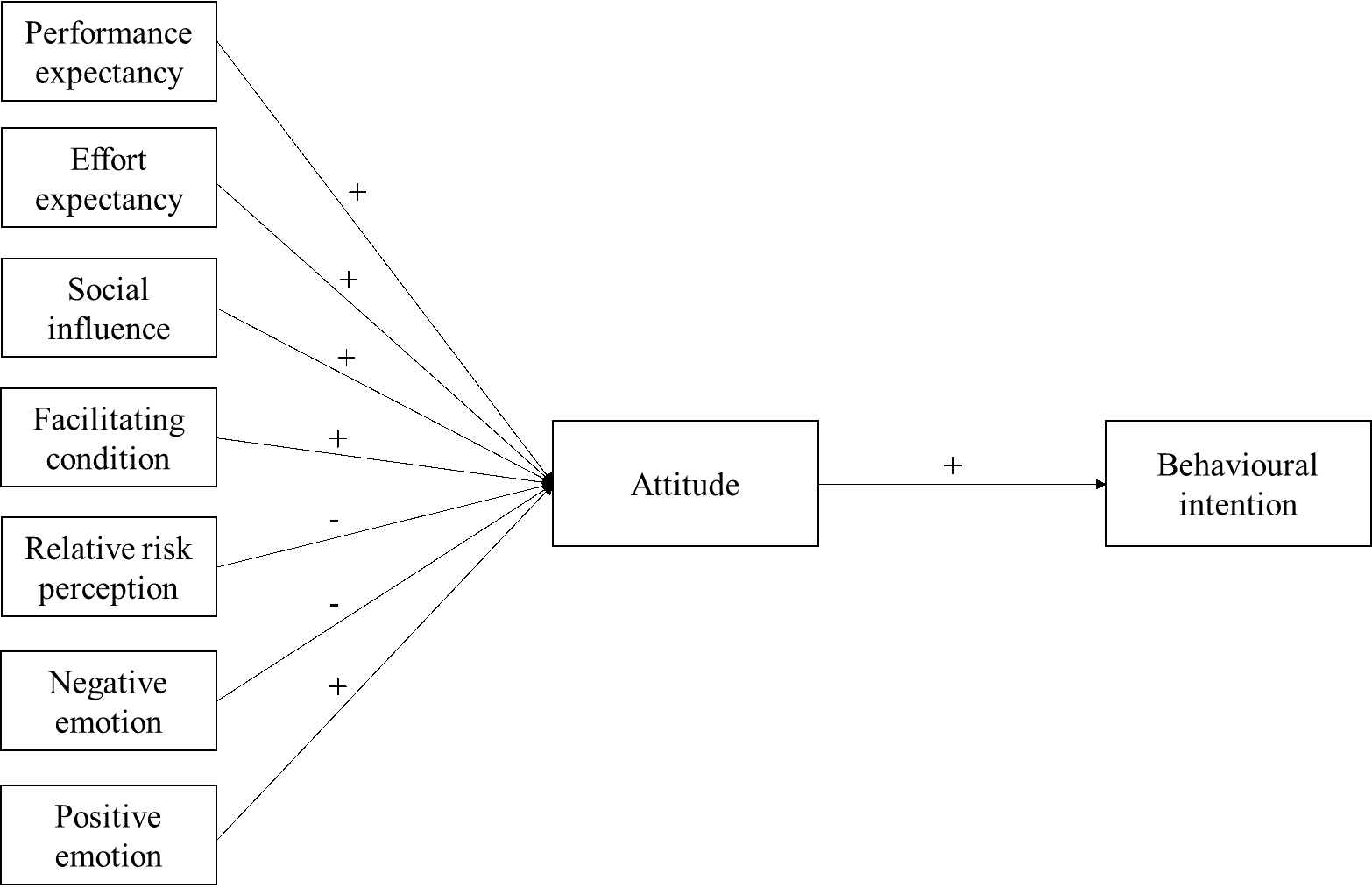

The unified theory of acceptance and use of technology (UTAUT) (hereafter, the Theory) offers a parsimonious framework that identifies factors influencing the use of a technology product (Venkatesh et al., 2003). The model integrates eight technology acceptance models, including the technology acceptance model, the theory of planned behaviour, and innovation diffusion theory (see Venkatesh et al., 2003, for details), and specifies key factors that predict attitude and behavioural intention. The original model primarily concerns the individual’s cognitive calculation, captured by performance expectancy, effort expectancy, social influence, and facilitating conditions, but more recent models have begun to incorporate emotional factors (e.g., Abikari et al., 2023; Sugandini et al., 2022). In our model, attitude toward the technology then mediates the effects of these factors on behavioural intention. Figure 1 illustrates our model.

Figure 1. Theoretical model

We employ this theory for three reasons. First, because it combines eight technology acceptance models, it is regarded as the most comprehensive model for predicting behavioural outcomes. Second, it has demonstrated strong performance in predicting who will adopt a technology (Khechine et al., 2016). Finally, the model is well known for its robustness, parsimony, and simplicity (Sharma et al., 2022; Venkatesh et al., 2012).

Performance expectancy refers to the degree to which a user believes that using a new technology will help them achieve gains (Venkatesh et al., 2003). A meta-analysis found that the effect of performance expectancy on behavioural intention is the largest of all relationships in the theory (Khechine et al., 2016). In the context of ChatGPT, people commonly perceive that ChatGPT provides a superior service compared with a general Google search (Hao, 2023). Whereas Google search presents a list of links to other web pages that may contain relevant information, ChatGPT directly provides a summarised answer to the user’s request. ChatGPT users therefore expect to increase their task productivity relative to general web searches, and such expected gains should be associated with positive attitudes toward ChatGPT. Hence, we propose the following hypothesis.

H1a. Performance expectancy positively predicts a favourable attitude toward ChatGPT.

Effort expectancy is defined as the degree to which a user perceives a new technology as easy to use. Users may be motivated to accept a new technology when it requires little effort to learn and use (Alalwan, 2020). The literature on this theory suggests that effort expectancy is an important predictor of positive attitudes toward a new technology. For example, U.S. taxpayers who perceived electronic tax filing systems as easy to use were more likely to use them (Carter et al., 2011). The effect of effort expectancy on behavioural intention is the second largest in this theory (Khechine et al., 2016). In the context of ChatGPT, when users think that ChatGPT is easy to use and reduces physical and mental effort, they are likely to have positive attitudes toward it. Thus, we propose the following hypothesis.

H1b. Effort expectancy positively predicts a favourable attitude toward ChatGPT.

Social influence refers to the impact that significant others (e.g., friends, family, peers, and managers) have on a user’s attitude. Previous research has demonstrated that users are likely to conform to their social group’s norms when it comes to decisions regarding the use of artificial intelligence devices (Gursoy et al., 2019; Lin et al., 2020). Additionally, research has highlighted that the role of social influence is particularly relevant when users are unfamiliar with the new technology (Jeon et al., 2018). Given that ChatGPT is a relatively new service, we propose the following hypothesis.

H1c: Social influence positively predicts a favourable attitude toward ChatGPT.

Facilitating conditions refers to the degree to which a user believes that resources and technical infrastructure are available to support use of the system (Venkatesh et al., 2003). Previous studies showed that facilitating conditions played a significant role in predicting behavioural intention to use mobile learning (Donaldson, 2011) and webinar technology (Lakhal et al., 2013). According to the theory (Venkatesh et al., 2003), those who believe that they have sufficient knowledge and resources for a new system are more likely to have a favourable attitude toward the technology. In the context of ChatGPT, although the general public has access to free versions of ChatGPT and to many experts’ opinions, individuals’ knowledge and resources vary. Thus, we propose the following hypothesis.

H1d: Facilitating conditions positively predicts a favourable attitude toward ChatGPT.

Relative risk vs. benefit perception

This theory does not fully consider important factors that predict attitude toward ChatGPT because it was not originally developed to explain behavioural intentions related to artificial intelligence technology. Venkatesh, who introduced this theory, has invited others to extend it by adding new contexts, endogenous theoretical mechanisms, and exogenous factors (Venkatesh et al., 2012). Research on emerging technologies, in particular, has called for incorporating risk-benefit perception into the existing theory (e.g., Martins et al., 2014), because users form their attitudes towards a novel technology by weighing the risks it can bring to society against its benefits. Indeed, people are less likely to adopt new technologies such as financial technologies (fintech) (Ali et al., 2021), e-commerce (Pavlou, 2003), and biometrics (Miltgen et al., 2013) when they perceive high risks.

When a new technology is introduced, people often focus on the potential associated risks. An analysis of 601,778 tweets about self-driving cars, for instance, revealed that individuals are more likely to discuss the risks than the benefits of the technology (Kohl et al., 2017). Social discourse on the dangers of artificial intelligence is plentiful, with topics such as privacy violations and social manipulation receiving much attention (Vimalkumar et al., 2021). A survey of public perceptions regarding the technology found that only 24% of respondents viewed the benefits as outweighing the risks, while the remainder were either negative toward artificial intelligence or perceived equal levels of risk and benefit (Bao et al., 2022).

People are cautious about using technology when uncertainty is high and risk perception is heightened (Jordan et al., 2018). Park and Jones-Jang (2022), for example, found that people's surveillance and security concerns about artificial intelligence had a detrimental effect on their evaluations of, and adoption intentions for, artificial-intelligence-based health devices. Knowledge of the technology may therefore be necessary for people to have the confidence to use it. When the perceived benefits outweigh the perceived risks, trust in the technology increases, resulting in a greater willingness to embrace it (Ali et al., 2021).

In the case of ChatGPT, relative risk perception may be high because the service had been publicly available for only a few months at the time of this study. Several issues have been highlighted about this technology. For example, some are concerned that it may propagate misinformation on an unprecedented scale (Brewster et al., 2023; Hsu, 2023). There is also considerable concern about serious invasions of privacy, as personal web postings can be used to generate text (Burgess, 2023). Furthermore, there are fears that it could cause serious educational problems by making plagiarism easy (Huang, 2023). Given the role of risk perception in shaping attitudes towards new technology, we expect those who perceive ChatGPT's risks as outweighing its benefits to hold less favourable attitudes towards it. Thus, we propose the following hypothesis.

H2: Relative risk perception negatively predicts a favourable attitude toward ChatGPT.

Emotion

New technologies have the potential to disrupt established ways of doing things, eliciting a range of emotional responses (Mick and Fournier, 1998). However, this theory and related models have, to date, focused primarily on the cognitive determinants of attitudes and behaviour towards technology (Bagozzi, 2007; Perlusz, 2004). Aside from a few notable exceptions (e.g., Beaudry and Pinsonneault, 2010; Lu et al., 2019; Venkatesh, 2000), theory and research on technology attitudes and acceptance have largely neglected the role of emotions. Because emotions are fundamental human experiences with far-reaching implications for attitudes and behaviour (Petty and Briñol, 2014), it is essential to consider the effects of different types of emotions in understanding attitudes toward technology.

Emotions refer to synchronized processes that stem from an appraisal of the environment with implications for one’s well-being or achievement of goals (Frijda, 1993). They involve interrelated changes in cognitive (e.g., appraisal), motivational (e.g., action tendencies), and neurophysiological (e.g., arousal) components when faced with both internal and external stimuli that are relevant to the self (Scherer, 2005; Moors et al., 2013).

Emotional reactions to technology can have a significant impact on attitudes beyond thoughts and beliefs. On the one hand, emotions can act as a simple cue for judgment when capacity or motivation to think is limited (Petty and Briñol, 2014). In these cases, emotions directly influence attitudes according to their valence: if a technology elicits more positive emotions (e.g., enthusiasm), it will be liked more; if it is associated with more negative emotions (e.g., anxiety), it will be liked less. On the other hand, emotions can also serve as evidence about the value of the attitude object, even when people are thinking more carefully (Schwarz, 2012). To the extent that people perceive their emotions about an object as relevant and based on legitimate appraisals, those emotions can significantly affect attitudes towards the emotion-inducing target.

Previous research has provided evidence of the impact of emotions in the context of technology attitudes and adoption. For instance, positive emotions such as excitement and happiness have been found to have direct or indirect effects on technology use (Beaudry and Pinsonneault, 2010). Additionally, induced positive emotions were seen to lead to more positive evaluations of interactive websites (Jin and Oh, 2022). Conversely, anxiety towards technological innovations (e.g., apprehension about using computers, fear about personal/social changes due to AI) has been shown to reduce usage intentions and produce more negative attitudes (Kaya et al., 2024; Venkatesh, 2000). Therefore, this study hypothesises that positive and negative emotions will have distinct effects on attitudes towards ChatGPT.

H3a: Positive emotion toward ChatGPT positively predicts a favourable attitude toward ChatGPT.

H3b: Negative emotion toward ChatGPT negatively predicts a favourable attitude toward ChatGPT.

Attitude predicts behavioural intention

While attitude does not always lead to behaviour, a considerable body of research has demonstrated that attitude is one of the most influential predictors of behaviour (Kaiser et al., 2010). For instance, numerous studies have established a positive correlation between individuals' attitudes toward a technology and their actual use of it (e.g., Kim et al., 2015). A meta-analysis of the theory of planned behaviour showed that the average correlation between attitude and behavioural intention was 0.47, which is considered a medium effect size (McEachan et al., 2011).

H4: Favourable attitudes toward ChatGPT positively predict ChatGPT use intention.

Testing the indirect effects

In addition to testing the direct effects, we also aim to examine the indirect effects, as not doing so may result in the under-representation of total effects (Raykov and Marcoulides, 2000). Consequently, combining the direct effect hypotheses presented above, we propose the following indirect hypotheses:

H5: Attitude toward ChatGPT mediates the relationship between each independent variable (H5a: performance expectancy, H5b: effort expectancy, H5c: social influence, H5d: facilitating conditions, H5e: relative risk perception, H5f: positive emotion, H5g: negative emotion) and ChatGPT use intention.
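Taken together, H1 to H5 describe a two-equation inner model. Written out in our own notation (the symbols β, γ, ζ, and IE are introduced here for illustration and do not appear in the original figures), the hypothesised paths and the indirect effects tested in H5 can be sketched as:

```latex
\begin{aligned}
\mathrm{ATT} &= \beta_1\,\mathrm{PE} + \beta_2\,\mathrm{EE} + \beta_3\,\mathrm{SI} + \beta_4\,\mathrm{FC}
              + \beta_5\,\mathrm{RRP} + \beta_6\,\mathrm{EMO}^{+} + \beta_7\,\mathrm{EMO}^{-} + \zeta_1 \\
\mathrm{BI}  &= \gamma\,\mathrm{ATT} + \zeta_2 \\
\mathrm{IE}_j &= \beta_j\,\gamma, \qquad j = 1, \dots, 7
\end{aligned}
```

Under this reading, H1a to H1d and H3a predict positive β1 to β4 and β6, H2 and H3b predict negative β5 and β7, H4 predicts γ > 0, and H5a to H5g test whether each product βjγ differs from zero. If direct paths from the predictors to BI were also estimated, each predictor's total effect would be its direct path plus βjγ.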

Methods

Data

Data were collected from an online panel administered by Dynata. To offset the non-representative nature of an online panel, the sample was selected to match U.S. adults on age, sex, education, income, and race/ethnicity. Although Dynata has a global reach, this study focused exclusively on the U.S. population, because perceptions of artificial intelligence vary across countries and we wanted to avoid blending distinct viewpoints from different regions. The online survey was fielded between February 20 and 27, 2023, and 2,103 panel members were invited to take part. In the end, 1,004 respondents completed the survey, yielding a response rate of 47.7% (AAPOR cooperation rate 1). Respondents who failed the attention check were excluded from the final sample and are not counted in the N. The sample was similar to the national population in terms of age, sex, education, income, and race/ethnicity. Demographic details are reported in Table 1.

| Demographics | Percentage |
|---|---|
| Age | |
| 18 to 24 | 18.7% |
| 25 to 34 | 21.4% |
| 35 to 44 | 19.7% |
| 45 to 54 | 20.5% |
| 55 to 64 | 5.1% |
| 65+ | 14.6% |
| Sex | |
| Male | 49.4% |
| Female | 50.6% |
| Ethnicity | |
| White | 72.2% |
| Black | 11.5% |
| Hispanic | 6.8% |
| Asian | 17.9% |
| Other | 2.2% |
| Education | |
| Less than high school graduate | 2.8% |
| High school graduate | 21.6% |
| Some college | 30.4% |
| Associate’s degree | 11.4% |
| Bachelor’s degree | 29.2% |
| Graduate degree | 14.5% |
| Income | |
| Under $10,000 | 7.1% |
| $10,000 to $29,999 | 15.3% |
| $30,000 to $49,999 | 18.7% |
| $50,000 to $69,999 | 17.6% |
| $70,000 to $99,999 | 18.0% |
| $100,000 to $144,999 | 12.3% |
| Over $150,000 | 11.0% |

Table 1. Demographics

Measures

Performance expectancy

Performance expectancy was measured with three items, “Using ChatGPT would be useful in my job,” “Using ChatGPT would enable me to accomplish tasks more quickly,” and “Using ChatGPT would increase my productivity.” (M = 3.27, SD = 1.07, Cronbach’s alpha = 0.88).

Effort expectancy

Effort expectancy was measured with three items, “My interaction with ChatGPT would be clear and understandable,” “It would be easy for me to become skillful at using ChatGPT,” and “ChatGPT seems easy to use.” (M = 3.51, SD = 0.89, Cronbach’s alpha = 0.83).

Social influence

Social influence was measured with two items, “People in my social networks (e.g., friends, family and co-workers) are using ChatGPT” and “People who are important to me think that I should use ChatGPT.” (M = 2.73, SD = 1.15, Cronbach’s alpha = 0.85).

Facilitating conditions

Facilitating conditions was measured with two items, “I have the resources necessary to use ChatGPT” and “I have the knowledge necessary to use ChatGPT.” (M = 3.36, SD = 1.04, Cronbach’s alpha = 0.82).

Attitude toward ChatGPT

Attitude towards ChatGPT was measured with four items. Two asked participants to indicate the extent to which they agreed with the statements "Using ChatGPT is a good idea" and "I like working with ChatGPT" (5-point scale from strongly disagree to strongly agree). The other two asked participants to rate ChatGPT's influence on society from undesirable to desirable and from negative to positive (four-item scale: M = 3.15, SD = 1.01, Cronbach’s alpha = 0.72).

Behavioural intention

Behavioural intention was measured with three items: “I intend to use ChatGPT in the future,” “Using ChatGPT is something I would do in the future,” and “I consider using ChatGPT even with a monthly fee of $20.” (M = 3.01, SD = 1.07, Cronbach’s alpha = 0.86).

Relative risk perception

We measured relative risk (vs. benefit) perception by asking respondents how they weighed ChatGPT’s risks against its benefits for a) society as a whole, b) themselves, and c) others (1 = Benefits far outweigh risks, 5 = Risks far outweigh benefits) (M = 3.10, SD = 0.98, Cronbach’s alpha = 0.88).

Emotion

Positive emotion towards ChatGPT was measured with a single item, enthusiasm (M = 3.12, SD = 1.21). Negative emotion towards ChatGPT was measured with three items: worry, concern, and anxiety (M = 3.27, SD = 0.97, Cronbach’s alpha = 0.86).
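The Cronbach's alpha values reported for each measure follow the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of the total score). A minimal Python sketch, assuming responses are stored as one list per item with respondents in the same order; the data below are made up for illustration and are not the study's responses:

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per item, same respondents in each."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs the same number of respondents")
    # Sum of the per-item variances.
    item_variance_sum = sum(pvariance(item) for item in items)
    # Each respondent's total score across the k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    return k / (k - 1) * (1 - item_variance_sum / pvariance(totals))


# Hypothetical 1-5 responses from six people to three items (not the study's data).
items = [
    [4, 5, 3, 2, 4, 3],
    [4, 4, 3, 2, 5, 3],
    [5, 5, 2, 1, 4, 3],
]
print(round(cronbach_alpha(items), 2))
```

Population variances are used throughout; because the same variance definition appears in the numerator and denominator, using sample variances instead gives the same alpha.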

Analysis

We used partial least squares–structural equation modelling (PLS-SEM) with SmartPLS4 to estimate the direct and indirect effects, along with the confidence intervals, t-values, and p-values of the path coefficients. PLS-SEM estimates the relationships among all variables of interest simultaneously within a structural model (Henseler and Chin, 2010) and has gained considerable prominence in information studies and related fields (Lee et al., 2023; Soroya et al., 2021). Bootstrap estimates were based on 5,000 bootstrap samples, following Hair’s (2010) suggestion.
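The bootstrap logic behind the bias-corrected confidence intervals for indirect effects can be illustrated with a simplified sketch. It is not SmartPLS's implementation: it assumes one observed score per construct, a single predictor, and ordinary least-squares paths instead of PLS latent-variable scores, and it runs on simulated data. For each resample of respondents, the indirect effect a × b is re-estimated, and a bias-corrected interval is read off the sorted bootstrap distribution.

```python
import random
from statistics import NormalDist, fmean


def indirect_effect(x, m, y):
    """Return a*b for X -> M -> Y, with a from M ~ X and b from Y ~ X + M (OLS)."""
    mx, mm, my = fmean(x), fmean(m), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    a = sxm / sxx
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)  # slope of M, controlling for X
    return a * b


def bc_bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=1):
    """Point estimate and bias-corrected bootstrap CI for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimate = indirect_effect(x, m, y)
    boots = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents with replacement
        try:
            boots.append(indirect_effect([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]))
        except ZeroDivisionError:  # degenerate resample with no variance; skip it
            continue
    boots.sort()
    n_ok = len(boots)
    std_normal = NormalDist()
    # Bias-correction constant z0: how far the bootstrap distribution sits from the estimate.
    p_below = sum(v < estimate for v in boots) / n_ok
    p_below = min(max(p_below, 1 / n_ok), 1 - 1 / n_ok)  # keep inv_cdf finite
    z0 = std_normal.inv_cdf(p_below)
    tail = (1 - level) / 2
    lo_p = std_normal.cdf(2 * z0 + std_normal.inv_cdf(tail))
    hi_p = std_normal.cdf(2 * z0 + std_normal.inv_cdf(1 - tail))
    return estimate, boots[min(int(lo_p * n_ok), n_ok - 1)], boots[min(int(hi_p * n_ok), n_ok - 1)]


if __name__ == "__main__":
    # Simulated data (not the study's): true a = 0.5 and b = 0.4, so a*b = 0.2.
    rng = random.Random(42)
    x = [rng.gauss(0, 1) for _ in range(200)]
    m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
    y = [0.4 * mi + 0.1 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
    est, lo, hi = bc_bootstrap_ci(x, m, y, n_boot=2000)
    print(f"indirect effect {est:.3f}, 95% BC CI [{lo:.3f}, {hi:.3f}]")
```

The default of 5,000 resamples mirrors the number reported above; the demo uses fewer only to keep the run short. An interval that excludes zero is the usual criterion for a significant indirect effect.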

Results

Model evaluation in partial least squares–structural equation modelling consists of two stages; the first stage involves an assessment of the measurement model, and the second is an assessment of the structural model.

Measurement model

The measurement model assesses the validity and reliability of the instrument (see Table 2). More specifically, it evaluates reliability, convergent validity, and discriminant validity (Hair et al., 2017). Internal consistency reliability was established through composite reliability (CR): all values exceeded 0.7, indicating that all constructs were reliable. Indicator reliability was assessed through indicator loadings, all of which were above 0.7 except for two attitude items (ATT3 = 0.55; ATT4 = 0.56). All constructs' average variance extracted (AVE) values were above 0.5 (see Table 2), indicating that each construct explains more than half of the variance of its indicators and thus demonstrating sufficient convergent validity. Lastly, to assess discriminant validity, we adopted the Fornell–Larcker (1981) criterion. The square root of each construct's AVE was greater than its correlations with the other latent variables (see Table 3), supporting discriminant validity. Overall, these values indicated that the measurement model was adequate.

| Latent construct | Indicator | Indicator loading | Cronbach’s α | CR | AVE |
|---|---|---|---|---|---|
| Performance expectancy | PE1 | 0.87 | 0.88 | 0.92 | 0.80 |
| | PE2 | 0.91 | | | |
| | PE3 | 0.91 | | | |
| Effort expectancy | EE1 | 0.86 | 0.83 | 0.90 | 0.75 |
| | EE2 | 0.89 | | | |
| | EE3 | 0.85 | | | |
| Social influence | SI1 | 0.93 | 0.85 | 0.93 | 0.87 |
| | SI2 | 0.94 | | | |
| Facilitating condition | FC1 | 0.92 | 0.82 | 0.92 | 0.85 |
| | FC2 | 0.92 | | | |
| Relative risk perception | RRP1 | 0.91 | 0.88 | 0.92 | 0.80 |
| | RRP2 | 0.90 | | | |
| | RRP3 | 0.89 | | | |
| Negative emotion | Worried | 0.93 | 0.86 | 0.91 | 0.78 |
| | Anxious | 0.80 | | | |
| | Concerned | 0.91 | | | |
| Attitude | ATT1 | 0.87 | 0.72 | 0.81 | 0.53 |
| | ATT2 | 0.86 | | | |
| | ATT3 | 0.55 | | | |
| | ATT4 | 0.56 | | | |
| Behavioural intention | BI1 | 0.93 | 0.86 | 0.91 | 0.78 |
| | BI2 | 0.92 | | | |
| | BI3 | 0.79 | | | |

Table 2. Construct validity and reliability
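The CR and AVE columns of Table 2 can be recomputed from the standardized indicator loadings, assuming the usual formulas with uncorrelated indicator errors: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k. A short sketch using three constructs' loadings from the table:

```python
def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized outer loadings."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # error variance of each indicator
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


# Loadings copied from Table 2.
table2_loadings = {
    "Performance expectancy": [0.87, 0.91, 0.91],
    "Attitude": [0.87, 0.86, 0.55, 0.56],
    "Behavioural intention": [0.93, 0.92, 0.79],
}

for construct, lam in table2_loadings.items():
    cr = composite_reliability(lam)
    ave = average_variance_extracted(lam)
    print(f"{construct}: CR = {cr:.2f}, AVE = {ave:.2f}")
```

For these three constructs the sketch reproduces the reported values to two decimals (0.92/0.80, 0.81/0.53, and 0.91/0.78). The attitude row shows how two weaker loadings pull the AVE close to the 0.5 threshold even though CR stays above 0.7.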

| | PE | EE | SI | FC | RRP | EMO(N) | ATT | BI |
|---|---|---|---|---|---|---|---|---|
| Performance expectancy | 0.90 | | | | | | | |
| Effort expectancy | 0.70 | 0.87 | | | | | | |
| Social influence | 0.62 | 0.57 | 0.93 | | | | | |
| Facilitating condition | 0.70 | 0.68 | 0.56 | 0.92 | | | | |
| Relative risk perception | -0.38 | -0.44 | -0.38 | -0.38 | 0.90 | | | |
| Negative emotion | -0.11 | -0.12 | -0.06 | -0.09 | 0.16 | 0.88 | | |
| Attitude | 0.72 | 0.73 | 0.69 | 0.67 | -0.49 | -0.26 | 0.93 | |
| Behavioural intention | 0.76 | 0.69 | 0.69 | 0.63 | 0.48 | -0.13 | 0.83 | 0.88 |

Table 3. Fornell-Larcker criterion for discriminant validity
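Under the Fornell–Larcker criterion, the diagonal of Table 3 holds the square root of each construct's AVE, and each of these must exceed the absolute correlations in the same row and column. A minimal checker, illustrated with three constructs whose AVEs come from Table 2 and whose correlations come from Table 3 (chosen only for brevity):

```python
from math import sqrt


def fornell_larcker(ave, corr):
    """Map each construct to True if sqrt(AVE) exceeds all its |correlations| with other constructs."""
    verdict = {}
    for construct, value in ave.items():
        root = sqrt(value)
        # Every correlation that involves this construct, regardless of pair order.
        related = [abs(r) for pair, r in corr.items() if construct in pair]
        verdict[construct] = all(root > r for r in related)
    return verdict


# AVEs from Table 2 and inter-construct correlations from Table 3 (three constructs only).
ave = {"PE": 0.80, "EE": 0.75, "SI": 0.87}
corr = {("PE", "EE"): 0.70, ("PE", "SI"): 0.62, ("EE", "SI"): 0.57}
print(fornell_larcker(ave, corr))  # {'PE': True, 'EE': True, 'SI': True}
```

Absolute values are used so that negatively correlated constructs, such as relative risk perception, are checked on the same footing.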

Structural model

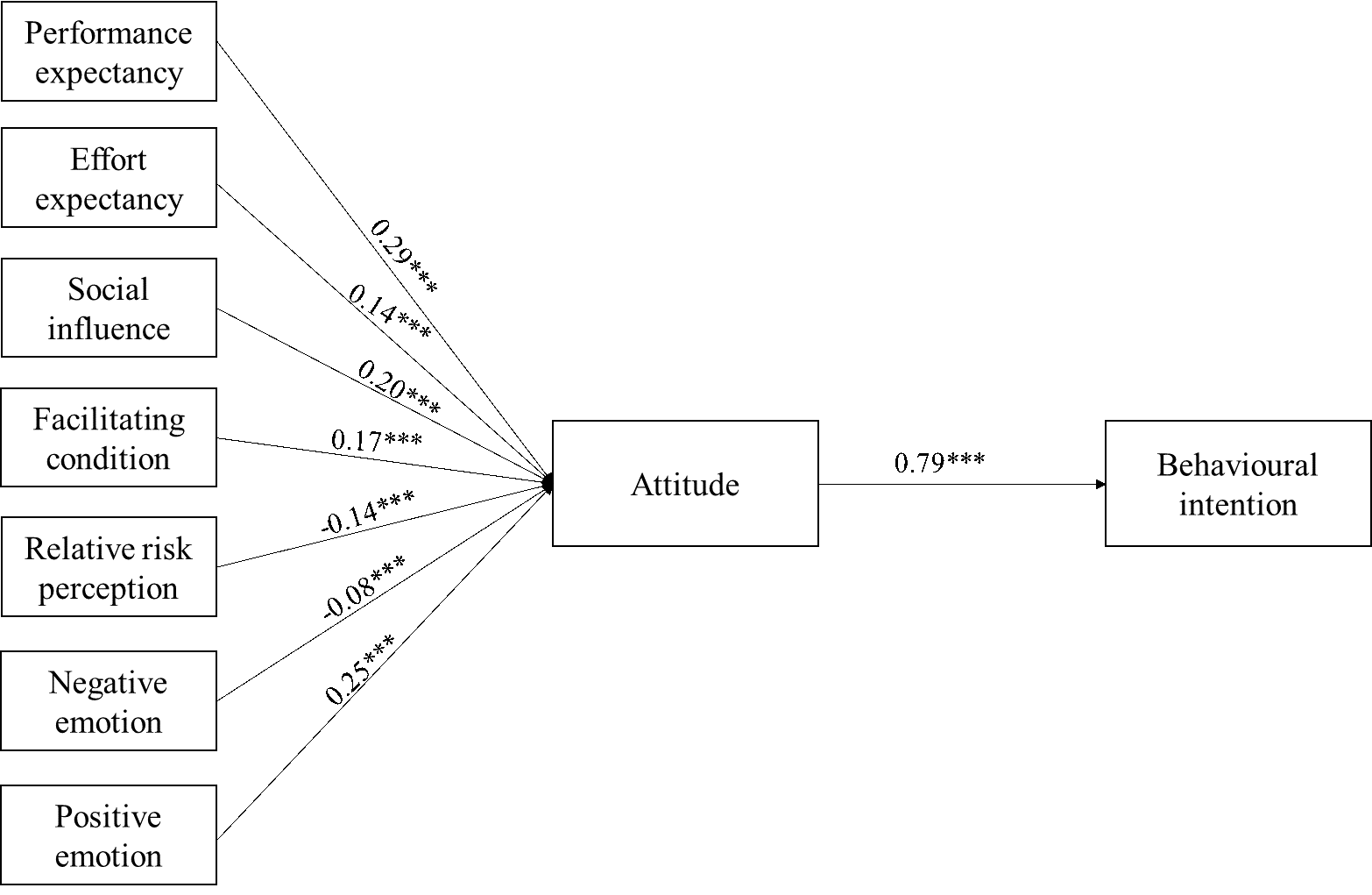

Having met all the assessment conditions for the measurement model, we assessed the structural model using the partial least squares bootstrapping procedure. The structural model assessment determines whether the hypothesised structural relations are meaningful and can explain the observed phenomena. R² was used to evaluate the model's explanatory power, and the Stone-Geisser Q² was used to assess the predictive relevance of the inner model (see Table 4). First, the R² values of both endogenous constructs were above 0.60, indicating high explanatory power. In addition, the Stone-Geisser Q² values from the blindfolding procedure indicate strong predictive power, as all endogenous constructs had cross-validated redundancy Q² values above 0.15 (see Hair et al., 2017, for details on interpretation). As the fit of the model was satisfactory, the individual path coefficient estimates were then examined to test the hypotheses. As predicted, all the hypotheses were supported (for the direct hypotheses, see Figure 2; for the indirect hypotheses, see Table 5).

| Endogenous construct | R² | Q² predict |
|---|---|---|
| Attitude toward ChatGPT | 0.75 | 0.74 |
| Behavioural intention | 0.62 | 0.64 |

Table 4. Structural model assessment
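Table 4 places R² beside a Q² predict column. In PLSpredict-style evaluation, Q²predict compares out-of-sample prediction errors with those of a naive benchmark that predicts each held-out case from the training-sample mean, so values above zero mean the model beats that benchmark. The sketch below is a simplified stand-in, not the SmartPLS procedure: it uses a one-predictor OLS model, k-fold cross-validation, and simulated data, and the fold count is an assumption.

```python
import random
from statistics import fmean


def ols_fit(x, y):
    """Intercept and slope of a one-predictor least-squares line."""
    mx, my = fmean(x), fmean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


def r_squared(x, y):
    """In-sample share of variance in y explained by the fitted line."""
    intercept, slope = ols_fit(x, y)
    my = fmean(y)
    sse = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    sst = sum((b - my) ** 2 for b in y)
    return 1 - sse / sst


def q2_predict(x, y, k=10, seed=0):
    """k-fold Q2_predict: 1 - SSE(model) / SSE(training-mean benchmark) on held-out cases."""
    order = list(range(len(x)))
    random.Random(seed).shuffle(order)
    sse_model = sse_naive = 0.0
    for f in range(k):
        held = order[f::k]
        held_set = set(held)
        train = [i for i in order if i not in held_set]
        intercept, slope = ols_fit([x[i] for i in train], [y[i] for i in train])
        benchmark = fmean([y[i] for i in train])  # naive prediction: training-sample mean
        for i in held:
            sse_model += (y[i] - (intercept + slope * x[i])) ** 2
            sse_naive += (y[i] - benchmark) ** 2
    return 1 - sse_model / sse_naive


if __name__ == "__main__":
    # Simulated data (not the study's).
    rng = random.Random(3)
    x = [rng.gauss(0, 1) for _ in range(300)]
    y = [0.8 * xi + rng.gauss(0, 0.5) for xi in x]
    print(f"R2 = {r_squared(x, y):.2f}, Q2_predict = {q2_predict(x, y):.2f}")
```

Because the benchmark is re-estimated on each training fold, a predictor unrelated to the outcome typically yields a Q²predict at or slightly below zero.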

| Indirect effect path | Point estimate | Bias-corrected bootstrap 95% CI |
|---|---|---|
| Performance expectancy → Attitude → Behavioural intention | 0.23 | 0.18 to 0.28 |
| Effort expectancy → Attitude → Behavioural intention | 0.11 | 0.06 to 0.16 |
| Social influence → Attitude → Behavioural intention | 0.16 | 0.12 to 0.20 |
| Facilitating condition → Attitude → Behavioural intention | 0.13 | 0.09 to 0.18 |
| Relative risk perception → Attitude → Behavioural intention | -0.11 | -0.14 to -0.08 |
| Positive emotion → Attitude → Behavioural intention | 0.20 | 0.16 to 0.24 |
| Negative emotion → Attitude → Behavioural intention | -0.07 | -0.09 to -0.04 |

Table 5. Indirect effects of IVs on behavioural intention through attitude

Figure 2. Results of the partial least square structural equation model

Additional analyses

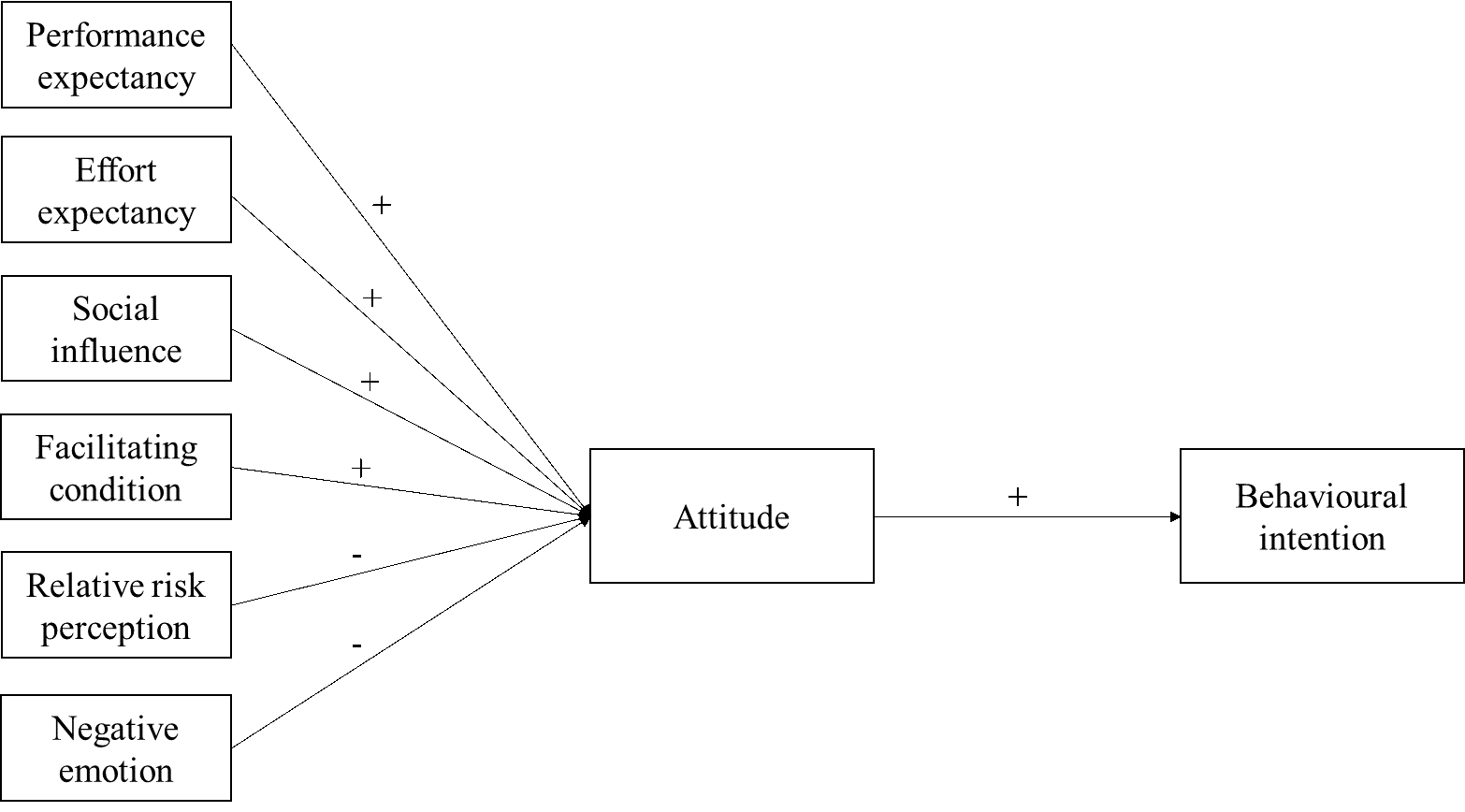

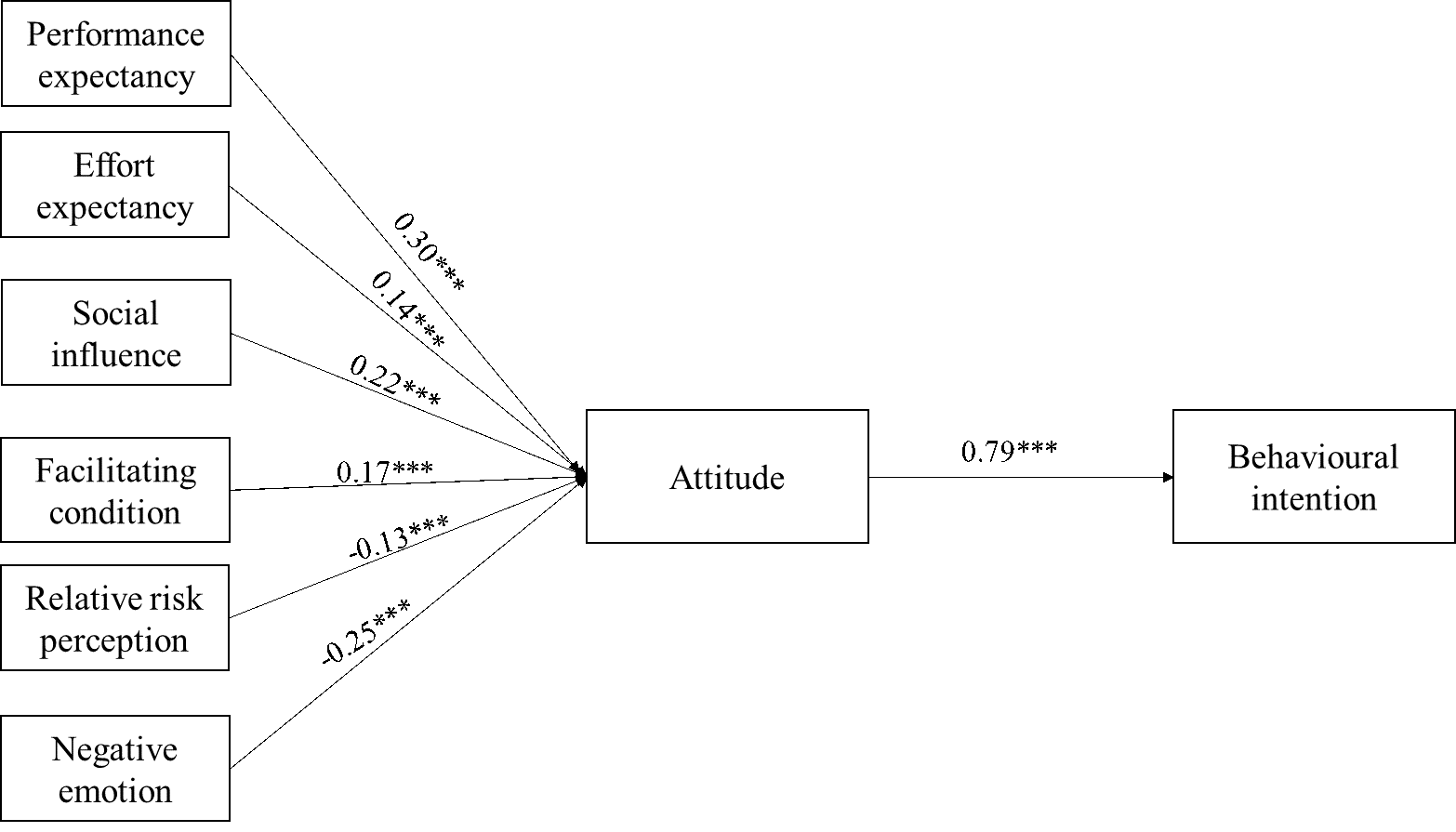

Because positive emotion was measured with a single item (and thus cannot serve as a multi-item latent variable), we also created a composite emotion variable consisting of the three negative emotion items and a reverse-coded enthusiasm item. This four-item emotion latent variable was then entered into the model in place of the separate single-item positive emotion and three-item negative emotion variables (see Figure 3). As with the original model, fit for both the measurement and structural models was satisfactory, and all the hypotheses were supported. The path coefficients are presented in Figure 4.
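Reverse-coding the enthusiasm item before combining it with the three negative emotion items can be sketched as follows. The sketch assumes all four items share a 1 to 5 response scale (the scale range for the emotion items is not stated above) and that higher composite scores indicate more negative emotion. It uses an unweighted mean for illustration; in the PLS model the indicators enter the latent variable with estimated weights. The function names and respondent values are hypothetical.

```python
def reverse_code(score: int, low: int = 1, high: int = 5) -> int:
    """Flip a Likert score so that low becomes high (e.g., 5 -> 1 on a 1-5 scale)."""
    if not low <= score <= high:
        raise ValueError(f"score {score} outside {low}-{high}")
    return low + high - score


def emotion_composite(worry: int, concern: int, anxiety: int, enthusiasm: int) -> float:
    """Unweighted mean of three negative-emotion items and the reverse-coded enthusiasm item."""
    items = [worry, concern, anxiety, reverse_code(enthusiasm)]
    return sum(items) / len(items)


# Hypothetical respondent: fairly worried, not very enthusiastic.
print(emotion_composite(worry=4, concern=4, anxiety=3, enthusiasm=2))  # 3.75
```

Reverse-coding aligns the direction of all four indicators, so that low enthusiasm and high worry both raise the composite score.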

Additional analyses, including multi-group analyses (MGA) and moderation analyses, were conducted to check the robustness of our model. First, we ran MGA to examine whether demographic subgroups differed in group-specific parameter estimates, such as path coefficients, outer weights, and outer loadings, in ways that could not be identified by examining the entire sample. Second, we ran moderation analyses to investigate whether demographic factors moderated the relationships between the independent variables and the mediator. We found no differences across demographic characteristics in any of these analyses.
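One common way to test whether a path coefficient differs between two demographic groups in multi-group analysis is a permutation test: group labels are shuffled many times, and the observed between-group difference is compared with the differences obtained under random assignment. The sketch below applies this idea to a one-predictor OLS slope on simulated data; the group split, the number of permutations, and the use of OLS rather than PLS path estimates are all assumptions for illustration.

```python
import random
from statistics import fmean


def slope(x, y):
    """OLS slope of y on x (a stand-in for a path coefficient)."""
    mx, my = fmean(x), fmean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)


def group_difference(x, y, labels):
    """Slope in group 0 minus slope in group 1."""
    g0 = [i for i, g in enumerate(labels) if g == 0]
    g1 = [i for i, g in enumerate(labels) if g == 1]
    return slope([x[i] for i in g0], [y[i] for i in g0]) - slope([x[i] for i in g1], [y[i] for i in g1])


def permutation_test(x, y, group, n_perm=2000, seed=0):
    """Observed slope difference and two-sided permutation p-value."""
    observed = group_difference(x, y, group)
    labels = list(group)  # copy, so the caller's labels are not shuffled
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(labels)
        if abs(group_difference(x, y, labels)) >= abs(observed):
            extreme += 1
    return observed, (extreme + 1) / (n_perm + 1)  # add-one so p is never exactly 0


if __name__ == "__main__":
    rng = random.Random(5)
    group = [0] * 150 + [1] * 150  # hypothetical split, e.g. two age bands
    x = [rng.gauss(0, 1) for _ in group]
    y = [0.5 * xi + rng.gauss(0, 1) for xi in x]  # same true slope in both groups
    diff, p = permutation_test(x, y, group)
    print(f"slope difference {diff:.3f}, permutation p = {p:.3f}")
```

A large p-value, as expected for the simulated data above, is consistent with no group difference in the path.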

Figure 3. Alternative theoretical path model (emotion combined into the single dimension)

Figure 4. Results of the alternative path model

Discussion

While there has been much hype surrounding ChatGPT, empirical research on how the general public perceives and adopts this transformative technology is scarce. To investigate what factors influence public views and acceptance of ChatGPT, we employed the unified theory of acceptance and use of technology model while incorporating relative risk perception and emotional factors into the original model. A national online survey of U.S. adults, matched to the population on key demographics, found that, in addition to convenience perceptions (i.e., performance expectancy, effort expectancy, social influence, and facilitating conditions), relative risk perception and emotional factors significantly predict favourable attitudes and behavioural intentions towards ChatGPT.

This study provides a novel theoretical explanation for public acceptance of new technology by extending the original theory to reflect the public’s complex cognitive and emotional assessment of the technology. The original theory primarily focuses on perceived convenience in using a specific technology, such as whether the technology works as expected, how much effort is needed to learn it, and whether the necessary resources are available.

However, the current discourse regarding ChatGPT is distinctive: it concerns not only the potential for this emerging technology to improve convenience but also how it could fundamentally alter the principles and norms of existing structures such as business, education, and the economy. The potential benefits of these changes, in terms of increased efficiency and productivity, may be unprecedented. Yet the potential risks are equally significant, and there is growing global concern about the irreversible harms they could cause.

Our findings indicate that recognising such multifaceted nature of ChatGPT is crucial in facilitating the ethical and responsible adoption of this emerging technology. First of all, the results showed strong support for the original theory; the four factors in the theory (i.e., performance expectancy, effort expectancy, social influence, and facilitating conditions) significantly predicted favourable attitudes toward ChatGPT (H1), which eventually led to behavioural intention (H5). Notably, in line with a meta- analysis of this theory (Khechine et al., 2016), performance expectancy appears to be the strongest predictor in the model (See Figure 2). This indicates that technological convenience or efficiency is still a critical factor for users to decide whether to use ChatGPT. On the other hand, the role of effort expectancy was relatively small compared to other factors, suggesting that people do not worry too much about whether they can learn how to use it easily. Secondly, as predicted, those who believe that risks of ChatGPT outweigh benefits expressed less willingness to use the technology. This observation reinforces the notion that the adoption of technology should be evaluated within a wider social framework, taking into account not only convenience but also people's evaluations of the risks and benefits associated with it. Such insights are invaluable in understanding how people perceive and adopt new technologies, particularly technologies that are expected to have a substantial impact on society. Third, our research demonstrated that emotional responses towards ChatGPT significantly predict attitudes and, consequently, intentions to use the technology in the future. This finding underscores the importance of taking into account emotional responses in addition to cognitive factors when understanding technology adoption, as emotions may account for additional variance in predicting user behaviour. 
Of particular note is the role of positive emotions in fostering more favourable attitudes towards technology, as previous studies have mainly focused on negative emotions such as anxiety (Kaya et al., 2024; Venkatesh, 2000). Emotions may arise from a rapid and unconscious evaluation of an object or situation (Frijda, 1993), and the significant influence of these initial emotional reactions on technology attitudes and adoption calls for a closer examination of how the role of emotions may change as the technology develops (see Bagozzi, 2007). Further theoretical research is needed to assess more precisely how emotions shape technology adoption and use.

The current study emphasises the importance of considering societal implications when introducing new technologies to the public. It highlights the need for experts, including scientists and the broader scientific community, to go beyond promoting the technology's benefits and conveniences and to engage actively in dialogue with the public about its potential impacts on society (Lee et al., 2020). Such dialogue could address concerns about privacy, security, equity, and ethics that may arise from the use of the technology. Experts must also acknowledge and address any potential negative consequences and work to mitigate them in advance. By engaging the public in this way, experts can build trust and transparency and foster a greater sense of ownership of, and involvement in, decisions about how the technology is used. Ultimately, this can lead to more responsible and sustainable deployment of new technologies in line with societal values and priorities. Additionally, although the heated debate over the ethical implications of ChatGPT has prompted active discussion about how to regulate it (Psaila, 2023), such discussions often overlook a critical question: what do users and nonusers think about ChatGPT? Given that policymakers are known to incorporate the perceptions of the general public, as well as the views of the scientific community, into their decisions on controversial issues (Page and Shapiro, 1983), this study offers insights that can inform policy decisions regarding ChatGPT.

These theoretical and practical implications should be interpreted in light of several limitations. First, because the technology is still developing, people vary in their familiarity with it. Consequently, people's perceptions of and emotions toward ChatGPT could shift once they become more familiar with it and use it actively. Future studies may therefore replicate or extend this research once ChatGPT becomes more widely familiar. Second, the cross-sectional nature of the data prevents us from establishing causal relationships among the key variables, although this is typical of exploratory research such as this study. To better assess causality, future research should use a panel design once familiarity with the technology increases. Finally, survey research is limited in its ability to provide a thorough understanding of a phenomenon. For example, while our study established that positive and negative emotions are linked to attitudes toward ChatGPT, the underlying mechanisms remain unknown. Additionally, people's emotions and relative risk perceptions may be complex in ways that survey answers cannot fully reveal. To capture these nuances, future research could conduct individual or focus group interviews.

Undoubtedly, generative artificial intelligence, exemplified by ChatGPT, Google Bard, and similar innovations, will exert a substantial influence on our society. However, it remains unclear who will adopt and use these technologies. For example, some people may prioritise convenience and efficiency over privacy concerns, while others may be more cautious about the potential side effects of new technologies. With this in mind, this study aimed to investigate which factors, beyond the convenience of using the technology, predict its adoption. By understanding what motivates people to use the technology, we can develop and use ChatGPT in ways that benefit society and are well received by the public.

About the authors

Sangwon Lee (Ph.D., University of Wisconsin-Madison) is an assistant professor in the School of Media & Communication at Korea University. His research examines how new media technologies (e.g., social media, AI, etc.) impact our daily lives and society as a whole. Email: leesangwon@korea.ac.kr

S Mo Jones-Jang (Ph.D., University of Michigan) is an associate professor in the Department of Communication, Boston College, USA. His research focuses on misinformation and public perceptions of artificial intelligence. Email: s.mo.jang@bc.edu

Myojung Chung (Ph.D., Syracuse University) is an assistant professor in the School of Journalism, Northeastern University, Boston, USA. Her research focuses on how people process misinformation and how to counter the spread of misinformation. Email: m.chung@northeastern.edu

Nuri Kim (Ph.D., Stanford University) is an associate professor at the Wee Kim Wee School of Communication and Information at Nanyang Technological University, Singapore. Her research focuses on issues of diversity and difference in mediated environments. Email: nuri.kim@ntu.edu.sg

Jihyang Choi (Ph.D., Indiana University) is an associate professor at Ewha Womans University, Seoul, Korea. Her research looks into how the changing media environment influences people’s news processing, political attitudes, and behaviour. Email: choi20@ewha.ac.kr

References

Abikari, M., Öhman, P., & Yazdanfar, D. (2023). Negative emotions and consumer behavioural intention to adopt emerging e-banking technology. Journal of Financial Services Marketing, 28(4), 691-704. https://doi.org/10.1057/s41264-022-00172-x

Ali, M., Raza, S. A., Khamis, B., Puah, C. H., & Amin, H. (2021). How perceived risk, benefit and trust determine user Fintech adoption: a new dimension for Islamic finance. Foresight, 23(4), 403-420. https://doi.org/10.1108/FS-09-2020-0095

Bagozzi, R. P. (2007). The legacy of the technology acceptance model and a proposal for a paradigm shift. Journal of the Association for Information Systems, 8(4), 244-254. https://doi.org/10.17705/1jais.00122

Bao, L., Krause, N. M., Calice, M. N., Scheufele, D. A., Wirz, C. D., Brossard, D., Newman, T. P., & Xenos, M. A. (2022). Whose AI? How different publics think about AI and its social impacts. Computers in Human Behavior, 130, article 107182. https://doi.org/10.1016/j.chb.2022.107182

Beaudry, A., & Pinsonneault, A. (2010). The other side of acceptance: studying the direct and indirect effects of emotions on information technology use. MIS Quarterly, 34(4), 689–710. https://doi.org/10.2307/25750701

Brewster, J., Arvanitis, L., & Sadeghi, M. (2023). The next great misinformation superspreader: How ChatGPT could spread toxic misinformation at unprecedented scale. NewsGuard. https://www.newsguardtech.com/misinformation-monitor/jan-2023/

Burgess, M. (2023, April 4). ChatGPT has a big privacy problem. Wired. https://www.wired.com/story/italy-ban-chatgpt-privacy-gdpr/ (Internet Archive)

Carter, L., Schaupp, L. C., Hobbs, J., & Campbell, R. (2011). The role of security and trust in the adoption of online tax filing. Transforming Government: People, Process and Policy, 5(4), 303–318. https://doi.org/10.1108/17506161111173568

Chandra, Y. (2023, March 2). Move over Google? ChatGPT and its like will change how we search online. South China Morning Post. https://www.scmp.com/comment/opinion/article/3211809/move-over-google-chatgpt-and-its-will-change-how-we-search-online (Internet Archive)

Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: a comparison of two theoretical models. Management Science, 35(8), 982-1003. https://doi.org/10.1287/mnsc.35.8.982

Donaldson, R. L. (2011). Student acceptance of mobile learning. UMI. (Florida State University PhD Dissertation)

Fornell, C., & Larcker, D. F. (1981). Structural equation models with unobservable variables and measurement error: algebra and statistics. Journal of Marketing Research, 18(3), 382-388. https://doi.org/10.2307/3150980

Frijda, N. H. (1993). The place of appraisal in emotion. Cognition & Emotion, 7(3-4), 357-387. https://doi.org/10.1080/02699939308409193

Goldstein, J. A., Sastry, G., Musser, M., DiResta, R., Gentzel, M., & Sedova, K. (2023). Generative language models and automated influence operations: emerging threats and potential mitigations. arXiv preprint arXiv:2301.04246. https://doi.org/10.48550/arXiv.2301.04246

Gupta, N., Fischer, A. R., & Frewer, L. J. (2012). Socio-psychological determinants of public acceptance of technologies: A review. Public Understanding of Science, 21(7), 782-795. https://doi.org/10.1177/0963662510392485

Gursoy, D., Chi, O. H., Lu, L., & Nunkoo, R. (2019). Consumers acceptance of artificially intelligent (AI) device use in service delivery. International Journal of Information Management, 49, 157-169. https://doi.org/10.1016/j.ijinfomgt.2019.03.008

Hair Jr, J. F., Matthews, L. M., Matthews, R. L., & Sarstedt, M. (2017). PLS-SEM or CB-SEM: updated guidelines on which method to use. International Journal of Multivariate Data Analysis, 1(2), 107-123. https://doi.org/10.1504/IJMDA.2017.087624

Hao, K. (2023, April 11). What is ChatGPT? What to know about the AI chatbot. Wall Street Journal. https://www.wsj.com/articles/chatgpt-ai-chatbot-app-explained-11675865177 (Internet Archive)

Hsu, T., & Thompson, S. A. (2023, February 8). Disinformation researchers raise alarms about A.I. chatbot. New York Times. https://www.nytimes.com/2023/02/08/technology/ai-chatbots-disinformation.html (Internet Archive)

Huang, K. (2023, January 16). Alarmed by AI chatbots, universities start revamping how they teach. New York Times. https://www.nytimes.com/2023/01/16/technology/chatgpt-artificial-intelligence-universities.html (Internet Archive)

Jeon, M. M., Lee, S., & Jeong, M. (2018). e-Social influence and customers’ behavioural intentions on a bed and breakfast website. Journal of Hospitality Marketing & Management, 27(3), 366-385. https://doi.org/10.1080/19368623.2017.1367346

Jin, E., & Oh, J. (2022). The role of emotion in interactivity effects: positive emotion enhances attitudes, negative emotion helps information processing. Behaviour & Information Technology, 41(16), 3487-3505. https://doi.org/10.1080/0144929X.2021.2000028

Jordan, G., Leskovar, R., & Marič, M. (2018). Impact of fear of identity theft and perceived risk on online purchase intention. Organizacija, 51(2), 146-155. https://doi.org/10.2478/orga-2018-0007

Juma, C. (2016). Innovation and its enemies: why people resist new technologies. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780190467036.001.0001

Kaiser, F. G., Byrka, K., & Hartig, T. (2010). Reviving Campbell’s paradigm for attitude research. Personality and Social Psychology Review, 14(4), 351-367. https://doi.org/10.1177/1088868310366452

Kaya, F., Aydin, F., Schepman, A., Rodway, P., Yetişensoy, O., & Demir Kaya, M. (2024). The roles of personality traits, AI anxiety, and demographic factors in attitudes toward artificial intelligence. International Journal of Human–Computer Interaction, 40(2), 497-514. https://doi.org/10.1080/10447318.2022.2151730

Kelly, S., Kaye, S. A., & Oviedo-Trespalacios, O. (2022). What factors contribute to acceptance of artificial intelligence? A systematic review. Telematics and Informatics, 101925. https://doi.org/10.1016/j.tele.2022.101925

Kim, S., Lee, K. H., Hwang, H., & Yoo, S. (2015). Analysis of the factors influencing healthcare professionals’ adoption of mobile electronic medical record (EMR) using the unified theory of acceptance and use of technology in a tertiary hospital. BMC Medical Informatics and Decision Making, 16(1), 1-12. https://doi.org/10.1186/s12911-016-0249-8

Kohl, C., Mostafa, D., Böhm, M., & Krcmar, H. (2017). Disruption of individual mobility ahead? A longitudinal study of risk and benefit perceptions of self-driving cars on twitter. [Paper presentation]. International Conference on Wirtschaftsinformatik, St. Gallen, Switzerland.

Lakhal, S., Khechine, H., & Pascot, D. (2013). Student behavioural intentions to use desktop video conferencing in a distance course: integration of autonomy to the unified theory of acceptance and use of technology model. Journal of Computing in Higher Education, 25(2), 93–121. https://doi.org/10.1007/s12528-013-9069-3

Lee, S., Nah, S., Chung, D. S., & Kim, J. (2020). Predicting AI news credibility: communicative or social capital or both? Communication Studies, 71(3), 428-447. https://doi.org/10.1080/10510974.2020.1779769

Lee, S., Tandoc Jr, E. C., & Lee, E. W. (2023). Social media may hinder learning about science; social media's role in learning about COVID-19. Computers in Human Behavior, 138, article 107487. https://doi.org/10.1016/j.chb.2022.107487

Lin, H., Chi, O. H., & Gursoy, D. (2020). Antecedents of customers’ acceptance of artificially intelligent robotic device use in hospitality services. Journal of Hospitality Marketing & Management, 29(5), 530-549. https://doi.org/10.1080/19368623.2020.1685053

Lu, Y., Papagiannidis, S., & Alamanos, E. (2019). Exploring the emotional antecedents and outcomes of technology acceptance. Computers in Human Behavior, 90, 153-169. https://doi.org/10.1016/j.chb.2018.08.056

Martins, C., Oliveira, T., & Popovič, A. (2014). Understanding the internet banking adoption: a unified theory of acceptance and use of technology and perceived risk application. International Journal of Information Management, 34(1), 1-13. https://doi.org/10.1016/j.ijinfomgt.2013.06.002

McEachan, R. R. C., Conner, M., Taylor, N. J., & Lawton, R. J. (2011). Prospective prediction of health-related behaviours with the theory of planned behavior: a meta-analysis. Health Psychology Review, 5(2), 97-144. https://doi.org/10.1080/17437199.2010.521684

Mick, D. G., & Fournier, S. (1998). Paradoxes of technology: consumer cognizance, emotions, and coping strategies. Journal of Consumer Research, 25(2), 123-143. https://doi.org/10.1086/209531

Miltgen, C. L., Popovič, A., & Oliveira, T. (2013). Determinants of end-user acceptance of biometrics: Integrating the “Big 3” of technology acceptance with privacy context. Decision Support Systems, 56, 103-114. https://doi.org/10.1016/j.dss.2013.05.010

Moors, A., Ellsworth, P. C., Scherer, K. R., & Frijda, N. H. (2013). Appraisal theories of emotion: state of the art and future development. Emotion Review, 5(2), 119-124. https://doi.org/10.1177/1754073912468165

Nabi, R. L., Gustafson, A., & Jensen, R. (2018). Framing climate change: exploring the role of emotion in generating advocacy behaviour. Science Communication, 40(4), 442-468. https://doi.org/10.1177/1075547018776019

Noy, S., & Zhang, W. (2023). Experimental evidence on the productivity effects of generative artificial intelligence. SSRN, article 4375283. https://dx.doi.org/10.2139/ssrn.4375283

Park, Y. J., & Jones-Jang, S. M. (2022). Surveillance, security, and AI as technological acceptance. AI & Society, 1-12. https://doi.org/10.1007/s00146-021-01331-9

Pavlou, P. A. (2003). Consumer acceptance of electronic commerce: integrating trust and risk with the technology acceptance model. International Journal of Electronic Commerce, 7(3), 101-134. https://doi.org/10.1080/10864415.2003.11044275

Perlusz, S. (2004, October). Emotions and technology acceptance: development and validation of a technology affect scale. In 2004 IEEE International Engineering Management Conference (Vol. 2, pp. 845-847). IEEE.

Petty, R. E., & Briñol, P. (2015). Emotion and persuasion: cognitive and meta-cognitive processes impact attitudes. Cognition and Emotion, 29(1), 1-26. https://doi.org/10.1080/02699931.2014.967183

Psaila, S. (2023, June 16). Governments vs ChatGPT: regulation around the world. Diplo. https://www.diplomacy.edu/blog/governments-chatgpt-regulation/ (Internet Archive)

Savela, N., Garcia, D., Pellert, M., & Oksanen, A. (2021). Emotional talk about robotic technologies on Reddit: Sentiment analysis of life domains, motives, and temporal themes. New Media & Society. https://doi.org/10.1177/14614448211067259

Scherer, K. R. (2005). What are emotions? And how can they be measured? Social Science Information, 44(4), 695-729. https://doi.org/10.1177/0539018405058216

Schwarz, N. (2012). Feelings-as-information theory. In P. A. M. Van Lange, A. Kruglanski, & E. T. Higgins (Eds.), Handbook of theories of social psychology (pp. 289–308). Sage Publications. https://doi.org/10.4135/9781446249215

Soroya, S. H., Farooq, A., Mahmood, K., Isoaho, J., & Zara, S. (2021). From information seeking to information avoidance: understanding the health information behavior during a global health crisis. Information Processing & Management, 58(2), article 102440. https://doi.org/10.1016/j.ipm.2020.102440

Sugandini, D., Istanto, Y., Arundati, R., & Adisti, T. (2022). Intention to adopt e-learning with anxiety: unified theory of acceptance and use of technology model. Review of Integrative Business and Economics Research, 11, 198-212.

Venkatesh, V. (2000). Determinants of perceived ease of use: Integrating control, intrinsic motivation, and emotion into the technology acceptance model. Information Systems Research, 11(4), 342-365. https://doi.org/10.1287/isre.11.4.342.11872

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425-478. https://doi.org/10.2307/30036540

Venkatesh, V., Thong, J. Y., & Xu, X. (2012). Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Quarterly, 36(1), 157-178. https://doi.org/10.2307/41410412

Vimalkumar, M., Sharma, S. K., Singh, J. B., & Dwivedi, Y. K. (2021). Okay google, what about my privacy? User’s privacy perceptions and acceptance of voice based digital assistants. Computers in Human Behavior, 120, article 106763. https://doi.org/10.1016/j.chb.2021.106763