vol. 13 no. 4, December, 2008

vol. 13 no. 4, December, 2008 | ||||

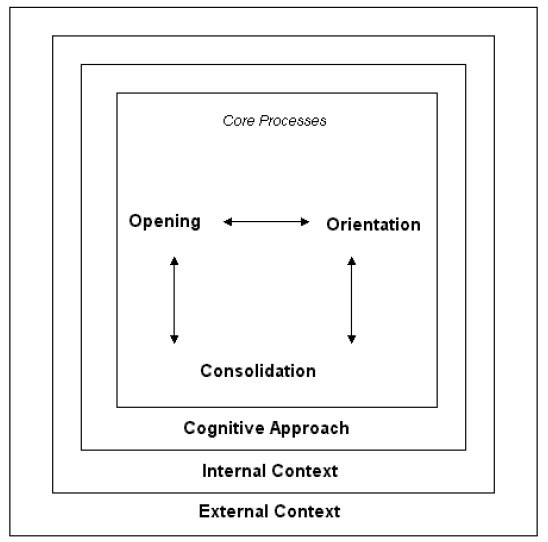

The model of information seeking under investigation is the non-linear model of information seeking (Foster 2004) that proposes three core processes of Opening, Orientation and Consolidation, together with three sets of contextual interactions. The model was developed as part of doctoral research on the information seeking behaviour of interdisciplinary academic researchers at a research-intensive university in the UK. It adopted Lincoln and Guba's (1985) framework and axioms for naturalistic inquiry and, accordingly, the transference of findings from one context to another requires due diligence and methodical treatment.

The aim of a research project funded by Aberystwyth University, was to produce a refined information behaviour model, which would be transferable. This paper discusses how to examine the credibility of coding and how some of these methods performed when applied to the validation discussions of the coding scheme. Examples of the coding discussions and their outcomes illustrate how the refined model is likely to develop.

In discussing the difference between models of information behaviour and theories, Case (2007:120) suggests that many models are concerned with specific problems and show sequences of events and the influencing factors. Some models resemble a flowchart for problem solving (e.g., Byström and Jarvelin 1995), others set out in more detail the various values, attitudes, external factors and personality influences that affect information seeking (e.g., Savolainen's Everyday Life Information Seeking model) (Savolainen 2005: 143). Others invoke theories, in particular from psychology or mass communication, to explain some aspects of activation of information seeking (e.g., Wilson 1999). The meaning of the term 'theory' in information behaviour research seems a little unclear (Pettigrew and McKechnie 2001; Pettigrew et al. 2001;), although many researchers cite Kuhlthau's Information Search Process (Kuhlthau 1991, 2005) or Dervin's Sense-making (Dervin et al. 2003). Our reasons for invoking some theories rather than others, can be attributed to the perspective or paradigm of our research approach. For example, research on the use of electronic sources of information can be divided into studies reflecting users' interest in using such resources (information behaviour researcher perspective) and studies on usage of those resources (the library administrator perspective) (Rowley and Urquhart 2007). Dervin and Reinhard (2006) discuss the problems of user and audience studies, based on findings from an Institute of Museum and Library Services funded project. Interviews across a range of experts found that user research was not satisfactory, as there is little agreement on the terms and there was a palpable lack of progress of building on previous research in a constructive way (Dervin et al. 2006).

To transfer a model of information seeking developed using grounded theory, from observations in one setting, to a more 'middle range' theory (Case 2007: 147) that might encompass more information seeking situations, several stages seem necessary:

Most reports of qualitative data analysis of information behaviour omit any estimation of the reliability of the coding scheme, but that is arguably not a concern with grounded theory (Glaser and Strauss 1967). Hammersley (1990) suggests that validity is identified with confidence in our knowledge but not certainty, reality is assumed to be independent of the claims that researchers make about it and reality is always viewed and represented through particular perspectives. If that is the case, then another researcher approaching the same set of interview transcripts and with the codes used by the first researcher, could be expected to agree with the broad themes (if the first researcher's conclusions were valid), but interpretations could vary, depending on the background knowledge of the second researcher and the empathy s/he had with the interviewee's situation. Some experimental evidence supports this, in a comparison of six researchers' coding of a focus group transcript (Armstrong et al. 1997). Although the analysts identified similar themes, they differed in the labelling they used and the relations they made among the themes. Some aspects would seem more important, some less. But confidence and agreement over some general themes does not, perhaps, advance our knowledge beyond a lowest common denominator. Perhaps the emphasis should be less on checking the interpretation of the first researcher and more on challenging their interpretation, to challenge their common sense assumptions. Silverman (1993:159) suggests that emphasis be placed on analytic induction, defining a phenomenon and then generating and testing a hypothesis to be tested. Mostly, this may require identification of the deviant cases for particular categories and use of the constant comparative method to judge which features are both necessary and sufficient. Simple quantitative analysis, counting instances can also help to assess the validity of some claims and the direction of differences. Similarly, Bloor's approach to analytic induction stresses the identification of the necessary and sufficient features for generating a category: Johnson (1998) describes how these principles were applied.

Discussion of methods used for inter-rater reliability estimates in information behaviour research include Rice et al. (2001) who describe refinement of a coding scheme for accessing information, including development of confusion matrices for the facets of the information seeking process and the influences or constraints. Reliability coefficients were calculated in different ways. Initial results showed low inter-rater reliability but, after discussion among coders and further clarification of coding actions, satisfactory reliability coefficients were obtained. The usual method of calculating the inter-rater reliability is to use the Kappa statistic (Cohen 1960), which corrects for the agreements that would be expected by chance. Other formulae simply calculate an intercoder agreement formula (Miles and Huberman 1994), which sums all the agreements divided by the sum of all agreements and disagreements. Much depends on the number of possible agreements, (number of possible codes) and whether modifications should be made for partial agreements, if, for example, there are main codes and sub-codes.

Cohen's Kappa statistic assumes that the categories, or codes, are independent, mutually exclusive and exhaustive. This makes it easy to apply to simple coding schemes where there are distinct differences in the coding terms, e.g., for type of health condition (Homewood 2004) but very difficult to apply to complex coding schemes, where raters can select multiple responses from a large number of categories. The FREQ procedure (Stein et al. 2005) computes the Kappa statistic based on frequency tables with one rater's responses being the row and a second rater's reponses being the columns. With some adjustments to ensure the tables are square, this method was used by Stein et al. (2005). to determine inter-rater reliability when raters could select from over 1,000 diagnosis codes and use as many as required for each patient condition code.

Another approach to dealing with inter-rater reliability, when the code structure is in a tree structure and multiple responses are available for each chunk of coding, is to use binomial probability theory (Saracevic and Kantor 1997), but this calculation appears to assume that only one code (for a particular facet of the taxonomy) should be applied to a particular coding chunk. However, the basis of the calculation is that the null hypothesis is that 'each of two coders assigns each of the possible codes in a way that has no correlation with the other coder'. The chance that they will agree exactly k times, under the null hypothesis has to consider the number of ways (A) the first coder can assign the code to the total number of responses (R), the number of ways the second coder can assign the code to exactly k of the A cases to which the first coder assigned it, the number of ways (B) the second coder can assign the code to exactly (B-k) of the other (R-A) cases. Calculations of this variety seem to arrive at quite high statistical significance for small number of agreements. Intuitively, this does not seem quite right although the mathematics appear sound.

The difficulty occurs in relating the mathematics to the reasons for coders' agreement or disagreement when there are many possible codes to use. The possible situations include different personal interpretations of a coding chunk, leading to a different set of codes being assigned by different coders and these sets may, or may not, describe the same ideas, but in different ways. A second coder may ignore concepts that appear important to the first coder. Coders may be operating, subconsciously, with a reduced set of codes and prefer to use particular codes, rather than exploring the whole set of possible codes. There is likely to be an interaction between the coder and the text and assignment of new codes can be influenced by codes already assigned to the entire transcript, for example. It is not really surprising that the null hypothesis is disproved so often in the calculations by Saracevic and Kantor, (1997) as transcripts are usually composed of text that is coherent and we would expect coders' comprehension to be similar, although they may choose different words to describe the ideas. The idea of intercode differences described by Saracevic and Kantor (1997) is easier to relate to what happens in practice, but the method of applying this will depend on the tree structure used.

For the validation work, the team included the researcher responsible for the nonlinear model (AF). One researcher (JT) had interviewed students and some academic staff and analysed qualitative data for the JISC Usage Surveys: Trends in Electronic Information Services (JUSTEIS) project (Urquhart and Rowley 2007). The other member of the team (CU) had researched information behaviour in various projects and was involved in coding and analysis for the JUSTEIS project.

The initial codes had been derived inductively (Foster 2004) and more details of the coding scheme are available in an earlier paper (Foster 2005). Coding was done in Atlas.ti. For the validation work, initial preparation involved training of the research associate in Atlas.ti procedures and the code book (the JUSTEIS project had used QSR N6 software).

For the pilot coding work, AF produced a slightly simplified scheme. Pilot coding of two interview transcripts was compared, using this slightly simplified coding scheme, by two researchers (AF, JT). A matrix was drawn up of codes allocated by one researcher for a particular paragraph against the codes allocated by the other for that paragraph. As one paragraph or set of lines could be allocated several codes, these paragraphs or chunks of coding were compared, as line by line comparisons were impracticable. If there is complete agreement, there should be a concentration of agreements along the diagonal. Disagreements are indicated by some scatter across the matrix. After team discussions, the two pilot interview transcripts were coded by the third member of the team (CU), with the addition of memos to aid further team discussion.

The next team discussion examined the reasons for similarities and differences in codes applied by the team members. Differences could be the result of omission by one researcher of a code and the omission could be an oversight or judgement that the paragraph did not warrant that attribution of meaning. Other differences were the result of one researcher using a different set of codes to describe a phenomenon to the set of codes used by another researcher. Discussions clarified whether the researchers were trying to describe the same or different interpretations of the observations.

Discussions produced agreement on a refined set of codes that were then checked against another interview transcript from the original dataset. This produced some queries about some of the codes, which were noted for future investigation.

The expectation had been that normal inter-rater reliability calculations would be possible. Our experience was that the process of parallel coding depends on several factors. The granularity of the coding framework, that is, the number of fine level codes, affects the ease of inter-rater reliability calculations. The greater the number, the easier it is for another researcher to choose different terms, even if the codebook contains detailed instructions on the conditions in which terms should be used. Parallel coding using a coding framework that includes higher level categories as well as the lower level thematic codes is difficult intellectually and the team found that it is probably better to parallel code at one level only, at one pass. The last difficulty was that many paragraphs were tagged with multiple codes, up to five terms, and that made inter-rater reliability calculations even more difficult. There are ways around this, by doing separate inter-rater reliability calculations for each type of category, or aspect and we considered simplifying the calculations in this way, so that we would obtain several sets of inter-rater reliability calculations for each transcript. Provided the categories, or aspects can be independently considered, this makes it easier to produce simple 'confusion matrices' such as those used by Rice et al. (2001). Conceptually, the idea of inter-rater code distance used by Saracevic and Kantor (1997) is interesting but the coding framework under investigation was not hierarchical and application did not seem useful or feasible.

Pilot coding of two interview transcripts was compared, using a slightly simplified coding scheme based on the original scheme. We checked whether there was any overlap in the coding but the findings confirmed the problems of dealing with multiple possible responses and a large number of possible codes. For simplicity, 'paragraphs' or chunks of coding were compared, as line by line comparisons would have been extremely difficult to do. For the first interview (interview 19), forty-one different codes were used. Of these, one coder did not use seven of those and the other coder did not use fourteen. The total number of agreements was twenty, but the number of disagreements far exceeded that, totalling over 300. One coder had assigned a total of fifty-one codes to the transcript and the other coder had assigned eighty codes. A matrix of the coding assignments made by the coders indicated some of the difficulties in describing information behaviour consistently between coders. Our level of disagreement was high, but other reported inter-rater reliability calculations on pre-testing of a code book give a figure of below 50% agreement for coding beliefs about tuberculosis in transcripts (Carey et al. 1996), perhaps a more objective coding activity. Particular inconsistencies in our coding were noted in the use of multiple codes for timing descriptors or time loops, time stage descriptors, refining, problem definition, cognitive approach and serendipity. For the second interview in the pilot (interview 12) the total number of codes used was fifty-four. The total number of agreements was twenty-seven and, like the first interview, the number of disagreements exceeded 300. Particular inconsistencies in the second interview occurred in the use of timing descriptors or time loops, coping strategies, eclecticism, feelings and thoughts, uncertainty, communication or vocabulary or terminology, information sources, networking as information seeking, problems and issues, cognitive approach, breadth exploration. The difficulties in using the coding scheme arose as there were higher level (more abstract) categories available as well as the lower level, more specific descriptors of concepts.

Two examples illustrate these problems.

Example one (extract from interview 12)

If I find specific debates then I will follow them up, but I will look to see what I can find first of all. [Interviewer: After the beginning stage, with the beginnings of answers, what comes next, do you change what you do?] What I do is get some people next and start to follow them up and then I move away from the database altogether and follow up things through the reference trail. I tend to go backwards just because it is easier but if I find something I like then I tend to move forwards as well through citation searches and say who cited this. If it is particularly interesting or uses particularly good metaphors, because usually I am not looking at stuff that is talking about the problem I am interested in, I am usually looking for metaphors or parallels. I tend to be looking at little bits from all over the place and it is all of this rather than look specifically. (P12)

The first coder used the following codes, each phrase in this list refers to the working code labels of the draft code book: Breadth exploration; Chaining; IS: information sources (coded IS to indicate a wider category of information sources); Eclecticism; Timing descriptive cumulative looped waves nonsequential (this was a wordy code label used to indicate that timing was discussed in a section of text.

The second coder used the codes: Chaining; Coping strategies; Time stage: second stage.

Example two (extract from interview 19)

It can be that you find something new and something that you don't know and that takes out of the original search and that could be a technique that you don't understand or an associated problem associated gene or drug treatment and although it is related it makes for a very different search Or it may be that what you are actually interested in is maybe limited to a particular gene and therefore you need to study that gene in particular. The interesting thing is that a gene is a good example. You can search for a gene in a very linear fashion going through the literature chasing an idea , but when you hit the gene you find a whole literature that surrounds it which will encompass the same gene from many different angles. So it may be a bit of both. (P19)

The first coder used the codes: Breadth exploration; Cognitive Approach (high level code), Distraction, Eclecticism; FTU uncertainty (FTU uncertainty was an abbreviation of "Feelings and thoughts of uncertainty"); IS-information sources; Nomadic thought; Opening (high level code); Problems and issues; Timing descriptive cumulative looped waves nonsequential.

The second coder used the codes: Chaining; Problem definition; Refining; Relevance criteria; Time force Stages 1st 2nd 3rd (this was a broad code to indicate possible staging of processes); Timing descriptive cumulative looped waves nonsequential (as described above).

The next stage involved coding of those interviews by CU, who used the coding scheme, but also made memo notes of her own.

We were aware of the problems noted by Stemler (2001) that joint development of a code scheme can lead to shared and hidden meanings of coding, but discussion was necessary to debate alternative meanings and perspectives. After further discussion and comparison of coding of more transcripts, some terms (e.g., difference) were removed and some of the timing descriptors or time loops) were simplified in the codebook.

The main problems arose with the use of terms that are also used in the psychological literature, as the team's different backgrounds meant that their interpretations varied. For example, coping strategies (meaning a way of managing the information seeking process) has connotations for others of dealing with adversity. The codes describing the contextual interactions of the nonlinear model were problematic. For example, in the transcripts interdisciplinary researchers often discussed getting into the way of thinking in another discipline. This could be considered as 'ways of thinking and practising in the subject' (Entwistle 2003), acknowledging that the ways of thinking and knowledge and understanding are bound together. Alternatively, one could try to separate, as the existing codebook did, 'knowledge and understanding' (internal context) from the cognitive approach. The original work had suggested these were concepts requiring further investigation and so it proved. Transferring the model requires a review of concepts from psychology for personality traits and learning styles. Discussions of coding also revealed that some of the differences in coding assignations were due to the differences in opinion on the scale and extent of a particular phenomenon.

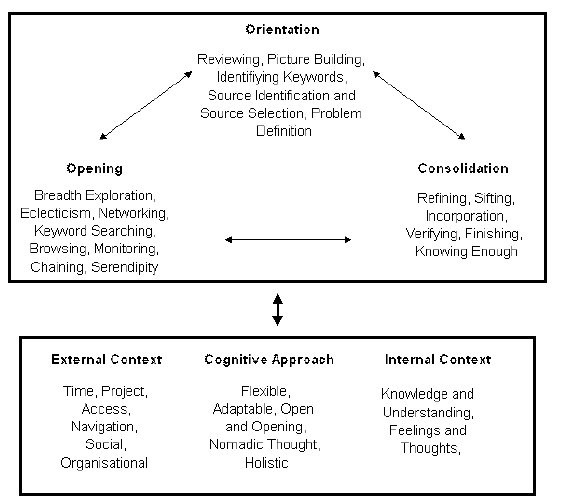

Many of the coding discussions focused on the differences between the sets of processes that characterised the core processes. Several of the terms that occur in the information science literature were discussed by the team. Taking one example, Opening and Orientation, contained (respectively) keyword searching (Opening) and identifying keywords (Orientation). The importance of some previous knowledge, or information and experience seems necessary before keyword searching can begin. Keyword searching during Opening was related to the use of databases, online catalogues, Internet search engines and online journals (Foster 2004). The outcomes of keyword searching were sometimes hard to assess when searchers were working in unfamiliar disciplines.

Again, I think I would use literature searches for that and I will change my keywords appropriately and that comes down to language to some degree. Now I am picking up more experience from talking to people in the different disciplines my searches are coming much more easily for me because I can actually pick those words out. So that is one thing, I would also go straight to a person and talk to them about it and try to track information directly. (P14)

Another activity associated with Opening was browsing. This and other activities such as breadth exploration, eclecticism and networking as information seeking, were found to be important processes for accessing information (as in P14 extract). These activities often necessitated changing the researcher's disciplinary focus or indeed adjusting from keyword searching to identify keywords, bringing researchers into the Orientation process. Orientation focuses on identification, deciding which direction to take.

Yes, well, what I found I was doing was using a set of keywords and then running them through all of the databases that I could find in both disciplines. That was quite good, in some cases I would get nothing at all, clearly it was foreign, but in others I would get quite a surprising amount. But what I found was... nobody in the university formally knew really, you had to find the experts in the field, in the country before you were able to get anything coherent, or of value, from colleagues. (P5)

In the above cases, keyword searching was very closely related to networking as information seeking, highlighted in italics as in the extracts above. In many cases keyword searching was quickly followed by identifying keywords as the researchers became more absorbed in information searching and sometimes questioned their focus of interest:

Well, I need to go back a bit, because sometimes the keywords don't always appear straight away, if for example like with dyslexia, if that is the only word that you know about, you start with that one and then you find all the others through reading, through literature, errm and then as my knowledge base increases I will start asking myself questions about the specific thing that I am interested in. (P6)

Again keyword searching was linked to networking as information seeking which was also mentioned in the interview below. In other extracts, flipping between Opening (keyword searching) and Orientation (identifying keywords) was apparent, allied to breadth exploration. Bates (2007) might argue that flipping between Opening and Orientation typifies human exploratory behaviour.

And it takes a long time to familiarise yourself within a literature even to be able to find out if they are talking about what you are talking about because often what you look for how do you know what words to search for….It is finding parallel problems and finding the right words for them, e.g., what we would call 'participation' the blood people would call 'donation' and that is easy because I am a blood donor so I know what the words are, but there may be other things like that, but just finding out what the right words are, how do you search for, this that or the other. (P11)

Another researcher (below) was systematic in keyword searching and again this merged into identifying keywords and so Opening and Orientation fluctuated as the activities merged. Other activities such as chaining were also involved in the Opening process, together with networking as information seeking.

Some things I would do only at the start, they would tend to be Web of Science, searches under keyword combinations for the project and I try to do that as thoroughly as possible and try to use whatever combination of words that I could possibly think would be relevant to it. But things like following up articles, talking to people and finding out if they know anything about the subject they carry on the whole time. But the topic of what I am doing can also change... (P46)

In the original code book, breadth exploration was recognised as 'a conscious expansion of searching to allow exploration of every possibility' (Foster 2004: 233) and was coded within the core process of Opening. Breadth exploration was acknowledged as being a complex activity in that it involved combinations of other activities to form a larger process although it worked alongside other activities. It included deliberate expansion of information horizons, starting wider, so that narrowing could produce results. In this way it was linked to activities such as source selection, keyword identification (Orientation), sifting and refining (Consolidation).

Well, I tend to go the long way round. I have to satisfy myself that I haven't missed anything, so I tend to take the splatter gun approach and just look anywhere and try to think of things that are tangential and just work through keywords and try to come up with as many keywords as possible and I end up with some dreadful long list, but I start to look at the titles almost and browse the titles and I soon learn that there are things that I can get out of my list like – recycling in rat guts for example. (P11)

As a result of discussion it was decided that the code of breadth exploration would be appropriately represented as a 'cline' (a scale in which the strength of information seeker behaviour could be mapped to a range of text or numerical descriptors, from little to a lot, or from 1 to 10), since interviewees indicated that the extent of exploration depended on the size of project or task.

Browsing, for example, was defined in the code book as open browsing or selective browsing. The team agreed that Browsing could and should be more narrowly coded to increase coding reliability and that other frameworks of browsing (e.g., Rice et al. 2001) should be reviewed. Case (2007: 89) describes browsing as the central and oldest concept among a variety of terms used to denote informal or unplanned search behaviour. Scanning may be similar to browsing and serendipity may also be closely related. Case (2007) synthesises various definitions to produce four main categories of browsing: well defined (formal search and retrieval); semi-defined (browse, forage or scan); poorly defined (browse, graze, navigate or scan) and undefined (encounter or serendipity). Bates (2007) contends that browsing may consist of a series of four steps – glimpsing, selecting or sampling, examining the object and acquiring or abandoning the object.

Open browsing, as defined in the code book, was similar to semi-defined or poorly defined browsing – but glimpsing, selecting and examining may also describe the behaviour:

Also, scanning through journals as well and just allowing myself to follow up interesting things once I have seen a mention of something interesting then I am more likely to follow it up if it is a URL…being distracted is part of my mode of working and so I allow myself to be distracted. (P39)

Selective browsing implies greater certainty about the routes that might possibly be used, but this could be described by the emphasis on glimpsing across different landscapes, selecting and making quick decisions on what to acquire and what to discard – or even berrypicking (Bates 2007).

At the moment I do a search say in LISA and I know I also have to look in Psychological Abstracts, I probably also have to go looking in computing, education, I probably look in social sciences, you are never quite sure when you are going to find stuff. (P9)

Similar discussions took place for the terms in the other core processes and the movement between the core processes.

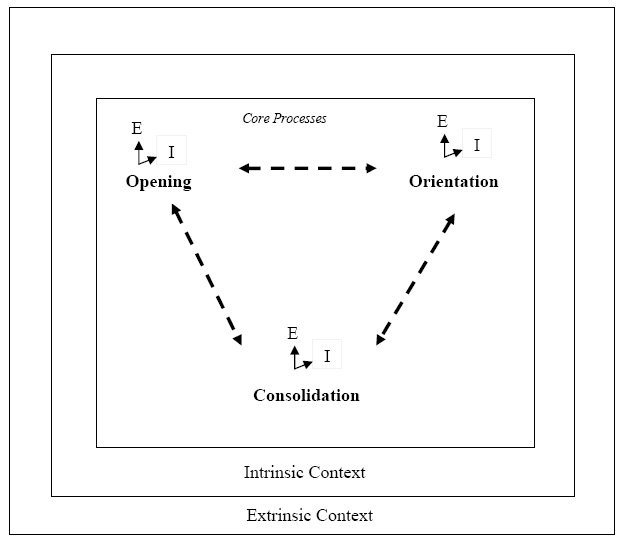

Foster's original model recognised nonlinear behaviour patterns and interaction of all components of the model (see Figure 1). These were bound to external, internal and cognitive dimensions affecting the individual information seeker. In Figure 2 the main processes appear with their component processes.

Analysis of the coding confirmed the main features of the model, but some simplification of codes should now ensure ease of use and consistency of use across different researchers: e.g., the timing descriptors are now refined. Other aspects rationalise the use of language to allow for the likely variation in the experience and knowledge of coders. coders. The other main change will concern the way that Context is developed. This feature of the model should draw upon some of the more developed explanatory theories from the literatures of education, linguistics, psychology and sociology.

The core processes are confirmed, but the activities now incorporate two additional parameters: extent and intensity (represented by arrows and the letters E and I within the outline of a revised nonlinear model Figure 3). These additional dimensions map the variability of behaviour within the seeking process.

Figure 3 also illustrates that a further change to the model is found in the way that context is developed. This feature of the model should draw upon some of the more developed explanatory theories from the literatures of education, linguistics, psychology and sociology. In particular, the elements formerly known as cognitive approach and internal context become intrinsic context embracing learning, personality and affective aspects, with a new grouping of exhibited-observed behaviour.

Within intrinsic context, motivation has been added. This was not noted in the original model as all respondents were motivated, but comparison with other studies and data sets indicates that motivation is important.

External context, now extrinsic context to reflect the overall changes of approach allows not only for social and cultural influences but also adds the 'helps' that are available through information systems (e.g., Google helpfully provides some ordering).

The final version is likely to be similar to Figure 3, but the specification of the codes needs to be finalised against other data sets and evidence from the literature. The above changes are indicators of the ongoing research.

This paper presents the interim findings of the project to develop a refined model of information behaviour that should be transferable to other similar situations. The research confirmed the problems of trying to agree on descriptions of the characteristic activities demonstrated by information seekers and the influences, external and internal, on that behaviour. Future research will examine how the refined framework and revised codebook works with a larger dataset on student information behaviour. Preliminary investigations with that dataset suggest that the core processes of Opening, Orientation and Consolidation can be identified and that there is evidence that information seekers are flipping between the processes (with or without the help of information systems to assist them with orientation and consolidation activities).

| Find other papers on this subject | ||

© the authors, 2008. Last updated: 1 December, 2008 |