Analysing the effects of individual characteristics and self-efficacy on users' preferences for system features in relevance judgment

Yin-Leng Theng and Sei-Ching Joanna Sin

Division of Information Studies, Wee Kim Wee School of Communication and Information, Nanyang Technological University, 31 Nanyang Link, Singapore 637718

Introduction

Information behaviour scholars have espoused the importance of user perspective and contextual influences, which contribute to a deeper understanding of the diversity and complexity of information practices (Case 2008; Dervin and Nilan 1986; Fisher and Julien 2009; Vakkari et al. 1997; Wilson 2000). In the design of information systems, on the other hand, a user-centred perspective has yet to gain as strong an appreciation. Traditional information retrieval research has taken a mainly objective, system-based approach to relevance, centred on matching documents with query terms. Such a system-centred focus hinders the effective delivery of relevant information to diverse users (Froehlich 1994).

There is a need for a user-centred perspective on relevance; that is, a need to conceptualise relevance as a subjective, individualised mental experience that involves cognitive restructuring (Borlund 2003). Subjective relevance takes the user's knowledge and information needs into consideration. It is also dynamic: over time, one's view of what is relevant changes as one's knowledge of, and exposure to, a domain broadens. What is considered relevant may also change with the task at hand and with the user's understanding of that task. Because studies of subjective relevance remain scarce, little is known about which information system features are important in supporting the subjective relevance judgments of different individuals.

In light of the above research gap, this study surveyed over 200 users on the system features that they consider important to information seeking and retrieval. It tested whether individual preferences for particular system features are influenced by individual differences (such as sex and information literacy skills, particularly self-efficacy at different stages of the information search process). The findings will help inform the design of information systems that facilitate the individual's subjective relevance judgment. They will also demonstrate the need for a user-centred and context-aware approach to the design of information systems.

Literature Review

System-based relevance versus subjective relevance

Traditionally, the design of information retrieval systems has been based on system relevance. This approach focuses on developing algorithms that best match documents to query terms. However, it has been recognised that query terms may not always sufficiently reflect user needs (Taylor 1962). There are increasing criticisms of the system-based relevance approach because it relies on a priori relevance judgments made by non-users.

User-criteria studies, on the other hand, are based on the idea that relevance judgments should be made by users who are motivated by their own information problem situations. They also highlight that relevance judgments should take a multitude of factors into account (Barry and Schamber 1998). Saracevic (1996; 2007a) identified five types of relevance: (a) system or algorithmic relevance, which is centred on matching documents with query terms; (b) topical or subject relevance, which examines the relationship between the query topic and documents; (c) cognitive relevance or pertinence, which focuses on users' current knowledge state; (d) situational relevance or utility, which refers to the usefulness of the retrieved documents for the user's task; and (e) motivational or affective relevance, which reflects the relationship between the documents and the user's intentions, goals and motivations. Cosijn and Ingwersen (2000) conceptualised affective relevance as influencing all other types of subjective relevance (topical, cognitive, situational and socio-cognitive). Apart from system relevance, which assumes that relevance does not change across contexts or users, the other four types of relevance are recognised as subjective; in other words, as context- and user-dependent (Kelly 2004). For more discussion on relevance, readers are referred to in-depth reviews such as Borlund (2003), Cosijn and Ingwersen (2000), and Saracevic (1996, 2007a, 2007b).

Many studies have been conducted to identify the criteria (such as depth or scope, currency, specificity) that individuals use to make relevance judgments (Barry and Schamber 1998; Taylor 1962; Taylor, Cool, Belkin, and Amadio 2007; to name a few). Due to the complex nature of information needs and behaviour, researchers have not established what criteria are most important to different users in different situations (Anderson 2005). With the exception of topicality, no consensus has been reached on other relevance judgment criteria (Anderson 2005; Barry and Schamber 1998; Chen and Xu 2005).

Beyond studies of relevance judgment criteria, few have examined the system features that support users' non-topical relevance judgments. Lee, Theng, and Goh (2004; 2007) conducted qualitative studies to elicit features that can be incorporated into digital library interfaces to aid users' subjective relevance judgment. In a subsequent study, a survey and an exploratory factor analysis were conducted to group system features into broader categories (Lee et al. 2006). These studies did not examine how individual differences affect preferences for various system features, an area that the current study seeks to address.

Process of information seeking

The time dimension of information behaviour (Savolainen 2006), specifically the process of information seeking, is important to the study of relevance. Experiments have shown that through interactions with an information system (e.g., viewing of structured documents), a user's relevance judgment can evolve during a search process (Kagolovsky and Moehr 2004). In Anderson's (2005) view, the act of retrieving materials from an information system is not a single interaction, but is a complex process of interaction between a user and a system; neither the system nor the user can judge relevance in advance.

Taylor and colleagues (Taylor et al. 2007) showed that students pick different relevance criteria at different stages of their information search (picking, learning, formulating, and writing). Vakkari and Hakala (2000) found that the top criteria for evaluating the relevance of references changed only slightly over the search stages; information content, specifically topicality, remained the most important factor across all stages. Interestingly, the authors found more changes in relevance criteria across stages when full texts were evaluated. Tang and Solomon (2001) showed that while topicality remained an important factor across search stages in their laboratory study, participants in their naturalistic study rated topicality as less important in the second stage, when full-text articles were evaluated. Full texts are now increasingly accessible in many online retrieval systems, so it is timely to continue exploring the relationship between search stages and relevance judgment. We therefore hypothesised that the design features used for relevance judgment may also vary with the stages of the information-seeking process.

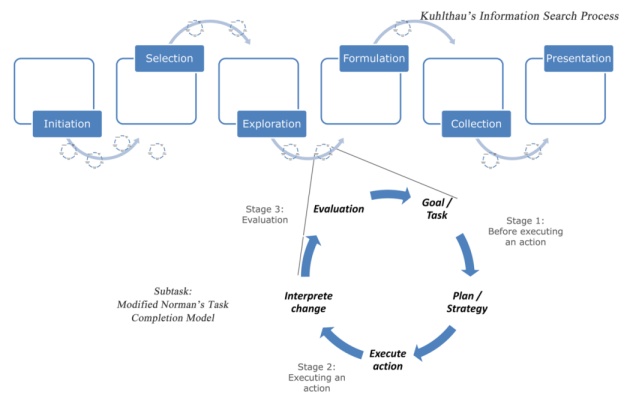

The current study focused on academic information seeking. We conceptualised the search process on two levels (see Figure 1). On the higher level, students go through Kuhlthau's information search process of initiation, selection, exploration, formulation, collection, and presentation (Kuhlthau 1991). Throughout these six stages, students will engage in many sub-tasks (second-level processes). These sub-tasks may include interaction with information systems to retrieve documents, or seeking advice from faculty members. In this study, the focus is on sub-tasks that involve the use of information retrieval systems.

Norman's (1988) task completion model provides the basis for the second (sub-task) level of the information-seeking process. Norman's model states that a searcher undergoes seven stages of cognitive action: goal, intention, planning, execution, perception, interpretation, and evaluation. In this study, the seven-stage model, as reduced to five stages by Carroll (2000), is grouped into three phases: 1) Before executing an action: user actions that address (a) the formation of goals and intentions; and (b) planning and specifying the action sequence. 2) Executing an action: (a) carrying out the planned action sequence; and (b) perceiving and interpreting the system's response. 3) Evaluation: evaluating how effectively the system has helped the user achieve his/her goals and intentions.

Figure 1: Enhanced process of information seeking integrating Kuhlthau's information search process and Norman's simplified model of task completion

Individual differences: self-efficacy in information seeking

Effective information seeking and use relies in part on an individual's information literacy skills. Self-efficacy is a significant factor in many facets of information behaviour (Case et al. 2005; Nahl 2005; Wilson 1999). We hypothesised that individuals with varying self-efficacy and skill levels may require different system features to support their information search. Instead of simply examining overall self-efficacy in information seeking, we are interested in a more detailed differentiation of the various aspects of the information-seeking process. This study draws from the Big 6 model (Lowe and Eisenberg 2005), which includes: (a) task definition; (b) information-seeking strategies; (c) location and access; (d) use of information; (e) synthesis; and (f) evaluation.

With the focus on searches using an information retrieval system, this study examined the following five aspects: (a) task definition/question formulation; (b) information-seeking strategies: identifying potential sources; (c) information-seeking strategies: developing search strategies and queries; (d) accessing sources; and (e) evaluation.

Summary

Relevance research has established the diversity in relevance judgment and the importance of understanding individuals' subjective relevance evaluation. Information behaviour research, on the other hand, has shed light on the dynamic nature of the information-seeking process. It is uncertain, however, what features of the systems are considered by diverse individuals as useful in their relevance evaluation across the different information-seeking stages. This exploratory study is a step toward addressing this research gap.

The study

Research questions

This study examines the preferred system features that support the individual's subjective relevance judgment. Specifically, the research questions are:

Research question 1: system features

- Research question 1.1. What system features do users perceive as important to their relevance judgment in their academic information search process?

- Research question 1.2. What are the major system-feature categories users perceive as important to their relevance judgment and how are these categories ranked?

Research question 2: Individual characteristics and rating of design features

- Research question 2.1. What are the bivariate relationships among individual characteristics and perceived importance of the system features?

- Research question 2.2. To what extent do the following contribute to the perceived importance of system feature categories in respondents' academic information search process: (a) sex; (b) years of online experience; (c) perceived competence as an online searcher; (d) frequency of Internet use; (e) self-efficacy in question formulation; (f) self-efficacy in identification of sources; (g) self-efficacy in developing search strategies; (h) self-efficacy in accessing information sources; and (i) self-efficacy in evaluating information?

Methods

Design of survey instrument

The study instrument was developed through a small-scale focus-group session. Four participants, two search experts and two novices, were recruited to elicit desirable and expected information system features that support subjective relevance judgment. Scholars have underscored the importance of contextual factors, such as tasks, to individual behaviour. We thus specified a task for the participants: completing a literature review for a term paper. This task was selected to emulate the research paper assignment used by Kuhlthau in her studies of the search process (Kuhlthau 1991). Such a task would involve the use of different information systems and resources. In this study, we focused on the sub-tasks in which users interact with academic journal databases and digital libraries. Future studies may examine the types of features and services that aid subjective relevance judgment across different information sources.

At the start of the session, participants were briefed on what they were expected to do. They were then given twenty minutes to explore three information retrieval systems: (a) Emerald Fulltext; (b) Library and Information Science Abstracts; and (c) ACM Digital Library. While using the systems, participants were asked to note features that helped them complete their task, features that could be improved, and features that should have been included. At the end of the twenty minutes, the participants came together to brainstorm features that they thought would help them identify relevant documents. During this brainstorming session, the participants were encouraged to generate as many features as possible but to refrain from commenting on the features generated. Afterwards, the participants were asked which of the generated design features were most important to them.

Measures

The focus-group discussion generated a list of twenty-six features considered useful for supporting subjective relevance judgment across the three stages of the sub-task-level information search process (i.e., before executing an action, executing an action, and evaluation; see Figure 1). These features are listed in Table 1. Survey participants were asked to rate the importance of each feature on a five-point Likert scale, from 1 ('not important') to 5 ('very important'). Participants also rated, on a five-point Likert scale, their self-efficacy in executing different actions during the information-seeking process (such as question formulation and identifying potential sources). Other demographic data and user characteristics, such as perceived competence in online searching (i.e., novice, intermediate, or expert searcher), were also collected.

| Item no. | Stage 1: Before executing the search task |
|---|---|
| 1_1 | Display search options in order of the most commonly used options (e.g., title, author, year of publication) |
| 1_2 | Provide more search options (e.g., search by title, author, abstract) for better query formulation |
| 1_3 | Allow the use of simple, intuitive commands such as AND, OR, NOT in basic search |
| 1_4 | Provide a command line search option in the advanced search |
| 1_5 | Allow you to change from one subject area to another when searching |
| 1_6 | Provide search tutorials and search examples to help query formulation (e.g., online help or system suggested examples) |
| Item no. | Stage 2: Executing the search task |
| 2_1 | Have search results list formatted and displayed in a simple, uncluttered interface would help identify relevant documents more easily |
| 2_2 | In the search results list, the system categorises documents based on document types, so that results would be grouped by journals, conference proceedings, etc. |
| 2_3 | Rank the items in the search results list by percentage of relevance |
| 2_4 | Allow you to review the search history of past queries submitted and the corresponding search results list |
| 2_5 | Search results list to provide a recommendation of documents and related topics based on the query submitted |
| 2_6 | Highlight the query words in the search results list |
| 2_7 | Provide each document's abstract in the search results list |
| 2_8 | Show year of publication to indicate how current the document for each item in the search result list |
| 2_9 | Provide documentation to inform how search results are ranked |
| 2_10 | Recommend search tips based on your query (e.g., the system suggests alternative query terms) |
| Item no. | Stage 3: Evaluation |
| 3_1 | Include the email address(es) of the author(s) of the retrieved document(s) |
| 3_2 | Indicate how many times a document in the search results has been cited or used by others |
| 3_3 | Highlight search query words in the document text retrieved from the search |
| 3_4 | Provide a feature to "find more documents like this one" for each search result shown |
| 3_5 | Provide an indication to show that you have already reviewed, opened, or downloaded this document previously |
| 3_6 | Allow you to view the table of contents in the source document if its full-text is not available |
| 3_7 | As part of the search results, the system also provides a URL to the actual journal for general information (e.g., publisher's information, accessibility rights) about that document source |
| 3_8 | Specify the pages in which the query words appear in the document's contents and provide direct links to the pages |
| 3_9 | Provide URL links to full-text documents that are referenced in the current document or search results |
| 3_10 | Provide access to a subject specific forum, where you can seek others' views on the documents retrieved or any other related matter |

Analysis methods

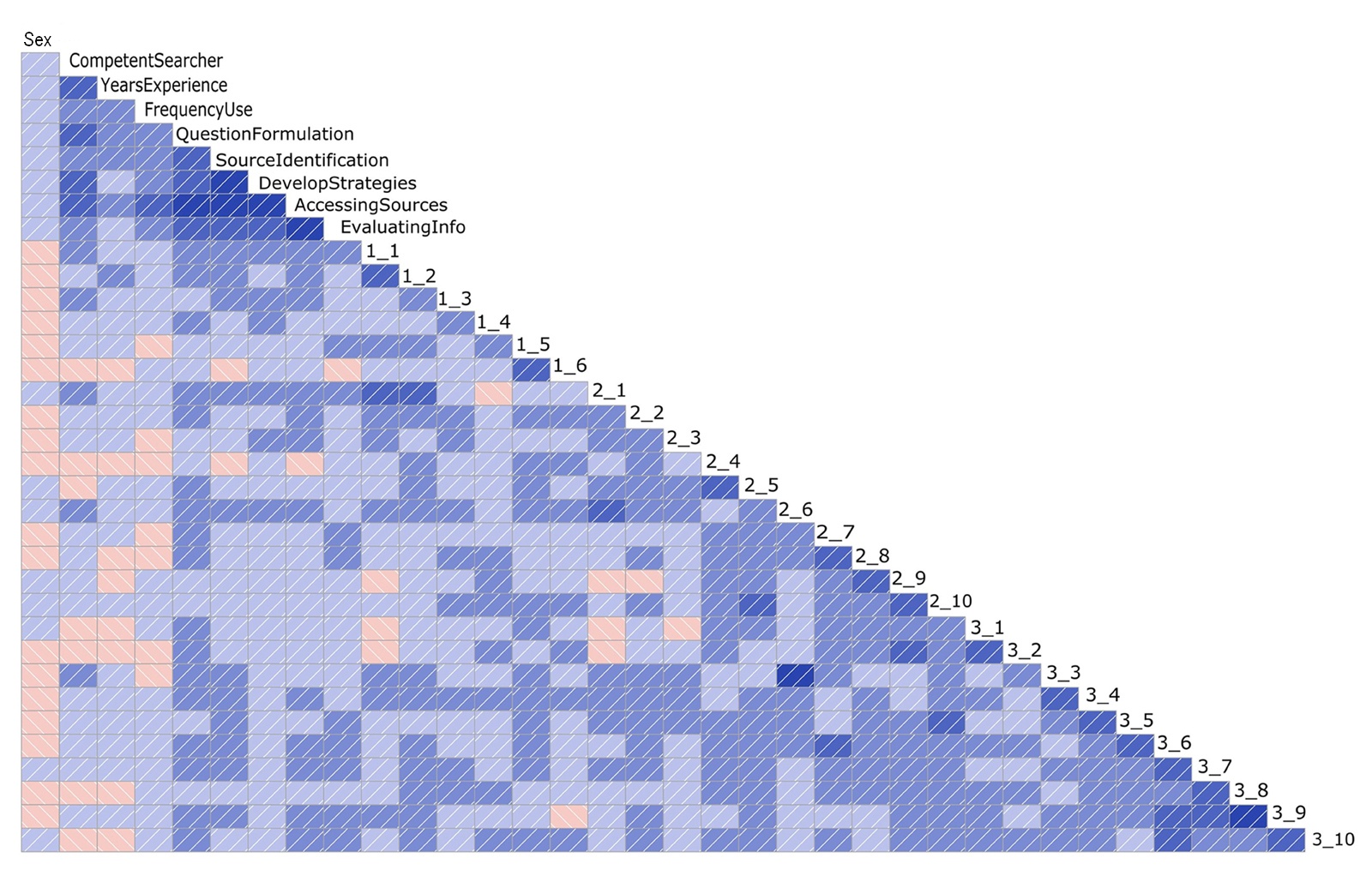

Research question 1.1 was examined through descriptive statistics of the twenty-six system features. Research question 1.2 concerns the grouping of system features; factor analysis, a technique for identifying the underlying structure among variables, was selected for this purpose. Research question 2 focused on the roles of individual characteristics. For Research question 2.1, the relationships among the nine individual characteristics (such as sex and self-efficacy) and the perceived importance of the twenty-six features were presented in a correlation matrix. The purpose of this correlation analysis is to provide a visual overview of the bivariate relationships among all thirty-five variables of this study rather than to test hypotheses. Inferential testing was conducted for Research question 2.2 using multiple regression, which is preferred over correlation analysis for this purpose because it is a multivariate technique: the influence of each explanatory variable can be evaluated while holding the other variables constant. SPSS 17 and the open-source R statistical program were used to run these analyses.
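As a rough illustration of what "holding other variables constant" means with dummy-coded categorical predictors, the following Python sketch fits an ordinary least squares regression in pure Python. It is not the study's R code: the helper names `dummy_code`, `solve`, and `ols`, the choice of "novice" as the reference category, and the toy data are all hypothetical, and solving the normal equations directly is chosen for transparency rather than numerical robustness.

```python
from typing import List, Sequence


def dummy_code(values: Sequence[str], levels: Sequence[str]) -> List[List[float]]:
    """Dummy (treatment) coding: levels[0] is the reference category and each
    other level gets a 0/1 column, so k levels produce k - 1 columns."""
    return [[1.0 if v == level else 0.0 for level in levels[1:]] for v in values]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors are perfectly collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(rows: List[List[float]], y: Sequence[float]) -> List[float]:
    """OLS coefficients from the normal equations (X'X) b = X'y.
    An intercept column is prepended, so the result starts with the intercept."""
    x = [[1.0] + row for row in rows]
    p = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(p)]
    return solve(xtx, xty)


# Hypothetical data: competence level plus a self-efficacy score per respondent.
competence = ["novice", "intermediate", "expert", "intermediate", "novice", "expert"]
efficacy = [3.0, 4.0, 5.0, 3.5, 2.0, 4.5]
dummies = dummy_code(competence, ["novice", "intermediate", "expert"])
rows = [d + [e] for d, e in zip(dummies, efficacy)]
# Outcome built from known effects so the recovered coefficients can be checked.
y = [3.0 + 0.5 * d[0] - 0.4 * d[1] + 0.2 * e for d, e in zip(dummies, efficacy)]
coef = ols(rows, y)  # approximately [3.0, 0.5, -0.4, 0.2]
```

Each dummy coefficient is read as the expected difference from the reference category (here, novices) at the same self-efficacy score. In practice one would use R's `lm()`, which applies treatment (dummy) coding to factors by default.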

Sample

The survey was administered to first- and second-year undergraduates from the School of Engineering and Computer Science at a local university. Participants took an average of fifteen minutes to complete the survey. A total of 277 respondents (210 males, 58 females, 9 not reported; mean age = 21 years) participated in the study (see Table 2). Of the 277 respondents, 69% (n = 191) rated themselves as intermediate searchers, followed by 19.1% (n = 53) novice searchers and 11.9% (n = 33) expert searchers. The respondents were frequent users of the Internet: 43.3% used it daily, and 41.5% had been using it for more than five years.

| Respondent characteristic | n (%) |
|---|---|
| Sex | |
| Male | 210 (75.8%) |
| Female | 58 (20.9%) |
| Not reported | 9 (3.2%) |
| Competency as online searcher | |
| Novice searcher | 53 (19.1%) |
| Intermediate searcher | 191 (69.0%) |
| Expert searcher | 33 (11.9%) |
| Experience with online systems | |
| < 1 year | 31 (11.2%) |
| 1-2 years | 37 (13.4%) |
| 2-3 years | 28 (10.1%) |
| 3-4 years | 27 (9.7%) |
| 4-5 years | 39 (14.1%) |
| > 5 years | 115 (41.5%) |
| Frequency of online systems usage | |
| Never | 6 (2.2%) |
| Once a month | 7 (2.5%) |
| Several times / month | 37 (13.4%) |
| Several times / week | 107 (38.6%) |
| Daily | 120 (43.3%) |

The internal consistency of the self-efficacy ratings was evaluated using SPSS. The reliability analysis yielded a Cronbach's alpha of 0.817, which exceeds the generally accepted threshold of 0.7 (Field 2009). Table 3 shows the ratings for self-efficacy in information searching. Out of a maximum of five, the average rating across all five aspects was 3.7 (SD = 0.51). The score for developing successful search strategies (M = 3.6) was slightly lower than for the other aspects. Respondents reported the highest self-efficacy for identifying sources (M = 3.76) and accessing sources (M = 3.77). Overall, the mean score for every aspect was above 3.5, indicating that most respondents perceived themselves as quite competent in the main aspects of information seeking.

| Aspects of information seeking | n | Min | Max | Mean | SD |
|---|---|---|---|---|---|
| Formulating questions based on information needs | 276 | 1 | 5 | 3.7 | 0.7 |
| Identifying potential sources of information | 276 | 2 | 5 | 3.76 | 0.64 |
| Developing successful search strategies | 277 | 2 | 5 | 3.6 | 0.68 |
| Accessing sources of information, including computer-based technologies | 277 | 2 | 5 | 3.77 | 0.71 |
| Evaluating information | 277 | 2 | 5 | 3.7 | 0.64 |
| Overall information seeking self-efficacy | 275 | 2 | 5 | 3.7 | 0.51 |
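Cronbach's alpha compares the sum of the individual item variances with the variance of the summed scale score. The study obtained its value from SPSS; the Python function below is a minimal sketch of the standard formula, assuming complete responses (no missing values). The `ratings` matrix is invented for illustration and is not drawn from the survey.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (no missing values).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = [[row[j] for row in responses] for j in range(k)]
    totals = [sum(row) for row in responses]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / total_var)


# Hypothetical ratings: 4 respondents x 5 self-efficacy items (1-5 Likert).
ratings = [
    [4, 4, 3, 4, 4],
    [3, 3, 3, 4, 3],
    [5, 4, 4, 5, 4],
    [2, 3, 2, 3, 3],
]
alpha = cronbach_alpha(ratings)
print(f"alpha = {alpha:.3f}", "(acceptable)" if alpha >= 0.7 else "(low)")
```

Using the same variance estimator for items and totals matters more than which estimator is chosen, since the ratio is what enters the formula.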

Results

Research question 1.1. Important design features

Table 4 shows the importance ratings of the top ten features. The top three features were: (a) presentation of search results in a simple, uncluttered interface; (b) display of search options in order of usage frequency; and (c) provision of more search options.

| Rank | Item no. | Features | n | Mean | SD |
|---|---|---|---|---|---|
| 1 | 2_1 | Have search results list formatted and displayed in a simple, uncluttered interface would help identify relevant documents more easily | 277 | 4.26 | 0.68 |
| 2 | 1_1 | Display search options in order of the most commonly used options (e.g., title, author, year of publication) | 277 | 4.13 | 0.71 |
| 3 | 1_2 | Provide more search options (e.g., search by title, author, abstract) for better query formulation | 276 | 4.07 | 0.67 |
| 4 | 2_3 | Rank the items in the search results list by percentage of relevance | 277 | 4.02 | 0.81 |
| 5 | 1_3 | Allow the use of simple, intuitive commands such as AND, OR, NOT in basic search | 277 | 3.91 | 0.86 |
| 6 | 2_6 | Highlight the query words in the search results list | 277 | 3.91 | 0.78 |
| 7 | 3_4 | Provide a feature to "find more documents like this one" for each search result shown | 276 | 3.83 | 0.7 |
| 8 | 3_3 | Highlight search query words in the document text retrieved from the search | 277 | 3.81 | 0.7 |
| 9 | 2_2 | In the search results list, the system categorises documents based on document types, so that results would be grouped by journals, conference proceedings, etc. | 277 | 3.81 | 0.77 |
| 10 | 3_5 | Provide an indication to show that you have already reviewed, opened, or downloaded this document previously | 276 | 3.8 | 0.85 |
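Research question 1.1 rests on simple descriptive statistics. A minimal Python sketch of how a ranking like Table 4 could be produced is shown below. It assumes that unanswered items are skipped item by item (which would explain why n is 276 for some features); the function name `rank_features` and the toy ratings are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Optional, Tuple


def rank_features(
    ratings: Dict[str, List[Optional[int]]],
) -> List[Tuple[int, str, int, float, float]]:
    """Rank items by mean Likert rating (1-5), skipping missing answers (None).

    Returns (rank, item, n, mean, sd) tuples, highest mean first. Items with
    equal means keep their input order, because Python's sort is stable even
    with reverse=True.
    """
    stats = []
    for item, values in ratings.items():
        answered = [v for v in values if v is not None]
        stats.append((item, len(answered), mean(answered), stdev(answered)))
    stats.sort(key=lambda s: s[2], reverse=True)
    return [(rank, *s) for rank, s in enumerate(stats, start=1)]


# Hypothetical ratings from four respondents (None = item left blank).
ratings = {
    "1_1": [5, 4, 4, None],
    "2_1": [5, 5, 4, 4],
    "1_3": [3, 4, None, 5],
}
table = rank_features(ratings)
for rank, item, n, m, sd in table:
    print(f"{rank:>2}  {item:<5} n={n}  M={m:.2f}  SD={sd:.2f}")
```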

Research question 1.2. Grouping of design features

For each feature group, the average importance score was calculated. The seven factors are described below in descending order of their groups' average importance scores:

- Highest rank: Query formulation features (factor 5). Items: 1_1 and 1_2. The average importance score is 4.1 (SD = 0.58). These features help users formulate queries by offering more search fields from which to select different search options.

- Second rank: Search results grouping and sorting (factor 6). Items: 2_3, 2_5, 2_2, and 2_1. The average importance score is 3.94 (SD = 0.49). These features support subjective relevance judgments by grouping documents of similar types and sorting them by percentage of relevance.

- Third rank: Supportive visuals (factor 3). Items: 3_3, 3_4, and 3_5. The average importance score is 3.78 (SD = 0.58). These features help to draw users' attention to the query terms found in the documents and indicate whether a document has been reviewed, opened, or downloaded before.

- Fourth rank: Advanced search features (factor 7). Items: 1_4 and 1_3. The average importance score is 3.73 (SD = 0.65). These include advanced search functions such as command-line searching and Boolean searching.

- Fifth rank: Direct search results evaluation (factor 1). Items: 3_9, 3_8, 3_7, and 3_6. The average importance score is 3.73 (SD = 0.54). These features support the evaluation of individual search results by directing users to the content behind each result, such as links to a document's full text or its table of contents.

- Sixth rank: Results listing features (factor 4). Items: 2_7, 2_6, 2_8, and 2_9. The average importance score is 3.53 (SD = 0.57). These features focus on the results list, such as including document abstracts in the list and highlighting query terms in it.

- Seventh rank: Supportive chaining features (factor 2). Items: 1_5, 1_6, 3_1, 2_4, 3_10, 3_2, and 2_10. The average importance score is 3.48 (SD = 0.50). These features provide links to external documents (such as search tutorials), external resources (e.g., links to document authors), and domain experts (i.e., forums).
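A group's average importance score can be computed by averaging each respondent's ratings over the group's items and then summarising across respondents. The sketch below encodes the item-to-group assignments listed above; the study does not report how missing ratings were handled, so skipping unanswered items is an assumption, as are the function name `group_scores` and the toy respondents.

```python
from statistics import mean, stdev
from typing import Dict, List, Optional, Tuple

# Item membership of the seven feature groups, as listed in the text.
GROUPS: Dict[str, List[str]] = {
    "Query formulation (factor 5)": ["1_1", "1_2"],
    "Search results grouping and sorting (factor 6)": ["2_3", "2_5", "2_2", "2_1"],
    "Supportive visuals (factor 3)": ["3_3", "3_4", "3_5"],
    "Advanced search features (factor 7)": ["1_4", "1_3"],
    "Direct search results evaluation (factor 1)": ["3_9", "3_8", "3_7", "3_6"],
    "Results listing features (factor 4)": ["2_7", "2_6", "2_8", "2_9"],
    "Supportive chaining features (factor 2)": [
        "1_5", "1_6", "3_1", "2_4", "3_10", "3_2", "2_10",
    ],
}


def group_scores(
    respondents: List[Dict[str, Optional[int]]],
) -> Dict[str, Tuple[float, float]]:
    """Mean and SD of per-respondent group means, for each feature group.

    Needs at least two respondents with ratings in each group (for the SD).
    """
    result: Dict[str, Tuple[float, float]] = {}
    for name, items in GROUPS.items():
        person_means = []
        for answers in respondents:
            answered = [answers[i] for i in items if answers.get(i) is not None]
            if answered:  # skip respondents who rated none of the group's items
                person_means.append(mean(answered))
        result[name] = (mean(person_means), stdev(person_means))
    return result


# Hypothetical example: one respondent rates everything 4, another everything 5.
ALL_ITEMS = [item for items in GROUPS.values() for item in items]
toy = [{item: 4 for item in ALL_ITEMS}, {item: 5 for item in ALL_ITEMS}]
scores = group_scores(toy)
```

Encoding the groups as data also makes it easy to check that every one of the twenty-six items is assigned to exactly one group.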

Research question 2. Individual characteristics and rating of design features

Research question 2.1. Bivariate relationships

The relationships among individual characteristics, self-efficacy in information seeking, and the importance ratings for all 26 features are presented in the Appendix, Figure 2. Because this study included ordinal variables such as perceived competence as an online searcher and frequency of Internet use, Kendall's tau-b (τ_b), a non-parametric measure suited to ordinal data, was used in the correlation analysis. Sex is a dichotomous variable, so the point-biserial correlation coefficient (r_pb) was used for it (Field 2009). These correlation coefficients were calculated in R, and the resulting correlation matrix was plotted using the corrgram package in R (Friendly 2002). A darker shade indicates a stronger correlation. A right-sloping diagonal line indicates a positive relationship, and a left-sloping line a negative relationship.
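The two coefficients can be sketched in plain Python as follows. The study computed them in R; this version is a hypothetical illustration (function names and data are assumptions) that uses the textbook tau-b definition, which corrects for the many ties typical of Likert-type data, and treats the point-biserial coefficient as Pearson's r with sex coded 0/1. Which sex is coded 1 is an assumption here and only determines the sign of r_pb.

```python
from itertools import combinations
from math import sqrt
from typing import Sequence


def kendall_tau_b(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall's tau-b = (C - D) / sqrt((n0 - n1) * (n0 - n2)).

    C and D count concordant and discordant pairs, n0 = n(n - 1)/2, and
    n1, n2 count pairs tied on x and on y respectively. O(n^2) pair loop,
    which is fine for a few hundred respondents.
    """
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0:
            ties_x += 1
        if dy == 0:
            ties_y += 1
        if dx * dy > 0:
            concordant += 1
        elif dx * dy < 0:
            discordant += 1
    n0 = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / sqrt((n0 - ties_x) * (n0 - ties_y))


def point_biserial(group: Sequence[int], y: Sequence[float]) -> float:
    """Point-biserial r: Pearson's r between a 0/1 variable and a score."""
    n = len(y)
    mg, my = sum(group) / n, sum(y) / n
    cov = sum((g - mg) * (v - my) for g, v in zip(group, y))
    var_g = sum((g - mg) ** 2 for g in group)
    var_y = sum((v - my) ** 2 for v in y)
    return cov / sqrt(var_g * var_y)


# Hypothetical data: competence (1 = novice, 2 = intermediate, 3 = expert),
# importance ratings for one feature, and sex coded 1 = female, 0 = male.
competence = [1, 2, 2, 3, 2, 1, 3, 2]
rating_1_6 = [5, 4, 4, 2, 3, 5, 3, 4]
female = [0, 1, 0, 0, 1, 1, 0, 0]
print(kendall_tau_b(competence, rating_1_6))
print(point_biserial(female, rating_1_6))
```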

Several general patterns can be observed. First, male respondents tended to report more experience with the Internet and online database searching. They also reported higher self-efficacy in all five aspects of information searching. Interestingly, they tended to give slightly lower importance scores to most features than female respondents did. Apart from sex, individual characteristics such as a higher frequency of Internet use were generally associated with higher importance ratings for most design features. Negative relationships were found for a few features; for example, expert searchers rated search tutorials and search examples (item 1_6) and records of their search history (item 2_4) as less important.

Research question 2.2. Multivariate analysis

Multivariate analyses were conducted to probe further the relationships among individual characteristics and design-feature preference. The study tested nine explanatory factors: (a) sex; (b) years of online experience; (c) perceived competence as an online searcher; (d) frequency of internet use; (e) self-efficacy in question formulation; (f) self-efficacy in identification of sources; (g) self-efficacy in developing search strategies; (h) self-efficacy in accessing information sources; and (i) self-efficacy in evaluating information.

Seven multiple regressions were conducted in R to test whether these factors contribute to different ratings for each of the seven design feature groups discussed in Research question 1.2. Individual characteristics were measured as categorical variables and entered into the models using dummy coding. Prior to the analysis, a multicollinearity diagnostic was conducted for each regression equation using generalised variance inflation factors (GVIF). A GVIF^(1/(2·df)) value larger than 2, where df is the number of dummy variables (degrees of freedom) for a predictor, indicates a multicollinearity problem (Fox and Monette 1992), that is, a situation in which the correlations among explanatory variables are problematically high. For all seven equations in this study, all nine variables yielded GVIF^(1/(2·df)) values smaller than 2, suggesting that multicollinearity was not a serious problem.
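The GVIF diagnostic can be sketched from the predictors' correlation matrix using Fox and Monette's determinant formula, GVIF = det(R11) det(R22) / det(R), where R11 holds the correlations among one predictor's dummy columns, R22 those among all other columns, and R the full matrix. The Python below is a minimal illustration, not the study's R code (in R, `vif()` from the car package reports GVIF^(1/(2·Df)) when a term has more than one column); the function names and toy columns are hypothetical.

```python
from math import sqrt
from typing import List, Sequence


def corr_matrix(cols: Sequence[Sequence[float]]) -> List[List[float]]:
    """Pearson correlation matrix of predictor columns (one list per column)."""
    centred = []
    for col in cols:
        m = sum(col) / len(col)
        centred.append([v - m for v in col])
    norms = [sqrt(sum(v * v for v in c)) for c in centred]
    return [
        [sum(a * b for a, b in zip(ci, cj)) / (ni * nj) for cj, nj in zip(centred, norms)]
        for ci, ni in zip(centred, norms)
    ]


def det(m: List[List[float]]) -> float:
    """Determinant by Gaussian elimination with partial pivoting (0x0 -> 1)."""
    a = [row[:] for row in m]
    n, sign, result = len(a), 1.0, 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            sign = -sign  # each row swap flips the determinant's sign
        result *= a[col][col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
    return sign * result


def submatrix(m: List[List[float]], idx: Sequence[int]) -> List[List[float]]:
    return [[m[i][j] for j in idx] for i in idx]


def gvif_adjusted(r: List[List[float]], term: Sequence[int]) -> float:
    """GVIF^(1/(2*df)) for the columns in `term` (e.g. one variable's dummies)."""
    rest = [i for i in range(len(r)) if i not in term]
    gvif = det(submatrix(r, term)) * det(submatrix(r, rest)) / det(r)
    return gvif ** (1 / (2 * len(term)))


# Hypothetical predictors: two dummies for a three-level factor, plus a score.
intermediate = [0, 1, 0, 1, 0, 1, 0, 0]
expert = [0, 0, 1, 0, 0, 0, 1, 1]
efficacy = [3.0, 3.5, 4.5, 4.0, 2.5, 3.0, 5.0, 4.0]
r = corr_matrix([intermediate, expert, efficacy])
value = gvif_adjusted(r, [0, 1])  # term = both competence dummies (df = 2)
print(f"GVIF^(1/(2*df)) = {value:.2f}", "problem" if value > 2 else "ok")
```

For a single-column term (df = 1) this reduces to the square root of the ordinary VIF, so the threshold of 2 corresponds to a VIF of 4.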

The multiple regression findings (see Appendix, Table 6) show significant effects of perceived competence as an online searcher, years of online experience, frequency of Internet use, and the five aspects of information-seeking self-efficacy.

The analysis found that individuals with higher question formulation self-efficacy were more likely to rate group 1 (direct search results evaluation), group 2 (supportive chaining), group 4 (results listing), and group 6 (search results grouping and sorting) as important. Those with higher self-efficacy in source identification, on the other hand, tended to give group 3 (supportive visuals) higher scores. Respondents with higher self-efficacy in developing search strategies preferred group 7 (advanced search features). Those with higher self-efficacy in accessing sources rated group 5 (query formulation features) and group 6 (search results grouping and sorting) as more important. Respondents with higher self-efficacy in evaluating information were more likely to rate group 1 (direct search results evaluation) and group 4 (results listing features) higher.

Generally, frequent online system users tended to give higher ratings to most features than infrequent users; for example, they gave statistically significantly higher ratings to the supportive visuals and the search results grouping and sorting features. Respondents with longer online experience also rated the query formulation and the search results grouping and sorting features more highly. This may suggest a mutually reinforcing cycle: with increased exposure to certain system features, users may become more comfortable with those functions. Familiarity can contribute to positive evaluations of information systems and greater use of those information resources (Barrett et al. 1968; Xie and Joo 2009), which in turn leads to more familiarity. This also suggests that information professionals may want to offer prolonged rather than one-time training to reluctant users of academic databases, so that they can progressively gain familiarity with advanced features over time (Kim and Sin 2011).

On the other hand, other things being equal, competence in online searching showed negative relationships with many feature ratings. Expert searchers were significantly more likely to rate direct search results evaluation as less important. This may be because expert searchers are more confident in their own ability to judge relevance using a host of contextual cues, and so feel less need to rely on basic features such as links to a document's table of contents. Recently, many academic information retrieval systems have moved towards simpler search interfaces. Educators and information professionals have raised concerns that this may lead to a dumbing down of search functionality that could adversely affect advanced or professional users (Howard and Wiebrands 2011). How to enhance the customisability of information systems, so that they effectively support users with varying experience, skills, and expectations, is an area that needs considerable attention.

Sex was not significant in the multivariate analysis. This is in line with the view that ascribed characteristics such as gender are less crucial in information behaviour once perceptual and behavioural factors are taken into account (Zweizig and Dervin 1977). Unlike ascribed characteristics, perceptual and behavioural factors can be shaped over time. Their stronger influence here therefore suggests that information professionals and system designers have considerable room to foster effective individual information behaviour through system design and user education.

Discussion

In the development of information systems, system testing often involves only a small number of participants in an observational study. The current study shows the usefulness of survey research in collecting the views of a larger sample of actual users. User surveys can complement usability testing and provide a better understanding of diverse individuals' subjective needs. The study found that most participants, including experienced users, rated features not related to topicality rather highly. This suggests a need for system designers to move beyond system relevance and incorporate more features that support subjective relevance judgment.

In addition, the highest-rated system features belong to stage 1 (before executing the search) and stage 2 (executing an action). Stage 3 features, which support evaluation, were rated as less crucial. Kuhlthau's information search process model helps shed light on these findings. The model posits that individuals feel more uncertainty and confusion at the beginning of the search process, such as in the initiation or exploration stages. Similarly, at the sub-task level, users may feel unsure at the beginning of the process. This uncertainty may create a need for stronger support from information systems, which would translate into high scores for features at sub-task stages 1 and 2. System designers may want to explore ways to provide more features that support the earlier stages of the search process.

Previous studies have noted the importance of domain knowledge in effective searching, and others have tested the influence of computer skills. This study explored a different angle and found that information literacy skills, such as question formulation and evaluation of information, were significant factors in the choice of preferred features. When creating information delivery systems, developers may want to take into account users' varying information literacy skills. Specifically, individuals with lower information-seeking self-efficacy and skills may require more attention. This is especially important because these users may become frustrated with information systems quite quickly (Kim and Sin 2007), which can lead them to use these resources less.

The R² values of the multiple regression analyses ranged from .10 to .19. That is, the factors investigated here explained about 10% to 19% of the variance in preference for system features. This suggests that future studies interested in predicting feature preference may want to include additional variables. These include: task characteristics such as task type and complexity (Vakkari 1999); respondents' domain knowledge and interest in the topic (Ruthven et al. 2007); individual characteristics such as affective, cognitive and learning styles (Ford et al. 2001); and the fit or interaction between individual style and tasks (Kim and Allen 2002).
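To make the interpretation of these figures concrete, the standard definition of the coefficient of determination for a regression with an intercept is given below. The notation (y_i for an observed feature rating, ŷ_i for the model's predicted rating, ȳ for the mean rating) is introduced here for illustration and is not taken from the study itself.

```latex
R^2 = 1 - \frac{SS_{\mathrm{res}}}{SS_{\mathrm{tot}}}
    = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}

% Worked example at the upper end of the reported range:
R^2 = .19 \;\Rightarrow\; \frac{SS_{\mathrm{res}}}{SS_{\mathrm{tot}}} = 1 - .19 = .81
```

In other words, even for the best-fitting model, roughly 81% of the variation in respondents' feature ratings remains unexplained by the predictors, which is why additional variables are worth considering.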

This study used a non-probability sample of participants in a specific subject domain. It focused on testing relationships among variables within this sample and was not aimed at generalising to a population. Future studies may use random sampling of different populations to test the effect of disciplinary differences. Also worth pursuing is research on design features that support subjective relevance judgment in everyday-life information seeking. In terms of data collection methods, larger-scale focus groups and observation would provide additional rich data on the reasons behind user preferences for specific features. New studies may also ask respondents to group the features and map each feature to specific types of subjective relevance. Standardised information literacy assessment instruments can be employed to complement measures of information searching self-efficacy. Additionally, the features elicited from respondents can be incorporated into an information delivery system prototype. Further experiments can then be conducted to test the extent to which these features help users make subjective relevance judgments.

In conclusion, the study findings suggest that sensitivity to user differences, including differences in information-seeking self-efficacy, can assist in the development of more relevant retrieval systems. With a strong research tradition in studying complex and subjective human behaviour and the fluidity of the information search process, the information behaviour field has much to contribute to more user-centred and context-aware information system design.

Acknowledgements

The authors would like to thank the following students who conducted the user study: Lindsay Goile, Pei-Chee Law, Seo-Lay Wee & Sze-Hwee Poh, and the students who participated in the focus group and the survey. We would also like to thank the anonymous reviewers and the participants of the ISIC 2012 conference for their helpful comments.

About the authors

Yin-Leng Theng is the Associate Chair (Research) and an Associate Professor in the Wee Kim Wee School of Communication and Information, Nanyang Technological University. She received her Bachelor of Science degree from the National University of Singapore and her PhD from Middlesex University. She can be contacted at: TYLTHENG@ntu.edu.sg.

Sei-Ching Joanna Sin is an Assistant Professor in the Wee Kim Wee School of Communication and Information, Nanyang Technological University. She received her Bachelor of Social Science degree from The Chinese University of Hong Kong and her PhD in Library and Information Studies from the University of Wisconsin-Madison. She can be contacted at: joanna.sin@ntu.edu.sg.