Knowledge management, codification and tacit knowledge

Chris Kimble

Euromed Management, 13288 Marseille cedex 9, France, and CREGOR/MRM, Université Montpellier II, 34095 Montpellier cedex 5, France

Introduction

This article returns to a theme addressed in the special issue of Information Research on knowledge management: the feasibility of knowledge management and in particular the problem of dealing with tacit knowledge in knowledge management. In keeping with the tone of that earlier edition (sub-titled 'Knowledge management - the Emperor's new clothes?') we offer an agnostic's view of knowledge management. We do so by developing some of the themes from an article by Miller (2002) entitled 'Information has no intrinsic meaning'. In his article Miller argues that knowledge results from the uniquely human capacity of attributing meanings to the messages we receive, that is, we each create our individual versions of knowledge.

In this article, we draw in particular on the works of economists who, within their discipline, have traditionally taken a somewhat different approach. For example, Ancori et al. observe,

'To be treated as an economic good, knowledge must be put in a form that allows it to circulate and be exchanged. The main transformation investigated by economists is the transformation of knowledge into information, i.e. the codification of knowledge. The process of codification allows them to treat knowledge-reduced-to-information according to the standard tools of economics.' (Ancori et al. 2000: 255-256)

Thus while Miller (2002) argues that information has no intrinsic meaning and the same piece of information can result in different instances of knowledge, economists such as Ancori et al. argue that information can have an economic meaning and, if codified, different instances of knowledge can be treated as though they were pieces of information.

The first part of the article presents a conceptual framework, based on the work of Shannon and Weaver (1949), which is used to discuss information and knowledge, avoiding the issue of tacit knowledge altogether. This view emphasises the importance of communication, an area that is often neglected or treated as unproblematic in the literature on knowledge management. It treats the semantic content of the message itself as irrelevant and, like Miller's article, leaves the problem of interpreting the semantics of a message to human beings.

Although useful, Shannon and Weaver's ideas are not universally applicable. The next part of the article examines the limits of their applicability and explores some alternative views of knowledge. It begins by looking at the traditional definition of knowledge as justified true belief that underpins the work of many economists and is largely consistent with Shannon and Weaver's theory. This is followed by an examination of the constructivist view of knowledge, which sees truth as, at best, a phenomenon that exists only within the confines of a particular social group. Finally, given the importance of groups to the latter viewpoint, we consider the issue of knowledge as a group phenomenon.

Having laid the foundations for our analysis, we then address the issue of tacit knowledge directly by looking at both Polanyi's (1966) original formulation of the concept and the notion of tacit knowledge popularised by Nonaka (1994). We follow this with an examination of the issue of codification using a framework created by Cowan et al. (2000) which delineates where, in the conceptual terrain between inarticulable tacit and fully codified explicit knowledge, the codification of knowledge might take place.

The final section addresses the question of the desirability and value of codification. It does this firstly by adopting the cost-benefit approach to the issue favoured by economists and then expanding this to consider the strategic, practical and epistemological problems of codification. Finally, the article concludes with some observations on the reasons why knowledge management projects fail to deliver the expected benefits or quickly fall into disuse.

Information and knowledge

Information as a flux

The classic approach of economists is to adopt a view of knowledge that allows it to be considered as a stock that is accumulated through interaction with an information flux.

The idea of an information flux is associated with Shannon and Weaver's (1949) influential mathematical model of communication. The origins of the theory lie with Shannon's work in Bell Laboratories in the 1940s (Shannon 1948). Shannon was primarily concerned with the problem of how to make the optimal use of the capacity provided by the company's telephone lines; the central problem was one of 'reproducing at one point, either exactly or approximately, a message selected at another point' where '... the actual message is one selected from a set of possible messages' (Shannon 1948: 5). According to the theory, the more of a message that was received, the higher would be the probability that the message selected from the set of possible messages was the same as the original.

In the theory, a message is treated as a stream of encoded characters transmitted from a source to a destination via a communication channel. The process begins with the sender who creates a message, which is then encoded and translated into a signal that is transmitted along the communication channel to a receiver, which decodes the message and reconstitutes it for a recipient. In principle, as long as a stable, shared syntax for encoding/decoding exists, the accuracy of the message is guaranteed. The semantic content of the message, however, is irrelevant to this process. As Shannon noted, 'Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem' (Shannon 1948: 5).
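
The sender-channel-receiver sequence described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the four-symbol codebook, the noiseless channel and the function names are hypothetical and are not taken from Shannon's paper. The point it makes is that the round trip succeeds purely because both ends share the same syntax; nothing in the code refers to what the message means.

```python
# Hypothetical shared codebook: the "stable, shared syntax" of the model.
CODEBOOK = {"A": "00", "B": "01", "C": "10", "D": "11"}
DECODEBOOK = {bits: symbol for symbol, bits in CODEBOOK.items()}


def encode(message: str) -> str:
    """Sender side: translate each character into its fixed-length code."""
    return "".join(CODEBOOK[ch] for ch in message)


def transmit(signal: str) -> str:
    """A noiseless channel (an assumption): the signal arrives unchanged."""
    return signal


def decode(signal: str) -> str:
    """Receiver side: split the signal into 2-bit codes and look each one up."""
    return "".join(DECODEBOOK[signal[i:i + 2]] for i in range(0, len(signal), 2))


message = "BADCAB"
received = decode(transmit(encode(message)))
```

If the receiver held a different codebook, the same signal would decode without error into a different string, which is one way of seeing why the model depends on the shared syntax rather than on the content.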

In his theory, the term information had a narrow, specific, technical meaning. Information (equivalent to additional coded symbols being received) was simply something that reduced the uncertainty that the correct message would be selected from a set of possible messages; it did not refer to the meaning of the message, i.e. its semantic content.
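
This narrow sense of information can be made concrete with a small sketch. It assumes a hypothetical set of eight equally likely four-symbol messages and measures the remaining uncertainty as the base-2 logarithm of the number of messages still consistent with the symbols received so far; the message set and the function name are illustrative assumptions, not part of Shannon's text.

```python
import math

# Hypothetical set of possible messages, assumed equally likely.
POSSIBLE_MESSAGES = ["0000", "0011", "0101", "0110",
                     "1001", "1010", "1100", "1111"]


def remaining_uncertainty(received_prefix: str, messages=POSSIBLE_MESSAGES) -> float:
    """Bits of uncertainty left about which message was selected.

    Assumes the prefix matches at least one possible message.
    """
    candidates = [m for m in messages if m.startswith(received_prefix)]
    return math.log2(len(candidates))


# Uncertainty after receiving 0, 1, 2, 3 and 4 symbols of the message "0110".
uncertainty_by_step = [remaining_uncertainty("0110"[:n]) for n in range(5)]
```

Each symbol received narrows the set of candidate messages, and the measure falls accordingly. At no point does the calculation refer to what any of the messages mean.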

Despite Shannon's warning that the application of his ideas was not 'a trivial matter of translating his words to a new domain' (Shannon 1956), economists argue that the cumulative effect of the flow of messages in an information flux is to reduce uncertainty, where uncertainty is now defined as, '… the difference between the amount of information required to perform the task and the amount of information already possessed by the organization' (Galbraith quoted in Daft and Lengel 1986: 556). In other words, for an economist, the effect of an information flux is to add, in a cumulative fashion, to the sum of what is already known.

The context dependent nature of information

For a piece of information to be useful, one must know the context in which it will be used; yet, despite all of the advances in information technology, selecting and organizing relevant information is still a major problem. One explanation for this might be the difficulty of dealing with the range of contexts in which the information could be used. However, as Cohendet and Steinmueller point out (2000: 195), this issue could be dealt with simply by encoding the information about how it should be used into the message itself. Nevertheless, the problem continues to exist. Clearly, there is more to its solution than is implied by the above analysis.

Duguid (2005) points to an underlying problem with this approach. To encode the knowledge about how to use a piece of information into the message itself means that the message must either be entirely self-contained or have access to some other source of information, a 'codebook', that will make its meaning clear. As he notes, such an argument runs the risk of becoming trapped between circularity (with codebooks explaining themselves) and infinite regress (with codebooks explaining codebooks).

If, as implied above, it is not always possible to encode everything required to decode a message into the message itself, how can meaningful communication be achieved? In the case of human agents the answer is simple: as Miller (2002) suggests, it is the knowledge held within the individual that is used to impart meaning to the message. Human beings are able to learn how to assign meaning to events without needing to refer to a set of pre-programmed instructions. The implication of this is that, in the case of human agents, the decoding of the message needs to take into account not only the context of use, but also the knowledge (cognitive context) of the receiver.

Contrasting concepts of knowledge

Although Shannon and Weaver's ideas are now more than 60 years old, they remain influential. As Kogut and Zander (1996) note,

'It is not hard to see its applicability to understanding communication as the problem of people knowing the commands and sharing common notions of coding, of the costs and reliability of various channels … [this] is especially appealing in an age in which machine manipulation of symbols has proven to be such a powerful aid to human intelligence.' (Kogut and Zander 1996: 509)

However, although useful as a model of information processing, their ideas are not always applicable. For Carlile (2002), this point is reached when, 'the problem shifts from one of processing more information to understanding … novel conditions or new knowledge that lies outside the current syntax' (Carlile 2002: 444). Treating context dependent knowledge as though it were context independent, while legitimate in some circumstances, is not in others. To examine what those circumstances are, we need to look at the notion of knowledge more closely.

Knowledge as justified true belief

The idea of knowledge as justified true belief is often attributed to Plato. Although there has been much philosophical debate about the validity of this definition (Gettier 1963), it remains one of the most frequently cited definitions of knowledge and acts as the starting point for our discussion.

According to Ancori et al. (2000), most traditional economic theory is based on a realist ontology and a rationalist epistemology similar to that found in the physical sciences. Taken in its strongest form, this position would put forward the argument that there is a knowable reality that is both invariant and universal, that is, it is true at all times and in all places. Consequently, given a set of axioms, new knowledge can be deduced simply through the application of logic and reasoning. As in Shannon and Weaver's theory, the distinction between information and knowledge dissolves, and the problem of how to deal with knowledge at the level of a group, referred to below, all but disappears.

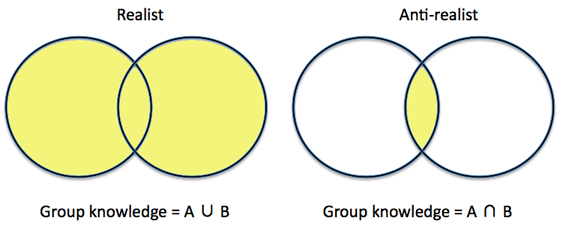

However, in contrast to Shannon and Weaver, linking knowledge to true belief makes the semantics of the message relevant, as this view now implies there is indeed a correct way to interpret information. It also implies that knowledge does not need to be justified by reference to sensory perceptions, and that there is no necessary connection between knowledge and action. If these assumptions are accepted, then learning (i.e. the creation of new knowledge) simply becomes the accumulation of one item of (true) information upon another. In other words, new knowledge is created through the combination (i.e. the union) of existing sets of information (Figure 1). It is this concept that underpins many of the cumulative models of knowledge found in the knowledge management literature, such as Ackoff's (1989) typology of the progression from data to wisdom.

A variant of this viewpoint takes the same realist ontological stance of a universal and unchanging external reality, but relaxes the assumptions concerning truth claims by adopting an empiricist epistemology. Here the argument is that although there is an external truth, it is not possible to apprehend it directly. Instead, it can only be approached by formulating hypotheses and undertaking a series of controlled experiments to build, incrementally, a more accurate representation of reality. This view, however, has also been the subject of intense philosophical debate, with authors such as Popper (1959) and Kuhn (1962) pointing to the problem of separating the outcome of experiments from the process that generated them.

The constructivist's concept of knowledge

For an alternative view of knowledge, we return to the situation outlined in the section on the context dependent nature of information and deal with the case where human agents are involved and everything required to decode a message has not, for whatever reason, been incorporated into the message itself.

The key change between this and the previous position is a shift from realism to what is sometimes termed anti-realism (Chalmers 2009). For anti-realists, a stable, uncontested external reality does not exist. Stated in its extreme form, the anti-realist position poses many problems and more often reality is defined in terms of what is accepted as truth within the bounds of a particular group or community. Following the work of Berger and Luckmann (1966), this is sometimes termed the constructivist or social constructivist approach.

In a constructivist approach, an action or message has no fixed meaning; such meaning that it does have only exists within the shared perception of a group of actors that hold common epistemic beliefs. Thus, the actors and their ability to recognise, interpret or ignore events become central to the way in which they organize and reorganize their representations of the world. The notion of a group of actors sharing cognitive structures that exist outside of themselves as individuals is problematic and will be dealt with shortly, but for the moment let us consider how this changes the justified true belief version of knowledge outlined above.

Firstly, knowledge now becomes both an input and an output. Existing knowledge, shaped by membership of a particular group and held in an individual's memory, acts as a filter to give meaning to the incoming information flux (input) and new knowledge is generated as meanings are attributed to events (output). Clearly, the assumption that knowledge does not need to be justified by reference to the perceptions of the recipient no longer holds, even if those perceptions are themselves influenced by membership of some broader community.

Secondly, as we shall see below, the assumption that there is no necessary connection between knowledge and action also no longer holds. Many of the ideas put forward to explain how shared cognitive structures provide the epistemic glue needed to make the anti-realist position tenable rely on mechanisms such as learning by doing. For example, Cook and Brown (1999) argue that knowing something, as opposed to the passive act of simply having knowledge, is only ever realised in the course of applying that knowledge.

Knowledge at the level of groups and communities

Observing that psychologists tend to focus on individual cognition in laboratory settings, Walsh (1995) claims the notion of group-level cognition challenges the Western orthodoxy of the individual as an independent locus of social action; a sentiment later echoed by Ancori et al. (2000) in relation to economists. Although the situation has changed somewhat in the intervening years, dealing with the concept of knowledge at the group-level still poses a number of problems.

The notion of knowledge held within an individual is relatively easy to grasp; however, the idea of knowledge that is somehow shared between individuals in a group is more difficult to explain. In his exhaustive literature review, Walsh (1995) provides numerous examples of studies that demonstrate the existence of what he terms 'supra-individual' knowledge structures: mental templates that give complex information environments form and meaning. However, the issue here is not the existence of such structures, nor particularly what those structures are, but what they mean for our understanding of knowledge and our ability to manage it.

As indicated in the section on the constructivist's concept of knowledge, much of the work in this area has focused on communities of individuals who share a common worldview. Such communities are seen as the key to providing the cognitive common ground needed if one adopts the constructivist's anti-realist stance. Although the terms that are used have nuances that we do not explore here, epistemic communities and communities of practice are frequently cited as examples of communities that perform this role (Creplet et al. 2001; Iverson and McPhee 2002).

A common feature of both epistemic communities and communities of practice is that they rely heavily on the notion of knowledge being created through a process of learning by doing, an idea that places the link between knowledge and action at centre stage. Both emphasise how boundaries, created through a process of socialisation and centred on some communal activity, lead to a shared worldview that acts as a foundation for communication and collective action (Wenger 1996; Håkanson 2010). Such groups not only create the basis for a common understanding of what is being done and why, they frequently create their own language to express this understanding to others in the group (Lesser and Storck 2001; Murillo 2008).

Using Walsh's terminology, these groups act as supra-individual knowledge structures, not only in the sense of them being a shared interpretive framework for individuals, but also in the sense of them acting as a form of collective memory (Nelson and Winter 1982; Barley 1986; Lave and Wenger 1991). If these ideas are accepted, then our view of knowledge at the supra-individual level becomes closer to an intersection of the sets of knowledge held by individuals than the union of sets portrayed in the section on knowledge as justified true belief (Figure 1).
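
The contrast between the two set-based views can be shown with a minimal sketch. The three individuals and the items of knowledge attributed to them are invented purely for illustration; only the use of union for the cumulative view and intersection for the shared view follows the argument above.

```python
# Hypothetical individuals and the items of knowledge each one holds.
alice = {"pricing rules", "client history", "lab protocol"}
bob = {"lab protocol", "client history", "supplier contacts"}
carol = {"client history", "lab protocol", "tax code"}

individuals = [alice, bob, carol]

# Justified true belief view: group knowledge accumulates as a union.
cumulative_view = set().union(*individuals)

# Constructivist view: group knowledge is closer to what all members share.
shared_view = set.intersection(*individuals)
```

Here the cumulative view contains five items, while the shared view contains only the two items that every member holds. Adding a new member can only enlarge the first set, but may well shrink the second.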

The problem with tacit knowledge

Until this point, we have looked at information and knowledge without addressing one of the core themes of this article: tacit knowledge. The discussion of tacit knowledge was left until now because it was felt that it would be more useful to explore some of the fundamental ideas behind knowledge and knowledge management before exploring this topic in any detail.

According to Cowan et al., the notion of tacit knowledge has, 'drifted far from its original epistemological and psychological moorings, has become unproductively amorphous [and] now obscures more than it clarifies' (Cowan et al. 2000: 213). Tacit knowledge is usually described as knowledge that is either (a) inarticulable, that is, it is impossible to describe in propositional terms, or (b) implicit, that is, articulable but only with some difficulty. It is usually seen as being acquired through direct personal experience of something. Because it is hidden from the outside observer, and possibly even from the holder of the knowledge, it is also seen as being difficult to identify and measure.

In spite of this, tacit knowledge has become an important topic of discussion in the literature. For example, Easterby-Smith and Prieto (2008) observe, 'Many of the authors in this field consider that the primary challenge [for Knowledge Management] lies in understanding the nature and processes of tacit knowledge as opposed to explicit knowledge.' (Easterby-Smith and Prieto 2008: 239). In particular, tacit knowledge has been seen by many as a source of sustainable competitive advantage (Barney 1991; Grant 1996b) and innovation (Kogut and Zander 1992; Leonard and Sensiper 1998).

The nature of tacit knowledge continues to be the subject of much critical attention (Gourlay 2006b). We will not explore this in detail here, but instead focus on two widely cited but different views of tacit knowledge. The first view is based on Polanyi's original (1966) notion of the tacit dimension to knowledge. The second, and now probably the dominant view, is attributed to Nonaka and focuses primarily on the difference between tacit and explicit knowledge.

Tacit knowledge and Polanyi

First, Polanyi was more concerned with knowing as a process than with the particular kind of knowledge that resulted from that process; accordingly, he wrote more about tacit knowing than about tacit knowledge. For Polanyi tacit knowledge was a dimension of knowledge that was located in the mind of an individual; there could be no such thing as objective explicit knowledge, which could exist independently of an individual's tacit knowing: 'The ideal of a strictly explicit knowledge is indeed self-contradictory; deprived of their tacit coefficients, all spoken words, all formulae, all maps and graphs, are strictly meaningless.' (Polanyi 1969: 195).

Many of Polanyi's ideas originated from his opposition to the positivist view of science and his concern to make clear the role that the personal commitments and beliefs of scientists play in the practice of science. As Spender notes, 'for Polanyi science was a process of explicating the tacit intuitive understanding that was driven by the subconscious learning of the focused scientist' (Spender 1996: 50). However, although absolute objectivity can never be attained, Polanyi did believe that our tacit awareness connects us to an external reality.

For Polanyi, the tacit dimension to knowledge is inaccessible to the conscious mind. He used examples such as a skilful performer to illustrate this, arguing that the knowledge that underlies their performance is largely tacit in the sense that they would find it difficult or impossible to articulate what they were doing or why. However, although such knowledge may be difficult to articulate in some circumstances, it may be less difficult in others.

Polanyi makes a distinction between focal and subsidiary awareness. He illustrates this using the example of a carpenter hammering a nail into a piece of wood. The carpenter gives his attention to both the nail and hammer, but in a different way. His objective is to use the hammer to drive the nail into the wood; consequently, his attention is focused on what is happening to the nail, although he retains a subsidiary awareness of the hammer, which is used to guide his hand.

In another of Polanyi's examples, a traveller first makes sense of his experiences using his own innate knowledge. He then composes a written (codified) account of his journey, which he sends to his friend. Upon reception, the traveller's friend interprets the letter using his own knowledge and experience to 'decode' the message. Thus, this is not a simple process of codification and decoding but the intermingling of three distinct sets of knowledge employed in three different contexts: the knowledge of the traveller, the knowledge associated with producing and reading written documents, and the knowledge held by the traveller's friend.

For Polanyi, knowing is the act of integrating tacit and explicit knowledge. Although it is possible to make certain aspects of knowledge explicit and encode it; something can only be known when this explicit component is combined with the tacit in the mind of the receiver. In this sense, knowledge is best termed a duality (Hildreth and Kimble 2002).

Tacit knowledge and Nonaka

A different view of tacit knowledge can be found in much of the current literature on knowledge management. This view largely depends on the degree to which knowledge is articulable and presents tacit and explicit knowledge as two distinctive categories of knowledge. The intellectual basis for this view is usually attributed to Nonaka's reading of Polanyi.

According to Nonaka, 'Polanyi classified human knowledge into two categories. "Explicit" or codified knowledge refers to knowledge that is transmittable in formal, systematic language. On the other hand, "tacit" knowledge has a personal quality, which makes it hard to formalize and communicate.' (Nonaka 1994: 16). Although this may not be an accurate reflection of Polanyi's views, and was later revised (Nonaka and von Krogh 2009), this hard distinction between tacit and explicit has since been adopted by numerous authors (Conner and Prahalad 1996; O'Dell and Grayson 1998; Ipe 2003; Feghali and El-Den 2008; Swart and Harvey 2011).

Nonaka first presented his ideas about how knowledge was used in organizations in 1991 and subsequently extended and developed them into what he termed an organizational knowledge creation theory (Nonaka 1991, 1994; Nonaka and Takeuchi 1995). The concept of knowledge conversion, converting one form of knowledge into another, and that of the SECI model, which describes the mechanism through which this conversion takes place, are the key concepts in Nonaka's theory.

Schematically the SECI model consists of a two by two matrix with each cell in the matrix, Socialisation, Externalisation, Combination and Internalisation, representing one phase in a cycle of knowledge conversion. In the socialisation phase, an apprentice acquires tacit knowledge from an expert through working with them on a continuous basis. A transfer of tacit knowledge takes place. In the externalisation phase, the expert (previously the apprentice) is now able to articulate or externalise their freshly acquired knowledge, making it available for others to use. The newly acquired tacit knowledge is transformed into explicit knowledge. In the combination phase, guided by the organization's goals, this knowledge is combined with other explicit knowledge, either at the individual or at the collective level. In the final internalisation phase, the explicit knowledge created in the previous stage is internalised and is transformed into tacit knowledge again. Nonaka argues that this process of conversion and 'amplification' of knowledge can be repeated at different levels within an organization, moving in a growing spiral from an individual to a group level and later on to an organizational or inter-organizational level.
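
The cycle described above can be written down as a simple data structure. The sketch below is only a schematic reading of the four phases as given in this section, with each phase recorded as a conversion from one form of knowledge to another; the variable names are hypothetical and nothing here is taken from Nonaka's own formalisation.

```python
# Each phase as (name, form of knowledge converted from, form converted to).
SECI_PHASES = [
    ("Socialisation", "tacit", "tacit"),
    ("Externalisation", "tacit", "explicit"),
    ("Combination", "explicit", "explicit"),
    ("Internalisation", "explicit", "tacit"),
]

# The phases form a cycle if each phase's output is the next phase's input,
# with the last phase feeding back into the first.
is_closed_cycle = all(
    SECI_PHASES[i][2] == SECI_PHASES[(i + 1) % len(SECI_PHASES)][1]
    for i in range(len(SECI_PHASES))
)

# The four cells of the two by two matrix, keyed by (from, to).
matrix = {(src, dst): name for name, src, dst in SECI_PHASES}
```

Written this way, the model's central assumption is visible in the data itself: tacit and explicit are treated as two distinct values that knowledge can move between.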

Effectively, this view presents knowledge as a dichotomy (Hildreth and Kimble 2002) where knowledge is defined as being either tacit or explicit. This view lends itself easily to the notion of the management and codification of knowledge (Gourlay 2006a). For example, if knowledge can be separated into its components it becomes easier to conceive of knowledge as an object that can be owned, stored, manipulated and exchanged (Schultze and Stabell 2004). It also encourages the juxtaposition of the terms tacit and codified knowledge, so that tacit becomes a label for anything that is uncodified (Cowan et al. 2000), making the process of knowledge conversion between tacit and explicit appear to be a simple, one step, process.

In his later work, Nonaka attempted to clarify his views by asserting that, in fact, tacit and explicit knowledge existed on a continuum where, 'The notion of "continuum" refers to knowledge ranging from tacit to explicit and vice versa.' (Nonaka and von Krogh 2009: 637). Although this might be conceptually different from the popular view of a hard distinction between two different types of knowledge, it remains fundamentally different from Polanyi's view of knowledge as a tacit/explicit duality where tacit and explicit are inextricably intertwined.

The limits of codification

Although Shannon and Weaver's theories do not deal directly with the problem of managing knowledge, their notion of coding and decoding does provide one approach to dealing with it. What follows below is a description of an approach to dealing with the problem of tacit knowledge that starts from the seemingly more straightforward approach of codification. This is largely based on Cowan et al.'s (2000) topography of knowledge transaction activities.

Cowan et al.'s approach to the issue of tacitness is that of a 'sceptical economist'. By this, they do not necessarily mean that they are sceptical about the existence of tacit knowledge, nor that they are sceptical about its importance for economics, but principally that they have become concerned about the way that the term is used as a piece of fashionable economic jargon. Their goal was to restrict the realm of the tacit and to,

'critically reconsider the ways in which the concepts of tacitness and codification have come to be employed by economists, and to develop a more coherent re-conceptualization of these aspects of knowledge production and distribution activities.' (Cowan et al. 2000: 213)

The focus of their work is on, 'where various knowledge transactions or activities take place, rather than where knowledge of different sorts may be said to reside.' (Cowan et al. 2000: 229).

Since its publication, Cowan et al.'s work has been the subject of criticism (Duguid 2005; Balconi et al. 2007); however, we have chosen to use it here as it highlights the circumstances in which knowledge might be codified and, in doing so, throws the topic of tacit knowledge into sharper relief.

Cowan et al.'s topography

Perhaps surprisingly, given that Cowan et al. are economists, certain aspects of their approach have more in common with the social constructivist view outlined in the section on the constructivist's concept of knowledge than the realist or rationalist view presented in the section on knowledge as justified true belief. Most of their analysis centres on the availability of a codebook. For Cowan et al., a codebook is an 'authorized dictionary' used to decode the content of messages (e.g., stored documents). The codes it contains are not a representation of an underlying truth but a set of standards or rules that have been endorsed by an authority or gained authority through common consent. They concede that some of the codes may come from specialised knowledge that has been acquired elsewhere, and may itself be tacit. They also accept that not everybody will have the knowledge required to interpret the codes properly. Thus, what is codified for one person may be tacit for another and completely incomprehensible for a third.

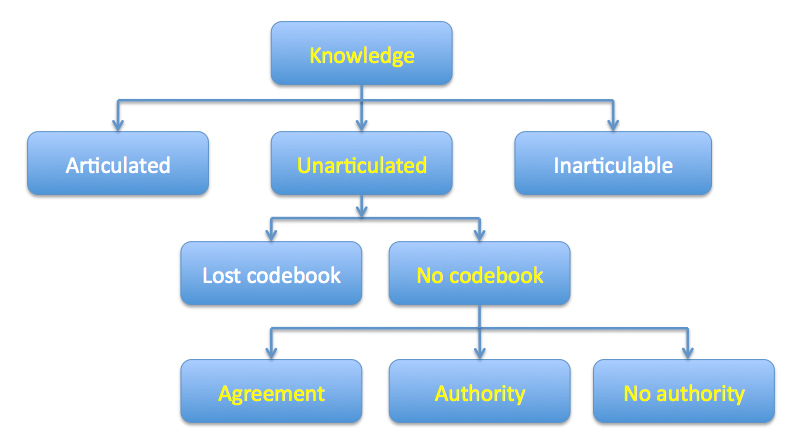

Their topography starts with a three-way split between knowledge that has already been articulated, and is therefore already codified; knowledge that is as yet unarticulated but in principle could be articulated, and knowledge that is simply inarticulable (Figure 2).

They do not address the issue of tacit knowledge, that is, knowledge that is inarticulable, directly. Their focus is on codification and the use of codebooks; they simply suggest that the third part of the initial branching that corresponds to this should be set aside as 'not very interesting' (Cowan et al. 2000: 230). The branch, labelled articulated, is dealt with in a similar fashion. Cowan et al. argue that if the knowledge has already been articulated, is recorded and is referred to by the group, then a codebook must exist and must be in use; effectively, the knowledge is already codified and of no further interest.

This leaves only the unarticulated branch. One of Cowan et al.'s primary concerns was to address the almost mythical status that tacit knowledge had acquired. Their objectives were, first, to explore the option of the codification of tacit knowledge more deeply, and secondly to highlight what they saw as the widespread error of assuming that un-codified knowledge was equivalent to tacit knowledge.

For Cowan et al., the unarticulated branch represents a situation where no codebook is apparent. They split this into two sub-cases where,

'In one, knowledge is tacit in the normal sense - it has not been recorded either in word or artifact, so no codebook exists. In the other, knowledge may have been recorded, so a codebook exists, but this book may not be referred to by members of the group - or, if it is, references are so rare as to be indiscernible to an outside observer.' (Cowan et al. 2000: 231)

Thus, Cowan et al. open up a new possibility, one where knowledge has been recorded and so has, at some point, been codified, but where the codebook has been lost so that to a casual observer it appears as if no codebook exists. They cite as an example a group of scientific researchers who work on a topic that is already highly codified, but where the members of the group know the codebook so well that it has become internalised: 'paradoxically, its existence and contents are matters left tacit among the group unless some dispute or memory problem arises' (Cowan et al. 2000: 232). As Winograd and Flores (1986: 149) put it, it has become lost in the depths of obviousness. Cowan et al. argue that many of the studies that show the crucial role of tacit knowledge are in fact examples of this, where researchers have 'placed undue reliance upon an overly casual observational test' (Cowan et al. 2000: 237).

The other branch of this tree, where no codebook exists, poses a different problem. Remember that we are dealing with a situation where, in principle, knowledge is articulable. Cowan et al. suggest that this situation can be dealt with by a three-way split, where the branch that is followed hinges on the presence or absence of disputes.

The first branch represents a situation where there is agreement about what is being done and the reasons for doing it. Consequently, no disputes occur that might trigger the demand for a more clearly stated set of goals or procedures and the group functions on the basis of a tacit agreement. Cowan et al. claim that it is common to find examples of this situation in large organizations.

The remaining two branches occur when there is a dispute and no codebook exists to resolve it; the difference between them hinges on how the dispute is settled. The branch labelled authority concerns the situation where there is some form of procedural authority that can arbitrate between the contending parties and create a suitable codebook. An example of this might be where there is an umbrella organization or association that can act as a broker between the disputing parties (Kimble et al. 2010). The second branch concerns the situation where no authority exists. Here the basis for the resolution of disputes is trickier. Cowan et al. suggest that the resolution of this type of dispute often relies on 'personal (and organizational) gurus of one shape or another, including the "new age" cult leaders in whom procedural and personal authority over the conduct of group affairs are fused' (Cowan et al. 2000: 234-235).
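The branching described above can be summarised as a small decision procedure. The following Python sketch is only an illustration of the topography as it is paraphrased in this section; the function name, the parameter names and the wording of the labels are our own assumptions and do not appear in Cowan et al. (2000).

```python
from enum import Enum


class Articulation(Enum):
    """The initial three-way split of the topography (names are illustrative)."""
    ARTICULATED = "articulated"
    UNARTICULATED = "unarticulated"
    INARTICULABLE = "inarticulable"


def classify(articulation, codebook_exists=False, dispute=False, authority=False):
    """Return a label for the branch of the topography that a situation falls on.

    All parameter names and labels are hypothetical shorthand for the
    distinctions described in the text above.
    """
    if articulation is Articulation.ARTICULATED:
        # Recorded and referred to by the group: a codebook exists and is in use.
        return "codified"
    if articulation is Articulation.INARTICULABLE:
        # Set aside by Cowan et al. as 'not very interesting'.
        return "set aside"
    # Unarticulated branch: no codebook is apparent to an observer.
    if codebook_exists:
        # Recorded at some point, but no longer visibly referred to.
        return "lost codebook"
    if not dispute:
        # Agreement on what is done and why: the group works on tacit agreement.
        return "tacit agreement"
    if authority:
        # A procedural authority arbitrates and can create a codebook.
        return "procedural authority"
    # A dispute with no authority to settle it.
    return "no authority"
```

For example, `classify(Articulation.UNARTICULATED, codebook_exists=True)` gives the 'lost codebook' case, which is the one Cowan et al. argue is most often mistaken for tacit knowledge.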

To codify or not?

While it is certainly possible to argue with points of detail and interpretation in Cowan et al.'s topography, it does offer a pragmatic approach to answering the question about what should be codified.

'Any individual or group of agents makes decisions about what kind of knowledge activity to pursue and how it will be carried on. Should the output be codified or remain uncodified? … For an economist, there is a simple one-line answer: the choices will depend on the perceived costs and benefits.' (Cowan et al. 2000: 231)

As indicated previously, for an economist, this means focusing exclusively on knowledge that is capable of being codified and converted into information. Below we briefly outline what might be expected from this type of cost-benefit analysis before expanding the discussion to look at the issue of codification from other perspectives. In particular, we will examine the relevance of the cost-benefit approach in the light of the different views of tacit knowledge examined in the section on contrasting concepts of knowledge.

The benefits of codification

For the most part, economists tend to treat the benefits of codification as a given, although the argument from the low or negligible marginal cost of transmitting information is implicit in much of the work. Cohendet and Steinmueller (2000: 202-203) list four benefits of codification.

- It gives information the properties of a standard commodity in that it can be bought and sold and used to signal the desirability of entering into some form of transaction.

- It makes it possible to exploit some of the non-standard features of information as a commodity, such as its stock not being reduced by its sale and its low marginal cost of reproduction.

- It allows the modularization of bodies of knowledge, which facilitates specialisation and allows firms to acquire knowledge at a fixed cost, which in turn facilitates the outsourcing of activities.

- It directly affects knowledge creation, innovation and economic growth, which has the potential to alter the rate and direction of knowledge generation and distribution dramatically.

Cowan et al.'s discussion of the benefits of codification is equally sparse, their principal contribution being to outline five situations where they claim codification would be particularly beneficial. These are in activities that involve the transfer, recombination, description, memorisation or adaptation of knowledge (Cowan et al. 2000: 243-245).

Similar views on capturing knowledge can be found in the early work on knowledge management, such as Davenport et al.'s (1998) call for a 'yellow pages' database of expertise or O'Dell and Grayson's (1998) call for the identification and transfer of best practices. The work on technology-based organizational memories (Walsh and Ungson 1991; Stein and Zwass 1995) is also based on the 'knowing what we know' approach to knowledge management. On a slightly different tack, DeLong (2004) urges companies to find ways to capture and store the knowledge of their employees to deal with the threat of an ageing workforce. However, in each case, the benefit rests on the same argument: that if knowledge is of value to a company, it should be captured, stored and shared, and codification represents the most cost-effective way to do this.

The costs of codification

While Cowan et al. may have little to say about the benefits of codification, their topography does provide a structure to examine its costs. Perhaps the most obvious cost associated with codification is that of creating the codebook.

If no codebook exists, then one will need to be created; this can involve substantial fixed costs. First, some form of conceptual framework, together with the language needed to describe it, has to be developed. Once this has been done, work can start on producing the codebook itself. The costs of this can be particularly high where there are significant disagreements or differences of opinion.

In the case of an existing codebook, be it either lost or explicit, the costs of producing the codebook are, to a greater or lesser extent, already sunk and codification can be achieved at a much lower marginal cost. Pre-existing standards and a vocabulary of well-defined and commonly understood terms should greatly reduce the time and effort required to produce the final codebook.

However, in both of these cases, the costs of codification do not stop with the production of the codebook. Codifying a new piece of knowledge will add to the content of the existing codebook and will also draw upon those contents. A potentially complex, self-referential situation is created which needs to be managed. This problem can be particularly severe when dealing with a new activity where the contents of the codebook have not yet stabilised. Even with well-established activities, adding new material will introduce new concepts and terminology. Consequently, the cost of maintaining the integrity of an existing codebook will inevitably form part of the on-going costs of codification (Kimble 2013).

Both Cohendet and Steinmueller (2000) and Cowan et al. (2000) note the influence that the mechanisms underlying the construction of a shared context can have on the cost of maintaining a codebook. Cohendet and Steinmueller (2000) suggest that if a situation is relatively stable, for example producing declarative propositions for some physical process, then investments in a codebook should show increasing returns. If, on the other hand, the creation of a shared context relies on constantly shifting social interactions, such as those associated with the building of organizational skills and competencies, then the return on investment is more likely to be constant than increasing.

The desirability of codification

Having looked at the costs and benefits, we now return to the original question, to codify or not to codify? The focus provided by economists (Ancori et al. 2000; Cohendet and Steinmueller 2000; Cowan et al. 2000) tends to be exclusively on codified knowledge and carries with it the implicit assumption that by comparing costs and benefits it will be possible to establish an 'optimum degree of codification'. However, this type of analysis falls short in two areas.

First, it does not consider the strategic implications of codification; secondly, and perhaps more significantly, it places an undue emphasis on the idea of knowledge as a dichotomy. Effectively, it assumes that knowledge can be divided into what is often termed tacit (as yet uncodified) and explicit (already codified) knowledge, and that all that is needed is to decide where the line between the two should be drawn.

Dealing first with the strategic issues, perhaps the most obvious risk is that of making something whose value is based on its scarcity and inimitability easier to share and reproduce. For example, Grant (1996a) claims that,

'Sustainability of competitive advantage therefore requires resources which are idiosyncratic (and therefore scarce), and not easily transferable or replicable. These criteria point to knowledge (tacit knowledge in particular) as the most strategically-important resource which firms possess.' (Grant 1996a: 376)

However, Schultze and Stabell, among others, highlight the apparent contradiction that,

'arises out of knowledge management researchers' recommendations that, in order to manage tacit knowledge, it must be made explicit (e.g., Nonaka and Takeuchi, 1995). However, once the tacit knowledge is explicit, it can be imitated, implying a potential loss of competitive advantage.' (Schultze and Stabell 2004: 551)

We will not examine the risks of codification in any more detail here as they have been the subject of discussion elsewhere. Even Cowan et al. note that codified knowledge can be a potent carrier of history and that excessive codification can lock an organization into obsolete conceptual schemas. Instead, we will focus on the incommensurability of the cost-benefit approach with the view of knowledge as a duality and ask a more fundamental question: is it even possible to draw a line between what should and what should not be codified?

The assumption that underlies much of the preceding discussion is that there is a choice between what can be rendered explicit and what should be left as tacit. However, most of the tacit knowledge that Grant and others refer to is rooted in undocumented 'know how' embedded in the web of relationships that go to make up the firm. Can we treat this type of knowledge in that way?

Many of the examples that Polanyi referred to involved some form of skill or competency where the rules for performing the activity have been pushed into the unconscious, leaving the conscious mind free to focus on the performance itself. For example, a pianist does not have to think about the layout of the piano, or how to use the pedals, and is free to concentrate on reading the music and its interpretation. It is only when the tacit dimension to knowledge, which concerns how to operate a piano, and the explicit dimension, which concerns how to read music, are brought together that the performance is complete. If this point of view of knowledge as a duality is accepted, then it becomes impossible to deal with the two things independently.

Even if this argument is rejected and, following Cowan et al.'s logic, it were possible to extract certain aspects of this form of tacit knowledge and codify them, would this be desirable, or even practical? First, there is every likelihood that the remnants of uncodified, unobserved tacit knowledge would be lost and forgotten. For example, Brown and Duguid (1991) explain how Xerox's early attempts at knowledge management focused on abstract canonical knowledge, the way the company thought the work should be done, and ignored the value of practice-based, non-canonical knowledge, which later turned out to be of vital importance. Similar examples of potentially valuable knowledge being ignored or undervalued can be found elsewhere (Alvesson and Karreman 2001; McKinlay 2002; Thompson 2005).

Secondly, the costs of attempting to maintain a codebook for such knowledge would probably prove to be prohibitive. As we noted earlier, in the section on knowledge at the level of groups and communities, communities often create their own private language, which not only serves as a useful shorthand for members of the community but can also act as a boundary between it and the outside world; in such cases, knowledge really does represent power (Teigland and Wasko 2003; Kimble et al. 2010). Creating a codebook in such circumstances is likely to prove costly, as it will inevitably mean chasing a moving target. As Marshall and Brady (2001) observe in relation to attempts to specify precise and unambiguous meanings for natural languages, 'Linguistic meaning is never complete and final… It is unstable and open to potentially infinite interpretation and reinterpretation in an unending play of substitution' (Marshall and Brady 2001: 101).

Conclusions

The goal of this article was to return to the problem of managing tacit knowledge in knowledge management. In contrast to many of the articles that tackle this topic, we approached it, not from the viewpoint of what is it that is special about tacit knowledge that means it cannot be codified, but from the viewpoint of the sceptical economists (Cowan et al. 2000) who ask what is it about tacit knowledge that cannot be codified?

In taking this approach, we have returned to the theoretical basis for the views of knowledge that appear in the knowledge management literature, and have highlighted the philosophical positions that underpin them. Armed with this knowledge we have critically examined Cowan et al.'s analysis of what they believe is open to codification and drawn attention to its shortcomings.

In this final section we will consider what this means for knowledge management.

Knowledge management or information management?

One of the frequent and potent criticisms levelled at knowledge management is that it is really no more than information management re-badged (Wilson 2002). For example, Offsey (1997) notes that, 'what many software vendors tout as knowledge management systems are only existing information retrieval engines, groupware systems or document management systems with a new marketing tagline' (Offsey 1997: 113).

For those who take the view of the sceptical economists, this is not a criticism at all, as the goal of codifying and storing knowledge is to turn it into information. Perhaps Cowan et al.'s attempts to demystify tacit knowledge have some value here by throwing a more honest, and possibly more realistic, light upon what is often termed knowledge management. If we can be clearer about what we mean when we talk about capturing and storing knowledge, we may be one step closer to a more realistic expectation about what knowledge management and knowledge management systems can do.

Misjudging the costs and benefits of codification

The history of knowledge management systems, particularly large-scale knowledge management projects, contains a number of examples of cost overruns and failure to achieve the expected benefits (Walsham 2001). What is it then that lies at the root of the over-optimistic assumptions that are made about knowledge management?

Clearly, part of the initial growth of knowledge management was driven by commercial gain. Kay, for example, describes the 'vendor feeding frenzy' (Kay 2003: 683), which developed as software vendors attempted to exploit the new and potentially lucrative market for knowledge management. However, the rationale that underpins these assumptions is probably best expressed in terms of Figure 1.

If knowledge can be separated into independent tacit and explicit components, and if these can be specified and constrained to act in such a way that they fulfil the requirements of Cowan et al.'s codebook, then the idea of increasing returns through the cumulative growth of knowledge that comes from the union of individual knowledge sets becomes plausible. However, in most cases, the greatest benefits to an organization come from the construction of a shared cognitive common ground between human agents. As we have seen, in this case, the return on investment is more likely to be constant because the growth of knowledge comes from the intersection of sets of individual knowledge. Although attractive, the idea of a cumulative growth of knowledge, be it Nonaka's 'amplification' of knowledge (Nonaka 1994) or Ackoff's progression from data to wisdom (Ackoff 1989), is most often likely to prove a fallacy.
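The contrast between growth by union and growth by intersection can be made concrete with a toy example. The sketch below assumes, purely for the sake of illustration, that each agent's knowledge can be written down as a set of discrete items, which is precisely the simplification that the codification view relies on; the agents, the items and the function names are all hypothetical.

```python
# Hypothetical agents, each holding a set of discrete 'knowledge items'.
agents = {
    "a": {"pricing", "suppliers", "jargon"},
    "b": {"suppliers", "jargon", "regulation"},
    "c": {"jargon", "logistics", "suppliers"},
}


def pooled_knowledge(knowledge_sets):
    """Union: everything that at least one agent knows (the cumulative view)."""
    return set().union(*knowledge_sets)


def common_ground(knowledge_sets):
    """Intersection: only what every agent knows (the shared context)."""
    knowledge_sets = list(knowledge_sets)
    if not knowledge_sets:
        return set()
    return set.intersection(*knowledge_sets)


def sizes_as_agents_join(agent_sets):
    """Sizes of the union and the intersection as each agent is added in turn."""
    joined = []
    sizes = []
    for knowledge in agent_sets:
        joined.append(knowledge)
        sizes.append((len(pooled_knowledge(joined)), len(common_ground(joined))))
    return sizes
```

In this toy case the union can only grow or stay the same as agents are added, whereas the intersection can only shrink or stay the same. This is one way of reading the claim that building shared common ground does not deliver the cumulative growth that the union view promises.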

If there is a tendency to overestimate the benefits of knowledge management, what then of the costs? As indicated in the preceding discussions, the costs of building and maintaining a codebook, particularly when dealing with a novel or fluid situation, can be high. There are several studies that provide examples of knowledge management systems that are developed, at some cost, and then either fully or partially fall into disuse (Vaast 2007; Ali et al. 2012). The problems of extensibility and scalability due to continual modifications to the internal content and structure of such systems remain a significant technological challenge. The field of ontological engineering in artificial intelligence claims to offer one solution to this, but so far there are few working real-world examples to support this claim. The cost of managing and maintaining systems based on codified knowledge remains significant and recurring (Kimble 2013).

Codification and knowledge as a duality

We have argued that it is more accurate, and perhaps more legitimate, to describe knowledge management systems as information management systems and that doing so might lead to a more realistic expectation of what such systems can achieve. What else might our analysis contribute to our understanding of knowledge management and the management of tacit knowledge?

Cowan et al.'s topography is helpful because it focuses on the issue of codification and avoids the not codified = tacit = uncodifiable trap. It is also useful for providing a framework to help us think about the costs and benefits of codification. However, like Nonaka's SECI model, it runs the risk of allowing Polanyi's distinction between the tacit and explicit dimensions of knowledge to segue into a dichotomy, where knowledge is either tacit or explicit, and from there, to lapse into thinking of knowledge as an object that can be stored and manipulated.

Codification is a valid approach to knowledge management, but it is not universally applicable. As we noted in the section on the desirability of codification, it does not sit well when dealing with the sorts of tacit knowledge that are involved in skills and competencies. In such circumstances, it simply does not make sense to attempt to separate tacit and explicit and deal with them separately, because both are needed to complete the picture. Similarly, we noted that the effect of codifying knowledge can lead to knowledge that has not been codified being discounted as 'not very interesting' (Cowan et al. 2000: 230) and lost. The point of knowledge management, at least in so far as it is used to further a set of organizational goals, is to leverage knowledge; separating out, codifying and storing certain aspects of knowledge runs the risk of doing the opposite and producing people who can only follow instructions (Brown and Duguid 1991; Talbott 1999).

Robert Skidelsky, in his biography of another economist, John Maynard Keynes, quotes Keynes as telling his Cambridge students in 1933 that 'when you adopt perfectly precise language, you are trying to express yourself for the benefit of those who are incapable of thought' (Skidelsky 2000). By focusing exclusively on codified knowledge, the advocates of codification may lose sight of the intimate linkages between tacit and explicit knowledge. To do so runs the risk of creating organizations staffed by people whom knowledge management has made, in Keynes's words, 'incapable of thought'.

About the author

Chris Kimble is an Associate Professor of Strategy and Technology Management at Euromed Management Marseille, is affiliated to MRM-CREGOR at Université Montpellier II, and is the Academic Editor for the journal Global Business and Organizational Excellence. Before moving to France, he lectured in the UK on information systems and management at the University of York, and information technology at the University of Newcastle. His research interests are business strategy and the management of the fit between the digital and social worlds. Chris Kimble is the corresponding author and can be contacted at: chris.kimble@euromed-management.com