The place of health information and socio-emotional support in social questioning and answering

Adam Worrall and Sanghee Oh

College of Communication and Information, The Florida State University, PO Box 3062100, Tallahassee, Florida USA 32306-2100

Introduction

Social media and community channels are popular resources for people with information needs, problems, or questions about health. Fox (2011a) reported that 34% of Internet users have looked online for the experiences of others on health or medical issues, 23% of social network users have used these networks to read and share personal health experiences and 18% of Internet users have sought out others online who share similar health concerns in order to communicate with them. While all segments of the population seek health information online, in the USA non-Hispanic whites, college graduates, women and younger adults are more likely to do so; income also correlates positively with online health information seeking (Fox 2011a). Online media and social networking sites are used to find, share and discuss information, both objective and subjective (Burnett and Buerkle 2004; Coulson 2005; Frost and Massagli 2008; Gooden and Winefield 2007). Cohen and Syme (1985) indicated that information shared in social contexts may have both negative and positive effects on health decisions and on well-being.

The quality of health information shared in social contexts is an important issue in health information research. How do users evaluate online comments on, and answers to, health questions? How do experts evaluate them? What role do social and emotional support factors play in this evaluation? Little is known about the quality of health information shared in social contexts, about how health consumers evaluate the quality of this information, or about the impact of socio-emotional factors on this evaluation.

To address these concerns, we conducted an exploratory study of the quality of health answers in social questioning and answering (social Q&A) services. Websites offering such services, mostly free of charge, allow people to ask and answer one another's questions in various topic areas, including health. Users benefit from the different levels of knowledge and expertise other users share and from their varied experiences (Gazan 2011; Shah et al. 2009). Social Q&A sites have grown rapidly in recent years (Gazan 2011; Hitwise 2008; Shah 2011), with health a popular topic of discussion. The health answers found on such sites are user-generated information, created dynamically by users in response to questions posted by others seeking information, advice, opinions and experiences from people with similar health concerns, issues, or interests (Gazan 2008; Shah et al. 2009).

Intending to improve our understanding of the problem of health information quality in this context, we report qualitative findings from our study of the quality of health answers on Yahoo! Answers, a social Q&A site. We observed the views of librarians, nurses and users on the quality of health answers shared on the site, placing special focus on their overall impressions and on the socio-emotional support and advice they value. Our findings have implications for the design of such sites; for virtual reference and other library services; and for user, patron and patient education in evaluating online health information. Next, we review the relevant literature on social support, user-generated content and personal experiences, and on the importance of these factors in social Q&A sites, online health communities and online health information seeking.

Literature review

Social support

In the context of health, social support can have informational, psychological, physical, emotional and community-based elements. Cohen and Syme (1985: 4) focused on 'the resources provided by other persons', including 'useful information or things… [that] may have negative as well as positive effects on health and well-being'. With a more encompassing view than Cohen and Syme, Caplan (1974) included expressions of help in a person's time of need ('crisis') through psychological, informational, physical and emotional resources provided by a person's social network. Such resources address both the task at hand and the need for emotional support. Cassel (1976: 113) narrowed his focus to factors protecting or buffering an individual from the 'physiologic or psychologic consequences' of stress. Cobb (1976: 300) was most interested in emotional and community-based factors of social support, defining it as 'information leading [one] to believe [one] is cared for and loved, esteemed and a member of a network of mutual obligations'. As Caplan (1974: 6) stated, social support may be 'continuing' or 'intermittent' and may either guide the individual in their resource seeking or provide 'a refuge or sanctuary' of comfort away from stress. For all of these authors, social support has a clear impact on the overall 'health and well-being' (Cohen and Syme 1985: 4) of an individual, albeit not always in the positive direction.

In the context of online social support groups, White and Dorman (2001: 693) characterized social support, offered through the Internet, as 'mutual aid and self-help' for those 'facing chronic disease, life-threatening illness and dependency issues'. Online social support groups can be seen as online or virtual communities (Ellis et al. 2004; Preece and Maloney-Krichmar 2003; Rheingold 2000; Rosenbaum and Shachaf 2010). The relative importance and presence of social support and social interaction in online communities have been debated (Eastin and LaRose 2005; Wellman and Gulia 1999), a debate which has spilled over into the popular press (Klinenberg 2012; Marche 2012; Tufekci 2012; Turkle 2012). Research has found social and emotional support and social ties to be important factors in online communities (Eastin and LaRose 2005; Tufekci 2012; Wellman and Gulia 1999), such as those of distance learners (Haythornthwaite et al. 2000; Kazmer 2005; Kazmer and Haythornthwaite 2001). Rheingold's (2000: xx) early definition of virtual communities, first published in 1993 and drawn from participant observation, emphasized the 'webs of personal relationships' and social support elements often present in such communities. Social and community-based factors are key to encouraging knowledge sharing and information exchange in online and virtual communities (Ardichvili 2008; Holste and Fields 2010; McLure Wasko and Faraj 2000, 2005). Burnett's typology of information exchange in such communities includes 'socially important activities', including joking, gossiping, exchanging pleasantries and 'active emotional support', alongside explicit exchanges of information (Burnett and Buerkle 2004: Introduction section, para. 5).

In health-related online communities, research has shown socio-emotional and community support are prominent and desired by community members. In studying the activities of two health-related Usenet newsgroups, Burnett and Buerkle (2004) found the groups featured higher levels of social activity and socio-emotional support behaviour than of actual information exchange; the group dealing with a more severe, life-threatening condition featured higher levels of socio-emotional support. Gooden and Winefield (2007) examined two online discussion boards for survivors of prostate and breast cancer and the nature of discussions that took place. They found the discussion boards offered both emotional and informational social support for their members, resulting in 'a sense of community' allowing members to manage their conditions, albeit in subtly and qualitatively different ways (Gooden and Winefield 2007: 113). Frost and Massagli (2008: Discussion section para. 2) found social and emotional support that 'foster[ed] relationships based on shared attributes' to be important elements of an online amyotrophic lateral sclerosis community. While the health support group studied by Coulson (2005) focused on support through offering information, emotional and social support were still a significant part of the group's activities.

Studies of social Q&A sites have found social and emotional support to be the most important criteria desired in answers and a key element in how answers are evaluated (Kim et al. 2007; Kim and Oh 2009). Kim et al. (2007) concluded the dominance of socio-emotional values and the large proportion of questions they found asking for opinion or suggestions were indicative of what people sought. Kim and Oh (2009) believed the social contexts of such environments were a factor in social and emotional support being of greater importance for users of these sites than in traditional relevance studies (Saracevic 2007). Narrowing the context to health, the follow-up study by Kim et al. (2009) found socio-emotional criteria to be a close second to the utility of answers. While solution feasibility was the most frequent individual criterion, emotional support and affect were also frequent factors in the evaluation of answers.

In a further study of the motivations of answerers, Oh (2011) found they were most influenced by feelings of altruism, enjoyment, efficacy, empathy, social engagement and community interest. Reciprocity was not influential; answerers did not expect 'rewards or compensation' as long as questioners showed similar levels of goodwill (Oh 2011: 553). This implies the existence of weak ties and of a desire to provide support within the community as a social network (see Granovetter 1973; Wellman and Gulia 1999). 'Self-oriented' factors were a stronger influence on motivations than factors relating to 'social interaction' (Oh 2011: 553).

User-generated content as information source

Online communities often solicit and incorporate contributions from their users in the form of comments, reviews and other user-generated content. Van Dijck (2009) explored the rise of participatory culture evidenced by Websites such as Wikipedia and YouTube, noting the ambiguity inherent in participation. Not all users will participate and generate content in equal measure, nor do all online communities treat user-generated content in the same way; many commercial sites may filter and mediate contributions (van Dijck 2009). Nevertheless, user-generated content, filtered or not, can serve as an important source of information and knowledge for community members, one drawn from the opinions, ideas and comments of users with similar experiences or situations.

In digital libraries and similar information systems, these are called social annotations, 'enrichment[s] of information object[s] with comments and other forms of meta-information' (Neuhold et al. 2003: 10) that are publicly shared and can be annotated or enriched themselves by other users. They allow users to engage and participate in the community, providing 'a valuable medium for collaboration' within and beyond these communities (Neuhold et al. 2003: 11). AnswerBag has been successful in using social annotations and user-generated content to build community and social ties and provide socially constructed information and knowledge to users (Gazan 2008). Gazan identified eight criteria that digital libraries and online communities should consider when incorporating social annotations, including interface, usability, anonymity, control, retrieval and sharing concerns.

Health consumers read, write and post comments online; refer to online reviews; and receive updates through podcasts and feeds (Fox and Jones 2009). The perceived anonymity of the Internet allows consumers the freedom to expose delicate issues, exchange intimate messages, or be less distracted by age, sex, or social status than they would be offline (Eysenbach 2005; Klemm et al. 2003; White and Dorman 2001). Social Q&A sites allow greater anonymity and candour for questioners and answerers, relying, as many online communities do, on the wisdom of the crowd and on community contributions of user-generated content (Shah et al. 2009). Thus, they can be seen as online communities (Rosenbaum and Shachaf 2010) or as featuring a community as a venue for question asking and answering (Shah et al. 2009).

Personal stories and experiences

Online communities provide opportunities for stories and experiences to be told, exchanged and shared, allowing for information exchange (Bechky 2003; Brown and Duguid 1991; Nonaka 1994) and for community members to know each other better (Preece and Maloney-Krichmar 2003). In forums or discussion board communities, introductions, off-topic, or water cooler-type discussions are common and allow for social information and knowledge sharing. Research findings from studies of online support group communities show users place high value on such hyperpersonal support and communication (Turner et al. 2001: 232) and personalized sharing of information, advice and suggestions (Burnett and Buerkle 2004; Frost and Massagli 2008; Gooden and Winefield 2007). Personal stories, experiences and testimonials provide a different view than the facts and explicit knowledge found in other resources.

Social Q&A sites are similar to online support groups in that people can receive personalized responses. The main difference between the two venue types is in their comprehensiveness. Online support groups often focus on a certain disease, addiction, habit, or condition (Pennbridge et al. 1999). Social questioning and answering embraces people who have various immediate health problems, providing responses from answerers with varied levels of expertise and experiences. The openness and social nature of most such sites encourages users to be active members of the community and contribute informational, personal, social and emotional content and advice, displaying altruism and empathy for others (Oh 2011; Shah et al. 2008).

Research questions

Our study examined the quality of answers given to health-related questions on Yahoo! Answers, one of the most popular social Q&A sites (Gazan 2011; Shah 2011). Little is known about the quality of health information shared on such Websites, about how health consumers asking questions evaluate the quality of the answers they receive, or about the impact of important socio-emotional factors on their seeking, use and evaluation of answers. Our study is significant for its contribution towards improving our understanding of this evaluation process and of key factors that affect it. The relevant literature indicates that key considerations include the socio-emotional reactions felt, evaluation criteria applied and the advice valued by participants in these sites. The overall impressions of participants were also of interest in the context of previous literature. Thus, our study explored the following four research questions:

- What is the overall impression librarians, nurses and users have of the quality of health answers in social Q&A?

- What socio-emotional reactions do librarians, nurses and users have to evaluating health answers from social Q&A sites?

- What are the evaluation criteria librarians, nurses and users emphasize?

- What advice do librarians, nurses and users offer in evaluating health answers in social Q&A?

Librarians and nurses are experts in providing health information services. Although their levels of knowledge and experience in health and in information searching vary, they share common interests in helping people manage their health problems, identify reliable health information resources and make health decisions. Librarians and nurses may differ in their understanding of how health information obtained from social media is used and evaluated. Librarians have served users in reference and other information services, using online tools such as instant messaging, e-mail, message boards, blogs, wikis, Facebook and Twitter (Agosto et al. 2011; Arya and Mishra 2012; Mon and Randeree 2009). Nurses may have had few chances to use social media for health care services, although the value of social media for better access and social support is recognized (Dentzer 2009; Rutledge et al. 2011). Nurses may be less familiar with how their patients have used social media for health information seeking. Users of social Q&A sites represent a third group: lay people who would like to seek and share health information in social contexts. They are familiar with the systems and procedures employed and have certain purposes or motivations for using the Website to solve their health problems or those of their significant others.

The answers to these four research questions, presented in the Results and Discussion sections later in this paper, add significantly to our understanding of how answers to health questions on Yahoo! Answers and other sites are evaluated and of the key factors and considerations that play a role in the evaluation process.

Method

Recruitment of evaluators

Forty evaluators from each of three groups (librarians, nurses and Yahoo! Answers users) participated and received compensation for doing so. Librarians were purposively sampled and invited through e-mail lists run by the Medical Library Association (drawing participants from across the United States) and Florida Ask-a-Librarians, and through a public contact list of librarians in public or health science libraries in Florida and Georgia. Nurses were purposively sampled and invited through several Advanced Nursing Practitioner Councils in Florida and from among graduate students at the Florida State University College of Nursing. Users were invited from a random sample of users who had asked at least one health-related question on Yahoo! Answers during May 2011.

Answer collection and sampling

A Web crawler using the Yahoo! Answers Application Programming Interface randomly collected 72,893 questions and 229,326 associated answers posted in the Health category of Yahoo! Answers during April 2011. These questions and answers covered all kinds of diseases and related conditions, dental and optical care, alternative medicine, and diet and fitness. In this study, we were interested in assessing the quality of best answers, that is, the one answer for each question marked as the best by users. We therefore narrowed this initial sample by filtering out:

- answers that were not marked as best answers by users;

- best answers of fewer than thirty words, to remove answers simply reacting to questions (e.g., 'You're right, go to see a doctor') or from which it would be hard to draw out meaningful information due to their brevity; and

- answers that did not provide appropriate information but included disturbing and inappropriate sexual jokes or content, as judged by consensus among the researchers.

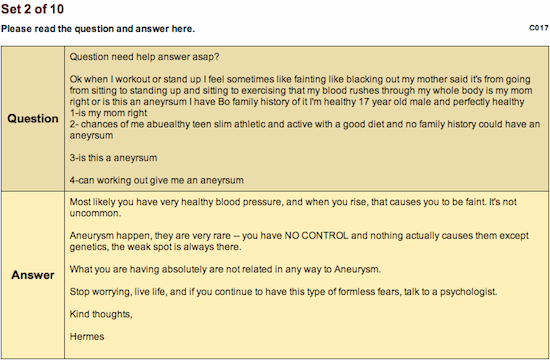

From the remaining answers, 400 questions and their associated best answers were randomly sampled for the quality evaluation. Participants were asked to evaluate the quality of the answers only, but each question was provided alongside its answer as necessary context, to help participants understand the information need the answer was responding to. A representative example of a question and its associated answer, as presented in the evaluation instrument, is shown in Figure 1.
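The filtering and sampling steps above can be expressed as a short script. The following is a minimal Python sketch, not the crawler or scripts used in the study: the `Answer` record and its field names, whitespace-based word counting and the `flagged_inappropriate` flag (standing in for the researchers' consensus judgement on inappropriate content) are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass


# Hypothetical record for one crawled answer; the field names are
# illustrative assumptions, not the Yahoo! Answers API schema.
@dataclass
class Answer:
    question_id: str
    text: str
    is_best: bool                        # marked as the best answer by users
    flagged_inappropriate: bool = False  # stands in for the researchers' consensus judgement


MIN_WORDS = 30  # best answers of fewer than thirty words were excluded


def is_eligible(answer: Answer) -> bool:
    """Apply the three exclusion rules to a single answer."""
    if not answer.is_best:
        return False
    # Assumption: words are counted by splitting on whitespace.
    if len(answer.text.split()) < MIN_WORDS:
        return False
    return not answer.flagged_inappropriate


def sample_best_answers(answers, k=400, seed=None):
    """Filter the crawled answers, then draw k survivors at random without replacement."""
    pool = [a for a in answers if is_eligible(a)]
    if len(pool) < k:
        raise ValueError(f"only {len(pool)} eligible answers; cannot sample {k}")
    rng = random.Random(seed)
    return rng.sample(pool, k)
```

Because each question has at most one best answer, a sample of 400 best answers also identifies 400 distinct questions, which can then be shown alongside their answers as context.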

Answer quality evaluation procedures

As noted earlier, 120 participants were recruited as evaluators, forty from each of three groups: librarians (L), nurses (N) and users (U). (We could not use data from one user in the analysis because their evaluation was incomplete.) The same 400 question-and-best-answer pairs were assigned to each of the three groups. Within each group, each participant evaluated ten answers selected at random.
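The assignment step can also be sketched in Python. This is a minimal illustration under stated assumptions, not the procedure built into the study's evaluation instrument: the function name `assign_answers`, the integer pair identifiers and the generated evaluator codes are hypothetical, and the sketch assumes that within a group each pair went to exactly one evaluator, which the figures (40 evaluators x 10 answers = 400 pairs) allow but the text does not state explicitly.

```python
import random


def assign_answers(pair_ids, evaluator_ids, per_evaluator=10, seed=None):
    """Randomly partition question-answer pairs among one group's evaluators.

    Assumption: each pair goes to exactly one evaluator in the group.
    """
    if len(pair_ids) != len(evaluator_ids) * per_evaluator:
        raise ValueError("pairs must divide evenly among evaluators")
    rng = random.Random(seed)
    shuffled = list(pair_ids)
    rng.shuffle(shuffled)  # random order, so each evaluator's slice is a random selection
    return {
        evaluator: shuffled[i * per_evaluator:(i + 1) * per_evaluator]
        for i, evaluator in enumerate(evaluator_ids)
    }


# The same 400 pairs are assigned independently within each group.
pairs = list(range(1, 401))  # hypothetical pair identifiers
groups = {
    prefix: [f"{prefix}{n:02d}" for n in range(1, 41)]  # e.g. L01 to L40
    for prefix in ("L", "N", "U")
}
assignments = {
    prefix: assign_answers(pairs, ids, seed=index)
    for index, (prefix, ids) in enumerate(groups.items())
}
```

Shuffling once and slicing guarantees that no pair is assigned twice within a group; the excluded incomplete user evaluation would simply be dropped at analysis time rather than handled here.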

Quantitative evaluation used ten criteria derived from the literature on the evaluation of online health information (Agency... 1999; Eysenbach et al. 2002; Health on the Net Foundation 1997; Rippen and Risk 2000; Stvilia et al. 2009) and on social Q&A (Harper et al. 2008; Kim and Oh 2009; Zhu et al. 2009). These criteria were accuracy, completeness, confidence, efforts, empathy, objectivity, politeness, readability, relevance and source credibility. We report results from, and analysis of, participants' quantitative evaluations in Oh et al. (2012) and Oh and Worrall (in press), and we compare these criteria with our qualitative findings later in this paper. At the end of their evaluation, participants answered open-ended questions asking for their overall impressions of the health answers, explicit suggestions they would make for people seeking answers online, and any other comments they might have. These qualitative responses are the focus of this paper.

Participants were compensated for their time and effort in evaluating the quality of answers and providing feedback on them. We pre-tested our evaluation tool and survey with graduate student and librarian volunteers from Florida State University and with nurse volunteers in the Tallahassee, Florida area. Because pre-testing found that librarians and nurses spent, on average, approximately three times longer than users completing the evaluation, we compensated the groups differently: librarians and nurses received US$30 Amazon.com gift cards, while users received US$10 Amazon.com gift cards.

Data analysis

We used an inductive open coding approach to analyse answers to the open-ended questions qualitatively, an approach similar but not identical to grounded theory and its constant comparative method (Bradley et al. 2007; Charmaz 2006; Strauss and Corbin 1994). The two authors and an additional researcher reviewed the answers and independently developed emergent codes for evaluation criteria and indicators, emotional reactions displayed in participants' responses and themes in advice given to people seeking health answers online. Next, we reviewed our proposed code lists together, discussing similarities and differences in the codes we applied in an inductive and iterative process (Ahuvia 2001). As a result of this process, we merged our code lists together into a master list, helping to ensure intercoder reliability and the credibility and trustworthiness of our analysis (Gaskell and Bauer 2000; Golafshani 2003; Lincoln and Guba 1985). We each then used the established codebook to code a selection of the responses from each of the three groups. Overlap between the selections allowed for further intercoder reliability checks and resolving any subsequent conflicts in coding. While subtle differences existed, we observed no significant discrepancies and the few minor discrepancies were resolved through further discussion to obtain agreement, a procedure informed by Bradley et al. (2007).

Results

Overall impressions

Librarians and nurses showed mixed feelings and both positive and negative impressions on exchanging health information and social support in social Q&A, while users appreciated people who shared their information and experiences and who gave advice with altruistic motivations for helping others.

Good for social support

Many librarians and nurses considered Yahoo! Answers an online community or support group in which people share information and emotion and engage with one another socially. One librarian commented, 'it seems that people who use these types of sites are interested in a connection with someone, something closer to social networking than just static information' (L34). A second librarian found herself going to Yahoo! Answers to 'hear what other people are feeling, doing, or just releasing anxiety' (L13). Another librarian argued that what people do in online communities is not much different from what they do in their everyday lives: 'the online community just allows for the information to travel into a much broader space' (L11). One nurse acknowledged the social aspect of Yahoo! Answers in that 'most did so in trying to help [one another]' (N23). Users' fondness for Yahoo! Answers was much greater than that of librarians and nurses. One user stated there were 'many good questions and good answers' (U11); another stated 'there is a lot of useful information to be found from people who genuinely want to be helpful' (U15). Users appreciated others' efforts to help without giving serious consideration to the quality of the health information exchanged in this context. One user believed 'any cause is a good cause' and 'any help given on answer sites like these [is] welcome and healthy for any questioner' (U39).

Useful but with reservations

In several cases, librarians and nurses affirmed and supported people's efforts to seek and share health information, experiences, concerns and advice online, but with reservations. Using social Q&A sites was acceptable for one librarian 'if the person also then seeks professional medical help' (L29). Another librarian (L28) acknowledged both the value and the danger of such sites: they can serve as online groups for social support, but they can also lead people to misinformation and to a failure to distinguish good information from bad. A third librarian indicated it would be okay to use these sites to collect information 'as [the] basis for questions with the doctor', but warned that users should 'not rely completely on advice from Internet sites for important medical questions' and should consult doctors 'for an actual diagnosis and treatment options' (L26).

Unacceptable

A few librarians and nurses expressed strong opinions against using social Q&A sites for health information seeking; none of the users expressed this view. Most of these librarians and nurses seemed to have had little prior experience observing health questions and answers shared in social contexts or reviewing them thoroughly. One librarian questioned, 'do people really think they are getting reliable health information this way?? Yikes! I didn't know it was this bad' (L22). Many nurses were surprised that people with no medical qualifications were considering health problems and providing information and answers based on their personal experiences. One indicated s/he had not been aware of 'how much bad advice was out there' and argued, 'just because people have a venue to express their opinions does not mean they should' (N40). Another nurse criticized the lack of effort by answerers in providing reliable information, stating they were 'really surprised at some of the answers, it didn't look as if there had been any thought put into most of them' (N28). These negative reactions, tied to participants' feelings of fear or concern, mistrust and surprise, are described in detail in the Emotions section below.

Why use social Q&A sites?

While reviewing the health questions and answers in the evaluation, some participants began to consider why people would go to social Q&A sites for health information instead of seeking it from health care professionals. One nurse thought people may turn to Yahoo! Answers because it could be the only source they can reach; they may not 'have the funds to seek medical advice' or they may be 'embarrassed to go to the doctor for help' (N22). Similarly, one librarian stated young people 'may not have $$ to go to a doctor and may hesitate to ask their parents for help' (L14). One user indicated that people may often go to such sites because they are not satisfied with health care professionals who 'simply do not have the time/patience/inclination to explain certain things to you [as a patient] that you'd feel happier to know' (U29).

Emotions

Participants displayed a range of emotional reactions to the health answers and social Q&A sites. We identified five common expressed emotions: (a) fear or concern, (b) trust or mistrust, (c) confidence (or lack thereof), (d) surprise and (e) empathy. There were differences in these among the three groups of librarians, nurses and users, which we discuss below. We also briefly note how frequent each emotional reaction was in each group to paint a broader, more descriptive and more connected picture of the themes that emerged in context of each other and the existing literature.

Fear or concern

Across the three groups, emotions indicating fear or concern for those asking questions, answering questions, or reading the questions and answers of others were the most frequent in participants' comments. Librarians showed the greatest levels of fear for users due to the nature of the answers they reviewed, with eighteen expressing some degree of concern. A few librarians believed answerers were 'attempt[ing] to answer with useful information', but cautioned that users should 'consult with a medical professional' (L03). The same librarian also showed fear by repeatedly suggesting users should 'CONSULT A MEDICAL PROFESSIONAL!' (capitals in original) and asking, 'Why in the world would one take medical advice from a random person online? It's frightening' (L03). Other librarians showed high levels of fear over the advice being given and the potential for users to take it without carefully evaluating its quality, accuracy and credibility. One asked if 'people really think they are getting reliable health information this way??' and continued 'YIKES! I didn't know it was this bad' (L22). Nurses showed less concern and fear, but six expressed these emotions to some degree; their concerns related to the evaluation of sources, the level of thought put into answers and the general nature of the questions and answers. One felt 'this is scary' and stated 'it's one thing to write a review… but offering personal opinions on health matters is dangerous' (N40). Two users expressed concern and fear that following the suggestions in answers without careful consideration could lead information seekers to harm themselves, relatives, or close friends.

Trust and mistrust

Comments about trust and mistrust of the answers reflected the second most common emotion identified across the three groups. Equal numbers of nurses and librarians (six each) expressed this emotion. The six librarians' concerns over trust related to sources and their credibility. One of the librarians was more positive, suggesting combining answers with other sources could 'offer some dimension beyond the authoritative answer of a legitimate Website or a doctor'. This librarian continued, 'I also read books by actual doctors and reliable Websites like MedlinePlus' (L06). Of the nurses, four suggested users should mistrust social Q&A sites due to the potential for misinterpretation and the varying credibility of answers. Two others took a more nuanced view, urging caution but suggesting that the information obtained could be trusted in sufficient context, 'with a grain of salt' (N12). Only one user raised trust, in the context of answerers needing to gain the trust of question askers.

Confidence

We identified one librarian, four nurses and three users who discussed their level of confidence in social Q&A or in users' evaluation skills. The nurses expressed a lack of confidence in these sites, one being 'unimpressed with the majority of answers given' (N02) and another imploring users to 'avoid question and answer types sites like these!' (N32). While many librarians shared in the fears implicit in the nurses' comments, only one librarian (L04) indicated 'one or two were fair; the rest were rubbish', and expressed an explicit lack of confidence in the evaluation skills of users, who s/he hoped 'did not take up the advice'. For the three users, confidence levels were mixed but more positive than those of the nurses and librarians. One termed their use of Yahoo! Answers 'a supplement' to other sources that had 'helped me greatly over the years' (U25); another felt that 'the experience is usually good' (U17).

Surprise

Three librarians and five nurses showed surprise at the reality of the social Q&A setting and of the health answers; no users showed surprise. A librarian 'realized these are opinion answers' but still found them 'a little startling' (L16). Nurses were 'surprised at some of the answers' (N28) and 'that people really turn to other folks on the Internet and trust them' (N14).

Empathy

Empathy was the least common emotion identified; three librarians and one nurse (and no users) expressed a degree of empathy for users or answerers. One librarian showed empathy for the younger population, given their potential lack of financial resources and hesitation to ask for parental guidance. Another librarian expressed empathy for answerers, who 'inherently want to help people and… feel useful', but was concerned over the 'dangerous' implications of this 'in the online medical community' (L11). One nurse was concerned about how users' finances would affect their ability to see a medical professional.

Evaluation criteria and indicators

Participants raised many quality evaluation criteria and indicators in their responses. We identified five significant categories of these in our analysis: (a) sources, (b) subjectivity, (c) style, (d) completeness and (e) accuracy. Nurses and librarians often referred to more than one criterion or indicator in their responses, relating them to one another more often than most users did. We discuss these categories below, briefly noting how frequently each criterion was raised by each group to paint a broader, more descriptive and more connected picture of the themes that emerged in context.

Sources

Evaluating answers based on their sources was the most common category of quality indicators mentioned by nurses and librarians. Nearly two-thirds of the librarians commented on a perceived lack of credible and authoritative evidence in the answers: 'very little evidence [was] involved' (L19), answers were 'without complete information or credentials' (L37) and 'not even in the best of these did… anyone [refer] the questioner to an authoritative source' (L37). A couple of librarians held a more positive and nuanced view, one stating 'authority and accuracy is not always the necessary ingredient in a forum/discussion situation' (L24). Nearly half of the nurses mentioned source-related indicators of quality, focusing on the apparent lack of research in answers and their opinionated nature. For example, one nurse believed 'most [answers] were individual's opinions… I wouldn't have wasted my time reading those sites as the credibility can vary widely' (N11). Fewer users (less than one-fifth) commented on source-based indicators of quality. Some shared the concerns of the librarians and nurses, while others felt personal experience was 'a source in itself' (U12) and that its use was not a problem. Still others took a nuanced approach, believing it was important to consider the context of the source and to identify any underlying bias.

Subjectivity

Eighteen librarians and fifteen nurses mentioned the subjectivity of answers as a quality indicator. Librarians tied subjectivity to the answerers' use or lack of sources: 'no credentials other than [their] experiences were ever cited' (L14). As one librarian noted, acknowledgement of the subjectivity and 'ambiguity' in answers was rare (L15). A couple of librarians were accepting of personal opinions and biases in the answers, believing they could be useful when placed in context. Most of the nurses echoed the librarians' concerns about opinion-based, anecdotal answers. Nurses focused their feedback on what many perceived to be 'heavily opinionated' (N01) answers with 'a lot of personal bias' (N12). One nurse felt that a lack of professional experience, which 'counts for so much', and of face-to-face interaction with an expert led to poor-quality answers: 'having someone lay their eyes on your body makes a big difference in the quality of your diagnosis and treatment' (N32). Users raised subjectivity much less often; five discussed this indicator of quality in their comments. Most accepted the subjective nature of the answers, stating this is what they would want from an answer and what other users would desire. One user did not see answers that 'only gave Websites' as 'how these questions should be answered' (U23).

Style

All three groups mentioned the style of the answers. Eleven librarians commented on style, acknowledging the attempted helpfulness of answerers ('most people are polite and want to help' (L02)) but most often viewing style as a negative element; one felt answers were 'more like friends responding to one another via e-mail than actually providing useful information' (L09). Ten nurses, while having mixed feelings, erred on the negative side; they often felt answers were too informal and lacked detail and evidence. On average, the nurses were less dismissive of the style of answers than the librarians. Those nurses who made positive comments focused on the attempted helpfulness of many answerers and praised responses 'somewhat like you would get from a good friend' (N39). Users raised style more frequently than librarians or nurses, with fifteen commenting on it; it was the criterion users mentioned most often. Five made negative comments on what they perceived as the 'vague' (U01), unprofessional, 'not very scientific' (U02), over-opinionated, or 'dull… [and] mundane' (U18) style of the answers. Seven users made positive comments on the style of answers as 'clean and understandable… informative' (U14), 'considerate' (U22) and 'help[ing] any individual who comes looking for it' (U39). The remaining users were neutral, offering advice to others on how to ask questions, to 'trust the long lengthy answers' (U11) and to 'ignore the irrelevant voicings from people who are simply over-opinionated' (U15).

Completeness

All three groups mentioned the completeness of answers as a criterion with similar frequency, referencing the incomplete and inadequate nature of many answers. Fourteen librarians commented that 'several [answers] seemed to ignore the real, unasked questions… [and] most were incomplete' (L28) and that, although 'most people were trying to be helpful… few put in a lot of effort in their answers' (L18). Eleven nurses pointed out that 'only a fraction of what… would be provided in a hospital/clinic setting' was available (N01) and that 'most' answers did not show 'any thought put into… them' (N28). While one nurse believed 'some [answers were] very informative with lots of detail', they judged other answers to be 'very slack, lazy and not helpful in the least' (N37). Thirteen users were more positive than the nurses and librarians, but still tended to see at least some answers as incomplete owing to their short length and low effort. The questions were sometimes seen to be 'too short or long' (U02) and one user believed 'no answerer put a tremendous amount of effort into answering' (U37). Another user was 'impressed' that some answerers 'could provide detailed information relating to the problem asked' (U29).

Accuracy

Accuracy was discussed less often by librarians and users than by nurses, twelve of whom raised this criterion. Most were concerned that the majority of answers contained incorrect information ('only one gave the most accurate answer which was to go to the doctor' (N14)) despite answerers portraying confidence: 'if the answerer seemed confident, the information was equally as incorrect' (N19). Six librarians commented on accuracy, sharing similar concerns over the accuracy and authority of answers, although one argued 'authority and accuracy [are] not always the necessary ingredient in a forum/discussion situation' (L24). Four users shared varying opinions of accuracy; one felt most answers were inaccurate, two felt most were accurate and one believed accuracy varied greatly across the answers.

Advice

One of the open-ended questions included in our survey asked participants what suggestions they would give people seeking health answers online. Through analysis, we determined the advice participants gave fell into five categories: (a) sources, including source context and multiple sources; (b) realistic expectations; (c) clarity and details; (d) review with others; and (e) education. We discuss these below and, as in the previous sections, briefly note how frequently each category appeared in each group's discussion to paint a broader, more descriptive and more connected picture of the themes that emerged in context.

Sources

All three groups commented on specific sources they believed people seeking answers to health-related questions should or should not use. Over three-fourths of the nurses and nearly three-fourths of the librarians discussed sources, while only one-third of users did so. The librarians recommended established online sources of medical information and recommended MedlinePlus far more often than the nurses did; educational and governmental sites were other common suggestions. Most librarians were more comfortable than the nurses with users seeking online sources, accepting the reality of modern information seeking. Many librarians suggested using social Q&A sites 'to find out experiences… [and] to network to get more information' (L05). Other librarians felt users should focus on seeking medical professionals, including asking 'another physician for a second opinion' if necessary, 'rather than rely[ing] on the anecdotal advice of strangers' (L12). All agreed information should be sought from 'databases and authorities in the field' (L05).

Nurses often advised users to seek assistance from medical professionals or established medical Websites, such as those of WebMD and the Mayo Clinic. Not all agreed both types of sources should be used; 'a professional provider' and 'not the Internet' (N05), 'legitimate medical Websites that are recommended by their provider' (N10), specific sites ('go to Medline or WebMD' (N20)) and a combination of 'some advice and… medical attention… not lay people from the Internet' (N33) were all recommendations. Nurses further suggested users should 'not rely on online users to diagnose you' (N01) and 'avoid question and answer type sites' (N32).

Users' advice on sources showed greater variety, although many made comments similar to those of the nurses and librarians. One advised fellow users to 'always be sceptical of what you are reading online' and that 'if it is a medical question, it is always best to seek professional medical help' (U09). Another felt social Q&A sites offered 'a great way to find out information, but [users] have to be very cautious about taking the answers seriously' (U35). Other users advised Internet searchers to 'ask their questions on Google and see what they come up with' (U06) and to use social Q&A sites 'if you are sure that there is no immediate danger' (U22). One user recommended social Q&A sites despite their use being 'flawed', since they can provide 'useful information… from people who genuinely want to be helpful' (U15).

Aside from recommending specific sources, many librarians, nurses and users stressed the importance of source context in their advice to questioners. Librarians mentioned this most often (60% did so), focusing their advice on considering the authoritativeness of sources. Their specific suggestions included looking at 'the About Us section' of Websites (L01) and 'avoid[ing] Websites that have excessive advertising' (L23). About half of the nurses gave advice almost exclusively on the credibility and reputability of sources, suggesting users determine 'if nurses or doctors are involved in the Website's answers' (N03), 'take anything and everything… with a grain of salt' (N16) and seek 'information from a reputable source that also links [to]… evidence-based' sources (N21). Around a third of the users presented a wider range of contextual criteria in their comments than librarians or nurses did. These included perceived authority, trustworthiness, credibility, accuracy, length of answer and specialization of the venue of response. Two users raised fears of potential harm and questioned medical science, suggesting users should 'be aware that there are people online who… may cause harm/have malicious intent' (U29) and should 'look at all forms of health and healing. Don't just go by what makes sense by doctors' (U34).

About a quarter of each of the three groups advised users to seek out multiple sources of information to help answer their questions. Twelve librarians encouraged users to consult multiple sources and source types, including social Q&A sites, the Web at large, databases and 'authorities in the field' (L05). They advised online sources should serve 'as a jumping off point to gather ideas' (L14) and that all answers should be 'double-check[ed]… on [a] reliable Website to confirm information' (L24). Thirteen nurses focused on using 'trained medical professionals' (N02) as additional sources, alongside educational Websites and (for some) social Q&A sites. One argued, 'it's okay to ask' online, but 'actually talking to an expert in the field… is still the best way to obtain information' (N12). Ten users made suggestions similar to the librarians', encouraging their fellow users to 'use the answers you're given to point you in specific directions, but do your own research' (U03), to 'double check by looking on Google' (U18) and to 'ask in addition to [other sources], not instead of' (U23).

Realistic expectations

Another element of the advice was that users should have realistic expectations when using social Q&A sites to ask health questions; nine users and eight librarians commented on this, but only two nurses did. The librarians focused on the lack of true authority and the basis of most answers in 'personal opinion… personal support' (L15) and 'limited personal experience and biases' (L37). One librarian suggested users should 'draw a line between their information-seeking and their empathy-seeking and pursue separate strategies' (L07). The two nurses suggested users should 'take these responses for face value' (N08) and not expect 'lengthy, comprehensive answers' on these sites (N39). The nine users were more accepting than the librarians of the inherent subjectivity of Yahoo! Answers. They suggested their fellow users should be aware of the length, categorization and date of questions and answers. Users argued that answers from 'the ones who have experienced life instead of studying it' (U12) were better and that the best questions are those that 'want other patients' personal experience', are 'well written' and 'are not something that can be "googled"' (U27).

Clarity and details

Three librarians raised clarity and specificity, asking questioners to 'be as full of information as [they] can' (L04) and to 'use good grammar [and] be specific' (L19). No nurses gave advice on being clear and giving details when asking questions. Nine users suggested questions should be stated 'clearly and concisely' (U03), include 'specifics' (U05) and include a 'FULL description' (U40). Two users mentioned issues of grammar and style, asking questioners to 'please, please speak English' (U27) and make questions 'easy to read without any errors' (U40).

Review with others

A few participants in each group (five librarians, three nurses and four users) suggested that medical professionals such as primary care providers, specialists, nurses and other health care providers should be consulted to help evaluate information obtained online. One user suggested questioners should 'discuss the answers with somebody else they trust in real life' (U29).

Education

Five librarians and five nurses discussed the need for specific educational efforts for patrons and patients. One librarian suggested 'a handout for searching for health information on the Internet and a list of organizations that meet the HON [Health on the Net Foundation] requirements' (L01). Other librarians advised educational efforts focused on directing health information seekers to 'authoritative sites like Go Ask Alice' (L14) and on advising seekers 'to see a health care professional in some situations' (L39). Nurses also suggested having 'hospitals and doctors' offices hand out pamphlets' on health information literacy (N03) and offer a 'list of "reputable" [sites]' (N25) and 'appropriate lay-person medical sites' (N31). Only one user raised education, and then only indirectly, suggesting a specific change to Yahoo! Answers to make it easier 'to find a question that has already been asked' (U09).

Discussion

Social and emotional support

The themes identified through our qualitative results and analyses confirm the previous findings of Kim et al. (2007; Kim and Oh 2009; Kim et al. 2009): social and emotional support are important criteria on social Q&A sites. While source-related indicators were most popular, librarians and nurses often invoked the subjectivity of answers. Users expressed greater acceptance of subjectivity and instead focused on the overall style of the answers, illustrating greater consideration of the social, emotional and community-based support they valued from the site. Librarians appear less comfortable with users' focus on social and emotional support, believing well-cited, factual, objective and complete responses are needed alongside such support. Nurses shared many of these concerns, but were more concerned than the other groups with the inaccuracy of the answers. While many librarians appreciated that users are not always seeking factual answers, almost all nurses argued users should seek face-to-face help from a medical professional first. Users' lower levels of fear and more positive comments indicate greater faith in their fellow users' ability to evaluate the answers they receive in socio-emotional and personal context.

The low number of users who expressed empathy (as derived inductively through our coding and analysis process) in their comments at first seems to contradict these other findings (as noted by one of the anonymous reviewers). However, most users did not write very long comments in response to the open-ended questions; most lacked the motivation to answer thoroughly and with the level of detail offered by many librarians and nurses, which may have limited their expressions of empathy. We also believe other comments made elsewhere by users, together with the comments of librarians and nurses, strongly indicate that users value empathy, even if many did not express it themselves in the context of the current research. Studying users' empathy through interviews or other in-depth methods could explore and describe their emotional reactions to this environment and deepen understanding of the role of empathy.

Despite this potential discrepancy, the thematic patterns in our qualitative findings imply that the importance of socio-emotional and community-based support in online communities (Eastin and LaRose 2005; Kazmer and Haythornthwaite 2001; Tufekci 2012; Wellman and Gulia 1999), including those related to health (Burnett and Buerkle 2004; Frost and Massagli 2008; Gooden and Winefield 2007), extends to the new setting of health-related social question answering services, at least in the context of evaluating answers. Many librarians and nurses, and most users, considered Yahoo! Answers an online community or support group, where people can join, remain anonymous, share information and emotion and socially engage with one another (Pennbridge et al. 1999). Different individuals may conceive of this type of community in different ways and their participation will take many varied forms (Oh 2011; van Dijck 2009), but social and emotional elements are important considerations for most users; there is clear evidence for Fox's (2011b) 'peer-to-peer healthcare' in the social question answering setting. Many librarians appear cognizant of this fact, but librarians and especially nurses are prone to conceive of social Q&A sites as information resources, focusing on objective criteria and indicators. Both a socio-emotional, subjective perspective and an informational, objective perspective are necessary to complete our picture of the phenomena of these sites, much as they are for online communities based on information and knowledge sharing (Burnett and Buerkle 2004; McLure Wasko and Faraj 2000, 2005). Further research will be necessary to determine what variations and thematic differences there may be in these perspectives across different populations and settings, and what implications these variations have for information services, design and development, and educational efforts. We present some initial implications of our findings later in this paper.

Evaluation criteria and indicators

Our inductive, qualitative analysis of the open-ended comments in this study echoed and emphasized the ten criteria on which we asked participants to rate each answer quantitatively: accuracy, completeness, relevance, objectivity, source credibility, readability, politeness, confidence, knowledge and efforts (see above, as well as Oh et al. (2012) and Oh and Worrall (in press)). The presentation of these criteria in the evaluation portion of the instrument may have influenced participants' responses to the open-ended questions and is thus a possible limitation of the qualitative findings presented in this paper. However, careful analysis of participants' responses to the open-ended questions suggests any such bias was not extensive: the responses showed differences in weighting and emphasis not always present in the literature from which our ten criteria were drawn.

Overall, socio-emotional factors influence (and are in turn influenced by) the indicators and criteria used and discussed by librarians, nurses and users. Our qualitative data show that complete answers indicate answerers' effort and helpfulness, which are elements of positive social support (White and Dorman 2001). Accuracy was valued by nurses and most librarians as an indicator of content quality, but many users valued social and emotional support over perfect accuracy. For users, perfect accuracy was not always desirable and could serve as an indicator of potentially negative social support.

Source-related indicators, the largest category emerging as a theme from our qualitative data, can be mapped to most of the criteria put forward by Stvilia et al. (2009) for the quality of online health information. Our analysis treats sources as a separate category, but one that participants interrelated with other criteria and indicators; librarians, nurses and users all raised the accuracy, completeness, authority and usefulness of the sources used, or how a lack of sources indicated low completeness, authority and usefulness. Treating sources as a separate category may more accurately reflect how our participants saw the evaluation process and its cognitive, social and emotional elements. Subjectivity maps to the objectivity criterion that Stvilia et al. place within their usefulness category. Its emergence in our data as a separate element reflects the highly subjective and 'hyperpersonal' (Turner et al. 2001: 232) nature of most answers on Yahoo! Answers and other social Q&A sites (see also Kim and Oh 2009), where subjectivity serves as an important indicator of an answer's potential usefulness to users.

Analysis of the thematic patterns that emerged from our qualitative data, and of the frequency of each theme, shows that a similar proportion of users raised source credibility as a criterion as in Kim's (2010) study, indicating at least a few users feel they must evaluate whether they can trust the source. However, our experts (librarians and nurses) placed strong emphasis on evaluating source credibility over message credibility, saying information seekers should consider whether they trust the source, whereas Kim's users favoured evaluating the credibility and trustworthiness of the answer and of the fellow user providing it. As one of our librarians suggested, trusting multiple sources in mutual context is better than trusting any one source singly; many of the nurses feared users would do the latter. Users may trust answers and sources without reflection or consulting other sources, but they may also feel greater trust in their fellow users and in the socio-emotional, community-based setting of a social Q&A site than in a one-on-one, fact-based conversation with a medical professional or a formal source of information (e.g. a book or governmental Website). Some nurses and librarians understood the 'good friend' nature of the community (e.g. N39), but others felt it inappropriate (e.g. L09).

Our style category, as an emergent thematic pattern, covered areas such as readability, confidence and empathy, and maps to the completeness and accessibility constructs (including the criteria of clarity, cohesiveness and consistency) in the information quality criteria proposed by Stvilia et al. (2009). The emergence of style as a separate category in our qualitative data implies that it may be a stronger element in the quality evaluation of online health information by nurses, librarians and especially users than previously thought. Many sites relying on user-generated content, such as Wikipedia, treat style as an important element in measuring the quality of such content (Stvilia et al. 2008; Wikipedia 2012). The need for and presence of norms and conventions have been observed in online communities (Burnett et al. 2001; Burnett et al. 2003; Preece and Maloney-Krichmar 2003). These elements play a larger role in social, emotional and community-based settings, such as social Q&A sites, than in the evaluation of individual Web pages and resources. Further research is necessary, and we suggest that future quantitative studies of the quality of health answers in social Q&A should carefully consider the thematic patterns and differences we uncovered in our qualitative analysis when determining which criteria to use for evaluation.

Advice

Other thematic differences between the groups appeared in the advice they gave to people seeking health answers online. Nurses recommended medical professionals and non-Internet sources more often than librarians, who were more willing to accept the reality of present-day information seeking and instead focused on steering seekers towards legitimate, trusted online sources of health information such as MedlinePlus (used alongside social Q&A sites and other sources). The two expert groups were less trusting of user-generated content than Yahoo! Answers users. All three groups agreed that the wisdom of the crowd, using multiple sources and answers instead of relying on any one individual, should be sought, and that users should consider the context of all sources they use. The specific criteria to be considered varied in the advice given by each group, as they did in the evaluation criteria participants mentioned; librarians and nurses focused their advice on one or two criteria, while users' advice included a greater variety of contextual criteria. This may indicate that many users feel overwhelmed when it comes to evaluating health information. While multiple criteria are important, our qualitative data imply many of the nurses and librarians would advise focusing on no more than a couple to begin with.

Librarians and users were cognizant of the realities of the social Q&A setting; few nurses gave advice on having realistic expectations or on how to ask questions, and many were dismissive of any potential use of social Q&A sites for patients. Nurses may need greater awareness of the positive role user-generated content can play in health information seeking (Eysenbach 2005; Klemm et al. 2003; Shah et al. 2009; White and Dorman 2001). While many users appear to understand and want the subjectivity of the answers they receive from fellow users, the librarians showed (as noted above) greater interest in users seeking authoritative, objective sources: 'information-seeking' instead of 'empathy-seeking' (L07). Our findings are limited because we did not specifically ask librarians or nurses about their level of experience with social Q&A sites. More than two-thirds of librarians said they had experience with virtual reference, with many using e-mail and social media to provide such services, but none mentioned using social Q&A sites to provide those services. Despite this limitation, the thematic patterns and differences emerging from our qualitative data indicate there is a place for adopting both objective and subjective perspectives on information seeking and sharing in social Q&A. The 'hyperpersonal' (Turner et al. 2001: 232), personalized sharing of empathetic stories and experiences that users value (Burnett and Buerkle 2004; Frost and Massagli 2008; Gooden and Winefield 2007) should be provided alongside objective, factual information from authoritative online and offline sources.

Implications

The themes emerging from our findings have implications for improving the design of social Q&A sites. All three groups recommended that users examine multiple sources from multiple contexts. Social Q&A sites may facilitate this better by encouraging users to look at multiple answers, not just the one best answer, and at similar questions that may provide further relevant information and knowledge. Other sources, such as authoritative and objective content from trusted health and medical Websites, could be displayed alongside the subjective, community-provided answers. Answerers could be required to state a source for their responses. Quantitatively, in our sample only 33% of answers included an explicit reference; requiring a reference, even if given as personal experience or informed opinion, would help provide greater context for each answer. These design implications would require further testing to establish their broader applicability.

Both the qualitative themes reported here and our quantitative results and analysis (Oh et al. 2012; Oh and Worrall in press) indicate there must be a balance between offering socio-emotional support and providing factual, accurate information across all health information venues: social Q&A sites, health-related online communities, libraries, medical facilities and other resources. Librarians, nurses, other medical and information professionals and answerers should provide users, patrons and patients with quality information and answers in a setting and context they are comfortable with. Many users of the Internet and of social media appreciate the socio-emotional, subjective perspective offered by these sites, since it offers a view they are unlikely to get from medical professionals and that information professionals are often uneasy about providing. While medical and information professionals should retain their objective stance, they should be aware of the socio-emotional and subjective setting provided by social Q&A sites and adapt their provision of health information services to reflect this emerging reality, one which may not yet be prevalent among a majority of the general public but is becoming ever more popular with health consumers who use the Internet and social networks (Fox 2011a). Librarians have already provided virtual reference and other library services in new media and environments sharing many of the characteristics of social Q&A (Connaway and Radford 2011; Mon 2012), but not all share the same level of enthusiasm or skill in providing such services. Further research on virtual reference in the context of social Q&A, along with educational and practical efforts in this area, is needed, as suggested by a recent conference panel session (Radford et al. 2011). In health contexts, these efforts must extend further to cover nurses and other medical professionals, given their apparently lesser awareness of such sites.

Our qualitative analysis and the previous work of Bibel (2008) and Stvilia et al. (2009) further imply that users, patrons and patients must be educated to evaluate information appropriately, taking into account both the subjective and objective aspects of the information they find and the answers they receive. While some users already understand they should seek out multiple sources and consider source bias and context, others may place too much emphasis on their social and emotional needs, forgetting that accuracy, completeness and source credibility also require evaluation. Our nurse and librarian participants provided many suggestions for educational initiatives, including:

- brochures on health information literacy to be distributed through hospitals and doctors' offices;

- lists of authoritative online sources of health information suitable for laypeople;

- instructional handouts on health information literacy; and

- appropriate training in health reference for librarians.

Such efforts will be most useful if they keep in mind the duality of subjective, social, empathetic support and objective, factual, informational support. Providing and considering both traditional, authority-provided content and new, personalized, user-generated content will allow social Q&A sites, librarians and medical professionals to serve users better, to educate them in evaluating the varied health information they will find, and to encourage their use and evaluation of multiple types, forms and sources of health information.

Conclusion

Our study explored how librarians, nurses and Yahoo! Answers users assessed the quality of health answers posted on the site; the socio-emotional reactions they displayed as part of their evaluation; the advice they gave to users of social Q&A sites; and the relationships between socio-emotional support, advice and evaluation criteria. We examined thematic differences between the groups in their evaluation practices, reactions and advice, and compared our findings against previous research and literature in this area. The criteria we found matched those reported in previous research, but our participants paid greater attention to style, sources and subjectivity than participants in previous studies. We found that users value social and emotional support and accept the subjectivity of these sites, whereas librarians are less accepting and nurses even less so. We argue there is a place for both 'information-seeking' and 'empathy-seeking' (L07) in the seeking, sharing and evaluation of information and knowledge from Yahoo! Answers and other sites. This has implications for the design of such sites; for the provision of virtual reference and other library services in the context of social Q&A; and for the education of users, patrons and patients in evaluating online health information.

While we encourage researchers and practitioners to consider our findings, implications and conclusions, these are limited by the scope of our study and its exploratory nature. Future research should deepen the current findings through interviews and other in-depth methods of data collection. It should also expand the scope of the setting to broader populations of users, librarians and medical professionals, to other categories of answers and to other social Q&A sites. Quantitative evaluation of health answers using criteria derived from both the previous literature and the qualitative themes identified in the current study will also help confirm our implications and conclusions. For health answers, future research should examine the potential for collaboration among librarians, nurses and users of these sites in providing appropriate educational efforts and information services for users. These efforts and services should encourage users to evaluate health information in light of objective and subjective criteria and indicators of quality. Librarians and medical professionals should be educated in how best to provide virtual reference and other library services that adapt to the objective-subjective continuum and serve a range of user, patron and patient needs.

Acknowledgements

This study was funded by a First Year Assistant Professor (FYAP) grant from The Florida State University awarded to Sanghee Oh. We thank Melissa Gross for constructive feedback on the study; Yong Jeong Yi for help with data collection; Mia Lustria, Karla Schmitt, Mai Kung and several liaisons and directors from the sections of the Medical Library Association and Advanced Nursing Practitioners Councils, who helped contact librarians and nurses for the study; and Michelle Kazmer for resources helpful towards our data analysis procedures. We also thank the anonymous reviewers and the editors of Information Research for their thoughtful critiques and constructive comments that have helped improve our paper.

About the authors

Adam Worrall is a Doctoral Candidate in Information Studies at The Florida State University College of Communication and Information, in Tallahassee, Florida. His research interests include social informatics; digital libraries; collaborative information behavior; scientific collaboration; social media; and social and community theories in information science. His research has the common thread of studying information behaviour within and around the social and sociotechnical contexts—groups, communities, worlds and other collaborative environments—of information and communication technologies (ICTs). He can be contacted at: apw06@my.fsu.edu

Sanghee Oh is an Assistant Professor at The Florida State University College of Communication and Information, in Tallahassee, Florida. Her areas of research interest are human-computer interaction, user-centered interface design, health informatics and social informatics. She has studied the information behaviour of various populations, such as lay people, undergraduate students, librarians and nurses, investigating their motivations for and use of social media in seeking and sharing information about health as well as other topics. She can be contacted at: shoh@cci.fsu.edu