Understanding the factors influencing user experience of social question and answer services

Shengli Deng and Yuling Fang

School of Information Management, Wuhan University, Wuhan, China

Yong Liu

Department of Information and Service Economy, Aalto University School of Business, Helsinki, Finland

Hongxiu Li

Turku School of Economics, University of Turku, Turku, Finland

Introduction

Social question and answer sites are dedicated platforms where online users can post and answer questions, browse the corpus of already answered questions, comment on and rate the quality of answers, and vote for the best answers (Qu, Cong, Li, Sun and Chen, 2012; Chua and Banerjee, 2013). These sites arose from advances in information technology and the adoption of Web 2.0 technology, and they complement traditional search engines by adding informative and interactive ways of obtaining information. Since 2002, the number of users of these sites has increased dramatically; between 2006 and 2008, visits increased by 889% (Hitwise, 2008). Such sites rely on social networks such as Twitter and Facebook, and are designed so that the relationships in those user networks attract users who will provide high-quality content (Liu and Deng, 2013). For example, the leading service provider Quora increased its number of visitors from 2.6 million in 2012 to 2. ( million by April 2013, excluding mobile users (Quora, 2013). Formspring is a site on which users seek answers to questions from their friends; as of early 2012, it had obtained over four billion responses (Formspring, 2014). In China, with the rapid expansion of question banks, the purpose of using these sites has gradually shifted from 'asking questions' to 'searching for answers'. The largest service provider in China, Baidu Knows, claims to have answered about 200 million questions by September 2012 and to have had approximately 260 million unique visitors (Baidu Knows, 2012). Zhihu, a provider oriented towards interpersonal relationships, reported over four million registered users by April 2013 (Zhihu, 2014). These figures suggest that such sites have become an important means of information acquisition and problem solving for online users.

Users of social question and answer sites are both information receivers and information creators, and their experience affects their motivation to contribute and the efficiency of their contributions. Previous studies show that user trust and loyalty can be improved by enhancing user experience (Hassenzahl, 2003; Stvilia, Twidale, Smith and Gasser, 2005). Conversely, low-quality answers and an unsatisfactory experience lead users to distrust and even withdraw from the community (Hassenzahl, 2003; Stvilia et al., 2005). Even though such sites have brought significant benefits to users, current systems still have several drawbacks (Yan and Zhou, 2012). First, the platforms cannot always solve users' problems promptly and effectively, for example within twenty-four hours (Li and King, 2010; Yan and Zhou, 2012). Secondly, active users who are experienced or professional in particular domains have difficulty finding the questions they are interested in, which leads to low participation rates (Yan and Zhou, 2012; Guo, Xu, Bao and Yu, 2008). iResearch (2010) reported that the number of participants in Chinese sites fell from 100 million to 80 million within one month in 2010. iResearch (2010) also pointed out that invalid answers appear on all the platforms it surveyed. For instance, of the sixteen answers it obtained from Baidu Knows for each question, on average only 4.3 were useful in solving users' problems.

According to a survey of Baidu Knows by Li, Chao, Chen and Li (2011), "resolved" questions accounted for only 33.3% of all the questions addressed, while the remaining 66.7% were merely "closed", which means that most users' queries were not solved in time or in a satisfactory manner. Thus, there is a need to improve the overall quality and user experience of social question and answer services in China. To that end, our research sought to identify the determinants of the user experience of these sites among Chinese information seekers by quantifying how specific system features (interface design, user interaction and answer quality) affect users' perceptions and shape their experience.

Literature review

User experience

In recent years, the development of an experience economy has attracted wide attention, and studies on user experience have appeared in areas such as government, business and services. Broadly, these studies fall into two categories: those concerned with social experience and those concerned with academic experience.

User experience studies that concentrate on social experience are primarily concerned with the content and determinants of user experience from the perspective of users' emotions and cognition during their interaction on a site. Hart, Ridley, Taher, Sas, Dix and Jönsson (2008) explored social networking sites such as Facebook to understand their recent success and popularity. The study addressed the conflict between traditional usability methods and user experience by performing a heuristic evaluation of how well Facebook complies with usability guidelines and by conducting a user study to reveal its unique user experiences. Hart et al. (2008) call for a more comprehensive method of evaluation that redefines usability to encompass user experience in relation to future technology. Based on Garrett's hierarchical model of user experience (Garrett, 2010), Liu (2010) explored users' psychological experience in social networks from the perspective of communication psychology, proposing a number of determinants of that experience in social network sites, including privacy, information authenticity, activeness and timely feedback. The study highlighted the need for optimised strategies to improve Chinese users' psychological experience when using social network sites. Lai and Mai (2011) discussed how to construct a user experience framework based on the process of using social network sites, and separated that process into two parts: interpersonal relationships and interaction. An online survey was conducted to collect data on users' demands for a good user experience. The results showed that users have expectations about the experience of their actions and a strong demand for specific functions and convenient operation. However, users' habits when using a social network site were not found to be significantly related to their demand for a good user experience.

Studies on academic experience mainly concentrate on the content and evaluation of user experience in knowledge acquisition through search engines, digital libraries, e-learning systems and the like. For instance, Paechter, Maier and Macher (2010) investigated the factors that students consider important in shaping their learning achievements and course satisfaction in e-learning. The study surveyed 2196 students from twenty-nine universities in Austria, and multiple regression analyses were performed to investigate how different facets of the students' expectations and experiences influence perceived learning achievement and course satisfaction. The results showed that the students' assessments of the instructor's expertise in e-learning, and of the instructor's counselling and support, are the best predictors of learning achievement and course satisfaction. Baird and Fisher (2005) integrated social networking, user experience design strategies and some emerging technologies into the curriculum to support student learning, and explored how current and emerging social networking media (such as weblogs, wikis and other self-publishing media) enhance the overall user experience for students in synchronous and asynchronous learning environments. Kenney (2011) introduced a search engine developed by the library of the University of Liverpool in England, examining its interactive interface, content and tools for personalisation, and found that the key to enhancing user experience in digital libraries is to provide high-quality information retrieval services. A study by He (2007) on search engine marketing showed that user experience in search engine communities can be enhanced by exceeding the value users expect from their experience, as well as by increasing reward points and interaction between users. Pei, Xue, Zhao and Tao (2013) evaluated search engines from the perspective of user experience and established an evaluation index system for search engines. In their study, a fuzzy comprehensive evaluation model of search engines was constructed, and its rationality and reliability were verified with an example.
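Pei et al.'s (2013) index system and weights are not reproduced here. As a rough illustration of the general technique only, a fuzzy comprehensive evaluation combines a weight vector W over evaluation indices with a membership matrix R (each index's degree of membership in each grade) into a grade vector B = W · R, here using the weighted-average operator, and then selects the grade with the largest membership. The indices, weights and memberships below are hypothetical.

```python
def fuzzy_evaluate(weights: list[float], membership: list[list[float]]) -> list[float]:
    """Fuzzy comprehensive evaluation B = W . R with the weighted-average operator.

    weights[i]       -- weight of evaluation index i (must sum to 1)
    membership[i][g] -- degree to which index i belongs to grade g
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("index weights must sum to 1")
    if len(weights) != len(membership):
        raise ValueError("one membership row is needed per index")
    n_grades = len(membership[0])
    # b_g = sum_i w_i * r_ig  (the M(., +) composition operator)
    return [sum(w * row[g] for w, row in zip(weights, membership)) for g in range(n_grades)]


def best_grade(grades: list[str], b: list[float]) -> str:
    """Maximum-membership principle: choose the grade with the largest b_g."""
    return grades[b.index(max(b))]


# Hypothetical example: three search-engine indices rated on four grades.
GRADES = ["excellent", "good", "fair", "poor"]
weights = [0.5, 0.3, 0.2]
membership = [
    [0.4, 0.4, 0.2, 0.0],  # hypothetical index: relevance of results
    [0.2, 0.5, 0.2, 0.1],  # hypothetical index: response speed
    [0.1, 0.3, 0.4, 0.2],  # hypothetical index: interface clarity
]
b = fuzzy_evaluate(weights, membership)
print([round(x, 2) for x in b], best_grade(GRADES, b))
```

Other composition operators, such as max-min, are also common in fuzzy evaluation; which operator Pei et al. used is not stated here.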

Factors affecting use of social question and answer sites

Previous user-centred studies have focused on user motivations, user behaviour and user satisfaction. Oh (2011), for instance, identified and explored relationships between the motivations and the answering strategies of people who answer questions posted on social question and answer sites. A model of the answering process, composed of ten motivations and five answering strategies, was proposed and tested using an online survey. Oh found that answering strategies vary according to motivation, and that answerers are highly motivated by altruism, enjoyment and efficacy rather than by reputation, reciprocity and personal gain. Park and Jeong (2004) analysed the information needs and user behaviour of a question and answer service and made suggestions for the service, with particular emphasis on reinforcing the effectiveness of information and enhancing the service's efficiency and accuracy. Yu (2006) studied the role that informal information sharing patterns play in learning in the context of these services, and examined the power of collective intelligence on the Internet and people's perceptions of it. The results showed that the behaviour of site users is related to cognitive factors such as satisfaction, perceived usefulness and trust. Gao (2013) constructed a model of the determinants of user satisfaction in such communities based on the information systems success model and the technology acceptance model, including the factors of system quality, information quality, perceived usefulness, the impact of individual users on other users, and the impact of the community on users.
Drawing on social exchange theory, Jin (2009) conducted an empirical study to address: (1) the relationship between users' behavioural intention to continue contributing information in online question answering communities and their satisfaction and self-efficacy; (2) how the performance of contributions affects self-efficacy and user satisfaction; and (3) how users' identity orientations moderate the relationship between contribution performance and user satisfaction. Fan and Zha (2013) developed a research framework to analyse users' intention to continue contributing information from the perspectives of social capital theory and subjective norms, and found that reciprocity is the key determinant of users' contribution intention.

Information-centred studies are primarily concerned with the quality assessment of answers, question recommendation, answer recommendation and so on. Shah, Pomerantz, Crestani, Marchand-Maillet, Phane, Chen and Efthimiadis (2010) presented a novel approach to measuring the quality of answers found on community-based question and answer sites, and attempted to predict which answer to a given question the asker will judge to be best. Chua and Banerjee (2013) investigated the interplay between answer quality and answer speed across question types on such sites. They found significant differences in answer quality and answer speed across question types but, in general, no significant relationship between answer quality and answer speed. Zhou, Cong, Cui, Jensen and Yao (2009) addressed the problem of pushing the right questions to the right people in order to obtain quick, high-quality answers and improve user satisfaction. They proposed a framework for routing a given question to potential experts (users) in a forum by using both the content and the structure of the forum system. Kim and Oh (2009) examined the criteria questioners use to select the best answers on a site (Yahoo! Answers) by exploring the theoretical frameworks of relevant research. Based on their analysis of 2,140 comments collected from Yahoo! Answers, they identified twenty-three relevance criteria in six dimensions: content, cognitive, utility, information sources, extrinsic and socio-emotional. Qu (2010) proposed an answer recommendation approach based on topic modelling: the author adopted the probabilistic latent semantic analysis model to represent users' preferences from personalised information, and generated a question recommendation list. Lai and Cai (2013) studied the quality of answers within communities and proposed a method for evaluating answer quality based on the degree of similarity among multiple answers.
Li et al. (2011) investigated the interaction between askers and answerers, and its characteristics, by using social network analysis. Furthermore, they used a machine learning algorithm to design and implement a quality classifier by extracting textual and non-textual feature sets.
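Lai and Cai's (2013) exact similarity measure is not described here. As a minimal sketch of the general idea only, assuming whitespace tokenisation (Chinese text would first need word segmentation) and cosine similarity over raw term counts, each answer can be scored by how closely it agrees with the other answers to the same question. The function names and example answers are hypothetical.

```python
from collections import Counter
from math import sqrt


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def consensus_scores(answers: list[str]) -> list[float]:
    """Score each answer by its mean cosine similarity to the other answers.

    Assumption: whitespace tokenisation and raw term counts; this is an
    illustrative consensus heuristic, not Lai and Cai's published method.
    """
    vectors = [Counter(a.lower().split()) for a in answers]
    scores = []
    for i, vi in enumerate(vectors):
        others = [cosine(vi, vj) for j, vj in enumerate(vectors) if j != i]
        scores.append(sum(others) / len(others) if others else 0.0)
    return scores


answers = [
    "restart the router and check the cable",
    "check the cable then restart the router",
    "buy a new computer",
]
print(consensus_scores(answers))
```

The third answer shares no terms with the others and therefore receives the lowest score. A consensus score of this kind rewards agreement rather than correctness, so it is at best one signal among several.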

To summarise, previous studies on social question and answer sites have mostly focused on information-centred aspects of user experience, such as answer and question recommendation. However, little is known about the determinants of user experience on such sites from the perspective of users' perceptions of their technical features. Hence, this study aims to identify the factors influencing user experience on such sites and to quantify the strength of their influence. To this end, we draw on Hassenzahl's (2003) user experience framework to create a research model, and we test the model with a survey of Baidu Knows users.

Theoretical basis

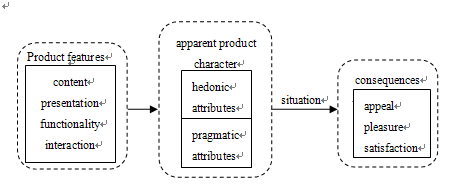

Hassenzahl's user experience model (see Figure 1) posits that users construct product attributes by combining the product's features (for example, presentation, content, functionality and interaction) with personal expectations or standards. Two distinct attribute groups, pragmatic and hedonic attributes (the latter including stimulation and identification), can describe a product's characteristics. Using a product with particular characteristics in a given situation leads to certain consequences, such as experiencing emotions (e.g., satisfaction or pleasure), forming explicit evaluations (e.g., judgments of appeal, beauty and goodness) or engaging in overt behaviour (e.g., approach or avoidance). Pragmatic attributes are connected to the users' need to achieve behavioural goals: a product that allows for effective and efficient goal achievement is perceived as pragmatic. In contrast, hedonic attributes, further divided into stimulation and identification, are primarily related to the users themselves.

Hassenzahl's model has been widely used as a theoretical basis, and some researchers have extended it by incorporating factors such as aesthetics and overall quality. Hassenzahl (2004) himself extended the model by examining the interplay between user-perceived usability (i.e., pragmatic attributes), hedonic attributes (e.g., stimulation, identification), goodness (e.g., satisfaction) and beauty for four different MP3-player skins. Schaik and Ling (2008) investigated how the design characteristics of web pages affect user experience, and explored the influence of user-perceived hedonic and pragmatic qualities on beauty and goodness (overall quality). The study used an experimental design with three factors: principles of screen design, principles for organising information on a web page, and the experience of using a website. De Angeli, Sutcliffe and Hartmann (2006) and Tuch, Roth, Hornbæk, Opwis and Bargas-Avila (2012) explored the effects of the aesthetics and usability of website interfaces. Furthermore, Tuch et al. (2012) investigated users' evaluations of a website before and after using it.

In human-computer interaction research, Hassenzahl's model has been applied to practical contexts such as recommendation systems and e-learning. Zaharias and Poylymenakou (2009) proposed a questionnaire-based usability evaluation method for e-learning applications, which extends current practice by addressing both the cognitive and the affective considerations that may influence e-learning usability. Ozkan and Koseler (2009) proposed a conceptual e-learning assessment framework, the hexagonal e-learning assessment model, which takes a multi-dimensional approach through six dimensions: system quality, service quality, content quality, learner perspective, instructor attitudes and supportive issues. Xiao and Benbasat (2007), Ozok, Fan and Norcio (2010) and Knijnenburg, Willemsen, Gantner, Soncu and Newell (2012) applied Hassenzahl's model to study user experience with recommendation systems. They found that user-perceived quality dimensions of a recommendation system, such as interactivity and interface, affect users' attitudes and behaviour. In addition, Ozok et al. (2010) designed a set of evaluation indices for recommendation systems.

Based on the above literature, we developed a research framework from Hassenzahl's (2003) model, in which the determinants of user experience are divided into two dimensions: a system characteristic dimension and a technical perception dimension. The system characteristic dimension covers interface design, content and interaction, while the technical perception dimension includes perceived usefulness, perceived ease of use and perceived enjoyment.

We believe that Hassenzahl's model is an appropriate theoretical basis for addressing our research question for two reasons. First, the model focuses on the factors that shape users' experience as they interact with a product, and such interaction is pervasive in the social question and answer context: information contribution on these sites relies on both human-computer and human-human interaction, and interaction among users occurs throughout the whole experience. Secondly, the model has been widely used in the contexts of human-computer interaction (e.g., Zaharias and Poylymenakou, 2009; Ozkan and Koseler, 2009; Xiao and Benbasat, 2007; Ozok et al., 2010; Knijnenburg et al., 2012) and human-human interaction (e.g., Hart et al., 2008; Baird and Fisher, 2005).

Research model and hypotheses development

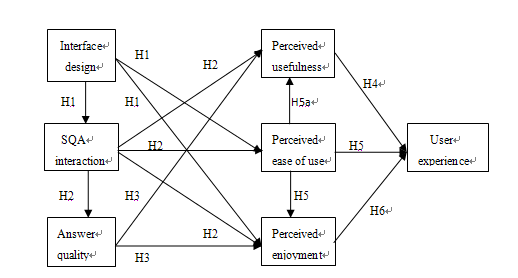

As noted above, the determinants of user experience in a human-computer interaction system can be divided into a system characteristic dimension and a technical perception dimension. In the context of social question and answer sites, Park and Jeong (2004) found that answer quality and interactivity affect user satisfaction. Thong et al. (2002) found that the quality of a system's interface contributes significantly to the usability of digital libraries, and poor interface quality is often cited as a major reason for users not using electronic information retrieval systems (Fox, Hix, Nowell and Brueni, 1993). Based on these findings, we include three factors that represent the system characteristic dimension of social question and answer sites: interface design, social question and answer interaction, and answer quality. According to Hassenzahl (2003), the technical perception dimension includes perceived usefulness and perceived enjoyment. Following Thong et al. (2002), this paper further divides this dimension into perceived ease of use, perceived usefulness and perceived enjoyment. As a result, we hypothesise that user perceptions of interface design, answer quality and interaction on social question and answer sites lead to different experience consequences. The research framework is depicted in Figure 2.

The user experience model proposed by Mahlke and Thüring (2007) indicates that system features such as functionality and interface design affect interaction and determine its major characteristics. The technology acceptance model theorises that the effects of external variables on technology use intention are mediated by perceived ease of use and perceived usefulness (Davis, 1989). Davis, Bagozzi and Warshaw (1992) further proposed that the effects of system variables on use intention are also mediated by perceived enjoyment. Research by Thong et al. (2002) on users' acceptance of digital libraries found that interface characteristics such as terminology, screen design and navigation are critical external variables that affect adoption intention through perceived usefulness and perceived ease of use. For example, a well-designed interface can help users navigate a system by reducing the effort required to identify a particular object on the screen. Zhao and Zhang (2012) pointed out that the interface is the window of interaction between users and the product. In this regard, we assume that a well-designed interface will enhance user-perceived enjoyment, and that a good designer needs to arrange interface elements according to users' cognition and emotions. Therefore, we hypothesise that:

H1a: Interface design for social question and answer sites will have a positive effect on interaction on those sites.

H1b: Interface design will have a positive effect on perceived ease of use.

H1c: Interface design will have a positive effect on perceived enjoyment.

Myers (1991) posited that collaborative learning exercises are learner-centred, enabling learners to share authority and empower themselves. By sharing and communicating knowledge, learners transform individual knowledge into societal knowledge (Robey, 1997). In other words, effective communication and interaction between learners converts implicit knowledge into explicit knowledge, increases the extent of knowledge sharing and revision, and improves knowledge quality and authority. According to Hassenzahl (2003), interaction, as one of the significant product attributes, affects perceived pragmatic (usability) and hedonic (enjoyment) qualities. Mahlke and Thüring (2007) also suggested that interaction characteristics affect perceived instrumental qualities (e.g., ease of use, usefulness) and non-instrumental qualities (e.g., visual aesthetics, enjoyment). The technology acceptance model suggests that perceived ease of use is associated with perceived usefulness (Davis, 1989): the easier it is for users to interact with a system, the more likely they are to find it useful and therefore to intend to use it. Therefore, we hypothesise that:

H2a: Easy interaction in social question and answer sites will have a positive effect on answer quality.

H2b: Easy interaction will have a positive effect on perceived usefulness.

H2c: Easy interaction will have a positive effect on perceived ease of use.

H2d: Easy interaction will have a positive effect on perceived enjoyment.

Yu (2006) indicated that if these services provide information superior to that of other sources, for example by improving how users feel about the usefulness of the information they get, users' overall satisfaction will be affected. Park and Jeong (2004) found that answer quality is the most important reason for users choosing a site, and that, compared with search engine results, the answers these sites provide are more useful for solving users' problems. Hassenzahl's (2003) model suggests that the content of a product is associated with perceived hedonic qualities; that is, high-quality answers can improve users' knowledge and their confidence in the answers, enhancing perceived enjoyment. Therefore, we hypothesise that:

H3a: High quality answers on social question and answer sites will have a positive effect on perceived usefulness.

H3b: High quality answers will have a positive effect on perceived enjoyment.

The user acceptance framework for information systems proposed by Davis et al. (1992) indicates that perceived ease of use can affect perceived usefulness and perceived enjoyment. A study of the user experience of software systems by Hassenzahl, Platz, Burmester and Lehner (2000) proposed that ergonomic quality and hedonic quality affect user experience and evaluation; ergonomic quality mainly refers to usefulness and ease of use, whereas hedonic quality mainly includes creativity and fun. Mahlke (2002) found that the factors affecting website user experience include perceived ease of use, perceived usefulness, perceived enjoyment and perceived aesthetics. The website user experience model proposed by Schaik and Ling (2008) theorises that perceived pragmatic quality and hedonic quality affect users' overall evaluation (goodness). Park, Han, Kim, Oh and Moon (2013) found that user experience comprises perceived usability, user emotion, user value and overall user experience, and that overall experience is affected by the other three components. Therefore, we hypothesise that:

H4: Perceived usefulness will have a positive effect on user experience of social question and answer sites.

H5a: Perceived ease of use will have a positive effect on perceived usefulness.

H5b: Perceived ease of use will have a positive effect on perceived enjoyment.

H5c: Perceived ease of use will have a positive effect on user experience.

H6: Perceived enjoyment will have a positive effect on user experience.
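Together, hypotheses H1a to H6 define a recursive path model. As an illustrative sketch (not part of the authors' analysis, which used LISREL; the abbreviations follow Table 1), the hypotheses can be encoded as a directed graph to confirm that the model has no feedback loops and that the three system characteristics reach user experience only through the perception constructs.

```python
# Hypothesised structural paths (cause -> effect), transcribed from H1a-H6.
# ID = interface design, SQAI = SQA interaction, AQ = answer quality,
# PU = perceived usefulness, PEOU = perceived ease of use,
# PE = perceived enjoyment, UX = user experience.
HYPOTHESES = {
    "H1a": ("ID", "SQAI"),
    "H1b": ("ID", "PEOU"),
    "H1c": ("ID", "PE"),
    "H2a": ("SQAI", "AQ"),
    "H2b": ("SQAI", "PU"),
    "H2c": ("SQAI", "PEOU"),
    "H2d": ("SQAI", "PE"),
    "H3a": ("AQ", "PU"),
    "H3b": ("AQ", "PE"),
    "H4": ("PU", "UX"),
    "H5a": ("PEOU", "PU"),
    "H5b": ("PEOU", "PE"),
    "H5c": ("PEOU", "UX"),
    "H6": ("PE", "UX"),
}

# Adjacency list: construct -> constructs it is hypothesised to affect.
SUCCESSORS: dict[str, list[str]] = {}
for cause, effect in HYPOTHESES.values():
    SUCCESSORS.setdefault(cause, []).append(effect)


def is_acyclic() -> bool:
    """Kahn's algorithm: True if the path model has no feedback loops."""
    nodes = {n for edge in HYPOTHESES.values() for n in edge}
    indegree = {n: 0 for n in nodes}
    for _, effect in HYPOTHESES.values():
        indegree[effect] += 1
    ready = [n for n in nodes if indegree[n] == 0]
    removed = 0
    while ready:
        node = ready.pop()
        removed += 1
        for nxt in SUCCESSORS.get(node, []):
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    return removed == len(nodes)


def paths(source: str, target: str) -> list[list[str]]:
    """All directed paths from source to target (assumes an acyclic model)."""
    if source == target:
        return [[target]]
    return [[source] + tail
            for nxt in SUCCESSORS.get(source, [])
            for tail in paths(nxt, target)]


for path in paths("ID", "UX"):
    print(" -> ".join(path))
```

Under these hypotheses there are eleven distinct paths from interface design to user experience, and each one enters UX through PU, PEOU or PE, which is the mediation structure the model assumes.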

Method

Research setting and data collection

Based on the research framework proposed above, we developed a survey questionnaire and chose Baidu Knows as the research site. The framework includes seven latent variables, each measured by multiple question items. Most of the measurement items were adopted from previous studies (see Table 1), and some were adjusted to better suit the research context. Each item was measured on a five-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree).

The items measuring interface design were adapted from Thong, Hong and Tam (2002); we enriched the item set by adding an item concerning screen appearance. As there is no existing measurement for interaction on social question and answer sites, we developed one by modifying the approach taken by Paechter et al. (2010), adapting their items on interaction among students in an e-learning system into items on the experience of interacting with peer users. The three items for social question and answer interaction were thus derived from the work of Paechter et al. (2010). The three items for answer quality were adopted from Shah et al. (2010) and Kim and Oh (2009); we chose this measurement by summarising previous findings on the criteria users apply when evaluating answers on these sites (Shah et al., 2010; Kim and Oh, 2009; Kim, Oh and Oh, 2007; Zhu, Bernhard and Gurevych, 2009). The three items for perceived usefulness and the three items for perceived ease of use were adopted from Davis (1989) and Schaik and Ling (2008), and adapted to the main characteristics of social question and answer sites (e.g., information sharing and interaction orientation). For perceived enjoyment, we developed the measurement by adapting items from Davis et al. (1992) and Schaik and Ling (2008) to the context of these sites. The two items for user experience were adopted from Park et al. (2013); we chose this measurement because it reflects two main consequences of user experience: emotions and overt behaviour.

| Construct | Measurement items | Cronbach's alpha | Source |
|---|---|---|---|
| Interface design (ID) | The screen appearance of Baidu Knows is clean and brief | 0.78 | Thong et al., 2002 |
| | Layout of Baidu Knows screens is clear and consistent | | |
| | The sequence of screens on Baidu Knows is clear and accurate | | |
| Answer quality (AQ) | The answers in Baidu Knows are relevant to the question | 0.78 | Shah et al., 2010; Kim and Oh, 2009 |
| | The answers in Baidu Knows are convincing | | |
| | The answers in Baidu Knows are professional | | |
| SQA interaction (SQAI) | There are ample opportunities in Baidu Knows to interact with other users | 0.83 | Paechter et al., 2010 |
| | Interaction between me and other users in Baidu Knows is clear and understandable | | |
| | The communication tools in Baidu Knows are various | | |
| Perceived usefulness (PU) | My problems are solved by using Baidu Knows | 0.79 | Davis, 1989; Schaik and Ling, 2008 |
| | My work efficiency is improved by using Baidu Knows | | |
| | I think Baidu Knows is useful to me | | |
| Perceived ease of use (PEOU) | Learning to use Baidu Knows is easy for me | 0.90 | Davis, 1989; Schaik and Ling, 2008 |
| | It would be easy for me to become skilful at using Baidu Knows | | |
| | I think Baidu Knows is easy to use | | |
| Perceived enjoyment (PE) | Communicating with others in Baidu Knows makes me feel happy | 0.85 | Davis et al., 1992; Schaik and Ling, 2008 |
| | Learning in Baidu Knows brings me a lot of fun | | |
| | I think using Baidu Knows is a kind of enjoyment | | |
| User experience (UX) | The experience in Baidu Knows is satisfactory | 0.74 | Park et al., 2013 |
| | I will continue to use Baidu Knows in the future | | |

An online survey was conducted between July 23, 2013 and August 10, 2013 on Questionnaire Star (http://www.sojump.com/), a specialised online questionnaire website. Baidu Knows covers fourteen topic categories, such as art, lifestyle and sports, and users in different categories may differ in their characteristics and user experiences. Hence, to sample randomly and avoid sampling bias, we sent 2100 email invitations to Baidu Knows users, 150 for each category. A total of 218 valid questionnaires were returned, a response rate of 10.4%. The respondents' characteristics are summarised in Table 2: similar numbers of males and females participated, and the majority of respondents were between 21 and 30 years of age. Nearly 95% of the respondents had at least a university education, and about 60% had been using Baidu Knows for over three years.
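Because the invitation design allocates the same number of invitations to every topic category, the total and the response rate follow directly from the figures given above. The sketch below only restates those figures; nothing else is assumed.

```python
# Figures restated from the text above.
CATEGORIES = 14                # Baidu Knows topic categories
INVITATIONS_PER_CATEGORY = 150
VALID_RESPONSES = 218

invitations = CATEGORIES * INVITATIONS_PER_CATEGORY  # total invitations sent
response_rate = VALID_RESPONSES / invitations         # valid responses / invitations
print(f"{invitations} invitations, response rate {response_rate:.1%}")
```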

| Characteristics | Category | Frequency (N = 218) | Percentage |
|---|---|---|---|
| Sex | Male | 102 | 46.8% |
| | Female | 116 | 53.2% |
| Age | Less than 20 | 33 | 15.1% |
| | 21-30 | 179 | 82.1% |
| | 31-40 | 4 | 1.8% |
| | Over 40 | 2 | 0.9% |
| Education | High school or lower | 5 | 2.3% |
| | Technical school/Junior school | 7 | 3.2% |
| | University | 132 | 60.6% |
| | Postgraduate or higher | 74 | 33.9% |
| Using time | Less than 1 year | 27 | 12.4% |
| | 1-2 years | 29 | 13.3% |
| | 2-3 years | 31 | 14.2% |
| | Over 3 years | 131 | 60.1% |
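As a consistency check on Table 2, the reported percentages can be recomputed from the frequencies. The sketch below also derives the share of respondents with a university education or higher; the data are transcribed from the table, and the dictionary layout is only an illustrative choice.

```python
# Frequencies transcribed from Table 2 (N = 218).
N = 218
TABLE_2 = {
    "Sex": {"Male": 102, "Female": 116},
    "Age": {"Less than 20": 33, "21-30": 179, "31-40": 4, "Over 40": 2},
    "Education": {
        "High school or lower": 5,
        "Technical school/Junior school": 7,
        "University": 132,
        "Postgraduate or higher": 74,
    },
    "Using time": {
        "Less than 1 year": 27,
        "1-2 years": 29,
        "2-3 years": 31,
        "Over 3 years": 131,
    },
}


def percentages(counts: dict[str, int]) -> dict[str, float]:
    """Share of the N respondents in each category, in percent to one decimal."""
    return {category: round(100 * count / N, 1) for category, count in counts.items()}


for characteristic, counts in TABLE_2.items():
    # Every characteristic should account for all respondents.
    assert sum(counts.values()) == N, characteristic
    print(characteristic, percentages(counts))

education = TABLE_2["Education"]
degree_share = (education["University"] + education["Postgraduate or higher"]) / N
print(f"University or higher: {degree_share:.1%}")
```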

Data analysis

We used LISREL 8.7 (for structural equation modelling) and SPSS 20.0 to test the research model, following Anderson and Gerbing's (1988) two-step approach of first assessing the measurement model and then the structural model.

Measurement model

We assessed reliability, in the sense of internal consistency, using Cronbach's alpha and composite reliability (CR). Both should exceed the threshold of 0.7 to ensure the internal consistency of the indicators (Fornell and Larcker, 1981). As shown in Table 1 and Table 3, the Cronbach's alpha and composite reliability values for every construct exceed 0.7, confirming the internal consistency of the indicators.
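The two reliability statistics can be sketched with their standard formulas. This is a minimal illustration, not the LISREL/SPSS computation used in the study; the responses and loadings below are hypothetical, and composite reliability assumes standardised loadings with each indicator's error variance taken as 1 minus its squared loading.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for one construct.

    items[r][i] is respondent r's score on item i (e.g. 1-5 Likert points).
    alpha = k / (k - 1) * (1 - sum of item variances / variance of summed score)
    """
    k = len(items[0])
    item_var_sum = sum(variance(column) for column in zip(*items))
    total_var = variance([sum(row) for row in items])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def composite_reliability(loadings: list[float]) -> float:
    """Composite reliability from standardised factor loadings.

    CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    with each indicator's error variance taken as 1 - loading^2.
    """
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - lam * lam for lam in loadings)
    return squared_sum / (squared_sum + error_sum)


# Hypothetical responses of five respondents to a three-item construct.
responses = [[4, 4, 5], [3, 3, 3], [5, 4, 5], [2, 3, 2], [4, 5, 4]]
alpha = cronbach_alpha(responses)
cr = composite_reliability([0.80, 0.75, 0.70])  # hypothetical loadings
print(f"alpha = {alpha:.2f}, CR = {cr:.2f}, both above 0.7: {alpha > 0.7 and cr > 0.7}")
```

Alpha is computed from raw item scores, whereas CR needs the loadings estimated by the measurement model, which is why the two are reported from different tables.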

Validity comprises convergent validity and discriminant validity. Convergent validity was assessed through factor loadings and average variance extracted (AVE), both of which should exceed the recommended level of 0.5 (Chin, 1998; Hair, Black, Babin and Anderson, 2010). As shown in Table 3 and Table 4, all factor loadings and AVE values are above the threshold of 0.5, which means the measurement items demonstrate good convergent validity. Discriminant validity was assessed by comparing the construct correlations with the square roots of the AVEs (Fornell and Larcker, 1981). As Table 3 shows, the square root of the AVE for each construct (diagonal elements) is larger than its correlations with the other constructs (off-diagonal elements), which demonstrates discriminant validity. However, some correlations between constructs exceed 0.6. To address the concern about these high correlations, we checked the variance inflation factor (VIF), which measures multicollinearity among the independent variables. The VIF values for all the independent variables are below the threshold of 3 (Petter, Straub and Rai, 2007), indicating that the relatively high correlations are acceptable. To assess common method bias, we conducted an exploratory factor analysis following Harman's one-factor test (Podsakoff and Organ, 1986). No single factor accounted for the majority of the variance across all items, so we concluded that common method bias is unlikely to pose a serious threat to the internal validity of this study.
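As an illustration of the multicollinearity check, the sketch below computes VIFs for the three constructs hypothesised to predict user experience (PU, PE and PEOU), using their correlations from Table 3 as a stand-in for the data the authors analysed. It relies on the identity that each VIF, 1 / (1 − R²), equals the corresponding diagonal element of the inverse of the predictors' correlation matrix. The helper names are hypothetical, and the VIFs the authors computed in their own software may differ.

```python
def invert(matrix):
    """Gauss-Jordan inverse of a small square matrix, with partial pivoting."""
    n = len(matrix)
    # Augment each row with the matching row of the identity matrix
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]  # scale pivot row so the pivot is 1
        for r in range(n):
            if r != col:  # eliminate this column from every other row
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(corr):
    """VIF of each predictor = diagonal of the inverse correlation matrix,
    i.e. 1 / (1 - R^2) from regressing that predictor on the others."""
    inv = invert(corr)
    return [inv[i][i] for i in range(len(corr))]


# Predictors of user experience, with correlations taken from Table 3
predictors = ["PU", "PE", "PEOU"]
corr = [
    [1.00, 0.44, 0.57],
    [0.44, 1.00, 0.42],
    [0.57, 0.42, 1.00],
]
for name, v in zip(predictors, vifs(corr)):
    print(name, round(v, 2), "below 3" if v < 3 else "3 or above")
```

Computed this way, all three VIFs fall between roughly 1.3 and 1.6, well under the threshold of 3.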

Table 3: Composite reliability (CR), average variance extracted (AVE) and correlations between constructs (diagonal: square root of AVE)

| Construct | CR | AVE | UX | PE | PU | PEOU | SQAI | AQ | ID |
|---|---|---|---|---|---|---|---|---|---|
| UX | 0.71 | 0.55 | 0.74 | | | | | | |
| PE | 0.82 | 0.60 | 0.67 | 0.77 | | | | | |
| PU | 0.89 | 0.73 | 0.71 | 0.44 | 0.75 | | | | |
| PEOU | 0.80 | 0.57 | 0.58 | 0.42 | 0.57 | 0.85 | | | |
| SQAI | 0.85 | 0.53 | 0.53 | 0.67 | 0.43 | 0.44 | 0.81 | | |
| AQ | 0.83 | 0.49 | 0.49 | 0.59 | 0.49 | 0.22 | 0.50 | 0.79 | |
| ID | 0.85 | 0.34 | 0.34 | 0.42 | 0.25 | 0.38 | 0.31 | 0.16 | 0.81 |
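The Fornell-Larcker comparison described above can be expressed as a short check over the lower-triangular matrix in Table 3. This is a minimal sketch: the matrix values are copied from the table, and the function name is hypothetical. A construct passes when its diagonal value (the square root of its AVE) exceeds every correlation in its row and its column.

```python
# Lower-triangular matrix from Table 3: diagonal = sqrt(AVE), off-diagonal = correlations
constructs = ["UX", "PE", "PU", "PEOU", "SQAI", "AQ", "ID"]
lower = [
    [0.74],
    [0.67, 0.77],
    [0.71, 0.44, 0.75],
    [0.58, 0.42, 0.57, 0.85],
    [0.53, 0.67, 0.43, 0.44, 0.81],
    [0.49, 0.59, 0.49, 0.22, 0.50, 0.79],
    [0.34, 0.42, 0.25, 0.38, 0.31, 0.16, 0.81],
]


def fornell_larcker(names, lower):
    """Map each construct to True if sqrt(AVE) exceeds all of its correlations."""
    n = len(lower)
    result = {}
    for i in range(n):
        diag = lower[i][i]
        row = lower[i][:i]                            # correlations with earlier constructs
        col = [lower[j][i] for j in range(i + 1, n)]  # correlations with later constructs
        result[names[i]] = all(diag > r for r in row + col)
    return result


print(fornell_larcker(constructs, lower))
```

Because the matrix is stored as a lower triangle, each construct's correlations are split between its own row (constructs listed before it) and its column (constructs listed after it), so the check has to read both.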

Table 4: Factor loadings of the measurement items

| Item | ID | AQ | SQAI | PU | PEOU | PE | UX |
|---|---|---|---|---|---|---|---|
| ID1 | 0.844 | 0.005 | 0.144 | 0.005 | 0.015 | 0.052 | 0.108 |
| ID2 | 0.809 | 0.063 | 0.106 | 0.146 | 0.128 | 0.058 | 0.158 |
| ID3 | 0.756 | 0.117 | -0.033 | 0.153 | 0.170 | 0.223 | 0.013 |
| AQ1 | 0.077 | 0.698 | 0.259 | 0.195 | -0.012 | 0.062 | 0.229 |
| AQ2 | 0.068 | 0.827 | 0.068 | 0.153 | 0.067 | 0.215 | 0.020 |
| AQ3 | 0.038 | 0.841 | 0.082 | 0.050 | 0.114 | 0.149 | 0.025 |
| SQAI1 | 0.125 | 0.098 | 0.839 | 0.104 | 0.002 | 0.177 | 0.083 |
| SQAI2 | 0.034 | 0.103 | 0.839 | 0.146 | 0.162 | 0.204 | 0.044 |
| SQAI3 | 0.075 | 0.171 | 0.736 | 0.024 | 0.268 | 0.145 | 0.126 |
| PU1 | 0.115 | 0.267 | 0.047 | 0.705 | 0.189 | 0.098 | 0.249 |
| PU2 | 0.070 | 0.096 | 0.091 | 0.817 | 0.145 | 0.132 | 0.011 |
| PU3 | 0.176 | 0.095 | 0.167 | 0.741 | 0.289 | 0.126 | 0.252 |
| PEOU1 | 0.088 | 0.048 | 0.111 | 0.245 | 0.816 | 0.135 | 0.114 |
| PEOU2 | 0.150 | 0.051 | 0.171 | 0.134 | 0.873 | 0.058 | 0.116 |
| PEOU3 | 0.086 | 0.101 | 0.112 | 0.168 | 0.869 | 0.147 | 0.141 |
| PE1 | 0.167 | 0.247 | 0.369 | 0.013 | 0.123 | 0.690 | 0.150 |
| PE2 | 0.108 | 0.201 | 0.172 | 0.197 | 0.133 | 0.823 | 0.087 |
| PE3 | 0.130 | 0.115 | 0.178 | 0.156 | 0.130 | 0.810 | 0.225 |
| UX1 | 0.239 | 0.257 | 0.120 | 0.162 | 0.199 | 0.248 | 0.728 |
| UX2 | 0.130 | 0.030 | 0.162 | 0.299 | 0.241 | 0.222 | 0.746 |
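The loading criterion can be checked item by item against Table 4. The sketch below assumes that an item's intended construct is its name with the trailing digits removed (so PEOU2 belongs to PEOU), and it tests two conditions: the item loads at least 0.5 on its own construct, and that loading is its largest. The names used are illustrative only.

```python
COLUMNS = ["ID", "AQ", "SQAI", "PU", "PEOU", "PE", "UX"]
LOADINGS = {  # copied from Table 4
    "ID1": [0.844, 0.005, 0.144, 0.005, 0.015, 0.052, 0.108],
    "ID2": [0.809, 0.063, 0.106, 0.146, 0.128, 0.058, 0.158],
    "ID3": [0.756, 0.117, -0.033, 0.153, 0.170, 0.223, 0.013],
    "AQ1": [0.077, 0.698, 0.259, 0.195, -0.012, 0.062, 0.229],
    "AQ2": [0.068, 0.827, 0.068, 0.153, 0.067, 0.215, 0.020],
    "AQ3": [0.038, 0.841, 0.082, 0.050, 0.114, 0.149, 0.025],
    "SQAI1": [0.125, 0.098, 0.839, 0.104, 0.002, 0.177, 0.083],
    "SQAI2": [0.034, 0.103, 0.839, 0.146, 0.162, 0.204, 0.044],
    "SQAI3": [0.075, 0.171, 0.736, 0.024, 0.268, 0.145, 0.126],
    "PU1": [0.115, 0.267, 0.047, 0.705, 0.189, 0.098, 0.249],
    "PU2": [0.070, 0.096, 0.091, 0.817, 0.145, 0.132, 0.011],
    "PU3": [0.176, 0.095, 0.167, 0.741, 0.289, 0.126, 0.252],
    "PEOU1": [0.088, 0.048, 0.111, 0.245, 0.816, 0.135, 0.114],
    "PEOU2": [0.150, 0.051, 0.171, 0.134, 0.873, 0.058, 0.116],
    "PEOU3": [0.086, 0.101, 0.112, 0.168, 0.869, 0.147, 0.141],
    "PE1": [0.167, 0.247, 0.369, 0.013, 0.123, 0.690, 0.150],
    "PE2": [0.108, 0.201, 0.172, 0.197, 0.133, 0.823, 0.087],
    "PE3": [0.130, 0.115, 0.178, 0.156, 0.130, 0.810, 0.225],
    "UX1": [0.239, 0.257, 0.120, 0.162, 0.199, 0.248, 0.728],
    "UX2": [0.130, 0.030, 0.162, 0.299, 0.241, 0.222, 0.746],
}


def check_item(item, row, threshold=0.5):
    """True if the item loads >= threshold on its own construct and highest there."""
    construct = item.rstrip("0123456789")  # e.g. "PEOU2" -> "PEOU"
    own = row[COLUMNS.index(construct)]
    return own >= threshold and own == max(row)


results = {item: check_item(item, row) for item, row in LOADINGS.items()}
print(all(results.values()))
```

Requiring the own-construct loading to be the largest in the row adds a simple cross-loading check on top of the 0.5 threshold stated in the text.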

Structural model

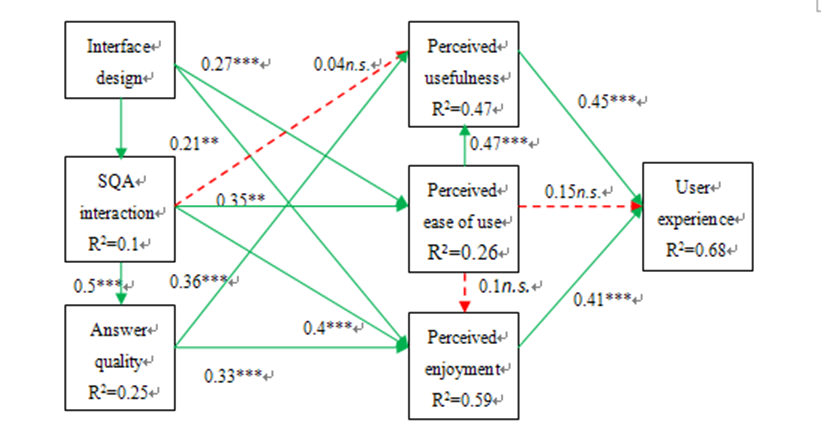

Figure 3 and Table 5 present the structural model results, with the coefficient for each path. All hypotheses were supported except H2b, H5b and H5c. First, we examined the factors directly affecting user experience on social question and answer sites. Consistent with expectations, perceived usefulness and perceived enjoyment have a significant positive influence on user experience, so hypotheses H4 (β=0.45, t=4.8) and H6 (β=0.41, t=5.01) are supported. However, perceived ease of use has no significant effect on either user experience or perceived enjoyment, so hypotheses H5c (β=0.15, t=1.85) and H5b (β=0.1, t=1.31) are not supported. Perceived ease of use is significantly related to perceived usefulness, so H5a (β=0.47, t=5.82) is supported.

Regarding the external factors affecting user experience, interface design is significantly related to interaction on social question and answer sites, perceived ease of use and perceived enjoyment. Therefore, hypotheses H1a (β=0.31, t=2.84), H1b (β=0.27, t=3.42) and H1c (β=0.21, t=3.80) are supported. Interaction has a significant positive influence on answer quality, perceived ease of use and perceived enjoyment. Therefore, hypotheses H2a (β=0.5, t=5.5), H2c (β=0.35, t=4.37) and H2d (β=0.4, t=4.33) are supported. Contrary to our expectations, interaction is not significantly related to perceived usefulness, so hypothesis H2b (β=0.04, t=0.47) is not supported. Answer quality has a significant positive effect on perceived usefulness and perceived enjoyment. Thus, hypotheses H3a (β=0.36, t=3.80) and H3b (β=0.33, t=3.76) are supported.

Table 5: Results of hypothesis testing

| Hypothesis | Relationship | Beta | t-value | Supported? |
|---|---|---|---|---|
| H1a | Interface design towards user interaction | 0.31 | 2.84* | Yes |
| H1b | Interface design towards perceived ease of use | 0.27 | 3.42* | Yes |
| H1c | Interface design towards perceived enjoyment | 0.21 | 3.80** | Yes |
| H2a | User interaction towards answer quality | 0.50 | 5.50* | Yes |
| H2b | User interaction towards perceived usefulness | 0.04 | 0.47 | No |
| H2c | User interaction towards perceived ease of use | 0.35 | 4.37*** | Yes |
| H2d | User interaction towards perceived enjoyment | 0.40 | 4.33* | Yes |
| H3a | Answer quality towards perceived usefulness | 0.36 | 3.80* | Yes |
| H3b | Answer quality towards perceived enjoyment | 0.33 | 3.76* | Yes |
| H4 | Perceived usefulness towards user experience | 0.45 | 4.80*** | Yes |
| H5a | Perceived ease of use towards perceived usefulness | 0.47 | 5.82* | Yes |
| H5b | Perceived ease of use towards perceived enjoyment | 0.10 | 1.31 | No |
| H5c | Perceived ease of use towards user experience | 0.15 | 1.85 | No |
| H6 | Perceived enjoyment towards user experience | 0.41 | 5.01* | Yes |
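The supported/not supported decisions in Table 5 follow from comparing each t-value with a critical value. The sketch below assumes the conventional large-sample, two-tailed normal critical values (1.960, 2.576 and 3.291 for the 0.05, 0.01 and 0.001 levels). It only reports those conventional thresholds and is not a reconstruction of the asterisk notation in Table 5. The function name is hypothetical.

```python
# Two-tailed critical values of the standard normal distribution, strictest first
CRITICAL = [(3.291, 0.001), (2.576, 0.01), (1.960, 0.05)]


def significance(t):
    """Smallest conventional alpha level at which |t| is significant, else None."""
    for crit, alpha in CRITICAL:
        if abs(t) >= crit:
            return alpha
    return None


# t-values from Table 5
paths = {
    "H1a": 2.84, "H1b": 3.42, "H1c": 3.80, "H2a": 5.50, "H2b": 0.47,
    "H2c": 4.37, "H2d": 4.33, "H3a": 3.80, "H3b": 3.76, "H4": 4.80,
    "H5a": 5.82, "H5b": 1.31, "H5c": 1.85, "H6": 5.01,
}
for h, t in paths.items():
    level = significance(t)
    print(h, t, "supported" if level else "not supported", level)
```

Under these thresholds every path with |t| ≥ 1.96 counts as supported, which reproduces the Yes/No column: only H2b, H5b and H5c fall short, with H5c (t=1.85) closest to the 0.05 boundary.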

Discussion and implications

Based on previous studies of user experience and social question and answer sites, this paper constructed a hypothesised research framework of the determinants of user experience on such sites and tested it in the context of Baidu Knows. The results offer several new insights, from which we derive suggestions for improving the sites' services.

The results indicate that interface design has a significant influence on interaction. In other words, interface design plays an important role in enabling effective and convenient interaction among a site's users and between users and the system. Users are more likely to communicate with each other through the site if the interface is clear and concise. This finding adds to previous research on the relationship between interface design and human-computer interaction (Mahlke et al., 2007). Site designers should therefore pay substantial attention to effective interface design.

The interface design of a site is found to affect users' perceived ease of use and perceived enjoyment both directly and indirectly, through its effect on site interaction. This highlights the importance of a rational interface and is consistent with the studies by Thong et al. (2002) and Davis (1992), which suggest that users find a system easy to use and enjoyable if its interface has a well-designed screen appearance, layout and screen sequence.

The results also show that interaction on these sites is significantly related to users' perceived ease of use, perceived enjoyment and answer quality. This is in line with earlier studies by Mahlke et al. (2007), Myers (1991) and Knijnenburg (2012). With ample interaction opportunities and a variety of communication tools, users should be able to communicate with the system and with other users effortlessly. These interaction functions should also offer hedonic value and enhance users' perceived enjoyment.

Interaction is found to have no significant effect on perceived usefulness, which is inconsistent with the results of previous studies by Hassenzahl (2003) and Mahlke et al. (2007). This difference may partly be attributed to our study context. Whereas previous studies have mostly focused on how users interact with computer systems, our study treated interaction between users as an important dimension of interaction. Zhang and Yuan's (2009) comparison of five such sites in China found that users of Baidu Knows receive more than four useful answers per question on average. This means that in most cases questioners can obtain the required information by asking once, without any further interaction with the responders. Interaction may therefore not directly increase a site's perceived usefulness. However, we found an indirect relationship between interaction and perceived usefulness, mediated by perceived ease of use: interaction improves the feeling of ease of use, which in turn enhances the perception of usefulness.
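The size of such an indirect path can be illustrated with the product-of-coefficients approach and a Sobel-type z-test. This is a sketch under stated assumptions, not an analysis reported in the study: it backs out each standard error as β/t from Table 5, which treats the reported t-values as coefficient over standard error, and it uses the Sobel test where bootstrapped confidence intervals are often preferred. The function name is hypothetical.

```python
import math


def indirect_effect(a, t_a, b, t_b):
    """Indirect effect a*b and an approximate Sobel z.

    Assumes SE = beta / t for both paths. Under that assumption
    z = a*b / sqrt(b^2 * SE_a^2 + a^2 * SE_b^2) reduces to 1 / sqrt(1/t_a^2 + 1/t_b^2).
    """
    se_a, se_b = a / t_a, b / t_b
    ab = a * b
    z = ab / math.sqrt(b * b * se_a * se_a + a * a * se_b * se_b)
    return ab, z


# Interaction -> perceived ease of use (H2c) -> perceived usefulness (H5a)
ab1, z1 = indirect_effect(0.35, 4.37, 0.47, 5.82)
# Perceived ease of use -> perceived usefulness (H5a) -> user experience (H4)
ab2, z2 = indirect_effect(0.47, 5.82, 0.45, 4.80)
print(round(ab1, 4), round(z1, 2))
print(round(ab2, 4), round(z2, 2))
```

Under these assumptions the interaction path has an indirect effect of about 0.16 (z ≈ 3.49), and the same calculation applied to the ease of use → usefulness → user experience path gives about 0.21 (z ≈ 3.70). Both exceed 1.96, which is consistent with the mediated relationships described in the discussion.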

The study found that answer quality on a site significantly affects users' perceived usefulness and perceived enjoyment. This is consistent with the studies by Kim et al. (2009) and Park et al. (2004), which suggest that users' evaluation of answer quality relates to both social-emotional and cognitive value. High-quality answers not only help users solve problems effectively but also make using the site enjoyable. Sites should therefore establish strict review mechanisms that eliminate low-quality answers and integrate high-quality ones. In addition, to improve answer quality, site owners could cooperate with research organizations willing to share human resources, thereby expanding the scope and level of the community's expertise (Kim et al., 2009).

Perceived usefulness and perceived enjoyment are found to be significantly related to user experience. This result is in line with the findings of previous studies (Xiao et al., 2007; Schaik et al., 2008; Hassenzahl, 2003) and suggests that helping users solve problems is the principal value of these sites, as the basis of an information-sharing community, and the key to enhancing users' information experience. Users are more likely to consider the sites useful and to be satisfied if their problems are solved and their work efficiency improves. Furthermore, their experience of use improves when learning is enjoyable, for instance when they gain identity and friendship through information sharing (Hassenzahl, 2003). This result highlights the importance of useful information content and systems as well as of entertainment activities on these sites. In other words, both the utilitarian and the hedonic value of a site should be taken into consideration when designing it to improve user experience.

Contrary to expectations, perceived ease of use was found to have no significant direct impact on either perceived enjoyment or user experience. This may be attributed to the characteristics of our respondents. Approximately 75% of the respondents in our research have a bachelor's degree or higher, and 84% use more than one site, for example Zhihu and Yahoo. Most social question and answer sites in China have a similar, easy navigation process (iResearch, 2010). Consequently, the ease of use of Baidu Knows may not necessarily improve the user experience, since other sites are equally easy to use, and users may not evaluate their experience of use directly on the basis of ease of use. Given the similarity of system operation processes across most such sites, the usefulness and enjoyment of using a site have become the major, direct determinants when users evaluate their experience of the system. However, we found a marginal, indirect influence of perceived ease of use on user experience, mediated by perceived usefulness. The significant relationship between perceived ease of use and perceived usefulness is consistent with the studies of Davis (1989) and Xiao et al. (2007).

The findings above identify the determinants of user experience and provide a useful reference for improving that experience in Baidu Knows and other such sites. This study has important theoretical implications for research on these sites, as well as for user experience theories in the context of both issue-oriented and social-oriented interaction research. Whereas little previous research has focused on the user experience of these sites, this study identified its determinants by examining the influence of both system characteristics and users' perceptions of the technology. The paper also offers practical implications for managers by showing that interface design, user interaction and answer quality enhance users' overall perceptions of usefulness, ease of use and enjoyment. Managers should therefore pay attention to interface design in order to improve interaction on such sites.

The paper has a number of limitations. First, the research model explains 68% of the variance in user experience, 47% of the variance in perceived usefulness, 26% in perceived ease of use and 59% in perceived enjoyment. Other factors affecting users' perceptions and experience of use, not included in our research model, therefore remain to be identified. Second, this study considered only one site, leaving the features of other such sites relatively unexplored. Future studies could address these limitations.

Conclusion and future research

Social question and answer services represent a new generation of interactive information service. However, such sites are still at an early stage and face many problems, such as low-quality answers and low participation rates. Studies of the user experience of these sites are also limited. This study therefore contributes to research by identifying the determinants of user experience in terms of system characteristics and technology perception factors. Our results suggest that low answer quality and low participation rates reduce users' perceived usefulness and perceived enjoyment, and hence their satisfaction with the service. These findings not only improve our understanding of user experience on such sites but also provide a useful reference for site managers wishing to improve their services.

In addition to the factors identified in our research framework, future research should consider factors such as resource organization and user-perceived aesthetics. As these services develop and the number of questions and answers grows, it becomes increasingly important that the sites' massive resources are well organized, so that users can find specific answers in a timely and effective way. Furthermore, the aesthetics of user interfaces, for example their influence on trust and credibility, is a topic of major interest in user experience research and merits study in this context.

Acknowledgements

This research is supported in part by the Chinese National Funds of Social Science (No. 14BTQ044), the Wuhan University Academic Development Plan for Scholars after 1970s (project: Research on Internet User Behaviour) and the Wuhan University Postgraduate English Course on Internet User Behaviour.

About the authors

Shengli Deng (corresponding author) is a professor and deputy director of the Department of Information Management, School of Information Management at Wuhan University. He received his B.S. and M.S. in Information Management from Central China Normal University and Ph.D. in Information Science from Wuhan University. His research interests include information behaviour, information interaction, and information services. He can be contacted at: victorydc@sina.com

Yuling Fang is a postgraduate student of library and information science, School of Information Management at Wuhan University. She received her B.S. in Information Management from Central China Normal University. Her research interests include user experience and information service. She can be contacted at: 1033005969@qq.com

Yong Liu is an assistant professor at the Department of Information and Service Economy at Aalto University School of Business. He received his Ph.D. in Science of Business Administration and Economics from Åbo Akademi University. His research interests include information systems user behaviour, e-commerce, social networks and big data social science. He can be contacted at: Yong.liu@aalto.fi

Hongxiu Li is a post-doctoral researcher at Turku School of Economics, University of Turku, Finland. She received her Ph.D. from Turku School of Economics, University of Turku, Finland. Her research interests include information system adoption and post-adoption behaviour in the fields of e-commerce, e-services and mobile services in different research contexts. She can be contacted at: hongli@utu.fi