Survey on inadequate and omitted citations in manuscripts: a precursory study in identification of tasks for a literature review and manuscript writing assistive system

Aravind Sesagiri Raamkumar, Schubert Foo, and Natalie Pang

Introduction

The scientific publication lifecycle encompasses the key activities in academic research (Björk and Hedlund, 2003). During a literature review, researchers identify research gaps by synthesising prior studies. Subsequently, a research framework is devised, data is collected and required analyses are performed (Levy and Ellis, 2006). The lifecycle completes a single iteration with the publication of study results, followed by the next set of related studies. The sequence of activities where researchers seek information is outlined in the scientific information seeking model (Ellis and Haugan, 1997). The execution complexity of all these activities clearly differentiates experienced researchers from beginners (Karlsson et al., 2012). Past research has sought to understand the issues in each activity of this lifecycle so as to ease its complexity for novice researchers. Two kinds of interventions, which are not mutually exclusive, have been proposed: process-based and technology-oriented.

The importance of librarians and expert researchers has been highlighted in helping rookie researchers, from a process standpoint (Du and Evans, 2011; Spezi, 2016). Some of the proposed technology-oriented interventions include search systems with faceted user interfaces for better display of search results (Atanassova and Bertin, 2014), symbiotic systems (Gamberini et al., 2015), meta-search systems (Sturm, Schneider and Sunyaev, 2015), bibliometric tools for visualising citation networks (Chou and Yang, 2011) and scientific paper recommender systems in both embedded mode (Beel, Langer, Genzmehr and Nürnberger, 2013; Naak, Hage and Aïmeur, 2008) and stand-alone mode (Huang, Wu, Mitra and Giles, 2014; Küçüktunç, Saule, Kaya and Çatalyürek, 2013) for catering to specific literature review search and manuscript writing tasks. There is an apparent lack of a task-based assistive system where key literature review search tasks are linked. The available options are piecemeal approaches catering to different tasks.

In the context of studies conducted to identify issues at each activity level in the lifecycle, both qualitative and quantitative methods have been employed. There has been a lack of contextual insights with a key issue taken as the central frame of reference across the activities. This type of approach is required to bind the observations across the activities. In order to identify a key issue, there is a need to look at the criticality of the consequences of the issues. The inadequacies of research studies are identified at the stage where official reviewers review the manuscripts submitted to journals and conferences. Manuscript reviewers have cited issues such as missing citations, unclear research objectives and improper research framework (Bornmann, Weymuth and Daniel, 2009; McKercher, Law, Weber and Hsu, 2007). From a citation analysis perspective, issues such as formal influences not being cited, biased citing and informal influences not being cited, have been identified as problems with cited papers in manuscripts (MacRoberts and MacRoberts, 1989). An improper literature review often leads to problems in subsequent stages of research since findings are not compared with the correct and sufficient number of prior studies. Thus, inadequate and omitted citations in the related work section of manuscripts are indicators of poor quality in research. The current study aims to investigate this issue of inadequate and omitted citations in manuscripts and the associated factors such as the different instances, reasons and the scenarios where external assistance is required.

We conducted a survey-based study for collecting experiential opinions of manuscript reviewers and authors with specific focus on the aforementioned issue. The perspectives of researchers as information seekers have been adequately explored in the context of information seeking performed during literature review. It is expected that the perspectives of researchers as manuscript reviewers and authors would provide insights from the academic writing context since it is a bottom-up approach. The findings of this study are intended to inform the design of a task-based scientific paper recommendation system which is currently under concurrent development and evaluation. The system is aimed at assisting researchers in their literature review and manuscript writing tasks. The survey covered four topics: instances of citations missed by authors, reasons for inadequate and omitted citations, tasks where authors needed external assistance and usage preferences of information sources such as academic search engines, databases, digital libraries and other academic systems. The inadequate and omitted citation instances directly relate to specific literature review recommendation tasks. For example, authors who tend to miss citing seminal papers in their manuscript would benefit from the corresponding remedial task where seminal papers are specifically recommended. This scenario has been addressed in a few previous studies (Bae, Hwang, Kim and Faloutsos, 2014; Wang, Zhai, Hu and Chen, 2010).

This is the first study to use the dual perspectives of manuscript reviewers and authors as both these roles are important in ascertaining the criticality of the different types of inadequate and omitted citations. The findings from this study will help researchers gain a holistic understanding of the issue of inadequate and omitted citations in research manuscripts. The findings will also provide empirical evidence to justify the need for developing holistic intelligent systems such as recommender systems that cater to the different search tasks performed during literature review and academic writing.

Literature review

Issues faced by researchers during information seeking

The consolidated Ellis information seeking model (Ellis and Haugan, 1997) has been considered as the base model for guiding studies in ascertaining the intricacies and issues in the information seeking sessions of academic researchers. Prior studies provide an overview of issues faced by researchers during information seeking. Barrett (2005) studied the information seeking habits of graduate students in a Humanities school. Findings of this study show that individual sessions of graduates tend to be idiosyncratic with projects started in a haphazard manner. Results indicate students' reliance on supervisors' guidance in finding relevant documents, thereby underlining the importance of providing intelligent systems in addition to online library services such as online public access catalogues. Barrett's study is in line with an earlier study conducted by Fidzani (1998) which highlights graduate students' inability to use library resources in the manner required to perform research. The assistive role required from library resources and librarians in helping researchers is a common observation in many studies (Catalano, 2013; George et al., 2006; Head, 2007; Wu and Chen, 2014). The study conducted by Du and Evans (2011) identified characteristics, strategies and sources related to research-related search tasks of graduate students. The study indicates the difficulties faced by researchers in finding relevant information using multiple explorative search sessions in multiple sources. The nuances in handling the features provided by academic search systems are highlighted with marked differences between novices and experts. This issue is also echoed in other studies (Brand-Gruwel, Wopereis and Vermetten, 2005; Karlsson et al., 2012; Yoo and Mosa, 2015) where the experts' ability in carefully formulating a problem before conducting a search is highlighted as a key difference. Academic experience and searching competency are two factors that affect the confidence of researchers, thereby differentiating expert researchers from novices (Niu and Hemminger, 2012).

Studies related to exploratory search and search tasks have identified the characteristics of the search tasks undertaken by researchers and attributed the complexity of these tasks to characteristics such as uncertainty and prior knowledge of researchers (Liu and Kim, 2013; Wildemuth and Freund, 2012). Directed search and passive browsing are the search techniques most used by researchers during their information seeking sessions (Bates, 2002; Vilar and Žumer, 2011), with the former representing the search for an exact information need while the latter is more serendipitous in nature. Most of these research studies suggest remedial measures such as educating new users on information seeking best practices, involving librarians for assisting users and involving experts to assist novices so that their information seeking, searching skills and competencies improve.

Citing behaviour in academic writing

Scientific research is cumulative: it advances by building on prior studies. Therefore, studying citation behaviour, citation patterns and citation intents has remained important. Erikson and Erlandson (2014) have put forth a taxonomy of motivations based on the citation intent of researchers. The main categories are argumentation (support for claims), social alignment (pushing author's identity), mercantile alignment (uplifting other researchers' works) and data (evidence). Other citation motivation studies (Case and Higgins, 2000; Case and Miller, 2011; Harwood, 2009) have reported along similar lines. Even though citations are made with any of the aforementioned motivations, it should not be taken for granted that authors have read the papers that they cite. Simkin and Roychowdhury (2002) report that the majority of authors do not tend to read the papers that they cite and these are the papers with high citation counts. It is not known whether this behaviour is seen across researchers at different experience levels. A study by Oppenheim and Renn (1975) on highly cited papers identified that the repeated citations of these papers are mainly for historical reasons as an indication of continued contemporary relevance. Some of these papers may be considered seminal in the particular research area; therefore the authors may consider the citation as necessary. The impact of publication venues on citation of papers in manuscripts has been identified as a differentiating factor behind citation and non-citation of papers. MacRoberts and MacRoberts (2010) conducted a study in the context of biogeography papers. It was found that the papers from Thomson Reuters monitored journals had more citation visibility than those from other publication venues. However, this finding needs to be validated across disciplines. Interestingly, authors' intent behind citations of papers and the readers' understanding of the citation context vary considerably (Willett, 2013). Factors such as differences in primary discipline(s), interest areas and research experience can be attributed to the dissimilarity in understanding.

Manuscript rejections

Research manuscripts' acceptance in publications is subject to scrutiny by reviewers who are experts in the particular research areas. Peer review of manuscripts is seen as the mechanism for quality control in scientific research (Braun, 2004). Manuscripts are rejected by reviewers if the expected quality is not met. Studies have been conducted in the past looking at the reasons behind manuscript rejections. The common reasons for rejection are issues with research contributions, methodology, design, irrelevant results, and results with inconclusive evidence (Bornmann, Nast and Daniel, 2008; Bornmann et al., 2009; Byrne, 2000). The rejection category 'Reference to the literature and documentation' (Bornmann et al., 2009) is not regarded as a major reason for rejecting manuscripts. In different studies, this category is ranked at different positions based on the discipline and the journals studied. For instance, it is number 8 in manuscript rejection reasons in Bordage's (2001) study, number 4 in McKercher et al. (2007) and part of number 2 (sub-category) in Murthy and Wiggins (2002). McKercher et al. (2007) have outlined the list of issues in the manuscripts that have a weak literature review section. Poor referencing, lack of key citations, irrelevant and outdated citations are some of the citation-related issues identified. The studies conducted so far have used the review log of publications to arrive at conclusions.

Prior studies have contributed to the understanding of the information seeking behaviour of researchers, as well as the identification of relevant issues and other contextual factors. However, the existing research also has some limitations. While studies have collected data in the context of information seeking, the impact of the seeking/searching sessions on conducting research and manuscript writing has not been explored. There is also a lack of studies that look at the citing behaviour of researchers at different experience levels. The types of citation for which researchers have difficulty finding relevant papers are another unexplored area. Data has not been collected directly from researchers on the topic of manuscript review with emphasis on the different instances of inadequate and omitted citations, and the related reasons.

Aims of research

This study intends to investigate the issue of inadequate and omitted citations in manuscripts and associated factors by addressing the following questions:

Q1: What are the critical instances of inadequate and omitted citations in research manuscripts submitted for review in journals and conferences?

Q2: Do the critical instances and reasons of inadequate and omitted citations in research manuscripts relate with the scenarios/tasks where researchers need external assistance in finding papers?

Motivation: Q1 and Q2 will help in identifying the literature review and manuscript writing tasks that are to be considered for an assistive system.

Q3: Which information sources, including academic databases, search engines and digital libraries, are the most prominent in terms of frequency of usage?

Motivation: Q3 will help in identifying the system(s) which can be considered as a basis for designing the User Interface screens and selecting the display features of the proposed assistive system.

Methods

Online survey instrument

In an earlier study (MacRoberts and MacRoberts, 1988) conducted for identifying author motivations for citing certain papers and omitting others, an interview-based method was used for data collection. Interviews were not considered for our study as the aims of the research were not suited to such a method. Data for this study was collected through questionnaire surveys. The questionnaires were pre-tested in a pilot study. The survey flow and questions were corrected based on participants' feedback on readability and comprehensibility. Since there were two types of participants in the study, the section meant for collecting data from the perspective of a reviewer was hidden for participants who identified themselves as mainly authors with no reviewing experience. Demographic details of the participants were collected at the start of the survey: age group, gender, highest education level, current position, parent school and primary discipline. The data pertaining to the study was collected in four main sections. Details about the different sections from the full questionnaire (provided to the reviewer group) are provided in Table 1. The questions for the segments in Table 1 are available in the Appendix.

| Section Name | Segment | No. of Question-Items |
|---|---|---|
| Demographic details | Demographic details | 9 |
| Reviewers' experience with citation of prior literature in manuscripts during manuscripts review | Instances of inadequate and omitted citations in journal manuscripts | 4 |
| | Instances of inadequate and omitted citations in conference manuscripts | 4 |
| | Factors affecting authors' citing behaviour of prior literature in manuscripts | 5 |
| Authors' experience with citation of prior literature in manuscripts during manuscripts review | Instances of inadequate and omitted citations in journal manuscripts | 4 |
| | Instances of inadequate and omitted citations in conference manuscripts | 4 |
| Researchers' tasks in literature review and manuscript writing process | Tasks where external assistance is required | 5 |
| Usage of academic information sources | Usage of academic databases, search engines, digital libraries and scientific paper recommendation services | 14 |

In the second and third sections, data about the different instances of inadequate and omitted citations in journal and conference manuscripts were gathered from the reviewer and author perspectives respectively. The instances (i) Missed citing seminal papers, (ii) Missed citing topically relevant papers, (iii) Insufficient papers in the literature review and (iv) Irrelevant papers in the literature review were selected from previous studies which were aimed at helping researchers in literature review (Ekstrand et al., 2010; Hurtado Martín, Schockaert, Cornelis and Naessens, 2013; Mcnee, 2006). The frequency of these instances was measured using a 5-point ordinal rating scale with the following values: Never (1), Rarely (2), Sometimes (3), Very Often (4) and Always (5). The second section was hidden for participants who identified themselves as authors with no reviewing experience.

In the fourth section, questions were specifically about tasks pertaining to literature review and manuscript writing. The two segments in this section gathered data about the tasks/scenarios where researchers needed external assistance, along with opinions about the characteristics of certain key tasks. The scenarios were (i) Identifying seminal/important papers that are to be read as a part of the literature review in your research study, (ii) Identifying papers that are topically similar to the papers that you have already read as part of your literature review, (iii) Identifying papers related to your research, from disciplines other than your primary discipline, (iv) Identifying papers for particular placeholders in your manuscript and (v) Identifying papers that must be necessarily cited in your manuscripts. These scenarios were identified from previous studies (Bae et al., 2014; Ekstrand et al., 2010; He, Kifer, Pei, Mitra and Giles, 2011; Hurtado Martín et al., 2013; Mcnee, 2006) where recommender and hybrid information systems were used to provide recommendations to researchers. The response data was measured using a 5-point ordinal rating scale with the following values: Never (1), Rarely (2), Sometimes (3), Very Often (4) and Always (5).

In the fifth section, data about the usage of information sources such as academic databases, search engines and the related papers feature in the aforementioned information sources were collected. The related papers feature retrieves a list of similar papers for a particular paper of interest to the user. The academic information sources considered were Google Scholar, Web of Science, ScienceDirect, Scopus, SpringerLink, IEEE Xplore and PubMed. These sources were selected based on their popularity and applicability to multiple disciplines. The response data from the segments in this section were measured using a 5-point ordinal rating scale with the following values: Never (1), Rarely (2), Sometimes (3), Very Often (4) and Always (5).

Participants

As indicated earlier, two groups of participants were invited to take part in the online surveys pertaining to the objectives of this study. The first group comprised reviewers who had officially reviewed journal or conference papers. The second group comprised manuscript authors who had published at least one paper in a journal or conference. The first group was provided with the full questionnaire, with questions that were to be answered from the perspectives of both reviewer and author. The second group was provided with a different survey Web link that pointed to a questionnaire with only author-related questions. The data for the study were collected from a single location, Nanyang Technological University, Singapore, between November 2014 and January 2015. The authors sought permission from the research heads of fourteen schools in the university to disseminate the survey invitation email to the academic staff, research staff and graduate research students of the schools. For schools that did not respond or declined to disseminate the survey participation email, the required details of the staff and students were obtained from the school Websites and Web directories. Prior to the survey advertisement process and data collection, the required permissions were acquired through the Institutional Review Board of the university. Participants received a cash incentive of 10 Singapore dollars for completing the study.

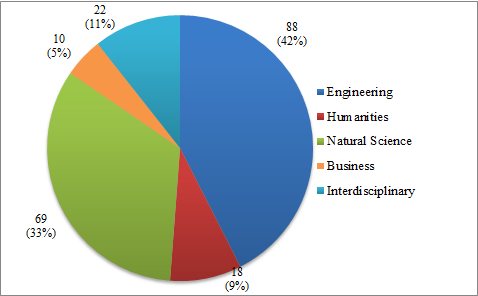

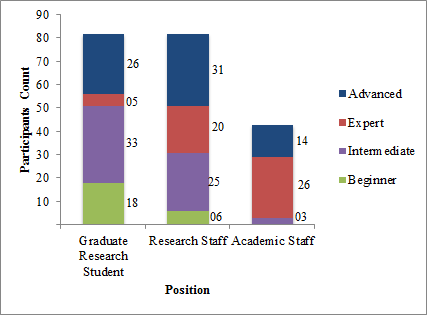

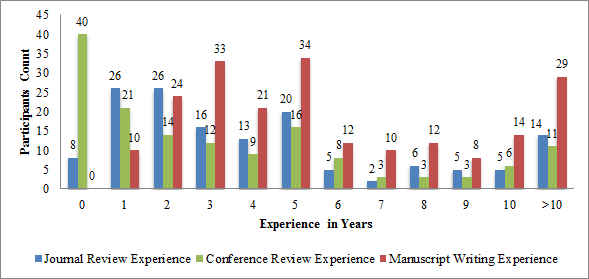

From a population of 1772 potential participants in the university, a total of 207 researchers (response rate of 12%) participated in the surveys, with the majority (n = 146, 71%) answering from both reviewer and author perspectives since they met the qualification criteria. Schools from the Engineering and Natural Sciences disciplines had a bigger presence, with 42% and 33% of the total participants respectively (Figure 1), since the headcount of staff and students in the schools for these disciplines is higher than in the Humanities and Business disciplines. Participants were requested to rate their experience levels. Figure 2 provides a chart with two related demographic variables, experience level and current position. There were equal numbers of graduate research students (n = 82) and research staff (n = 82), while academic staff accounted for 43 participants. Participants were required to provide their journal/conference reviewing and writing experience in years. Figure 3 provides a column chart with experience in number of years for three variables: journal review experience, conference review experience and manuscript writing experience. More than half of the participants had less than five years' experience for all three variables: journal review experience (52.3%), conference review experience (54%) and manuscript writing experience (57.9%).

Figure 1: Participants' data by primary discipline

Figure 2: Participants' data by position and experience level

Figure 3: Experience of participants in journal & conference review and writing manuscripts

Analysis procedures

Responses to the online survey were collected and analysed. Descriptive statistics were used to measure central tendency. A one-sample t-test was used in the analysis to check the presence of statistically significant difference with the mean values. Statistical significance was set at p < 0.05. Statistical analyses were done using SPSS 21.0. During the analysis, the continuous variable writing experience was used to create a new categorical variable writing group for facilitating deeper analysis. The writing group 1 (low experience group) was allotted to observations where the participants had indicated their writing experience as less than three years. The writing group 2 (intermediate experience group) was allotted to observations where the writing experience was between three and ten years, while the writing group 3 (high experience group) was allotted to observations where the experience was above 10 years.
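The grouping rule and the one-sample t-test described above can be sketched in Python as follows. This is a minimal illustration using only the standard library, not the SPSS procedure used in the study. The function names `writing_group` and `one_sample_t` are hypothetical, the sample ratings are invented for illustration, and placing exactly ten years in group 2 is an assumption about how the boundary was handled.

```python
import math
import statistics


def writing_group(years):
    """Map writing experience in years to writing group 1, 2 or 3."""
    if years < 3:
        return 1  # low experience group
    if years <= 10:  # assumption: exactly ten years falls in group 2
        return 2  # intermediate experience group
    return 3  # high experience group


def one_sample_t(ratings, test_value):
    """Return (t statistic, degrees of freedom) for a one-sample t-test."""
    n = len(ratings)
    mean = statistics.mean(ratings)
    sd = statistics.stdev(ratings)  # sample standard deviation (n - 1 divisor)
    t = (mean - test_value) / (sd / math.sqrt(n))
    return t, n - 1


# Invented ratings on the 1-5 scale, for illustration only (not study data).
sample_ratings = [3, 2, 4, 3, 3, 2, 4, 3]
t_stat, df = one_sample_t(sample_ratings, test_value=2)
```

The sketch stops at the t statistic and degrees of freedom; obtaining the p-value additionally requires the t distribution, which a statistics package would supply.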

Results

Instances of inadequate and omitted citations

Table 2 shows the results of the reviewer and author experience responses on the four instances of inadequate and omitted citations for both journal and conference manuscripts. From the reviewer perspective, the mean experience was higher than the test value of 2 (2 represents rare frequency) at statistically significant differences (p < 0.05) for all the four instances for journal and conference manuscripts. From the author perspective, only two instances (Missed citing seminal papers in manuscripts, Missed citing topically relevant papers in manuscripts) had mean experience higher than the test value of 2, specifically for journal manuscripts. However, all the differences in the author perspective were statistically significant.

The instance Missed citing topically relevant papers in manuscripts received the highest mean value for conferences (M = 3.14) from a reviewer perspective, followed by the instance Insufficient papers in the literature review sections of manuscripts for both journals and conferences (M = 3.09, M = 3.09). From the author perspective, results indicate that participants have very infrequently faced these instances in their experience. The only exceptions are the instances Missed citing seminal papers in manuscripts (M = 2.29) and Missed citing topically relevant papers in manuscripts (M = 2.33) in the case of journal manuscripts.

| Manuscript type | Instances of inadequate and omitted citations | Reviewer perspective: M (SD) | Reviewer perspective: t (p<0.05) | Author perspective: M (SD) | Author perspective: t (p<0.05) |
|---|---|---|---|---|---|
| Journals | Missed citing seminal papers | 2.83 (0.770)a | 12.718 | 2.29 (0.888)c | 4.694 |
| | Missed citing topically relevant papers | 3.08 (0.715)a | 17.729 | 2.33 (0.865)c | 5.547 |
| | Insufficient papers in the literature review | 3.09 (0.797)a | 16.027 | 1.88 (0.881)c | -1.972 |
| | Irrelevant papers in the literature review | 2.78 (0.896)a | 10.163 | 1.41 (0.661)c | -12.94 |
| Conferences | Missed citing seminal papers | 2.94 (0.780)b | 12.652 | 1.79 (0.848)c | -3.525 |
| | Missed citing topically relevant papers | 3.14 (0.775)b | 15.317 | 1.79 (0.859)c | -3.479 |
| | Insufficient papers in the literature review | 3.09 (0.866)b | 13.155 | 1.56 (0.741)c | -8.629 |
| | Irrelevant papers in the literature review | 2.72 (0.901)b | 8.395 | 1.32 (0.603)c | -16.247 |

Reasons for inadequate and omitted citations

Table 3 shows the results of reviewers' opinions on the reasons for inadequate and omitted citations. Data indicates that there is agreement in support of these reasons. The mean agreement was higher than the test value of 3 (3 represents neutral) at statistically significant differences (p < 0.05). The reason Lack of research experience in particular research area had the highest average agreement (M = 3.68), followed by Interdisciplinary topic (M = 3.58) and Lack of overall research experience (M = 3.51).

| Reason for inadequate and omitted citations | M (SD) | t (p<0.05) |
|---|---|---|
| Lack of research experience in particular research area | 3.68 (0.813) | 10.076 |
| Interdisciplinary topic | 3.58 (0.750) | 9.382 |
| Lack of overall research experience | 3.51 (0.889) | 6.982 |

Need for external assistance in finding papers

Table 4 shows the opinions of the participants on the need for external assistance, for different types of papers, required during literature search sessions in the literature review and manuscript writing lifecycle. The mean value was higher than the test value of 2 (2 represents rare frequency) at statistically significant differences (p < 0.05) for the five scenarios. Interdisciplinary papers are the paper-type where most external assistance is required during literature search (M = 2.72). The need for assistance in searching for two other paper-types, Topically-similar papers (M = 2.6) and Seminal papers (M = 2.53), is also apparent.

| Scenarios | M (SD) | t (p<0.05) |
|---|---|---|
| Seminal papers | 2.53 (0.984) | 7.696 |
| Topically-similar papers | 2.6 (1.009) | 8.542 |
| Interdisciplinary papers | 2.72 (0.89) | 11.71 |
| Citations for placeholders | 2.53 (0.954) | 7.939 |
| Necessary citations for inclusion in manuscripts | 2.48 (1.088) | 6.327 |
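As a rough consistency check on the values in Table 4, the one-sample t statistic can be recomputed from the reported summary statistics. The worked example below assumes that all 207 participants answered this section (the table does not report n) and uses the rounded mean and standard deviation for Interdisciplinary papers, so it can only approximate the reported value of 11.71:

$$
t = \frac{M - \mu_0}{SD / \sqrt{n}} = \frac{2.72 - 2}{0.89 / \sqrt{207}} \approx \frac{0.72}{0.0619} \approx 11.6
$$

Here $\mu_0 = 2$ is the test value (Rarely). The small gap from the reported statistic is within what rounding M and SD to two decimals would produce.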

Usage of academic information sources

Table 5 provides the results of one-sample t-tests carried out with the variables information sources and related papers feature. Test values of 3 (Sometimes) and 2 (Rarely) were used for the two t-tests respectively. Google Scholar was the only system with a mean value above the test value (M = 3.81) followed by ScienceDirect (M = 2.86) (not statistically significant at p < 0.05) and Web of Science (M = 2.79). PubMed (M = 1.9) was the least used of all the information sources. For the variable related papers feature, all the information sources had mean values above the test value and the differences were statistically significant at p < 0.05. The highest usage was recorded for ScienceDirect's Recommended Articles (M = 3.11) and Google Scholar's Related Articles (M = 2.95) while the lowest usage was for SpringerLink's Related Content (M = 2.4).

| Information Source | Source usage: M (SD) | Source usage: t (p<0.05) | Related Papers Feature | n | Feature usage: M (SD) | Feature usage: t (p<0.05) |
|---|---|---|---|---|---|---|
| Google Scholar | 3.81 (1.137) | 10.209 | Related Articles | 192 | 2.95 (1.152) | 11.402 |
| Web of Science | 2.79 (1.374) | -2.227 | View Related Records | 152 | 2.6 (1.175) | 6.281 |
| Scopus | 2.19 (1.373) | -8.455 | Related Documents | 108 | 2.83 (1.132) | 7.654 |
| IEEE Xplore | 2.08 (1.352) | -9.816 | Similar | 95 | 2.26 (1.132) | 2.266 |
| ScienceDirect | 2.86 (1.374) | -1.467 | Recommended Articles | 151 | 3.11 (1.105) | 12.375 |
| SpringerLink | 2.44 (1.241) | -6.442 | Related Content | 139 | 2.4 (1.054) | 4.505 |
| PubMed | 1.9 (1.25) | -12.618 | Related Citations | 88 | 2.6 (1.255) | 4.501 |

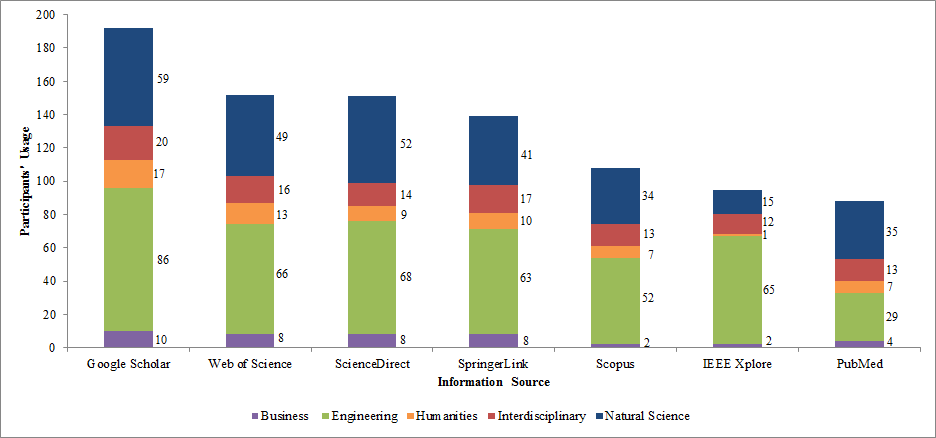

Figure 4 provides the usage response data of information sources by discipline. Respondents who selected any choice other than 1 (Never) on the five-point ordinal scale were considered for this analysis. Findings indicated that the proportion of usage was similar for sources that indexed research papers from multiple disciplines. Business researchers' use of Scopus (n = 2), IEEE Xplore (n = 2) and PubMed (n = 4) was minimal, while Humanities researchers' use of IEEE Xplore was almost non-existent (n = 1). The highest usage of the sources was from the Engineering respondents, with the exception of PubMed, where Natural Science researchers' use was the highest (n = 35) in comparison to Engineering (n = 29).

Figure 4: Usage of information sources by discipline
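The counting rule behind Figure 4 (a respondent counts as a user of a source if the rating is anything other than 1, Never) can be sketched as follows. This is a hedged illustration only: the function name `usage_counts`, the record layout and the sample responses are all hypothetical and are not taken from the study's data.

```python
from collections import Counter


def usage_counts(responses, source):
    """Count users of `source` per discipline, excluding 'Never' (1) ratings."""
    counts = Counter()
    for response in responses:
        rating = response["ratings"].get(source)
        # Skip missing answers and respondents who never use the source.
        if rating is not None and rating != 1:
            counts[response["discipline"]] += 1
    return counts


# Invented responses for illustration only; not data from the study.
responses = [
    {"discipline": "Engineering", "ratings": {"PubMed": 1, "Scopus": 3}},
    {"discipline": "Natural Sciences", "ratings": {"PubMed": 4, "Scopus": 2}},
    {"discipline": "Natural Sciences", "ratings": {"PubMed": 2, "Scopus": 1}},
    {"discipline": "Business", "ratings": {"PubMed": 1, "Scopus": 1}},
]
pubmed_users = usage_counts(responses, "PubMed")
scopus_users = usage_counts(responses, "Scopus")
```

A `Counter` is used so that disciplines with no users of a source simply read as zero rather than raising an error.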

Discussion

With the exception of the two inadequate citation instances seminal and topically-similar papers for journal manuscripts, where the perceptions from the two groups were similar, the difference between the reviewers' and authors' experience is an interesting case. Reviewers have indicated more than a periodic occurrence of the different instances of inadequate and omitted citations, in contrast to the authors' experience. In order to identify reasons behind the low frequency among authors, the data was analysed at the writing group level. The mean values from Table 6 indicate that authors from the intermediate (group 2) and high experience (group 3) writing groups have experienced these instances more than the authors with low experience (group 1), for the first three key instances. This behaviour is assumed to be due to the relatively low number of papers written by new researchers (doctoral students), most of which tend to be co-written with experts (senior researchers or supervisors) (Heath, 2002). Therefore, authors are likely to face these issues as they write more papers on different topics. The presence of the different instances of inadequate and omitted citations is stronger for journal papers than conference papers. This observation makes sense as conferences are used by researchers mainly for reporting interim results of their research work, in order to acquire suggestive feedback from the research community (Derntl, 2014). In summary for inadequate and omitted citation instances, seminal and topically-similar papers are often missed by authors in their manuscripts, thereby addressing Q1.

| Venue | Instances of inadequate and omitted citations | Writing group 1 (n = 67), M (SD) | Writing group 2 (n = 97), M (SD) | Writing group 3 (n = 43), M (SD) |
|---|---|---|---|---|
| Journals | Missed citing seminal papers | 1.93 (0.893) | 2.47 (0.879) | 2.44 (0.734) |
| Journals | Missed citing topically relevant papers | 1.96 (0.878) | 2.58 (0.814) | 2.37 (0.757) |
| Journals | Insufficient papers in the literature review | 1.67 (0.86) | 1.95 (0.906) | 2.05 (0.815) |
| Journals | Irrelevant papers in the literature review | 1.43 (0.722) | 1.38 (0.653) | 1.42 (0.587) |
| Conferences | Missed citing seminal papers | 1.72 (0.831) | 1.81 (0.894) | 1.86 (0.774) |
| Conferences | Missed citing topically relevant papers | 1.7 (0.817) | 1.87 (0.942) | 1.77 (0.718) |
| Conferences | Insufficient papers in the literature review | 1.57 (0.763) | 1.53 (0.751) | 1.6 (0.695) |
| Conferences | Irrelevant papers in the literature review | 1.36 (0.644) | 1.28 (0.608) | 1.35 (0.529) |

When the reasons for inadequate and omitted citations were identified from the literature on graduate information seeking, it was expected that the participants would agree with them as valid reasons. The findings confirmed these expectations, as reviewers considered all three reasons (interdisciplinary topic, lack of overall research experience and lack of research experience in the particular research area) to be valid. Interdisciplinary research presents a unique kind of challenge to researchers in terms of the information source, as there are different academic databases for certain disciplines (George et al., 2006). Even though popular academic search engines such as Google Scholar have a vast coverage of papers from most disciplines, researchers might miss certain papers which are either not indexed or do not appear in the top search results (Giustini and Boulos, 2013). General research experience is one of the key factors that differentiate researchers during information seeking (Karlsson et al., 2012). However, participants indicated that the lack of research experience in the particular research area is a more prominent reason than the lack of overall research experience, as there are differences in conducting research across different disciplines.

Researchers have been in situations where they periodically require assistance in finding papers for all the specified tasks or scenarios. For two specific search scenarios, finding papers from other disciplines and finding topically-similar papers, researchers needed more assistance. These search tasks are complex. Interdisciplinary research is a challenging area as it integrates methods from different disciplines (Wagner et al., 2011). Therefore, researchers would often require assistance in finding papers from other disciplines. The task of finding similar papers is an interesting one as it deals with the aspects of scale and technique. The scale aspect of this task is the quantity of papers that go into the seed set for which similar papers are found. There are various scenarios for this aspect. For example, a researcher might want to find similar papers for one seed paper, while in another scenario the researcher might want to find similar papers for a set of papers (McNee, 2006). The technique aspect is about the method and data used in finding similar papers. Some of the commonly used techniques include (i) citation chaining (forward and backward chaining) based on a set of references (Levy and Ellis, 2006), (ii) textual-similarity techniques based on text extracted from a research paper and (iii) metadata-based techniques where metadata from a paper is used to find similar papers. Researchers have indicated that keeping up-to-date with the latest research studies and exploring tangentially similar yet unfamiliar areas are complex tasks (Athukorala, Hoggan, Lehtio, Ruotsalo and Jacucci, 2013). The task is therefore complex enough that researchers require additional assistance to complete it. Interestingly, the observations for the task of finding similar papers directly relate to the observations for the corresponding inadequate citation instance. Hence, this task is highly critical for researchers. Secondly, the observations for the task of finding interdisciplinary papers directly relate to the corresponding second most important reason for inadequate and omitted citations. Therefore, for Q2, the findings from the three related sections relate to each other at a moderate level.
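The scale and technique aspects described above can be made concrete with a minimal sketch. It takes a seed set of one or more papers and applies two of the listed techniques: citation chaining (backward and forward) and a simple textual similarity. The toy corpus, the paper identifiers, the function names and the choice of Jaccard overlap on title words are all assumptions made for illustration; the study does not prescribe any particular implementation.

```python
# Hypothetical toy corpus: paper id -> (title text, list of cited paper ids).
papers = {
    "A": ("citation recommendation for manuscripts", ["C", "D"]),
    "B": ("topic models for citation recommendation", ["C"]),
    "C": ("information seeking behaviour of researchers", []),
    "D": ("collaborative filtering recommender systems", []),
    "E": ("protein folding simulation methods", ["D"]),
}


def backward_chain(seeds):
    """Papers cited by any seed paper (backward chaining)."""
    return {ref for s in seeds for ref in papers[s][1]} - set(seeds)


def forward_chain(seeds):
    """Papers that cite any seed paper (forward chaining)."""
    seeds = set(seeds)
    return {pid for pid, (_, refs) in papers.items() if seeds & set(refs)} - seeds


def jaccard(a, b):
    """Jaccard similarity of two token sets."""
    return len(a & b) / len(a | b) if a | b else 0.0


def text_similar(seeds, top_k=2):
    """Rank non-seed papers by their best title-word overlap with any seed."""
    tokens = {pid: set(title.split()) for pid, (title, _) in papers.items()}
    scores = {
        pid: max(jaccard(tokens[pid], tokens[s]) for s in seeds)
        for pid in papers
        if pid not in seeds
    }
    # Keep only papers that share at least one word with a seed.
    ranked = sorted((p for p in scores if scores[p] > 0), key=lambda p: (-scores[p], p))
    return ranked[:top_k]


print(backward_chain(["A"]))
print(forward_chain(["D"]))
print(text_similar(["A"]))
```

The same functions accept a single seed paper or a larger seed set, which mirrors the two scale scenarios mentioned in the text. A metadata-based variant would follow the same pattern with fields such as authors or venue in place of title words.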

The popularity of Google Scholar among researchers across different disciplines is reinforced through the findings of this study. The finding is an indication of the constant growth in popularity of Google Scholar through the years (Cothran, 2011; Herrera, 2011). For Q3, the databases ScienceDirect and Web of Science are the other popular choices since they are two of the oldest available online academic information sources, covering multiple disciplines. Most of the databases that were included in the questionnaire are multidisciplinary, with the exception of IEEE Xplore and PubMed. Therefore, these two systems were the least popular sources. The use of all these sources is not mutually exclusive. The participants' indication of frequently using the related papers feature in the systems suggests its usability and the researchers' dependence on system-level intelligence for the task of finding similar papers. The usage of this feature is highest in the case of ScienceDirect and Google Scholar. In the case of ScienceDirect, the recommended articles are displayed to the right of the currently viewed article. This placement could attract more clicks from users as they get to see topically similar articles placed side-by-side. The usage statistics of these features indicate the reliance of researchers on the system's capability to find similar papers based on multiple factors such as topical similarity, collaborative viewership, shared citations and metadata. Google Scholar's simple and minimalist design means that users need to scroll less and spend less effort searching for options. The interface can be termed intuitive and sufficient for finding relevant information.

Implications for assistive systems

The medium of external assistance to researchers can range from online and offline training, to on-site assistance by experts, and also through deployment of assistive intelligent systems. Process-based interventions include strengthening the role of librarians, which is constrained by many factors such as the required knowledge levels and cultural issues (Ishimura and Bartlett, 2014). On the other hand, academic search systems could be enhanced with task-based features where search results are tailored to the specific search tasks of the researchers. Based on the findings, it is evident that researchers perceive the task of finding similar papers as a manually complex task. Secondly, seminal papers are also missed by manuscript authors, thereby underlining the need for a corresponding recommendation task. These two tasks are intended to address literature review related search activities. Interdisciplinary papers pose a challenge to researchers as they require additional knowledge of terminologies from multiple disciplines. The retrieval/recommendation technique for the two shortlisted tasks should consider interdisciplinary papers as indicated by the explicit preference of the participants in the current study.

The selection of the third task for the assistive system is constrained by the decision to have a task meant to help researchers during manuscript preparation. In this area, citation context recommender system studies (He et al., 2011; He, Pei, Kifer, Mitra and Giles, 2010) have concentrated on approaches to recommend papers for particular placeholders in the manuscript. Some of the participants in the current study have indicated the issue of insufficient citations in their manuscripts. Therefore, assistive systems could help researchers in identifying unique and important papers in their final reading list (a list comprising all papers read or collected during the literature review), just before they start writing their manuscripts. This novel task is taken as the third task of the assistive system. The three selected tasks represent the usual flow of activity in the scientific publication lifecycle. The tasks are logically inter-connected as the papers from the first task become the input to the second and third tasks. Therefore, a paper-collection feature such as a seed basket of papers can be introduced to help researchers manage the papers between the tasks.
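One way to picture the seed basket is as a small, duplicate-free collection that carries papers from one task to the next. The sketch below is only an illustration under stated assumptions: the class name `SeedBasket`, its methods, and the two placeholder recommenders that return fixed, made-up identifiers are all hypothetical and do not describe an implemented system.

```python
from dataclasses import dataclass, field


@dataclass
class SeedBasket:
    """Ordered, duplicate-free collection of paper ids shared between tasks."""

    paper_ids: list = field(default_factory=list)

    def add(self, paper_id):
        # Skip papers that are already in the basket.
        if paper_id not in self.paper_ids:
            self.paper_ids.append(paper_id)

    def remove(self, paper_id):
        # Removing an absent paper is a no-op.
        if paper_id in self.paper_ids:
            self.paper_ids.remove(paper_id)


def recommend_seminal(topic):
    """Placeholder for task 1; returns fixed, made-up ids."""
    return ["P1", "P2", "P3"]


def recommend_similar(basket):
    """Placeholder for task 2; returns made-up ids and may repeat basket papers."""
    return ["P2", "P4"]


def final_reading_list(basket, extra_ids):
    """Input to task 3: basket papers plus later additions, without duplicates."""
    merged = SeedBasket(list(basket.paper_ids))
    for paper_id in extra_ids:
        merged.add(paper_id)
    return merged.paper_ids


basket = SeedBasket()
for pid in recommend_seminal("citation recommendation"):
    basket.add(pid)
basket.remove("P3")  # the researcher discards one suggestion

reading_list = final_reading_list(basket, recommend_similar(basket))
print(reading_list)
```

Keeping the basket as an explicit object makes the dependency between tasks visible: whatever the researcher retains after the first task is exactly what the second and third tasks receive.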

The universal popularity of Google.com as a top general-purpose search engine seems to have been repeated with Google Scholar, since most participants, irrespective of discipline, use it frequently. This observation is consistent with other studies as well (Spezi, 2016; Wu and Chen, 2014). However, Google Scholar is classified as a search engine that indexes papers from different academic databases and also from non-academic websites, while systems such as Scopus and Web of Knowledge are fully-fledged databases with traditional features for search and filtering. In comparison, Google Scholar provides a basic set of advanced search options along with two sorting options. Google Scholar combines a simple user-interface with an effective retrieval algorithm to provide fast and relevant results. If the recommendation results in the proposed system are provided in a user-interface similar to that of Google Scholar, users should be able to adjust quickly to the new system. Alternatively, if a new user-interface is provided to the users, separate tests would have to be conducted to ascertain the cognitive load and user convenience levels. Therefore, using a user-interface similar to Google Scholar is a recommended approach for the design of assistive systems in this domain. However, the display features of the system need to be tailored to the nature of the task (Diriye, Blandford, Tombros and Vakkari, 2013). For instance, a recommendation task for finding similar papers should have a screen where the relations between the recommended papers and the seed papers are displayed.

Limitations

There are two limitations in this study. First, the data have been collected from researchers of a single university; therefore the findings may require validation across other locations. Secondly, certain fundamental differences were perceived between disciplines in the context of research dissemination. For instance, some participants from Natural Science schools indicated that they submit manuscripts mainly to journals while their submissions to conferences were limited. This leads to a scenario where a participant has higher experience in both authoring and reviewing journal papers than conference papers. At the other end, researchers in Engineering schools submit regularly to both journals and conferences with corresponding review experience levels. Therefore, the survey responses could have been affected by such differences.

Conclusion

The activities of literature review, execution of research and manuscript writing are the key activities for researchers (Hart, 1998). Even though there are well-defined guidelines and heuristics given to researchers, the complexity of these activities presents a challenge to researchers, particularly those with low experience. The presence of issues with research becomes apparent during the review of research manuscripts. The majority of manuscript rejections are due to deficiencies in research contributions and methodology (Bornmann et al., 2008, 2009; Byrne, 2000). It is hypothesised that these deficiencies are in turn caused by an inadequate literature review. Earlier studies have looked at the information seeking patterns of researchers across different disciplines and experience levels, to understand the problems faced during literature search sessions. The perspectives explored so far are those of researchers searching for specific information needs. There is a necessity to learn about the experience and opinions of researchers from the perspectives of manuscript reviewers and authors, with the central issue being inadequate and omitted citations in manuscripts.

This paper reports the findings of a survey-based study conducted in a university with participants invited from incumbent schools. A total of 207 researchers with official manuscript review experience and manuscript authoring experience took part in the online survey. Topics in the survey addressed the issue of inadequate and omitted citations in manuscripts. Seminal and topically-similar papers were found to be the most critical instances of citations often missed by authors. Lack of experience in the particular research area was found to be the major reason for missing citations. Participants specifically indicated that they needed external assistance most while finding interdisciplinary papers, seminal papers and topically-similar papers. Based on the findings of the study, two literature review search tasks, finding seminal papers and finding topically-similar papers, have been identified as the first two tasks of an assistive system. This assistive system is meant to aid researchers in literature search and manuscript writing related tasks. A third task, meant to help researchers in identifying unique and important papers from their final reading list, has also been selected for the assistive system. Since Google Scholar was observed to be the predominantly used information source for searching papers, the user-interface aspects of the search engine have been taken as the basis for designing the user-interface of the assistive system. The resemblance in the interface should help researchers adjust to the new system quickly. The findings from the study will help researchers gain a rich understanding of the issue of inadequate and omitted citations in manuscripts, since data have been collected from the dual perspectives of manuscript reviewers and authors.

About the authors

Aravind Sesagiri Raamkumar is a PhD Candidate in the Wee Kim Wee School of Communication and Information, Nanyang Technological University, Singapore. He received his MSc in Knowledge Management from Nanyang Technological University. His research interests include recommender systems, information retrieval, scholarly communication, social media and linked data. He can be contacted at aravind002@ntu.edu.sg.

Schubert Foo is Professor of Information Science at the Wee Kim Wee School of Communication and Information, Nanyang Technological University (NTU), Singapore. He received his B.Sc. (Hons), M.B.A. and Ph.D. from the University of Strathclyde, UK. He has published more than 300 publications in his research areas of multimedia and Internet technologies, multilingual information retrieval, digital libraries, information and knowledge management, information literacy and social media innovations. He can be contacted at sfoo@ntu.edu.sg.

Natalie Pang is an Assistant Professor in the Wee Kim Wee School of Communication and Information, Nanyang Technological University. Her research interest is in the area of social informatics, focusing on basic and applied research of social media, information behaviour in crises, and structurational models of technology use in marginalised communities. She can be contacted at nlspang@ntu.edu.sg.