Citing the innovative work of the original inventors. An analysis of citations to prior clinical trials

Tove Faber Frandsen

Introduction. The massive growth of the scientific literature can result in an abundance of relevant studies when an author wants to substantiate a claim. References and citations are fundamental bibliometric artefacts, yet little guidance is offered to authors on how to choose between equally relevant references. Only rules of thumb exist, and this paper provides an overview of the scarce publications in the area. One well-known rule of thumb is to cite seminal work.

Method. This study analyses the extent to which seminal papers are cited more or less often than more recent studies when authors cite previous, similar studies. Cohorts of studies addressing the same research question form the data set of the analysis. The data are analysed statistically and visualised in charts.

Results. The results show that some research questions are addressed by as many as 50 or 100 studies. The paper also shows that the more citable studies there are, the smaller the ratio of cited to non-cited studies.

Conclusion. There does not seem to be a general tendency for either older or more recent studies to be cited more. The more citable studies there are, the more evenly the citations seem to be distributed across them. The implications for bibliometrics are discussed.

Introduction

The massive growth of the scientific literature is a well-known fact, although the exact growth rate has been debated (see e.g. Bornmann and Mutz, 2015; Larivière, Archambault and Gingras, 2008; Tabah, 1999; van Raan, 2000). While the growth of the literature may have been exponential during the last decade or two, it seems to be steady. Consequently, we would expect authors in some fields to have to choose between many relevant references when substantiating a claim. Even though references and citations are fundamental bibliometric artefacts, the literature offers authors little guidance on how to prioritize among relevant references. Cox (2011) argues that “[o]nce a [research] question is posed, the next logical step involves a thorough review of the existing literature to seek an answer”, and he concludes that “[t]his includes an exhaustive literature search, which would likely benefit from the methodological transparency required of a systematic review”. This point of view is supported by e.g. Campbell and Walters (2014), Clarke, Hopewell and Chalmers (2007, 2010), Clarke (2004), Clark, Davies and Mansmann (2014), and Sutton, Cooper and Jones (2009). A systematic search may be followed by a prioritization of references. Szava-Kovats (2008) provides numerous examples of authors acknowledging the need to select references and argues that citing all relevant work would be impossible.

One well-known rule of thumb is to credit the seminal work. Chakraborti (2007) states that not citing “the innovative work of the original inventors […] amounts to violation of ethical guidelines”, and Colquitt (2013) stresses that

[p]assages that define constructs or that make reference to theories should credit the seminal works that kicked off that stream […]. Relative to pieces that introduce constructs or theories, articles that reveal empirical findings will have a more limited “shelf life.” Those articles should also be given proper credit, however, at least until subsequent studies qualify, reframe, or debunk their findings (Colquitt, 2013).

Referencing the original work thus seems crucial, although there are other concerns as well. As Campion (1997, p. 166) puts it: “All other things equal, preference should be given to articles that are:”

(a) Seminal (original) in an area of research.

(b) More methodologically or conceptually rigorous.

(c) More recent.

Among other recommendations, Johnson and Green (2009) warn against the use of outdated references. Green and Johnson (2006: 79) even state that “Authors should use the most recent references possible, unless the history of scholarship in a topic area is being discussed.” Harzing (2002) takes a narrower view: she argues that out-of-date references should not be cited, but stresses that this concerns references used to substantiate a claim that is only valid at the present time. Finally, Colquitt (2013) notes that authors often refer to meta-analyses instead of the original studies. He recommends that authors supplement references to seminal work with a reference to a recent review.

The present study uses empirical citation data to analyse the extent to which older studies are cited more or less often than recent studies. The following section presents related literature and is followed by sections containing the methods, results and discussion/conclusion, respectively.

Related research

The data set used in this study is based on systematic reviews from health care research. To put the analyses into perspective, this section provides an overview of bibliometric analyses of citation patterns in health care research.

According to Borgerson (2014), research is often redundant, secretive, and isolated. Obviously, this is a waste of resources, but in the context of medical research it is perhaps more important that participants in clinical trials are at risk of being exposed to harm. Resnik (2003: 242) thus states: “Since medical research often results in a variety of benefits and harms to research subjects, completely harmless research is rare”. Hence, patients enrolled in redundant trials may be denied effective treatment or may even be offered harmful treatment. Consequently, researchers who plan and carry out clinical trials should have an exhaustive overview of the existing research before enrolling patients in a new trial.

However, the methodological transparency of a systematic review is rarely present in the reports of randomized controlled trials (RCTs). Robinson and Goodman (2011) and Sawin and Robinson (2015) find that only a quarter of prior research is cited in reports of RCTs that had three or more relevant RCTs to reference. They also find that the median number of trials cited did not vary as the number of prior trials increased, i.e. as the number of citable studies increases, the number of cited studies remains remarkably stable. Sheth et al. (2011) find a citation rate of just 48%, while Fergusson et al. (2005) report that the citation rate of previous RCTs is extremely low, with a median of 20% of prior trials cited.

Furthermore, there does not seem to be a rise over time in the proportion of earlier studies being cited (Sawin and Robinson, 2015). A number of reasons have been offered to explain the lack of references to earlier relevant studies. Robinson and Goodman (2011) suggest that trials rarely replicate a previous study exactly, and therefore authors may not regard the same set of studies as comparable to their own as a subsequent meta-analysis would. Journal space limitations have also been suggested to influence the number of cited prior studies. It may also be that systematic reviews are cited instead of original trials, although this hypothesis is not supported by the findings of Clarke et al. (Clarke, Alderson and Chalmers, 2002; Clarke and Chalmers, 1998; Clarke, Hopewell and Chalmers, 2007; Clarke, Hopewell and Chalmers, 2010).

Bias has been detected in the process of citing previous trials. One finding in this respect is that the previous trials cited in a given RCT are not necessarily those representing the largest number of trial participants (Fergusson et al., 2005; Sawin and Robinson, 2015). Also, Sawin and Robinson (2015) find that a study is much more likely to cite a particular earlier trial if that trial reported results consistent with those of the citing trial (approximately 45 per cent more likely). Another bias is that studies with positive results published in higher-impact journals are more likely to be cited in subsequent studies (Sheth et al., 2011). Finally, the results of Campbell (1990) suggest the existence of a national bias, as authors from the US and the UK tend to cite publications by authors from their own country more. These authors also cited material produced outside the US and the UK far less than the amount of material produced by other countries would otherwise indicate.

Robinson and Goodman (2011) find that older articles are cited less than newer articles, suggesting that evidence from older trials tends to be neglected. These findings are, however, challenged by those of Fergusson et al. (2005), as one of their figures indicates that citations to earlier trials are more frequent than citations to later trials. Another study, by Sheth et al. (2011), finds no clear tendency with respect to the age of the cited material. The findings of Martín-Martín et al. (2015) and Verstak et al. (2014) may cast light on the possible neglect of older studies, as they find a growing impact of older articles. Consequently, we may see a change in the citation of older studies in the coming years.

To sum up, the existing literature stresses the importance of avoiding redundant medical research, as it may expose patients to a degree of harm. Typically, a clinical trial report does not include a literature review conducted with the methodological transparency of a systematic review, and relatively few prior trials are cited. Moreover, the selection of the few prior studies that are cited is often biased. Among the various biases mentioned in the literature is the age of the cited studies: while some studies find that older studies are neglected, others suggest the opposite. Using a novel data set and methodology, the present study tests whether authors tend to cite seminal studies more or less often than more recent ones.

Methods

An analysis of the process of prioritizing between several relevant studies is not straightforward. It requires a body of research addressing the same research question in order to determine which studies could have been cited but were not. Typically, in-depth knowledge of the literature within a field is needed to provide an overview of the citable publications. Even with in-depth knowledge, the task is colossal, and for larger samples it is impossible. This study makes use of the fact that systematic reviews provide a cohort of studies that address the same research question. Systematic reviews are distinguished from other literature reviews by “the use of pre-specification, of what exactly was the question to be answered, how evidence was searched for and assessed, and how it was synthesized in order to reach the conclusion” (Moher, Stewart and Shekelle, 2015). Several forms of systematic reviews exist (e.g. meta-analyses, rapid reviews, scoping reviews), and these types can be considered different species within a family (Moher, Stewart and Shekelle, 2015).

In the present paper, the data are based on Cochrane reviews, whose strength is the rigor that lies beneath each systematic review or meta-analysis (Sackett, 1994). Cochrane reviews are “systematic summaries of evidence of the effects of healthcare interventions. They are intended to help people make practical decisions.” (Green and Higgins, 2005). The cohort of studies included in a given Cochrane review forms the basis of the analysis, because these studies all meet the eligibility criteria of that specific review. The eligibility criteria (inclusion and exclusion criteria) are pre-specified in the protocol, and systematic reviews analysing the same research question may reach different results depending on their eligibility criteria (Nelson, 2014).

All the included studies in each Cochrane review are numbered and ranked according to publication year. More specifically, the included study with the earliest publication year is ranked first, and the rest follow in order of publication year. If two included studies were published in the same year, they are assigned the same rank and the subsequent rank is skipped. An example: 15 studies are included in a Cochrane review, and we start by ranking the 15 studies according to age (assuming no two of them share a publication year). To analyse citation patterns, we then use the fact that each study can only cite studies that are older than itself: study number 15 can only cite the 14 studies with lower rank, study number 13 can only cite the 12 studies with lower rank, etc. We then determine exactly which of the citable studies were in fact cited. To do so, all the included studies are matched to references in Web of Science, enabling us to see which of the earlier studies dealing with the exact same research question are cited.
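The ranking rule described above (studies from the same year share a rank, and the next rank is skipped) is what is often called standard competition ranking. The Python sketch below illustrates it together with the citable set of a study. It is a minimal illustration under stated assumptions, not the code used in this study: `competition_rank` and `citable_studies` are hypothetical names, and the `include_same_year` flag is an added assumption that switches between counting only strictly older studies and also counting same-year studies, which the data set treats as citable.

```python
from typing import List


def competition_rank(years: List[int]) -> List[int]:
    """Rank studies by publication year (earliest = 1).

    Studies from the same year share a rank and the following rank is skipped,
    e.g. years [2001, 1998, 2001, 2005] get ranks [2, 1, 2, 4].
    """
    first_position = {}
    for position, year in enumerate(sorted(years), start=1):
        # Keep only the first position at which each year appears.
        first_position.setdefault(year, position)
    return [first_position[year] for year in years]


def citable_studies(years: List[int], i: int, include_same_year: bool = True) -> List[int]:
    """Indices of the studies that study i could cite within the same review.

    Hypothetical flag: with include_same_year=True, other studies from the same
    publication year count as citable; with False, only strictly older ones do.
    """
    return [
        j
        for j, year in enumerate(years)
        if j != i and (year <= years[i] if include_same_year else year < years[i])
    ]


if __name__ == "__main__":
    # Hypothetical review: 15 studies from 15 different years (1990-2004).
    years = list(range(1990, 2005))
    ranks = competition_rank(years)
    print(ranks[14], len(citable_studies(years, 14)))  # rank 15 can cite 14 studies
    print(ranks[12], len(citable_studies(years, 12)))  # rank 13 can cite 12 studies
```

With 15 studies from 15 different years, the sketch reproduces the example in the text: the study ranked 15 has 14 citable studies and the study ranked 13 has 12.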

The following section presents the results of applying the method described above to reviews from all 53 Cochrane Review Groups. Cochrane Review Groups support Cochrane’s primary organizational function: the preparation and maintenance of systematic reviews. The 53 groups are based in research institutions worldwide, each focused on a specific topic of health research (http://www.cochrane.org/about-us/cochrane-groups). We retrieved 4,805 Cochrane reviews containing included studies from 1970 onwards. Reviews including studies published before 1970 were excluded, as such studies are less likely to be indexed in Web of Science. We were able to match the included studies to 60,495 references in Web of Science, resulting in more than 1.5 million instances of a given study citing or not citing a preceding study. A study is defined as citable by another study if it was published in the same year or earlier. Depending on the month of publication, some studies are more likely than others to be able to cite a previous study from the same year. Consequently, we may detect a drop in the share of citations to the youngest citable studies.
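The step from matched references to instances of a study citing or not citing a preceding study can be sketched as follows. This is a hypothetical illustration, not the study's pipeline: it assumes each review is stored as a dictionary mapping a study identifier to its publication year and the set of identifiers of the review's other studies found in its Web of Science reference list. `Incidence` and `build_incidences` are illustrative names, and the same-year rule follows the definition of a citable study given above.

```python
from typing import Dict, List, NamedTuple, Set, Tuple


class Incidence(NamedTuple):
    citing: str     # study whose reference list is inspected
    candidate: str  # another study in the same review that it could have cited
    cited: bool     # True if the candidate appears in the citing study's references


def build_incidences(review: Dict[str, Tuple[int, Set[str]]]) -> List[Incidence]:
    """Return one Incidence per (citing study, citable study) pair in a review.

    Assumed layout: `review` maps a study id to (publication year, ids of review
    studies it cites). A candidate is citable if it is another study published
    in the same year or earlier.
    """
    records: List[Incidence] = []
    for citing, (year, cited_ids) in review.items():
        for candidate, (candidate_year, _) in review.items():
            if candidate != citing and candidate_year <= year:
                records.append(Incidence(citing, candidate, candidate in cited_ids))
    return records


if __name__ == "__main__":
    # Hypothetical review with four included studies.
    review = {
        "A": (1995, set()),
        "B": (1998, {"A"}),
        "C": (2001, {"B"}),
        "D": (2001, {"A", "C"}),
    }
    records = build_incidences(review)
    print(len(records), sum(r.cited for r in records))  # 7 instances, 4 of them citations
```

Note that the two studies from 2001 are citable by each other, which is how same-year citations enter the counts.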

Results

Table 1 provides an overview of the number of citable studies in the data set. The table should be read as follows: the first entry shows that a total of 4,644 studies in the analysis had only one previous study to cite when they were published. Similarly, the second entry shows that 3,994 studies had two previous studies to cite, etc. Table 1 first of all suggests that Szava-Kovats (2008) may have a point when stating that citing all relevant research would not be possible. As can be seen from the table, a substantial number of studies could cite as many as 20, 50 or even 100 previous related studies (i.e. studies included in the same Cochrane review). Few journals would allow that many references; in fact, some journals impose limits on the number of references allowed (Cohen, 2006).

| Citable studies | Number of studies | Citable studies | Number of studies | Citable studies | Number of studies | Citable studies | Number of studies |
|---|---|---|---|---|---|---|---|
| 1 | 4644 | 26 | 496 | 51 | 136 | 76 | 50 |
| 2 | 3994 | 27 | 516 | 52 | 125 | 77 | 8 |
| 3 | 3476 | 28 | 486 | 53 | 138 | 78 | 39 |
| 4 | 3274 | 29 | 492 | 54 | 185 | 79 | 77 |
| 5 | 2870 | 30 | 418 | 55 | 171 | 80 | 31 |
| 6 | 2595 | 31 | 386 | 56 | 78 | 81 | 61 |
| 7 | 2120 | 32 | 411 | 57 | 104 | 82 | 41 |
| 8 | 2086 | 33 | 346 | 58 | 79 | 83 | 45 |
| 9 | 1915 | 34 | 393 | 59 | 118 | 84 | 20 |
| 10 | 1718 | 35 | 249 | 60 | 53 | 85 | 44 |
| 11 | 1626 | 36 | 302 | 61 | 108 | 86 | 97 |
| 12 | 1416 | 37 | 336 | 62 | 76 | 87 | 50 |
| 13 | 1339 | 38 | 317 | 63 | 43 | 88 | 34 |
| 14 | 1154 | 39 | 239 | 64 | 102 | 89 | 84 |
| 15 | 1204 | 40 | 288 | 65 | 78 | 90 | 27 |
| 16 | 1117 | 41 | 193 | 66 | 104 | 91 | 22 |
| 17 | 948 | 42 | 267 | 67 | 104 | 92 | 44 |
| 18 | 870 | 43 | 172 | 68 | 52 | 93 | 23 |
| 19 | 835 | 44 | 269 | 69 | 84 | 94 | 30 |
| 20 | 835 | 45 | 212 | 70 | 96 | 95 | 3 |
| 21 | 720 | 46 | 166 | 71 | 19 | 96 | 36 |
| 22 | 751 | 47 | 188 | 72 | 120 | 97 | 4 |
| 23 | 699 | 48 | 150 | 73 | 72 | 98 | 52 |
| 24 | 606 | 49 | 161 | 74 | 33 | 99 | 20 |
| 25 | 604 | 50 | 161 | 75 | 53 | 100 | 22 |
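A tally like the one in Table 1 can be computed from publication years alone. The sketch below is a hypothetical illustration rather than the study's own code: each review is assumed to be a plain list of the publication years of its included studies, citable studies are counted with the same-year-or-older rule from the Methods, and studies with no citable study are skipped because Table 1 starts at one. The function name `citable_count_distribution` is illustrative.

```python
from collections import Counter
from typing import Iterable, List


def citable_count_distribution(reviews: Iterable[List[int]]) -> Counter:
    """Tally studies by their number of citable studies, in the layout of Table 1.

    Each review is assumed to be the list of publication years of its included
    studies; a study's citable studies are the other studies in the same review
    published in the same year or earlier.
    """
    distribution: Counter = Counter()
    for years in reviews:
        for i, year in enumerate(years):
            n_citable = sum(1 for j, other in enumerate(years) if j != i and other <= year)
            if n_citable > 0:  # Table 1 starts at one citable study
                distribution[n_citable] += 1
    return distribution


if __name__ == "__main__":
    # Two hypothetical reviews.
    reviews = [[1995, 1998, 2001, 2001], [2000, 2003]]
    for n_citable, n_studies in sorted(citable_count_distribution(reviews).items()):
        print(n_citable, n_studies)
```

In the toy input, the two studies from 2001 each have three citable studies, since they count each other as well as the two older studies.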

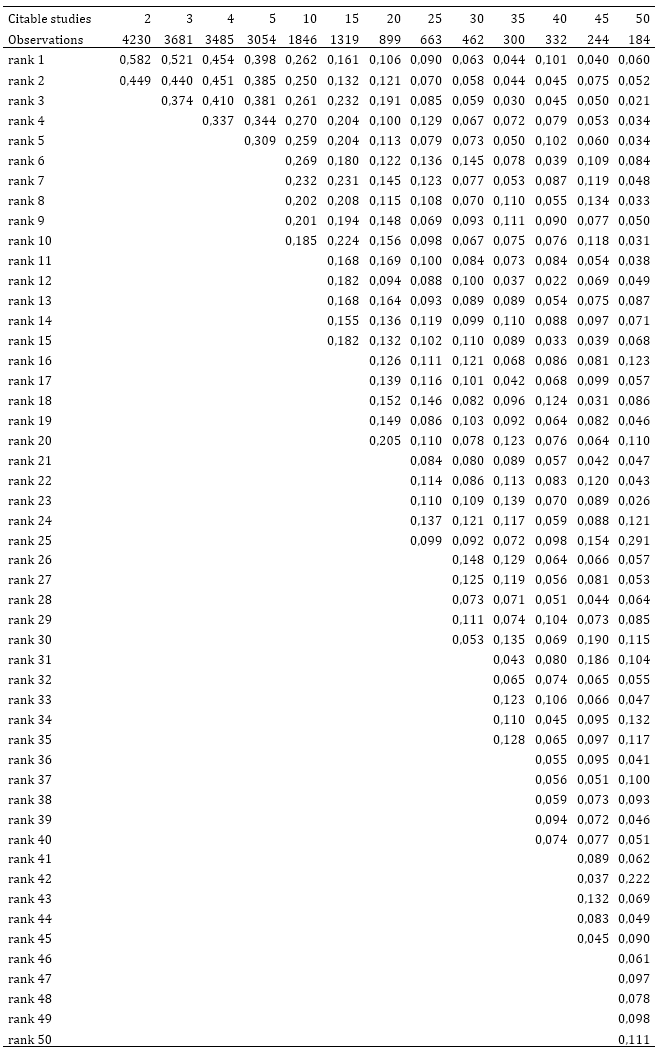

According to one of the existing rules of thumb, authors should credit the seminal work within the field, i.e. the study ranked first in our data set. The following figures illustrate the share of cited and citable studies. An excerpt of the data is available in Appendix 1.

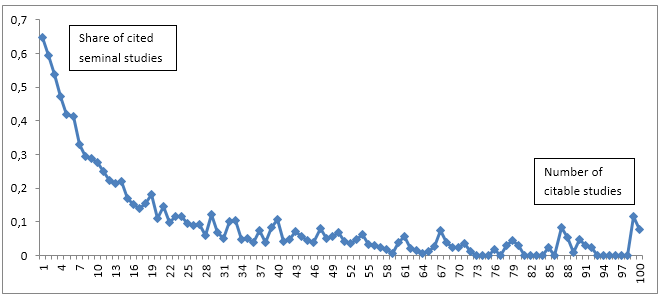

Figure 1 provides an overview of the number of citable studies and the share of cited seminal studies.

Figure 1: Shares of cited seminal studies (rank 1). Only shares for studies with up to 100 citable studies are shown.

As Figure 1 shows, the share of cited seminal studies decreases sharply as the number of citable studies increases: authors cite a greater share of the previous studies if there are fewer. If there are one or two previous studies, about half of the studies cite the seminal work. If there are five previous studies, more than one third of the studies cite the seminal study. However, if there are 50 previous studies, only about 8 per cent cite the paper(s) with rank 1. Such decreasing shares are to be expected as the number of studies increases, since it is more difficult to keep up with the related literature if there are 50 preceding studies. It seems that there is a tendency towards citing seminal work more when the number of citable studies is low. These results confirm the findings of Robinson and Goodman (2011), who find that a median of two trials were cited, regardless of the number of prior trials that had been conducted; a roughly constant number of cited trials implies that the share citing any particular trial, including the seminal one, must fall as the number of prior trials grows.
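The share plotted in Figure 1 can be computed from one record per pair of citing study and citable study. The sketch assumes a hypothetical record layout `(number of citable studies, rank of the citable study, cited?)`. Because several studies may tie for rank 1, it counts each tied seminal study as its own pair; this is one possible reading of "the paper(s) with rank 1" and not necessarily how the figure was produced. `seminal_share` is an illustrative name.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical record layout, one per (citing study, citable study) pair:
# (number of citable studies of the citing study, rank of the citable study, cited?)
Record = Tuple[int, int, bool]


def seminal_share(records: Iterable[Record]) -> Dict[int, float]:
    """Share of pairs involving a rank-1 (seminal) study that are actual citations,
    grouped by the citing study's number of citable studies."""
    cited: Dict[int, int] = defaultdict(int)
    total: Dict[int, int] = defaultdict(int)
    for n_citable, rank, was_cited in records:
        if rank == 1:  # tied seminal studies each contribute their own pair
            total[n_citable] += 1
            cited[n_citable] += int(was_cited)
    return {k: cited[k] / total[k] for k in sorted(total)}


if __name__ == "__main__":
    records = [
        (1, 1, True), (1, 1, False),  # two citing studies with one citable study each
        (2, 1, True), (2, 2, False),  # one citing study with two citable studies
        (5, 1, False), (5, 3, True),  # partial records for a study with five citable studies
    ]
    print(seminal_share(records))  # {1: 0.5, 2: 1.0, 5: 0.0}
```

Plotting the returned dictionary against its keys gives a curve of the same kind as Figure 1.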

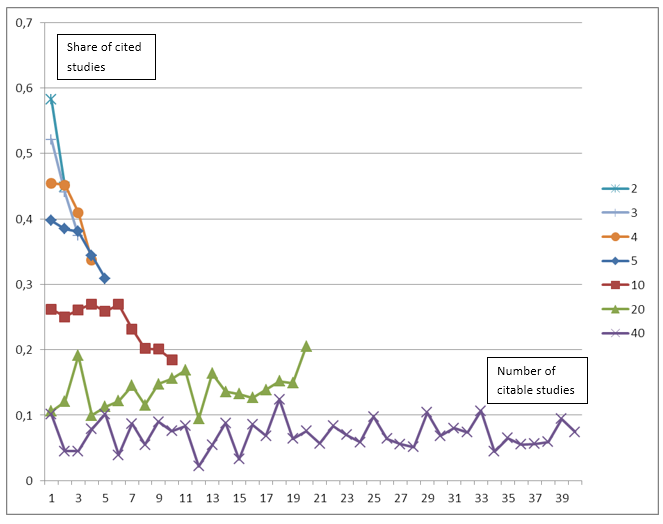

Figure 2 shows the share of papers citing preceding studies by study rank, for studies with 2, 3, 4, 5, 10, 20 and 40 citable studies. The figure should be read as follows: the line for e.g. 10 citable studies shows, for each rank from 1 to 10, the share of studies citing the study with that rank; the value 0.26 at rank 3 means that 26 per cent of the studies that could cite exactly 10 previous studies cited the study with rank 3. The figure clearly shows (as does Figure 1) that the fewer citable studies there are, the higher the share of them that is actually cited. We can, however, also see that for studies that could potentially cite many preceding studies, the share of studies citing a particular study is remarkably independent of study rank. There are practically no visible differences between the share of papers citing the seminal work and the share citing any other study. Taking studies with 40 prior trials as an example, the share citing a prior trial lies between 2 and 12 per cent, irrespective of that trial’s rank. The share fluctuates somewhat along the ranking, but there is no clear tendency towards citing either seminal or more recent work. Statistical analyses carried out for all ranks and numbers of preceding citable studies confirm that there is no clear pattern towards citing either seminal work or recent work.
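The per-rank shares in Figure 2 follow from the same kind of record, restricted to citing studies with a fixed number of citable studies. The paper does not specify which statistical analyses were used, so the least-squares slope of share against rank below is only an illustrative trend check swapped in for this sketch, not the author's test. The record layout and the names `share_by_rank` and `least_squares_slope` are hypothetical. A slope near zero corresponds to the absence of a preference for either seminal or recent work.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical record layout: (n_citable of citing study, rank of citable study, cited?)
Record = Tuple[int, int, bool]


def share_by_rank(records: Iterable[Record], n_citable: int) -> Dict[int, float]:
    """For citing studies with exactly n_citable citable studies, the share citing each rank."""
    cited: Dict[int, int] = defaultdict(int)
    total: Dict[int, int] = defaultdict(int)
    for k, rank, was_cited in records:
        if k == n_citable:
            total[rank] += 1
            cited[rank] += int(was_cited)
    return {rank: cited[rank] / total[rank] for rank in sorted(total)}


def least_squares_slope(shares: Dict[int, float]) -> float:
    """Ordinary least-squares slope of share regressed on rank.

    A negative slope would mean older (low-rank) studies are cited more, a positive
    slope that more recent studies are cited more; near zero means no age trend.
    """
    ranks = list(shares)
    if len(ranks) < 2:
        raise ValueError("need at least two distinct ranks")
    values = [shares[r] for r in ranks]
    mean_rank = sum(ranks) / len(ranks)
    mean_value = sum(values) / len(values)
    sxx = sum((r - mean_rank) ** 2 for r in ranks)
    sxy = sum((r - mean_rank) * (v - mean_value) for r, v in zip(ranks, values))
    return sxy / sxx


if __name__ == "__main__":
    # Hypothetical records for citing studies with three citable studies each.
    records = [
        (3, 1, True), (3, 1, False),
        (3, 2, True), (3, 2, False),
        (3, 3, True), (3, 3, False),
        (4, 1, True),  # ignored: different number of citable studies
    ]
    shares = share_by_rank(records, 3)
    print(shares)                       # {1: 0.5, 2: 0.5, 3: 0.5}
    print(least_squares_slope(shares))  # slope of 0.0: no trend with rank
```

A formal analysis would also need to account for the varying number of records per rank; the plain slope treats every rank equally.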

Figure 2: Share of studies citing preceding studies by study rank

Discussion and conclusion

Before discussing the implications of the present study, we consider its limitations. First of all, the present study relies on Web of Science for identifying citation patterns. This means that if a reference is not indexed in Web of Science, it is not included in the data set. Consequently, a proportion of cited studies are excluded from the analysis, which also affects the ranks of the studies. The excluded studies could even include seminal studies. Any missing seminal studies would affect the results, as the second or even third study may then be considered seminal in the data set used in the analysis. The characteristics of the missing studies have not been analysed, and consequently their importance for the results has not been assessed. Secondly, the rank of a publication can only be determined based on publication year, as the publication month is not included in the bibliographic databases. Depending on the month of publication, some studies are more likely than others to be able to cite a previous study. Ideally, information on month of publication and on publication lags would also be taken into account to determine the relative age of the studies in the data set more precisely. However, that would seriously limit the data sample, as this information may not even be available. It does mean, though, that conclusions should concern general patterns with respect to older or more recent studies rather than specific ranks. Thirdly, this study does not take into account that the authors of a paper might choose to cite a literature review instead of the original papers. In that case, the authors may not find it necessary to cite the seminal paper as well. It is, however, not within the scope of this study to analyse whether authors cite reviews instead of original papers, and this question is left for future research.

The data set used in the present paper, constructed on the basis of inclusion in Cochrane reviews, shows that a substantial number of studies could potentially cite as many as 20, 50 or even 100 preceding, closely related studies. Furthermore, the results of our analysis show that the share of actually cited studies decreases as the number of potentially citable studies increases. Authors cite a greater share of the previous studies if there are fewer studies to choose from. The results also reveal a tendency towards citing seminal work more when the number of citable studies is low. In general, however, there does not seem to be any clear preference for citing either seminal work or more recent work. The findings could potentially be explained by authors following other rules of thumb, such as citing “more methodologically or conceptually rigorous” studies (Campion, 1997: 166).

MacRoberts and MacRoberts (2010) argue that all relevant references should be made available to readers, upon request or in an appendix, to make the invisible more visible. They present a number of examples where that may be feasible, but within a number of fields the task would be enormous and probably unmanageable. Prioritisation is probably inevitable, as recognised by Cronin (2005: 1506):

Truth be told, we are invariably challenged to cite the most precise and most relevant work on a given subject, for the simple reason that few, if any, of us are wholly and authoritatively familiar with the scattered literature of our specialties, let alone the wider scientific literature. Even if we were familiar with the entire corpus of relevant literature, we would still have to make difficult choices.

Cronin further argues that the reference list will never be a complete record of the influences on a given work. A number of factors are at play when authors decide to cite one piece of work and leave another, related study uncited. He argues that this is not due to systematic biases, but that factors such as social relationships leave their mark on reference lists.

There is a lack of clear and explicit rules or guidelines on how to prioritize equally relevant references. As Campion (1997: 165) puts it: “There is almost nothing helpful written on this topic, and few people in the profession can even identify how they learned to do it.” Consequently, Campion suggests that prioritizing relevant references should be a skill taught to aspiring researchers. However, as the literature offers so little advice on how to select references, developing guidelines should be the first priority. Campion argues that a set of guidelines or standards is needed. Bibliometricians already have experience in developing guidelines, e.g. on the ethics of evaluative bibliometrics (Furner, 2014), on the evaluation of research institutes in the natural sciences (Bornmann et al., 2014), and on analysing bibliometric data and presenting and interpreting the results (Bornmann et al., 2008).

The health care research field has acknowledged the problems of poor reporting standards by establishing numerous reporting guidelines. A reporting guideline is “a checklist, flow diagram, or explicit text to guide authors in reporting a specific type of research, developed using explicit methodology” (Moher, Schulz, Simera and Altman, 2010). A systematic review identified 81 different reporting guidelines (Moher et al., 2011), none of which concerns referencing. An overview of nearly 300 reporting guidelines for the main study types is available in the EQUATOR Network database of reporting guidelines (http://www.equator-network.org).

The bibliometrics community needs to consider the ever-expanding scientific literature and the need for guidelines on how to prioritize the relevant literature in the citing process. The pool of relevant literature can be too large to cite exhaustively, and journal space limitations may even mandate a reduced number of cited studies. Consequently, a selection process is necessary. Let us help aspiring researchers with frameworks or guidelines that can assist them in their decisions.

Acknowledgements

The author is grateful to David Hammer for valuable assistance with the data collection.

About the author

Tove Faber Frandsen is head of Videncentret at Odense University Hospital, Denmark. She received her PhD in library and information science from the Royal School of Library and Information Science, Copenhagen, Denmark. She can be contacted at: t.faber@videncentret.sdu.dk

References

- Borgerson, K. (2014). Redundant, secretive, and isolated: when are clinical trials scientifically valid? Kennedy Institute of Ethics Journal, 24(4), 385-411.

- Bornmann, L., Bowman, B. F., Bauer, J., Marx, W., Schier, H., & Palzenberger, M. (2014). Bibliometric standards for evaluating research institutes in the natural sciences. In B. Cronin & C. R. Sugimoto (Eds.), Beyond bibliometrics: harnessing multidimensional indicators of scholarly impact (chapter 11). Cambridge, MA: MIT Press.

- Bornmann, L., & Mutz, R. (2015). Growth rates of modern science: a bibliometric analysis based on the number of publications and cited references. Journal of the Association for Information Science and Technology, 66(11), 2215-2222.

- Bornmann, L., Mutz, R., Neuhaus, C., & Daniel, H.-D. (2008). Citation counts for research evaluation: standards of good practice for analyzing bibliometric data and presenting and interpreting results. Ethics in Science and Environmental Politics, 8(1), 93-102.

- Campbell, F. M. (1990). National bias: a comparison of citation practices by health professionals. Bulletin of the Medical Library Association, 78, 376-382.

- Campbell, M. J., & Walters, S. J. (2014). How to design, analyse and report cluster randomised trials in medicine and health related research. New York: John Wiley & Sons.

- Campion, M. A. (1997). Rules for references: Suggested guidelines for choosing literary citations for research articles in applied psychology. Personnel Psychology, 50(1), 165.

- Chakraborti, A. K. (2007). Comments on non-citation by H.R. Shaterian et al. [J. Mol. Catal. A: Chem. 272 (2007) 142–151] of the original work on the discovery of HClO4–SiO2. Journal of Molecular Catalysis A: Chemical, 266.

- Clark, T., Davies, H., & Mansmann, U. (2014). Five questions that need answering when considering the design of clinical trials. Trials, 15(1), 286.

- Clarke, M. (2004). Doing new research? Don't forget the old. PLoS Medicine, 1(2), 100.

- Clarke, M., Alderson, P., & Chalmers, I. (2002). Discussion sections in reports of controlled trials published in general medical journals. JAMA, 287, 2799-2801.

- Clarke, M., & Chalmers, I. (1998). Discussion sections in reports of controlled trials published in general medical journals: islands in search of continents? JAMA, 280, 280-282.

- Clarke, M., Hopewell, S., & Chalmers, I. (2007). Reports of clinical trials should begin and end with up-to-date systematic reviews of other relevant evidence: a status report. Journal of the Royal Society of Medicine, 100, 187-190.

- Clarke, M., Hopewell, S., & Chalmers, I. (2010). Clinical trials should begin and end with systematic reviews of relevant evidence: 12 years and waiting [Letter]. Lancet, 376, 20-21.

- Cohen, H. (2006). How to write a patient case report. American Journal of Health-System Pharmacy, 63, 1888–1892.

- Colquitt, J. A. (2013). Crafting references in AMJ submissions. Academy of Management Journal, 56(5), 1221-1224.

- Cox, C. (2011). When does clinical equipoise cease to exist? Commentary on an article by Ujash Sheth, BHSc, et al.: Poor citation of prior evidence in hip fracture trials. The Journal of Bone and Joint Surgery (American Volume), 93(22), e137(1)-e137(2).

- Cronin, B. (2005). A hundred million acts of whimsy? Current Science, 89, 1505–1509.

- Fergusson, D., Glass, K. C., Hutton, B., & Shapiro, S. (2005). Randomized controlled trials of aprotinin in cardiac surgery: could clinical equipoise have stopped the bleeding? Clinical Trials, 2, 218-229.

- Furner, J. (2014). The ethics of evaluative bibliometrics. In B. Cronin & C. R. Sugimoto (Eds.), Beyond bibliometrics: harnessing multidimensional indicators of scholarly impact. Cambridge, MA: MIT Press.

- Green, B. N., & Johnson, C. D. (2006). How to write a case report for publication. Journal of Chiropractic Medicine, 5(2), 72-82.

- Green, S., & Higgins, J. (2005). Glossary. In J. P. T. Higgins & S. Green (Eds.), Cochrane handbook for systematic reviews of interventions 4.2.5 [updated May 2005]. The Cochrane Library, (3).

- Harzing, A. W. (2002). Are our referencing errors undermining our scholarship and credibility? The case of expatriate failure rates. Journal of Organizational Behavior, 23(1), 127-148.

- Johnson, C., & Green, B. (2009). Submitting manuscripts to biomedical journals: common errors and helpful solutions. Journal of Manipulative and Physiological Therapeutics, 32(1), 1-12.

- Larivière, V., Archambault, É., & Gingras, Y. (2008). Long‐term variations in the aging of scientific literature: From exponential growth to steady‐state science (1900–2004). Journal of the American Society for Information Science and Technology, 59(2), 288-296.

- MacRoberts, M. H., & MacRoberts, B. R. (2010). Problems of citation analysis: A study of uncited and seldom‐cited influences. Journal of the American Society for Information Science and Technology, 61(1), 1-12.

- Martín-Martín, A., Orduña-Malea, E., Ayllón, J. M., & López-Cózar, E. D. (2015). Reviving the past: the growth of citations to old documents. arXiv:1501.02084.

- Moher, D., Schulz, K. F., Simera, I., & Altman, D. G. (2010). Guidance for developers of health research reporting guidelines. PLoS Medicine, 7(2), e1000217.

- Moher, D., Stewart, L., & Shekelle, P. (2015). All in the family: systematic reviews, rapid reviews, scoping reviews, realist reviews, and more. Systematic Reviews, 4(1), 1.

- Moher, D., Weeks, L., Ocampo, M., Seely, D., Sampson, M., Altman, D. G., Schulz, K. F., Miller, D., Simera, I., Grimshaw, J., & Hoey, J. (2011). Describing reporting guidelines for health research: a systematic review. Journal of Clinical Epidemiology, 64(7), 718-742.

- Nelson, H. D. (2014). Systematic reviews to answer health care questions. Lippincott Williams & Wilkins.

- van Raan, A. F. J. (2000). On growth, ageing, and fractal differentiation of science. Scientometrics, 47(2), 347-362.

- Resnik, D. B. (2003). Exploitation in biomedical research. Theoretical Medicine and Bioethics, 24(3), 233-259.

- Robinson, K. A., & Goodman, S. N. (2011). A systematic examination of the citation of prior research in reports of randomized, controlled trials. Annals of Internal Medicine, 154(1), 50-55.

- Robinson, K. A., Dunn, A. G., Tsafnat, G., & Glasziou, P. (2014). Citation networks of related trials are often disconnected: implications for bidirectional citation searches. Journal of Clinical Epidemiology, 67(7), 793-799.

- Sackett, D. L. (1994). Cochrane Collaboration. BMJ: British Medical Journal, 309(6967), 1514.

- Sawin, V. I., & Robinson, K. A. (2015). Biased and inadequate citation of prior research in reports of cardiovascular trials is a continuing source of waste in research. Journal of Clinical Epidemiology, 69, 174-178.

- Schmidt, L. M., & Gøtzsche, P. C. (2005). Of mites and men: reference bias in narrative review articles: a systematic review. The Journal of Family Practice, 54, 334-338.

- Sheth, U., Simunovic, N., Tornetta, P., 3rd, Einhorn, T. A., & Bhandari, M. (2011). Poor citation of prior evidence in hip fracture trials. Journal of Bone and Joint Surgery (American Volume), 93(22), 2079-2086.

- Sutton, A. J., Cooper, N. J., & Jones, D. R. (2009). Evidence synthesis as the key to more coherent and efficient research. BMC Medical Research Methodology, 9(1), 29.

- Szava-Kovats, E. (2008). Phenomenon and manifestation of the 'Author's Effect of Showcasing' (AES): a literature science study, I. Emergence, causes and traces of the phenomenon in the literature, perception and notion of the effect. Journal of Information Science, 34(1), 30-44.

- Tabah, A. N. (1999). Literature dynamics: studies on growth, diffusion, and epidemics. In M. E. Williams (Ed.), Annual Review of Information Science and Technology (ARIST), vol. 34 (pp. 249-286). Medford, NJ: Information Today.

- Verstak, A., Acharya, A., Suzuki, H., Henderson, S., Iakhiaev, M., Lin, C. C. Y., & Shetty, N. (2014). On the shoulders of giants: the growing impact of older articles. arXiv preprint arXiv:1411.0275.