Short paper

Systematic reviews as object to study relevance assessment processes

Ingeborg Jäger-Dengler-Harles, Tamara Heck, and Marc Rittberger.

Introduction. Systematic reviews are a method to synthesise research results for evidence-based decision-making on a specific question. Information seeking and information behaviour play a crucial role in this method and might strongly influence the outcomes of a review. This paper proposes an approach to understanding the relevance assessment and decision-making of researchers who conduct systematic reviews.

Method. A systematic review was conducted to build a data set for text-based qualitative analyses of researchers’ decision-making in review processes.

Analysis. The analysis focuses on the selection process of retrieved articles and introduces a method for investigating the relevance assessment processes of researchers.

Results. There are different methods of conducting reviews in research, and relevance assessment of documents within those processes is neither one-directional nor standardised. Research on the information behaviour of researchers involved in those reviews has not looked at relevance assessment steps and their influence on a review’s outcomes.

Conclusions. A reason for the variety and inconsistency of review types might be that information seeking and relevance assessment are much more complex than guidelines suggest, and that researchers might not be able to retrace their concrete decisions. This paper proposes a research study to investigate researcher behaviour while synthesising research results for evidence-based decision-making.

DOI: https://doi.org/10.47989/irisic2024

Introduction

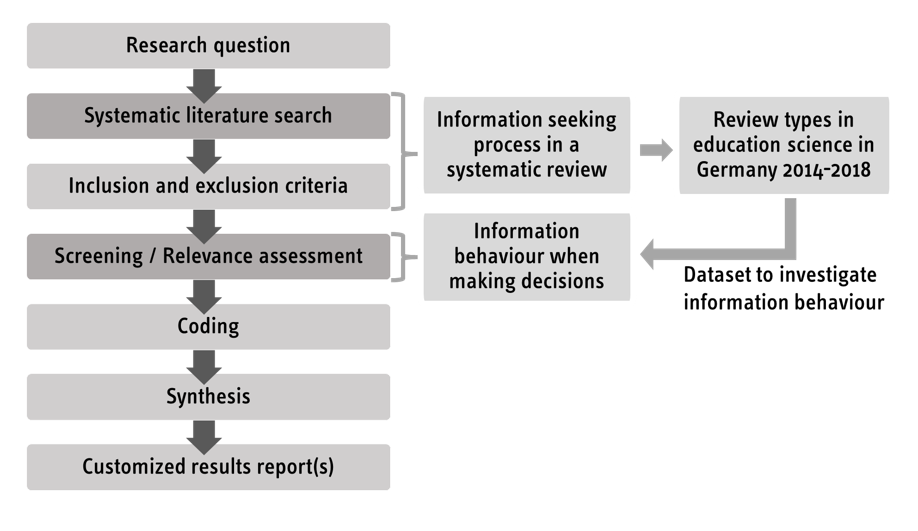

Research reviews are a scientific method in their own right that has gained attention over the last decades (Gough et al., 2017). More precisely, there are different types of reviews that differ in their scope and method, so that we should rather speak of a family of review types. Grant and Booth (2009) identified fourteen types of reviews, for example critical reviews, literature reviews, mapping reviews, meta-analyses, scoping reviews and umbrella reviews (reviews of reviews). A well-known type is the systematic review, which ‘seeks to systematically search for, appraise and synthesis[e] research evidence, often adhering to guidelines on the conduct of a review’ (Grant and Booth, 2009, p. 102). Systematic reviews are applied as a stand-alone method in research. They are formalised and follow a well-structured, fixed step-by-step plan (Gough et al., 2017), as illustrated in figure 1. Crucial aspects of the systematic review approach are the accuracy and transparency of each individual process involved, which are meant to guarantee high-quality research findings. Guidelines for those processes exist for different research disciplines, such as those by Cochrane for medical systematic reviews (Cochrane, 2019) or by the Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) for systematic reviews in education (Tripney, 2016). Researchers can apply those guidelines to go through the basic processing steps of a systematic review. However, the concrete tasks within a process depend on the review’s goal and its well-defined, precise research question and cannot be determined in a one-size-fits-all manner. Within their concrete review context, researchers face challenges in making decisions on the literature search process, the inclusion and exclusion criteria for research papers and the synthesis of the results.

In all those processes, researchers make relevance decisions. The behaviour of researchers in those decision-making processes has been studied only partially. Merz (2016) focused on the behaviour of information professionals conducting literature searches for systematic reviews in evidence-based medicine. However, how and why researchers decide on the relevance of research papers, and how the processes during a systematic review influence each other, is not fully understood.

This paper is part of a larger PhD study investigating the information behaviour of researchers in systematic review processes. The case under study is educational research reviews in Germany.

Figure 1. Systematic review process (own image).

The research question is formulated according to the SPICE model (setting, perspective, intervention, comparison and evaluation) (Cleyle and Booth, 2006, p. 363):

When preparing systematic reviews in educational research (setting) how are reviewers (perspective) influenced by their information behaviour and comprehension of relevance (intervention) in German research organisations (comparison) when making decisions during the screening process (evaluation)?
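The SPICE decomposition can be made explicit as a small record type. The following Python sketch is illustrative only: the class name `SpiceQuestion` and its field names are our own hypothetical choices, not part of the SPICE model itself; the values are taken from the question above.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class SpiceQuestion:
    """Hypothetical record for the five SPICE components (Cleyle and Booth, 2006)."""
    setting: str
    perspective: str
    intervention: str
    comparison: str
    evaluation: str


question = SpiceQuestion(
    setting="preparing systematic reviews in educational research",
    perspective="reviewers",
    intervention="information behaviour and comprehension of relevance",
    comparison="German research organisations",
    evaluation="decisions during the screening process",
)

# Print each component next to its label, in SPICE order
for component, value in asdict(question).items():
    print(f"{component:>12}: {value}")
```

Keeping the components as named fields makes it easy to check that none of the five elements is missing when the question is refined.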

The paper proposes an approach to investigating the relevance assessment and decision-making processes of researchers who conduct a systematic review. We will discuss the rationale for the approach and its relevance for studying information behaviour during systematic review processes.

The approach combines different methodological steps, including a qualitative text analysis of existing systematic reviews and user studies (qualitative interviews and observations). This paper reports on the first part of the study, i.e. compiling a data set of systematic reviews in educational research in Germany on which to run the qualitative text analysis. The data set was compiled following the first four steps of a systematic review process as shown in figure 1. Based on our own experience of this review process, we will report on relevant aspects to consider within the information seeking and screening process. This experience will inform our study approach and the questions we have for researchers conducting reviews.

The literature section introduces systematic reviews as a scientific method and the relevant guidelines that are currently applied. We then give a theoretical background on information behaviour and discuss the rationale for our study. In the next section, we introduce our own systematic review and discuss practices for constructing information searches.

Literature review

Systematic reviews as a research method

Systematic reviews are a method of gaining evidence-based results; they first became a reputable research method in evidence-based medicine and evidence-based health care (Eldredge, 2000). They are conducted to answer clinical questions on the best therapy or medication in a transparent and reproducible way. Research studies are systematically retrieved, carefully selected and evaluated to produce final results and recommendations that are transferred to practice. In other disciplines such as educational research, systematic reviews are becoming increasingly important. Andrews points out their role in mapping and systematically exploring what is known about a topic and attributes to them a ‘ground-clearing’ function in identifying methodological and theoretical perspectives of a research topic as well as gaps ‘in which the potential and usefulness of systematic reviews are most evident’ (Andrews, 2005, p. 413).

In medicine, the Cochrane Collaboration (Cochrane, 2019) is one of the leading players in systematically addressing clinical research questions. It publishes manuals and guidelines (Cochrane, 2019) which are seen as a ‘gold standard’ for conducting systematic reviews (Fleming et al., 2013) and are an important source of guidance for other fields of research that use this method to produce evidence-based results, such as the social sciences and educational research. The Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) in the United Kingdom is another European player that produces systematic reviews and develops methods and recommendations for systematic review approaches in education (Tripney, 2016). The centre is also part of the European Union network EIPPEE (Evidence Informed Policy and Practice in Education in Europe). Other players in educational research are the Campbell Collaboration in Norway (campbellcollaboration.org) and the Institute of Education Sciences (ies.ed.gov).

Research on (systematic) reviews, especially in medicine and health care, focuses on methods and quality aspects. Instruments like AMSTAR (Assessment of multiple systematic reviews), a measurement tool to assess systematic reviews (Shea et al., 2009), or ROBIS (risk of bias in systematic reviews) (Whiting et al., 2016) provide reviewers with guidance on an exact and transparent procedure. The CERQual approach (confidence in the evidence from reviews of qualitative research) helps assess the confidence in review findings from qualitative evidence syntheses (Lewin et al., 2016). The open access journal Systematic Reviews deals with important aspects of how to conduct systematic reviews (planning, procedure, reporting, protocols), though with a focus on health science. In education science, Gough et al. (2017) published concise and well-structured guidance for each stage of a systematic review.

A recent textbook for educational research by Zawacki-Richter et al. (2020) shows that the method has gained attention in the discipline. Worked examples illustrate each step of the review process and comment on possible pitfalls. Notably, text-mining software is beginning to enter screening processes to facilitate study selection. Nevertheless, reviewers remain responsible for the criteria they set and the decisions they make, whatever information technology is applied.

In summary, a systematic review includes the following steps (figure 1): a formulated research question is analysed in detail to plan the concrete search design. This includes selecting relevant literature databases and other information sources, finding and determining search terms, planning the search strategy and finally running the retrieval. After duplicates are removed, the retrieved documents are filtered by applying inclusion and exclusion criteria derived from the research question. In general, this first screening is done by assessing the titles and abstracts of the documents. Afterwards, a second screening is done on the basis of the full texts. These relevance assessment steps must be completed before the data analysis steps of a systematic review can proceed. The literature assessed as relevant is then forwarded for coding and synthesis to answer the research question. Documentation of each step and its findings is mandatory to guarantee the confirmability of the results.
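The sequence of screening steps summarised above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions: the `Record` type, the naive deduplication by `record_id` and the two screening predicates are hypothetical placeholders for what reviewers actually decide, and the example records are invented. Returning every intermediate stage mirrors the counts that a PRISMA-style flow diagram reports.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Record:
    # Hypothetical minimal bibliographic record
    record_id: str
    title: str
    abstract: str
    full_text: str = ""


def run_screening(
    retrieved: list[Record],
    title_abstract_ok: Callable[[Record], bool],
    full_text_ok: Callable[[Record], bool],
) -> dict[str, list[Record]]:
    """Deduplicate, screen titles/abstracts, then screen full texts; keep every stage."""
    seen: set[str] = set()
    unique: list[Record] = []
    for rec in retrieved:  # naive deduplication by identifier
        if rec.record_id not in seen:
            seen.add(rec.record_id)
            unique.append(rec)
    first = [r for r in unique if title_abstract_ok(r)]  # first screening
    included = [r for r in first if full_text_ok(r)]     # second (full-text) screening
    # Returning all stages supports documenting each step
    return {"unique": unique, "after_first_screening": first, "included": included}


# Invented example records; the lambdas stand in for reviewers' judgements
records = [
    Record("a", "Review of tutoring", "education", "meets criteria"),
    Record("a", "Review of tutoring", "education", "meets criteria"),
    Record("b", "Clinical trial", "medicine"),
    Record("c", "Review of homework", "education", "off topic"),
]
stages = run_screening(
    records,
    title_abstract_ok=lambda r: "education" in r.abstract,
    full_text_ok=lambda r: r.full_text == "meets criteria",
)
print({stage: len(items) for stage, items in stages.items()})
# {'unique': 3, 'after_first_screening': 2, 'included': 1}
```

The predicates are deliberately trivial; in a real review each one stands for documented human decisions, which is exactly the part this study examines.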

However, many decisions within these processes and workflows are reported neither in the review documents nor elsewhere, although guidance and reporting standards call for careful and comprehensive documentation of these selection steps for reasons of transparency and reproducibility. Guidelines suggest information management steps (Brunton et al., 2017b), and the reasoning is plausible with regard to quality assurance:

A third reason is so that the review team can justify or defend their decisions. At the end of the process, reviewers may be required to defend an action such as why a particular study was or was not included in the review. (Brunton et al., 2017b, p. 150).

Current sources on how to conduct systematic reviews as a research method clearly aim at structuring the information seeking and relevance assessment processes. These guidelines appear to describe those processes as if they could be applied and documented in a fully transparent and detailed way. However, as noted above, in most cases reviews lack consistent documentation of relevant decision steps. The reason for this might be that information seeking and relevance assessment are much more complex than the guidelines are able to show.

Information behaviour research

Research and theories on information seeking demonstrate that its processes are quite complex and hard for people to retrace. Information seekers are influenced by affective, cognitive and physical modes (Kuhlthau, 1991). They might face diverse cognitive barriers (Savolainen, 2015) that influence information seeking. Information seeking is not an isolated process but should be seen within the broader perspective of human information behaviour (Wilson, 2000; Ford, 2015). Moreover, information seeking processes might be non-linear (Foster, 2005), in contrast to the structured review process proposed in figure 1. This is particularly striking in a systematic review, as reviews are often conducted in research teams with iterative agreement processes between information specialists, who are responsible for the literature search, and field experts, who formulate the question and carry out the relevance assessment. A further aspect is the information literacy (CILIP Information Literacy Group, 2018) of searchers, which influences search quality.

Recent research often refers to theories and models of information behaviour to analyse processes of information seeking and relevance assessment. Wilson uses the concept of human information behaviour and defines it as follows:

Information behaviour is the totality of human behaviour in relation to sources and channels of information, including both active and passive information seeking, and information use. Thus, it includes face-to-face communication with others, as well as the passive reception of information as in, for example, watching TV advertisements, without any intention to act on the information given. (Wilson, 2000, p. 49).

Context (e.g., the workplace) plays an important role as well and should be included in the definition, as Pettigrew et al., consistent with Wilson, recommend: information behaviour is ‘the study of how people need, seek, give and use information in different contexts, including the workplace and everyday living’ (Pettigrew et al., 2001, p. 44). Ford (2015) stresses that human information behaviour research should focus not only on the individual, but also on interaction processes with other people, teams, organisations or communities. Besides actively searching for information, there are unintentional or passive behavioural patterns such as coming across information by chance (serendipity), as well as intentionally avoiding information (Case, 2012). Related to information behaviour in general are studies that focus more specifically on relevance assessment. This research investigates how people or groups of people decide on relevant information and what influences them. Topics include, for example, the consistency of relevance judgements and the measurement of relevance. An exhaustive summary is provided by Saracevic (2007a, 2007b).

Relevance assessment and decision-making in systematic review processes

Research has introduced over seventy theories, models and frameworks of information behaviour (Fisher et al., 2005), which show the high complexity and diversity of influences on information seeking and assessment. These aspects have not been fully considered within the methodological approaches of systematic reviews. Systematic reviews are an established research method; their results generally enjoy a high reputation and have a strong impact in guiding educational practice. In Germany, large review projects are currently funded by the Federal Ministry of Education and Research. These projects aim at investigating relevant educational questions such as factors of social inequality in education. The review results are intended to inform researchers, practitioners and politicians. In line with this development, the first textbooks on more concrete methodological approaches to systematic reviews have been published (Zawacki-Richter et al., 2020).

The mechanisms of decision-making, and the underlying information flows and determining factors in the process of conducting systematic reviews, have a strong influence on the outcomes of the reviews. Decisions on which databases to search, which publications to include and which publications to consider relevant for deeper analysis are made by the people (single researchers or research groups) involved in the review processes. Thus, their relevance assessment and decision-making will have an impact on the review outcomes, and we want to take a closer look at these processes against the background of information behaviour theories.

There are two essential decision-making processes that influence the results of a systematic review. The first is in the information seeking process, when the actors determine the search strategy, its breadth and depth, and the restrictions of the search. The second is during relevance assessment, when the actors go through the search results and decide on their relevance for the review study.

To guarantee search quality in the information seeking process, the PRESS (peer review of electronic search strategies) guidelines for systematic reviews summarise critical factors to be considered when searching in electronic databases (McGowan et al., 2016). One essential element is the PRESS peer review process supporting decision-making and reaching consensus when developing the search strategy.

The subsequent relevance assessment or screening process is the next essential step within a review process. It is defined as ‘the process of reading each title and abstract to ascertain its relevance’ (Brunton et al., 2017a, p. 119). Normally, this is realised in two stages: a first screening based on title, keywords and abstract, and a second, in-depth screening based on the full texts of the research documents. Published systematic reviews often include a PRISMA flow diagram (preferred reporting items for systematic reviews and meta-analyses) (Moher et al., 2009), showing the number of studies screened at different stages and the criteria for relevance assessment. While the number of relevant studies decreases significantly (often from hundreds or thousands to 100 or fewer), the amount of work increases, as each detailed review step involves multiple checks by different persons (see Brunton et al., 2017b, p. 148). The bare document counts do not reflect the meticulous work behind them: applying inclusion and exclusion criteria and assessing relevance.

Our research focuses on the behaviour of actors during the relevance assessment process. The reviewers’ actions, i.e. their information behaviour, are at the centre of this research project, which contributes to research on the information behaviour of scientists in a special setting (conducting a systematic review) by analysing the essential process of screening and relevance assessment. Decision-making during relevance assessment of research documents is done individually or in groups (collaborative information behaviour), where activities are distributed. These activities involve exchanging and sharing information to keep team members well informed. To guarantee fully transparent documentation, each actor has to explain openly the reasons for their decisions.

Before discussing relevance assessment, we need to describe the concept of relevance. It can best be described as a relation to an object or a context, expressed as a degree of appropriateness (Saracevic, 2016, p. 17) or usefulness. Mizzaro (1997) explains the different kinds of relevance as relations between entities from two groups (p. 811). The first group includes entities such as documents (full texts), surrogates (representations of the document) and information (‘what the user receives when reading a document’); the second group includes entities such as the problem (to be solved), the information need (representation of the problem in the mind of the user), the request (representation of the information need in a language) and the query (representation of the information need in a system language). Relevance is used dynamically and depends on many factors, e.g. state of knowledge, utility, temporal aspects, intentions and accessibility.
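Restricted to the two groups named above, the possible pairings can be enumerated mechanically. The sketch below is an illustration only: it models just these two groups (Mizzaro's framework also covers further dimensions, such as time, that are omitted here), and the list names are hypothetical.

```python
from itertools import product

# First group (Mizzaro, 1997): entities that can be relevant
INFORMATION_ENTITIES = ["document", "surrogate", "information"]
# Second group: entities they can be relevant to
PROBLEM_ENTITIES = ["problem", "information need", "request", "query"]

# Each kind of relevance relates one entity from each group
relevance_kinds = list(product(INFORMATION_ENTITIES, PROBLEM_ENTITIES))

print(len(relevance_kinds))  # 12
print(relevance_kinds[0])    # ('document', 'problem')
```

In screening terms, title and abstract screening judges a surrogate, whereas full-text screening judges the document itself, so the two stages operate on different pairs.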

Bearing in mind the above-mentioned characteristics of relevance, it is clear that in systematic review processes assessing relevance of items (surrogates, documents) is a dynamic activity. During the first screening process (based on title and abstract of the surrogate) different degrees of relatedness (prioritisation, more or less relevant documents) would apply whereas during the second screening process (based on the whole document) content-related relevance criteria apply.

Research design to study relevance assessment of researchers

This work is part of a PhD project that focuses on the screening and relevance assessment process, the crucial element of a review. Here, decisions are made about whether a study meets the reviewers’ criteria or whether a publication is worth coding. This determines the direction of the analysis and synthesis, and thus the final outcomes of a systematic review. The process will be investigated based on theories and models of information behaviour and relevance assessment. The following aspects will be considered: first, the organisation of the screening process, particularly when review team members work together. Second, the chosen review type, which could influence the breadth and depth of the review and thus the level of detail when screening and assessing documents. Third, how reviewers perceive relevance. Furthermore, the project examines whether methods from information or knowledge management are considered and explicitly applied by team members, and whether quality standards and recommendations in guidelines from Campbell, Cochrane or other sources serve as orientation.

The research design consists of two parts: a concise qualitative text analysis of the review documents and a qualitative user study (individual reviewers and review teams).

Qualitative document analysis

The document analysis is the first step in analysing the information behaviour and practices involved in doing systematic reviews. It will be done by means of a qualitative content analysis (thematic analysis) with inductive coding. The analysis of the corpus will focus on the authors’ documentation of relevance assessment processes and their decision-making with regard to the goals of their research question. Relevant questions for the analysis are:

- What kind of review type do the authors use, as stated by themselves and according to the criteria reported by Grant and Booth (2009)?

- Do we find a broad field of different review types or are researchers focussing on a few types?

- Which review process stages are reported, and do authors omit many?

- Do authors list all criteria relevant for the screening process?

- Are knowledge or information management methods being discussed?

- Are the process steps described in a concise and transparent manner to enable traceability?

- Do researchers apply official review guidelines like PRESS?

User studies to investigate relevance assessment processes

As noted above, authors do not use review denominations consistently, and our screenings make it obvious that large parts of the review process are not documented in the publications. Further, we will not gain any insights into the researchers’ experience and behaviour in a concrete relevance assessment process, as these are not part of a publication. Thus, the text analysis will give a first indication of relevance assessment processes but needs to be deepened. A qualitative user study following the document analysis will include individual as well as group interviews and observations.

Relevant questions are:

- How do researchers organise the screening process?

- Do researchers use typical metadata to assess relevance, such as publication type, source or author?

- Do researchers find it easy to explain their relevance decisions?

- How are relevance decisions agreed upon in a group of researchers?

- Do different researchers/teams in educational research select similar criteria?

The empirical results gained from a descriptive document analysis (final corpus) and qualitative user studies should shed light on the characteristics of information behaviour in decision-making during the screening and relevance assessment processes. Suitable theories, models and frameworks of information behaviour will be compared with the mechanisms of the reviewers’ information behaviour. Finally, we will identify potential for improvement in the document selection process and in the reporting of relevance decisions, to support the overarching principles of transparency and reproducibility in systematic reviews.

Corpus for qualitative document analysis

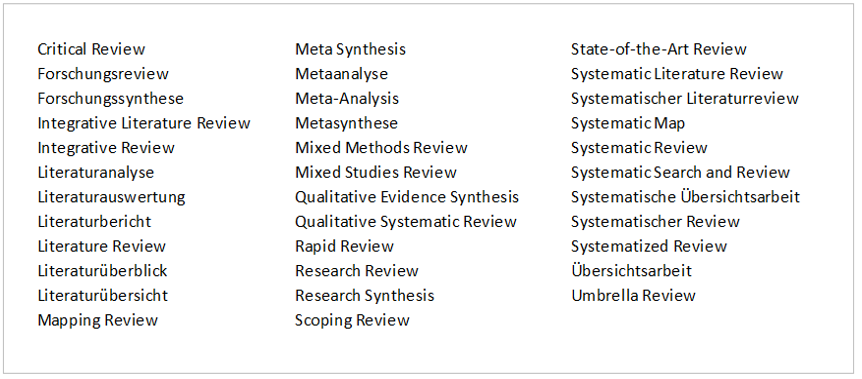

Figure 2. English and German search terms for review types.

The search question for the corpus underlying our qualitative document analysis consists of four blocks: the theme (reviews), the discipline (educational research), the location (conducted by authors from German institutions) and the time (2014 to 2018). Some databases offer fields to address these blocks, but they are not always appropriate. We first report on our choice of terms for the theme block, and then on the choice of databases.

Subject or thematic categories are one option for finding a large number of relevant reviews, but they are not present in every database. For the family of reviews, the question was how detailed the search should be. A broad search strategy looking for the concepts review and education would have buried us in screening work. Thus, we had to find a manageable approach using a subset of specific review terms combined with the concept of educational research. As a starting point, we took the fourteen review types identified by Grant and Booth (2009), supplemented by their German equivalents (see figure 2). We left out the term overview, as it yielded too many irrelevant documents.
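Combining such blocks follows the usual Boolean pattern: alternative terms within a block are joined with OR, and the blocks are joined with AND. The sketch below assumes a generic query syntax that does not correspond to any particular database (each database required its own adaptation); the term lists are a small illustrative subset, the German equivalents from figure 2 are omitted, and the time block is kept as a separate date filter.

```python
def or_block(terms: list[str]) -> str:
    # Quote multi-word phrases and join the alternatives of one block with OR
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(blocks: list[list[str]]) -> str:
    # Combine the concept blocks with AND
    return " AND ".join(or_block(block) for block in blocks)


# Illustrative subset of terms for the theme, discipline and location blocks
theme = ["systematic review", "scoping review", "mapping review", "meta-analysis"]
discipline = ["education", "educational research"]
location = ["Germany"]

query = build_query([theme, discipline, location])
date_filter = {"from": 2014, "to": 2018}  # time block, applied as a filter where supported

print(query)
print(date_filter)
```

Keeping each block as a separate list makes it easy to swap a block for a database field or filter when a database supports one, as several of the databases below did.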

Database selection and search

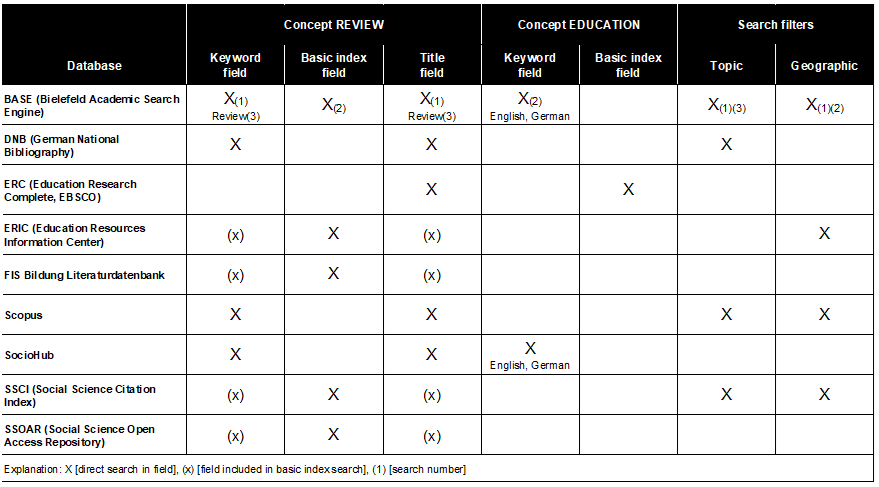

Table 1. Overview of databases and tailored search concepts.

We searched with the terms in figure 2 for documents published between 2014 and 2018 in national and international databases. As educational research is not covered by one or two main databases, as is the case in medicine, we had to search in diverse databases.

An overview of the databases used and the tailored search concepts is given in table 1. Fields that were searched are marked with ‘X’; fields included in a basic index search are marked with ‘(x)’. A search number is added where different queries were conducted (BASE). This overview shows that a search concept suitable for one database could not be transferred to another database without adaptations: fields and search options differ, and vocabularies, classifications and scope vary.

In the following, we briefly describe the databases used:

FIS Bildung Literaturdatenbank (Special Information System Education literature database)

The leading database for educational research in Germany includes not only research literature from Germany, but also literature from the Library of Congress and e-books from EBSCO. As FIS Bildung Literaturdatenbank does not offer many keywords relating to reviews, we undertook a basic index search (including keyword search). As this database focuses on education, further narrowing was not necessary.

ERIC (Education Resources Information Center)

ERIC is the Anglo-American equivalent of FIS Bildung and should be considered, as its roughly 1.8 million references might yield some items relevant to German educational research. The ERIC thesaurus offers three different review concept terms, which would narrow the search for systematic reviews considerably. Therefore, a basic index search combined with a location filter was done.

BASE (Bielefeld Academic Search Engine, Bielefeld University Library)

Searching grey literature is an important point in guidelines for systematic reviews (Kugley et al., 2016). As BASE, a metadata search engine, provides heterogeneous information sources and indexed information, a uniform approach (same level, same search strategy for all review terms) was not possible. A case-by-case strategy, depending on the number of hits retrieved for a specific review type, proved pragmatic and efficient.

DNB - Deutsche Nationalbibliographie (German National Bibliography)

The German National Bibliography is a valuable source for systematically searching publications relating to Germany, as its collection is safeguarded by a legal mandate. As an authority file search combined with DDC class 37* (education) yielded no results, we opted for a title search in combination with DDC class 37*.

ERC (Education Research Complete, EBSCO)

ERC is one of the most comprehensive collections for literature searches in education and related topics. We searched with our review terms in the basic index. Where a search yielded over 200 hits, we combined the term with the concept education research to get manageable results.
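The ERC refinement rule can be written as a simple threshold check. This is a sketch: the function name `erc_query` is hypothetical, the hit counts in the usage lines are invented for illustration, and in practice the count comes from running the search in the database interface.

```python
HIT_THRESHOLD = 200  # from the text: refine when a search yields over 200 hits


def erc_query(review_term: str, hit_count: int) -> str:
    """Return the query for a review term, adding the education research concept if needed."""
    base = f'"{review_term}"'
    if hit_count > HIT_THRESHOLD:
        # Too many hits: combine the term with the education research concept
        return f'{base} AND "education research"'
    return base


# Invented hit counts, for illustration only
print(erc_query("scoping review", 350))  # "scoping review" AND "education research"
print(erc_query("umbrella review", 12))  # "umbrella review"
```

Writing the rule down this explicitly is one way to make a pragmatic search decision reportable, which is the kind of documentation the guidelines call for.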

Scopus

Scopus, an international database covering a multitude of disciplines and research fields, required some thought about how to narrow the results to educational research in Germany. We searched the review terms in the title and keyword fields and selected Social Sciences as subject area and Germany as affiliation or funding institution (filter options).

Social Sciences Citation Index (SSCI, Web of Science)

The SSCI offers many options to refine or analyse search results. First, we refined the hits by selecting the categories Education - Educational Research, Education - Special or Education - Scientific Disciplines. Second, we chose research areas, in this case Education - Educational Research. In both cases, we narrowed down the results using the analyse function Countries/Regions (Germany).

SSOAR (Social Science Open Access Repository)

SSOAR is a social sciences full-text repository that makes literature available on the web according to open access publishing regulations. A search for the different review terms in the basic index yielded relevant results.

SocioHub (Special Information System Sociology)

This meta-search service includes several databases. A search for the source type review combined with the broad concept education returned too many results. Thus, a title and subject search combined with the concept education in German and English was done.
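The database descriptions above can be summarised as a configuration mapping, which makes the lack of a single transferable search concept visible. The sketch records only what the text states; `None` marks details that are not specified (BASE's case-by-case strategy, the SSCI search fields), and the dictionary layout and the helper `databases_using` are hypothetical.

```python
# Search concepts as described in the text; None marks details the text does not specify
SEARCH_CONCEPTS: dict[str, dict] = {
    "FIS Bildung": {"fields": ["basic index"], "restrictions": []},
    "ERIC": {"fields": ["basic index"], "restrictions": ["location filter"]},
    "BASE": {"fields": None, "restrictions": None},  # case-by-case strategy per review type
    "DNB": {"fields": ["title"], "restrictions": ["DDC class 37*"]},
    "ERC": {"fields": ["basic index"],
            "restrictions": ["AND education research if over 200 hits"]},
    "Scopus": {"fields": ["title", "keywords"],
               "restrictions": ["subject area: Social Sciences", "Germany filter"]},
    "SSCI": {"fields": None,
             "restrictions": ["education categories or research area",
                              "Countries/Regions: Germany"]},
    "SSOAR": {"fields": ["basic index"], "restrictions": []},
    "SocioHub": {"fields": ["title", "subject"],
                 "restrictions": ["AND education (German and English)"]},
}


def databases_using(field: str) -> list[str]:
    # Databases whose documented search concept includes the given field
    return sorted(
        name for name, concept in SEARCH_CONCEPTS.items()
        if concept["fields"] and field in concept["fields"]
    )


print(databases_using("title"))        # ['DNB', 'Scopus', 'SocioHub']
print(databases_using("basic index"))  # ['ERC', 'ERIC', 'FIS Bildung', 'SSOAR']
```

Even databases sharing a search field differ in their restrictions, which is why each search concept had to be tailored.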

Decisions in screening process practice

While the qualitative document analysis of the corpus described here will be the next step in our project, we can already report on our own experience of compiling the corpus following systematic review processes. In the following, we discuss our decision-making processes and show some complexities of systematic review approaches.

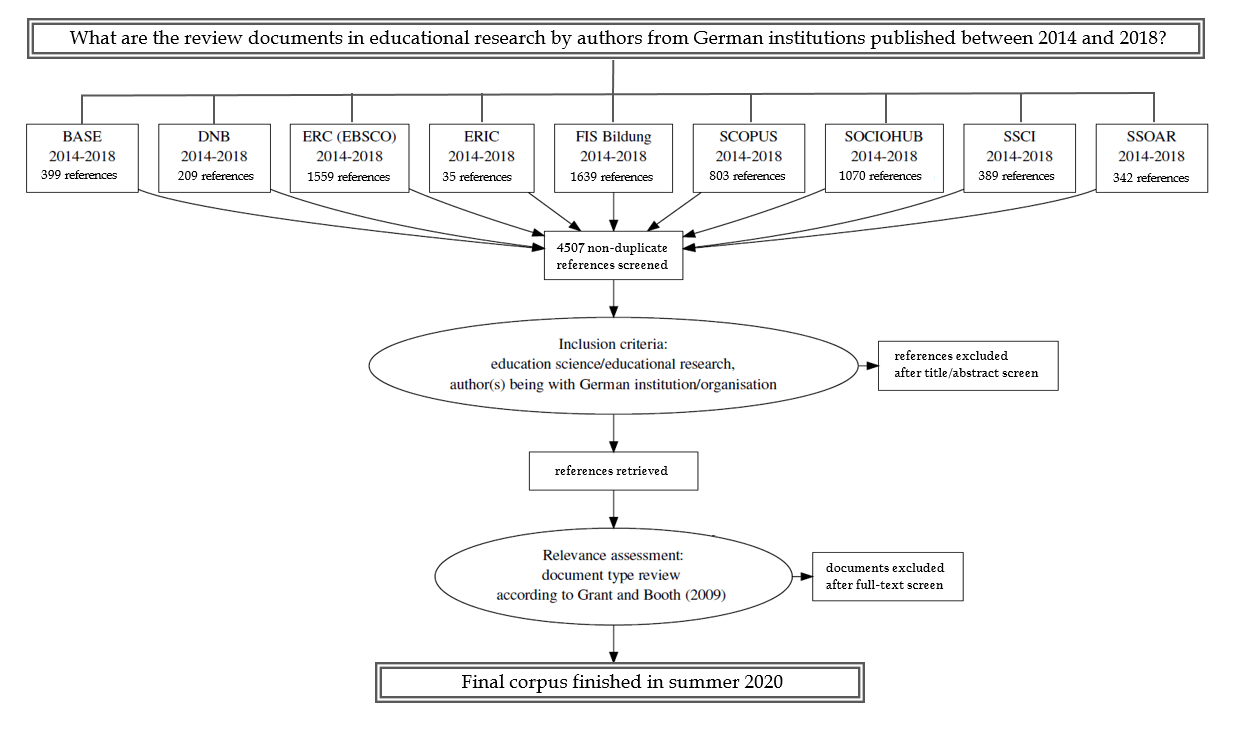

In total, we retrieved 6369 documents; after removing duplicates, our data set included 4507 documents. We then determined further screening factors to reduce our data set to relevant systematic reviews in educational research.

Figure 3. PRISMA diagram.
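The paper does not state how duplicates were identified. One common approach, shown here purely as a hypothetical sketch, uses the DOI where available and otherwise a normalised title plus publication year; the example records and DOIs are invented. The last lines only reproduce the arithmetic of the reported counts (6369 retrieved, 4507 after deduplication).

```python
import re
import unicodedata


def normalise_title(title: str) -> str:
    # Remove combining diacritics, case-fold and collapse punctuation and whitespace
    decomposed = unicodedata.normalize("NFKD", title)
    without_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return re.sub(r"[^a-z0-9]+", " ", without_marks.casefold()).strip()


def dedup_key(record: dict) -> str:
    # Hypothetical key: DOI if present, otherwise normalised title plus year
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return "doi:" + doi
    return f"title:{normalise_title(record.get('title', ''))}|{record.get('year', '')}"


def deduplicate(records: list[dict]) -> list[dict]:
    # Keep the first record for each key, preserving the original order
    seen: set[str] = set()
    unique = []
    for rec in records:
        key = dedup_key(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Invented example records
records = [
    {"title": "Systematic Review: Inklusion in Schulen", "year": 2016},
    {"title": "Systematic review - Inklusion in Schulen", "year": 2016},
    {"doi": "10.1234/EXAMPLE", "title": "Scoping review of tutoring"},
    {"doi": "10.1234/example", "title": "Scoping review of tutoring (preprint)"},
]
print(len(deduplicate(records)))  # 2

# Reported counts: 6369 retrieved, 4507 after deduplication
retrieved, after_dedup = 6369, 4507
print(retrieved - after_dedup)  # 1862 duplicates removed
```

A key this strict can still miss duplicates with differently worded titles, so automatic deduplication is usually followed by a manual check.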

In a nutshell, relevance rating is an evolving process based on different types of criteria. Whereas it is easy to include formal or factual criteria such as publication date in the search query to obtain precise results, other criteria are more complex, e.g. when dealing with broad concepts, where a Boolean NOT could inadvertently exclude relevant items. In those cases, we had to screen and filter relevant documents after the search process. We explain this by means of our actual systematic review of systematic reviews in education.
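The Boolean NOT problem can be illustrated with a toy matcher. The sketch assumes simple substring matching and a hypothetical truncated exclusion stem `medic`; real databases implement truncation and field searching differently, and the titles are invented. It shows how excluding medicine also drops a review on medical education, which may well be relevant for educational research.

```python
def matches(text: str, include: list[str], exclude: list[str]) -> bool:
    # Toy rule: at least one include term AND none of the exclude terms (substring match)
    lowered = text.lower()
    return any(t in lowered for t in include) and not any(t in lowered for t in exclude)


# Invented titles
titles = [
    "Systematic review of feedback in secondary schools",
    "Systematic review of simulation training in medical education",
    "Systematic review of asthma medication",
]

with_not = [t for t in titles if matches(t, ["review"], ["medic"])]
without_not = [t for t in titles if matches(t, ["review"], [])]

print(with_not)          # only the secondary-school review survives
print(len(without_not))  # 3: all kept, left for manual screening
```

Dropping the NOT keeps recall high at the price of more screening work, which is the trade-off described above.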

The first screening focused on excluding documents that did not cover educational research. We did so by excluding about 1 000 documents from journals that distinctly belong to another discipline, based on classifications offered by the German Electronic Journals Library (EZB). In addition, the documents from those journals were double-checked with regard to their topic and included if they covered educational research. The exclusion process was done independently by two reviewers, and a journal or document was excluded only when both reviewers decided to exclude it.
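The conservative two-reviewer rule described above can be expressed as follows. The names `Decision` and `combine` are hypothetical, and the journal identifiers and votes are invented; the sketch encodes only the rule stated in the text, that an item is excluded only when both independent reviewers exclude it.

```python
from enum import Enum


class Decision(Enum):
    INCLUDE = "include"
    EXCLUDE = "exclude"


def combine(reviewer_a: Decision, reviewer_b: Decision) -> Decision:
    # Exclude only if both independent reviewers voted to exclude; any disagreement keeps the item
    if reviewer_a is Decision.EXCLUDE and reviewer_b is Decision.EXCLUDE:
        return Decision.EXCLUDE
    return Decision.INCLUDE


# Invented journal identifiers and votes
votes = {
    "journal-1": (Decision.EXCLUDE, Decision.EXCLUDE),
    "journal-2": (Decision.EXCLUDE, Decision.INCLUDE),
    "journal-3": (Decision.INCLUDE, Decision.INCLUDE),
}
final = {item: combine(a, b) for item, (a, b) in votes.items()}
excluded = [item for item, d in final.items() if d is Decision.EXCLUDE]
print(excluded)  # ['journal-1']
```

The rule favours recall: a disagreement never removes an item, at the cost of more items to check in later screening steps.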

A further criterion concerned authors from German institutions. Our goal is to analyse the different methodological approaches and the impact these reviews have on the German educational system. We concentrated on one country, Germany, because approaches to systematic reviews and the relevant (German-specific) literature databases depend on the specificities of a country's educational system, and we want to be able to compare review processes under similar conditions. Some databases provide an affiliation or institution field for filtering documents. However, these fields are not guaranteed to be complete. Where no metadata on an author’s affiliation were available, we carried out a web search. In this way, we reduced the number of documents by about 1 700 titles.
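Because affiliation fields may be incomplete, an automatic filter cannot simply exclude every record without a German affiliation. The following hypothetical sketch separates three outcomes, with missing data routed to manual checking (the web search step described above). The marker strings `germany` and `deutschland` and the per-author list of optional affiliations are assumptions about how such metadata might look, not any database's actual format.

```python
from typing import Optional

# Hypothetical string heuristic for recognising a German affiliation.
GERMAN_MARKERS = ("germany", "deutschland")

def affiliation_status(author_affiliations: list[Optional[str]]) -> str:
    """Return "include", "exclude" or "check_manually" for one record.

    Each list entry is one author's affiliation, or None if the field is empty.
    """
    known = [a for a in author_affiliations if a]
    # At least one author with a recognisably German affiliation.
    if any(m in a.lower() for a in known for m in GERMAN_MARKERS):
        return "include"
    # Missing metadata: cannot decide automatically, so look it up manually.
    if not author_affiliations or len(known) < len(author_affiliations):
        return "check_manually"
    # All affiliations are known and none is German.
    return "exclude"

print(affiliation_status(["DIPF, Frankfurt am Main, Germany", None]))  # include
print(affiliation_status([None, "University of Oslo, Norway"]))        # check_manually
print(affiliation_status(["University of Oslo, Norway"]))              # exclude
```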

A further decision concerned whether a document should be rated as a review, and of which type. A typology of reviews helps to differentiate between review types. However, it is hardly possible to identify characteristics in a document that are exclusive to one review type, as most characteristics are shared by several types. Moreover, many authors do not provide enough information to clearly assign their work to a review type, and neither do databases. Research has shown that journal articles are misclassified in the ISI Web of Science in a way that disproportionately affects the social sciences (Harzing, 2013). Specifically, an article with more than 100 references is coded as a review in the document type field, yet in the social sciences in particular, many papers with more than 100 references are not review articles. This hampers screening bibliographic records by the document type review, as many false reviews can only be unmasked through full-text analysis.
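The effect of such a reference-count rule can be sketched as follows. The function is a simplified rendering of the rule reported by Harzing (2013), not the database provider's actual classification procedure, and the records are invented for illustration.

```python
def reference_count_doc_type(n_references: int) -> str:
    """Simplified rule as reported by Harzing (2013): >100 references -> "review"."""
    return "review" if n_references > 100 else "article"

# Hypothetical records: (title, number of references, actual publication type).
records = [
    ("Large-scale assessment study", 120, "primary study"),
    ("Meta-analysis of feedback effects", 180, "review"),
    ("Historical analysis of school reform", 140, "primary study"),
    ("Short review of teacher education", 60, "review"),
]

labelled_review = [r for r in records if reference_count_doc_type(r[1]) == "review"]
false_positives = [r for r in labelled_review if r[2] != "review"]
missed_reviews = [r for r in records
                  if r[2] == "review" and reference_count_doc_type(r[1]) != "review"]

print(len(labelled_review), len(false_positives), len(missed_reviews))  # 3 2 1
```

Reference-rich primary studies end up labelled as reviews, while a short review is missed, so the document type field alone cannot replace full-text screening.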

An in-depth assessment of the full texts reveals a great variety of documents labelled (systematic) review. Some authors refer to standards and follow the guidelines introduced above closely when writing their systematic review. This makes it easy to recognise the review type and to decide whether to include the publication in our final corpus. Other authors do not call their publication a ‘review’ but use a well-structured outline with clear criteria such as sampling strategy, type of studies, approaches, range of years, limits, inclusion and exclusion criteria, terms used and electronic resources (Booth, 2006, p. 426). This makes such publications candidates for being considered reviews. In contrast, some authors label their review ‘systematic’ without meeting these criteria. It was thus up to us to decide which criteria a document must meet to be included in the final corpus. It proved difficult to distinguish between a ‘systematic’ and a ‘normal’ literature review, as the characteristic elements needed for a clear identification are often missing.

We chose the following exclusion criteria:

- Publication type:
  - not a review (e.g. literature about reviews, literature on database applications, patent analysis, bibliography, exemplary document analysis, scientometric/bibliometric studies, literature on a specific study, summary of a study)
  - primary publication (study, primary level of research)

- Analysis/synthesis: incomplete (only results are presented; no description of the literature search and selection criteria)

- Institution: no author(s) or co-author(s) from German institutions

- Field of research: outside education science/education(al) research (pragmatic decision: the topic should belong to at least one of the categories of the FIS Bildung Literaturdatenbank classification)
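These exclusion criteria can be written down as a checklist function that returns every criterion a candidate fails. This is a hypothetical sketch: the `Candidate` fields are assumptions about what a screener might record, and the judgement behind each field (for instance, whether a document is a review at all) remains a human decision.

```python
from dataclasses import dataclass, field

@dataclass
class Candidate:
    # Hypothetical fields a screener might record during full-text assessment.
    publication_type: str                 # "review", "non-review" or "primary"
    reports_search_and_selection: bool    # literature search and selection described?
    has_german_affiliation: bool          # at least one author from a German institution
    fis_bildung_categories: list[str] = field(default_factory=list)

def exclusion_reasons(c: Candidate) -> list[str]:
    """Return all exclusion criteria the candidate fails (empty list = include)."""
    reasons = []
    if c.publication_type != "review":
        reasons.append("publication type")
    if not c.reports_search_and_selection:
        reasons.append("analysis/synthesis incomplete")
    if not c.has_german_affiliation:
        reasons.append("institution")
    if not c.fis_bildung_categories:
        reasons.append("field of research")
    return reasons

doc = Candidate("review", reports_search_and_selection=False,
                has_german_affiliation=True,
                fis_bildung_categories=["example-category"])  # hypothetical category
print(exclusion_reasons(doc))  # ['analysis/synthesis incomplete']
```

Collecting all failed criteria, rather than stopping at the first, keeps the screening record complete; a single reason per document for a PRISMA diagram can then be taken in list order.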

Another aspect to be discussed during the screening process concerns the reporting quality and publication status of documents. In the social sciences, we frequently come across working papers or discussion papers, which play an essential role in this scientific culture as they open a discussion by presenting new results. If we find a final paper that builds on these first results without any changes to the study design, the final version is judged relevant, and the preliminary working or discussion paper can then be excluded. If the authors made changes, both papers are kept as relevant. Discussion papers are also kept when no final results paper has been published.
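The rule for handling preliminary and final versions can be summarised as a small decision function. This is a minimal sketch under one stated assumption: where the text says the preliminary paper "can then be excluded", the sketch treats it as excluded. The function name and version labels are hypothetical.

```python
def versions_to_keep(final_paper_published: bool,
                     study_design_changed: bool = False) -> set[str]:
    """Decide which versions of a study stay in the corpus.

    Assumption: an unchanged final paper always supersedes the preliminary one.
    """
    if not final_paper_published:
        # No final results paper: the working/discussion paper is kept.
        return {"preliminary paper"}
    if study_design_changed:
        # The final paper changed the study design: both versions are relevant.
        return {"preliminary paper", "final paper"}
    # Final paper with unchanged design supersedes the preliminary version.
    return {"final paper"}

for published, changed in [(False, False), (True, False), (True, True)]:
    print(published, changed, sorted(versions_to_keep(published, changed)))
```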

For our qualitative document analysis, we plan to define topic categories for coding specific document passages. For example, one main category covers the decision-making process when selecting documents (criteria applied, reasons). Another main category will address the notion of relevance expressed by the reviewer(s): how are surrogates assessed, and which criteria must an item meet so as not to be discarded at an early stage?

In addition, the position of the screening process(es) within the review procedure could be of interest, e.g. is screening done only once after the literature search, or in iterative cycles of searching and screening? One point of interest could be to analyse at what stage of the review process inclusion and/or exclusion criteria were defined and how rigorously they were applied. Identifying the details that the author(s) considered worth reporting in a review article should provide a first, albeit partial, insight into reviewing practices in educational research in Germany and thus help to prepare the design of the subsequent field research activities (interviews, group discussions, observations). We intend to contact review authors from this sample (corpus) for the qualitative user study.

Conclusion

Systematic reviews, as a research method for synthesising evidence-based results, are becoming increasingly common in educational research. Guidelines exist for conducting reviews and improving their quality, but they do not fully capture the complexity and diversity of human information behaviour. This paper discussed the need to investigate the information behaviour of actors within the systematic review process. The authors reported on and discussed the process of information seeking and screening while conducting their own systematic review, which forms the basis for further document analysis of information behaviour during the systematic review process. A first assessment of the documents shows a great variety of review types with different labels such as meta-analysis, literature review, systematic review and literature analysis. Methodological processes seem to differ considerably or are not described in the research papers at all. This suggests that, although standard guidelines for conducting a systematic review in education exist, researchers apply diverse methods and use non-standard terms to describe their research. A reason for this inconsistency might be that information seeking and relevance assessment during a systematic review are much more complex than these guidelines reflect, and that researchers might not be able to draw on their concrete decisions within the larger review process. Our future research will investigate the information behaviour of actors in a review process to gain deeper insights into the mechanisms of synthesising research results for evidence-based decision-making.

Acknowledgements

We thank Carolin Keller (DIPF | Leibniz Institute for Research and Information in Education) for carrying out a large number of literature searches.

About the authors

Ingeborg Jäger-Dengler-Harles holds an MLIS degree and works on a research synthesis project at DIPF | Leibniz Institute for Research and Information in Education, Germany. Her research focuses on information retrieval, information behaviour and systematic review processes. She can be contacted at: i.jaeger@dipf.de.

Tamara Heck is a postdoctoral researcher at DIPF | Leibniz Institute for Research and Information in Education, Germany. Her research interests include information behaviour and literacy, open science practices and open digital infrastructures. She can be contacted at: heck@dipf.de.

Marc Rittberger is Director at the Information Center for Education at DIPF | Leibniz Institute for Research and Information in Education, Germany, and Professor at Darmstadt University of Applied Sciences, Germany. His research interests include information behaviour, informetrics, open science, and research infrastructures. He can be contacted at: rittberger@dipf.de.

References

- Andrews, R. (2005). The place of systematic reviews in education research. British Journal of Educational Studies, 53(4), 399-416. http://dx.doi.org/10.1111/j.1467-8527.2005.00303.x

- Booth, A. (2006). "Brimful of STARLITE": toward standards for reporting literature searches. Journal of the Medical Library Association, 94(4), 421-429. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1629442/

- Brunton, G., Stansfield, C., Caird, J. & Thomas, J. (2017a). Finding relevant studies. In D. Gough, S. Oliver & J. Thomas (Eds.), An introduction to systematic reviews (2nd ed., pp. 93-122). Sage Publications.

- Brunton, J., Graziosi, S. & Thomas, J. (2017b). Tools and technologies for information management. In D. Gough, S. Oliver & J. Thomas (Eds.), An introduction to systematic reviews (2nd ed., pp. 145-180). Sage Publications.

- Case, D.O. (2012). Looking for information: a survey of research on information seeking, needs and behavior ( ed.). Emerald Group Publishing.

- CILIP Information Literacy Group. (2018). Definition of information literacy. Archived by the Internet Archive at https://web.archive.org/web/20200801120300/https://infolit.org.uk/ILdefinitionCILIP2018.pdf

- Cleyle, S. & Booth, A. (2006). Clear and present questions: formulating questions for evidence based practice. Library Hi Tech, 24(3), 355-368. https://doi.org/10.1108/07378830610692127

- Cochrane. (2019). Cochrane Handbook for Systematic Reviews of Interventions. Archived by the Internet Archive at https://web.archive.org/web/20200806202112/https://training.cochrane.org/handbook/current

- Eldredge, J.D. (2000). Evidence-based librarianship: searching for the needed EBL evidence. Medical Reference Services Quarterly, 19(3), 1-18. http://dx.doi.org/10.1300/J115v19n03_01

- Fisher, K.E., Erdelez, S. & McKechnie, L. (Eds.). (2005). Theories of information behavior. ASIST, Information Today Inc.

- Fleming, P.S., Seehra, J., Polychronopoulou, A., Fedorowicz, Z. & Pandis, N. (2013). Cochrane and non-Cochrane systematic reviews in leading orthodontic journals: a quality paradigm? European Journal of Orthodontics, 35(2), 244-248. https://doi.org/10.1093/ejo/cjs016

- Ford, N. (2015). Introduction to information behaviour. Facet Publishing.

- Foster, A.E. (2005). A non-linear model of information seeking behaviour. Information Research, 10(2), paper 222. Archived by the Internet Archive at https://web.archive.org/web/20190810140444/http://www.informationr.net/ir//10-2/paper222.html

- Gough, D., Oliver, S. & Thomas, J. (Eds.). (2017). An introduction to systematic reviews (2nd ed.). Sage Publications.

- Grant, M.J. & Booth, A. (2009). A typology of reviews: an analysis of 14 review types and associated methodologies. Health Information and Libraries Journal, 26, 91-108. https://doi.org/10.1111/j.1471-1842.2009.00848.x

- Harzing, A-W. (2013). Document categories in the ISI Web of Knowledge: misunderstanding the social sciences? Scientometrics, 94(1), 23-34. https://doi.org/10.1007/s11192-012-0738-1

- Kugley, S., Wade, A., Thomas, J., Mahood, Q., Klint Jørgensen, A-M., Hammerstrøm, K. & Sathe, N. (2016). Searching for studies: a guide to information retrieval for Campbell systematic reviews. This guide is based on chapter 6 of The Cochrane Handbook (Lefebvre C, 2011 and Higgins JPT, 2011); last updated: 30 October 2015. The Campbell Collaboration. https://doi.org/10.4073/cmg.2016.1

- Kuhlthau, C.C. (1991). Inside the search process: information seeking from the user's perspective. Journal of the American Society for Information Science, 42(5), 361-371. http://dx.doi.org/10.1002/(SICI)1097-4571(199106)42:5%3C361::AID-ASI6%3E3.0.CO;2-%23

- Lewin, S., Glenton, C., Munthe-Kaas, H., Carlsen, B., Colvin, C.J., Gülmezoglu, M., Noyes, J., Booth, A., Garside, R. & Rashidian, A. (2015). Using qualitative evidence in decision making for health and social interventions: an approach to assess confidence in findings from qualitative evidence syntheses (GRADE-CERQual). PLoS Medicine, 12(10), e1001895. https://doi.org/10.1371/journal.pmed.1001895

- McGowan, J., Sampson, M., Salzwedel, D.M., Cogo, E., Foerster, V. & Lefebvre, C. (2016). PRESS Peer Review of electronic search strategies: 2015 guideline statement. Journal of Clinical Epidemiology, 75, 40-46. https://doi.org/10.1016/j.jclinepi.2016.01.021

- Merz, A-K. (2016). Information behaviour bei der Erstellung systematischer Reviews: Informationsverhalten von Information professionals bei der Durchführung systematischer Übersichtsarbeiten im Kontext der evidenzbasierten Medizin. (University of Regensburg Ph.D. dissertation). Archived by the Internet Archive at https://web.archive.org/web/20200703003258/https://epub.uni-regensburg.de/36331/1/2017_11_13_akm_final.pdf

- Mizzaro, S. (1997). Relevance - the whole history. Journal of the American Society for Information Science, 48(9), 810-832. http://dx.doi.org/10.1002/(SICI)1097-4571(199709)48:9%3C810::AID-ASI6%3E3.0.CO;2-U

- Moher, D., Liberati, A., Tetzlaff, J. & Altman, D.G. (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Journal of Clinical Epidemiology, 62(10), 1006-1012. http://dx.doi.org/10.1016/j.jclinepi.2009.06.005

- Pettigrew, K.E., Fidel, R. & Bruce, H. (2001). Conceptual frameworks in information behavior. Annual Review of Information Science and Technology, 35(1), 43-78. Archived by the Internet Archive at https://web.archive.org/web/20200807073901/http://faculty.washington.edu/fidelr/RayaPubs/ConceptualFrameworks.pdf

- Saracevic, T. (2007a). Relevance: a review of the literature and a framework for thinking on the notion in information science. Part II: nature and manifestations of relevance. Journal of the American Society for Information Science and Technology, 58(13), 1915-1933. https://doi.org/10.1002/asi.20682

- Saracevic, T. (2007b). Relevance: a review of the literature and a framework for thinking on the notion in information science. Part III: behavior and effects of relevance. Journal of the American Society for Information Science and Technology, 58(13), 2126-2144. https://doi.org/10.1002/asi.20681

- Saracevic, T. (2016). The notion of relevance in information science. Everybody knows what relevance is. But, what is it really? Synthesis Lectures on Information Concepts, Retrieval, and Services, 8(3), 109. https://doi.org/10.2200/S00723ED1V01Y201607ICR050

- Savolainen, R. (2015). Cognitive barriers to information seeking. A conceptual analysis. Journal of Information Science, 41(5), 613-623. https://doi.org/10.1177/0165551515587850

- Shea, B.J., Hamel, C., Wells, G.A., Bouter, L.M., Kristjansson, E., Grimshaw, J., Henry, D.A. & Boers, M. (2009). AMSTAR is a reliable and valid measurement tool to assess the methodological quality of systematic reviews. Journal of Clinical Epidemiology, 62(10), 1013-1020. http://dx.doi.org/10.1016/j.jclinepi.2008.10.009

- Tripney, J. (2016). Introduction to systematic reviews: evidence 2016, Pretoria, South Africa, 20-22 September 2016. London. Archived by the Internet Archive at https://archive.org/details/tripney-2016-introduction-to-systematic-reviews

- Whiting, P., Savović, J., Higgins, J.P.T., Caldwell, D.M., Reeves, B.C., Shea, B., Davies, P., Kleijnen, J. & Churchill, R. (2016). ROBIS: a new tool to assess risk of bias in systematic reviews was developed. Journal of Clinical Epidemiology, 69, 225-234. https://doi.org/10.1016/j.jclinepi.2015.06.005

- Wilson, T.D. (2000). Human information behavior. Informing science: The International Journal of an Emerging Transdiscipline, 3, 49-56. http://dx.doi.org/10.28945/576

- Zawacki-Richter, O., Kerres, M., Bedenlier, S., Bond, M. & Buntins, K. (Eds.). (2020). Systematic reviews in educational research: methodology, perspectives and application. Springer. https://doi.org/10.1007/978-3-658-27602-7