Proceedings of the Eighth International Conference on Conceptions of Library and Information Science, Copenhagen, Denmark, 19-22 August, 2013

Short papers

Core journals in library and information science: measuring the level of specialization over time

Jeppe Nicolaisen

Royal School of Library and Information Science, Birketinget 6, DK-2300 Copenhagen S, Denmark

Tove Faber Frandsen

University of Southern Denmark, Campusvej 55, DK-5230 Odense M, Denmark

Introduction

A basic idea underlying many science studies is that members of a specialty communicate more with each other than with members of other specialties. The explanation is thought to be simple. Members of a certain specialty share a common interest in a certain phenomenon and therefore have something to communicate about. During the 1970s, this simple idea convinced a number of information scientists that it would be possible to map the specialties of any scientific discipline by studying the patterns of communication between its members. What was needed was some clever method for clustering such communication patterns. The co-citation technique of Marshakova (1973) and Small (1973) was found to provide the required method. By measuring the strength of co-citation in a large enough sample of units (e.g. documents or authors), it was found to be possible to detect clusters of units that were highly co-cited. The information scientists who became interested in this technique during the 1970s hypothesized that such clusters would represent scientific specialties.

Small and Griffith (1974) were the first to test the hypothesis. The source of data for their study was the magnetic tape version of the Science Citation Index for the first quarter of 1972. By clustering documents that were co-cited beyond a certain threshold, the authors were able to form a number of individual clusters. A linguistic analysis of word usage in the titles of the citing papers revealed that the clusters were linguistically consistent. This was taken as evidence that the clusters, in fact, corresponded to scientific specialties. Since Small and Griffith’s (1974) pioneering study, many others have used documents as the unit of analysis and co-citations of pairs of documents as the variable that enables the clustering of cited documents. Some of these studies have made use of a statistical technique known as multidimensional scaling. This technique enables the construction of two-dimensional maps, which illustrate the clusters of co-cited documents. Such maps are commonly held to reflect the relationships between documents at a given level: that of science as a whole, or of particular disciplines, specialties, or sub-specialties.

A related method for clustering related documents is that of bibliographic coupling. Documents are said to be bibliographically coupled if they share one or more bibliographic references. The concept of bibliographic coupling was introduced by Kessler (1963) who demonstrated the existence of the phenomenon and argued for its usefulness as an indicator of subject relatedness. However, as noted by Glänzel and Czerwon (1996) and De Bellis (2009) the technique lived a relatively quiet life until the 1990s when bibliometricians began to employ it for identifying and mapping clusters of subject-related documents (e.g., Glänzel and Czerwon, 1996; Jarneving, 2007; Ahlgren and Jarneving, 2008). As shown by Nicolaisen and Frandsen (2012), bibliographic coupling has another promising potential as a measure of the level of consensus and specialization in science. Using a modified form of bibliographic coupling (aggregated bibliographic coupling), they were able to measure the level of consensus in two different disciplines at a given time.

However, specialization is a process. The level of specialization within a discipline probably increases or decreases over time. To measure this by bibliometric methods such as co-citation analysis and bibliographic coupling, we need to include a time dimension. In this paper we present an attempt to do so. Using the scientific journal as our sample unit, we measure the level of specialization by calculating the overlap in bibliographic references year by year. To give an example: a journal produces 1,100 references in year 0 and 1,000 references in year 1. Of these, 1,900 are unique, and 200 are found in the reference lists of the journal in both year 0 and year 1. Thus, 200 out of 1,000 references in year 1 were identical to references found in the same journal in year 0. This equals 20 percent, and is taken as an indicator of the level of specialization in that journal in year 1. The level of specialization in year 2 is calculated by comparing the overlap in bibliographic references used by the journal in year 1 and year 2, and so on. To test the method, we have chosen to apply it to a selection of information science core journals and measure the specialization from 1990 onwards.

The next section outlines the method further. After the results section, we will discuss the potentials of this new method for measuring the specialization process in science.

Method

A selection of journals representative of the field is needed for the study. Nixon (2013) provides an overview of various models for determining the core journals of the field and finds great overlap between lists computed using different methods. In this case, core library and information science journals were identified using the list of twelve journals by White and McCain (1998). The list is divided into two sections, which enables interpretation of the data in terms of subfields. The information science journals may differ in the level of specialization from the library automation journals.

Proceedings of the American Society for Information Science (the proceedings of the ASIS Annual Meeting) were excluded from the study because of the lack of consistent indexing for the relevant years. Furthermore, it should be noted that the Annual Review of Information Science and Technology lacks data for 2000 as well as 2012, and Electronic Library lacks data for 2012. This is due to discontinuation of journal titles as well as the indexing policy of the citation indexes. The selected journals are shown in Table 1.

| Information Science | Library Automation |
|---|---|
| Annual Review of Information Science and Technology | Electronic Library |
| Information Processing & Management (and Information Storage & Retrieval) | Information Technology and Libraries (and Journal of Library Automation) |
| Journal of the American Society for Information Science (and Journal of the American Society for Information Science and Technology) | Library Resources & Technical Services |
| Journal of Documentation | Program: Automated Library and Information Systems |
| Journal of Information Science | |
| Library & Information Science Research (and Library Research) | |
| Scientometrics | |

The references in a specific year of each of the included journals were compared to the references of the previous year. To determine the share of re-citations in, for example, Journal of Documentation in 2005, the references were compared to the references in the same journal in 2004. A re-citation is defined as a 100 percent match between a cited reference in one year and a cited reference in the previous year. Consequently, spelling errors, typing errors, variations of spelling and the like should be considered a possible source of bias. However, as these irregularities are expected to be evenly distributed across the data set, bias is unlikely. The data registered are the name of the journal, the publication year, the cited references in the journal and the number of instances of every reference. Some of the references appear more than once, and consequently the number of re-citations depends on the total number of instances and not just the number of unique references. The share of re-citations in journal j in year y is calculated as follows:

$$\text{Share of re-citations}_{j,y} = \frac{\text{number of re-citations}_{j,y}}{\text{total number of references}_{j,y}}$$

Journal of Documentation can serve as an example. In 2011 the journal contained 2106 references, of which 190 were re-citations, resulting in a share of re-citations of 190 / 2106 ≈ 0.090.
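The calculation can be illustrated with a minimal Python sketch. It is not the procedure used to produce the data in this study. The function name `recitation_share` and the toy reference strings are hypothetical, and the sketch assumes that every instance of a reference in year y counts as a re-citation when an identical string occurs at least once in year y-1, with the total number of references in year y as the denominator, as in the Journal of Documentation example.

```python
from collections import Counter


def recitation_share(refs_current, refs_previous):
    """Share of reference instances in the current year that exactly match
    a reference string cited in the previous year (assumed interpretation)."""
    if not refs_current:
        return 0.0
    previous = set(refs_previous)          # exact (100 percent) string matching
    counts = Counter(refs_current)         # instances per unique reference
    recitations = sum(n for ref, n in counts.items() if ref in previous)
    return recitations / len(refs_current)


# Hypothetical toy data: reference "A" is cited twice in the current year
# and once in the previous year, so two of four instances are re-citations.
refs_2004 = ["A", "B", "C"]
refs_2005 = ["A", "A", "D", "E"]
print(recitation_share(refs_2005, refs_2004))  # 0.5
```

Because matching is exact, any spelling variant of a cited reference would count as a different reference in this sketch, which mirrors the source of bias noted above.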

This study analysed 364,747 references in 11 journals from 1991 to 2012 and calculated the re-citation share for each journal and year. Only articles, notes, reviews and letters were included.

Results

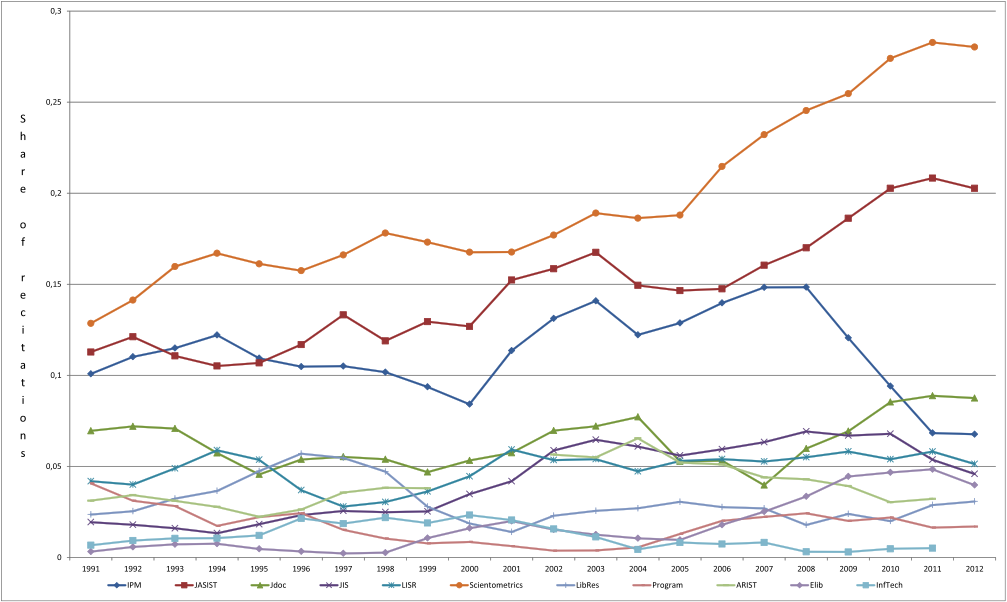

Library and information science journals are specialized in varying degrees. The share of re-citations varies from 0 to more than 25 per cent, i.e. in some cases more than 25 per cent of the references in a given year appeared in that specific journal the previous year. Figure 1 illustrates the development in levels of specialization from 1991 to 2012. The shares are shown using moving averages as a means to provide a clearer picture of the development over time for each journal. Each moving average is the sum of the values of three years divided by three. For the first and the last year, the moving average is the sum of the observations from two years divided by two.
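The smoothing step can be sketched as follows. This is a minimal illustration, not the code behind Figure 1. The function name `smoothed_shares` and the input values are hypothetical, and the sketch assumes a centred window (previous, current and next year), which is one reading of the description above.

```python
def smoothed_shares(shares):
    """Three-year centred moving average over yearly re-citation shares.

    The first and last year have only one neighbour, so they are averaged
    over two years instead of three.
    """
    smoothed = []
    for i in range(len(shares)):
        # The slice is clipped at both ends of the series.
        window = shares[max(0, i - 1): i + 2]
        smoothed.append(sum(window) / len(window))
    return smoothed


# Hypothetical yearly shares for four consecutive years.
yearly = [0.10, 0.20, 0.30, 0.20]
print([round(value, 3) for value in smoothed_shares(yearly)])
```

Years with missing data, such as those noted for two of the journals, would need separate handling that this sketch does not attempt.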

Two journals stand out in this figure as they are characterized by a greater extent of specialization throughout the entire period. Journal of the American Society for Information Science (and Technology) and Scientometrics in particular appear to be highly specialized. Information Processing and Management is at a slightly lower level of specialization than the two previously mentioned journals in the first fifteen years. However, their shares of re-citations drop remarkably during the last five years.

The division of journals by White and McCain into a group of information science journals and a group of library automation journals can be used to analyse the data. The four library automation journals are all placed in the lower part of the scale in terms of re-citations. Consequently, the library automation journals cannot be characterized by a high degree of specialization, whereas the information science journals in general, and some of them in particular, are much more specialized.

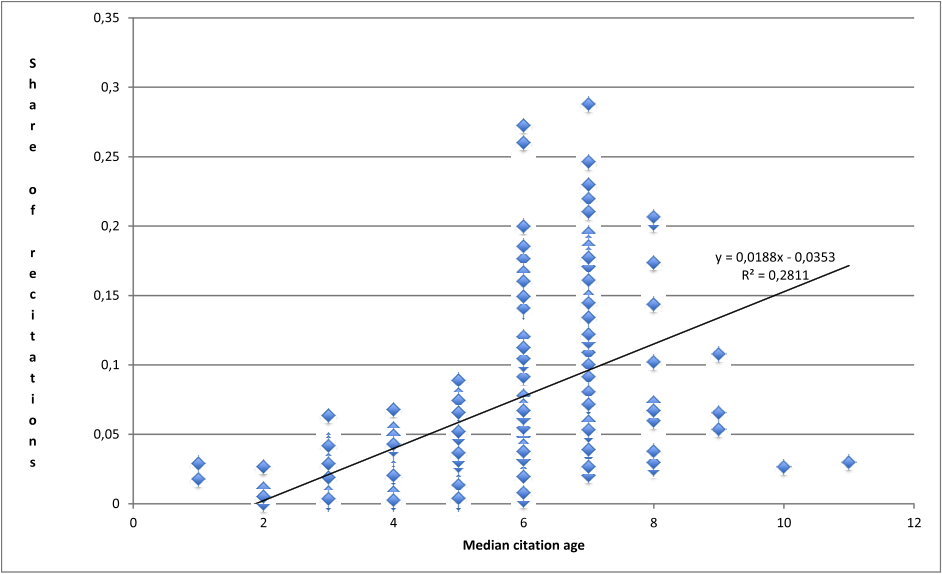

Before any conclusions can be drawn on the basis of this study, we need to look at other obvious explanations for the variation in the data. The levels of re-citations and their development over two decades can be explained by specialization but also to some extent by obsolescence. Most papers die within a ten-year period (de Solla Price, 1965), and thus we would expect to see a pattern in the age distribution of references. However, the age distribution varies considerably across fields (see e.g. Glänzel and Schoepflin, 1999). The widespread use of open-access and e-print servers such as arXiv allows authors to cite more recent literature, as the technology affords easy access to research not even published yet (Lariviere et al., 2008). The age distribution varies not only across fields but also across subfields (e.g. Huang, et al., 2012). As a means to test whether obsolescence explains the variation, we examine the age distribution of the references in the journals. The half-life or median citation age, as Line (1970, 1993) argues, is calculated. A discrete analysis method is applied: publication years are treated as discrete units, not as a continuum of dates. To determine the median citation age for a journal, the publication dates of its references are first listed in reverse chronological order.

We can use a simplified case with the reference dates of a journal published in 2011 as an example: 2011, 2010, 2009, 2009, 2008, 2008, 2007, 2007, 2007, 2006, 2006, 2006, 2006, 2000, 2000, 2000, 1995, 1994, 1993, 1993, 1990, 1989, 1988, 1982, 1981, 1979, 1979, 1977, 1977, 1976, 1976, 1970.

The median year is 1997.5 (the average of the 16th and 17th of the 32 values). Subtracting 1997.5 from the year of publication yields a median citation age of 13.5 years (2011-1997.5).
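The worked example can be reproduced with a short Python sketch. The function name `median_citation_age` is hypothetical and the code is only an illustration of the discrete median calculation described above, using the 32 reference years from the simplified case.

```python
from statistics import median


def median_citation_age(publication_year, reference_years):
    """Publication year minus the median publication year of the references.

    Years are treated as discrete units. With an even number of references,
    the median is the average of the two middle values.
    """
    return publication_year - median(reference_years)


reference_years = [
    2011, 2010, 2009, 2009, 2008, 2008, 2007, 2007, 2007, 2006, 2006,
    2006, 2006, 2000, 2000, 2000, 1995, 1994, 1993, 1993, 1990, 1989,
    1988, 1982, 1981, 1979, 1979, 1977, 1977, 1976, 1976, 1970,
]
print(median_citation_age(2011, reference_years))  # 13.5
```

The two middle values are 2000 and 1995, so the median year is 1997.5 and the median citation age is 13.5 years, in agreement with the calculation above.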

Figure 2 presents an overview of the median citation age and the level of specialization.

As we might expect, the correlation is positive, i.e. journals including a relatively large share of older references are characterized by a greater level of specialization, all other things being equal. Journals with relatively recent references have fewer re-citations simply because there are more references in those journals that could not have been cited the year before. However, as the r-squared value suggests, this can only partially explain the differences in levels of re-citation. Degree of specialization may offer a potential framework for understanding the differences.

Discussion and initial conclusions

We have developed and presented a new bibliometric method for measuring the process of specialization. But does it work? Is it really measuring what it is intended to measure, i.e. specialization?

Being most familiar with the information science journals in our sample, we initially expected to find a clear divide in degree of specialization between the general information science journals (Annual Review of Information Science and Technology; Journal of the American Society for Information Science (and Technology); Journal of Documentation; Journal of Information Science; Library & Information Science Research (and Library Research)) and the specialized ones. The general information science journals seek to cover the discipline as a whole, whereas journals like Scientometrics and Information Processing & Management focus on the research and development in two subfields. Thus, we expected to find high degrees of specialization in these two journals over time and lower degrees of specialization in the rest. With the exception of JASIS&T, our expectations came true. Being a general information science journal, JASIS&T fits poorly with the idea that general journals re-cite much less than specialized journals. Does that mean that the way we operationalize and measure the concept of specialization is flawed, or could it be that JASIS&T is wrongly categorized as a general journal? We believe that the high degree of re-citations in JASIS&T is caused in part by the relatively high number of articles on bibliometric topics it publishes. It could be that JASIS&T to some extent acts as host for a highly specialized sub-field. In a study of references and citations between the same sample of journals as we use, Schneider (2009: 450) found that next to self-citations from Scientometrics to Scientometrics, JASIS&T 'is the only other large contributor of references to Scientometrics'. This lends some support to the bibliometric host idea, but to investigate this further, we plan to do a follow-up study in which we compare re-citations between Scientometrics and the rest of the journals in our sample. If JASIS&T proves to have a much higher degree of re-citations with Scientometrics compared to the other journals in our sample, this could at least partly explain its high score in the present study.

Acknowledgements

The authors gratefully acknowledge the competent assistance of Mathies Glasdam with the identification of more than 300,000 potential re-citations.