What can you perceive? Understanding user’s information quality judgment on academic social networking sites

Ning Zhang, Qinjian Yuan, Xin Xiang, and Kuanchin Chen

Introduction. Considering the overwhelming amount of scientific information available on academic social networking sites, the purpose of this paper is to explore how users perceive and judge information quality.

Method. Drawing upon the dual-process model, we theorised that perception depends on both content cues and context-related cues.

Analysis. We conducted two controlled experiments to verify our hypotheses.

Results. Our findings indicated that (1) higher levels of information quality were perceived with high content value than with low content value, and there was an interaction effect between content value and question type (Experiment 1); and (2) three kinds of context-related cues (authority cues, peer cues, and recommendation cues) had significant main effects on perceived information quality, and there was an interaction effect among these three cues (Experiment 2).

Conclusions. Unlike previous research, which has mainly been confined to examining the effects of external cues, this study addresses both central and peripheral cues based on a dual-process model. Our findings can not only deepen understanding of how users perceive and judge information quality on academic social networking sites, but also inform platform developers about the design of interfaces and information systems.

DOI: https://doi.org/10.47989/irpaper896

Introduction

Academic social networking sites have become a common venue for disseminating and accessing academic information (Yan and Zhang, 2018; Kadriu, 2013). The richness of information available on these sites benefits scholars by providing important references for the solution of scientific problems. However, the user-generated character of such online content may increase the risk of encountering poor-quality information. Moreover, judging online information quality is becoming more challenging for users as the volume of data on these sites continues to grow (Lin et al., 2016).

Information quality issues on academic social networking sites have already received considerable attention over the last decade (Ghasemaghaei et al., 2016). Castillo et al. (2011) implicated many salient features of Web 2.0, such as the ease of information dissemination and the lack of professional gatekeepers, as contributing to such issues. A major outstanding question, however, is how users of academic social networking sites perceive and judge information quality.

Faced with abundant information of uncertain quality, users of academic social networking sites are inclined to seek useful signals to guide their information quality judgments. These signals are often referred to as cues, a term succinctly defined by Choo (2009, p. 1079) as any perceived features used in forming an interpretation. A recent survey (Horrigan, 2016) showed that more than one third of Americans believe libraries can help people decide what information they can trust. Whether they come directly from content providers or from third parties, such cues have particular value for information quality perception, traditionally serving as a means of reducing the uncertainty involved in forming a judgment.

Typical sources of these cues include personal knowledge, indirect information (such as reputation) and traditional information intermediaries (e.g., opinion leaders, experts and information intermediaries), all of which contribute to an eventual information quality decision (Metzger and Flanagin, 2013). However, with the proliferation of online information and the lack of traditional intermediaries in this area, individuals must judge a great mass of information on their own (Eysenbach, 2008). For example, in the context of online shopping, consumers must rely on information cues to evaluate the quality of goods because no salesperson is there to assist them. A series of studies has reported how people used indirect cues of credibility, such as sex, name type and image, to estimate source credibility (Morris, 2012; Westerman, 2012; Yang et al., 2013). Collaborative filtering technologies, such as user ratings, feedback (e.g., comments) and tags, are ubiquitous in online communities and platforms, where they partly fill the role vacated by traditional intermediaries.

Although many studies have aimed to predict and explain users' perceptions of information quality, some fine-grained cognitive questions still need to be explored. A few studies have attempted to examine social cues related to sources, such as source authority; however, to our knowledge, no research has yet examined the combined effects of all of these information cues, and it remains unclear whether earlier findings on information quality evaluation can be generalised to the context of academic social networking sites.

As a matter of fact, the process of information quality perception involves not only cognitive effort but, in most cases, also heuristics, which calls for a comprehensive investigation. According to the dual-process model (Chaiken, 1980), or more specifically the heuristic-systematic model, individuals use one of two information processing methods (heuristic or systematic) when making specific decisions. Wirth et al. (2007) argued that in complex decision situations, people deal with all available information (which may be quite extensive) through a combination of systematic and heuristic methods. Building on these concepts, Sundar (2008) characterised online credibility judgment cues as diverse, complex and interactive, further underscoring the need for research to assess the relative and collective importance of these cues.

Given the complexity of the Web environment, we concentrated on academic social networking sites in particular, and developed hypotheses concerning the effect of content value (content cues), information source (authority cues) and information propagation (peer cues, recommendation cues) on users’ perception of information quality. After reviewing the existing literature describing these cues, we tested our hypotheses using two controlled experiments, which helped to reveal the pattern of causality driving the perception process. Together, these experiments addressed content cues and context-related cues that might impact information quality perception.

Literature review and development of hypotheses

Heuristic method

At present, existing studies on the heuristic method can be divided into two perspectives: information processing and cognitive science. Studies from the viewpoint of information processing theory include, for instance, the limited capacity model, which asserts that limitations of cognitive ability prevent people from processing all available information (Lang, 2000), so that only information with certain salient characteristics is selected for encoding, storage and retrieval. Prominence-interpretation theory (Fogg, 2003) makes similar predictions: not all elements presented on the Web are noticed, and only those elements that are noticed and perceived are used to evaluate information credibility. Drawing on information processing theory, behavioural economists have observed how consumers make decisions based on simple heuristic information; examples include the well-known anchoring effect (Mussweiler and Strack, 2000) and the various heuristics employed to cope with incomplete or missing information (Ross and Creyer, 1992). Fogg et al. (2003) suggested that individuals' processing of Web information is very superficial and often relies on peripheral cues. Likewise, Wathen and Burkell (2002) argued that users' initial evaluation of online information is based on superficial features, such as the appearance or arrangement of the Website. Savolainen (2009) contended that people cannot make any decision at all without interpreting information cues.

From the perspective of cognitive science, researchers have long acknowledged humans' limited information processing ability: Simon (1955, p.112) coined the term "bounded rationality" to refer to the various cognitive and informational limits on the heuristic decision process. His theory, with its various successors and refinements, holds that people employ heuristics instead of fully rational optimization to make decisions in view of the cognitive constraints they face (Fu and Sim, 2011). Following Wilson's (1983) definition, cognitive authority refers to influence that a user would recognise as proper because the information therein is thought to be credible and worthy of belief. Furthermore, many scholars have confirmed that cognitive heuristics are the most common means of dealing with information overload and information uncertainty (Pirolli and Card, 1999; Sundar, 2008; Wirth et al., 2007).

In conclusion, theories of information processing and cognitive science show that people will consider the cost of information processing when dealing with online information, and that they reckon with this cost by employing a set of cognitive heuristics, which are useful psychological shortcuts and guidelines that can reduce cognitive load.

Content cues

From the viewpoint of information users, information quality is fitness for use: ultimately, it is the users who judge whether or not an information product is fit for use. Although previous studies have interpreted this notion in various contexts, individuals on academic social networking sites, faced with abundant information of uncertain quality, have difficulty finding the right criteria. They are therefore inclined to seek useful signals to guide their information quality judgments, and content value is one of the signals they may rely on.

Content value is the core of content quality. Chua and Banerjee (2013) explain the concept in terms of three aspects: rationality, stability and reliability. Rationality means that the content is internally consistent (John et al., 2011); stability refers to the content remaining constant over time; and reliability describes the timeliness and security of the content (Kahn et al., 2002). Rudat et al. (2014) focused on Twitter retweeting behaviour, and found that people tended to retweet messages with high content value more often than those with low content value. Our study moves to the context of academic social networking sites to determine whether and how content cues in this setting affect perceived information quality.

According to the dual-process model, individuals use the central information processing path to judge information quality when they focus on content; otherwise, they use the peripheral path (Toncar and Munch, 2001). Researchers with a certain level of knowledge and academic background may pay more attention to the value of an academic site's content, so as to reduce information uncertainty. Rieh (2001) pointed out that judgments of information quality mainly draw on features of the information itself, such as its content, visual presentation or structure.

Moreover, diverse content types have been shown to have different effects on content value. Take the question and answer section of academic social networking sites as an example: although its content is user-generated in a freeform fashion, the questions still belong to distinct and identifiable categories (Harper et al., 2010). Lin and Katz (2006, p.856) divided questions into five such types: 'factoid, list, definition, complex, and target questions'. Previous studies have shown that a question's type affects the effort respondents put forth, with a concomitant impact on answer quality; in particular, questions seeking personal advice are more likely to attract more professional answers (Harper et al., 2008).

In this paper, we focus on features of the academic question and answer function, drawing on the classification of question types proposed by Fahy et al. (2001) and concentrating on two question types that proved well suited to our study: resource acquisition questions and discussion acquisition questions. Within these, we wished to explore the main effect of content value as well as its interaction with question type.

Our hypotheses were as follows:

H1: Higher levels of information quality will be perceived with high content value than with low content value.

H2: There is an interaction effect between content value and question type.

Apart from content cues, we planned to further investigate whether context-related cues might have some influence. Hence, in the following, we will discuss three types of context-related cues that are apt to influence information quality perception.

Source authority cues

A general premise for information quality judgment is that people are apt to rely on context-related cues and heuristics (Walther and Jang, 2012). One kind of situational cue that has been shown to be important for users' information quality perceptions is the authority cue, which can be provided by official or professional evaluation. Research in social psychology has shown that the information source influences impressions of online credibility, a role already considered important in early research on persuasion (Fogg and Tseng, 1999). For example, people were found to trust the information provided by university or government agency Websites more than that found on other organizations' sites (Briggs et al., 2002). Moreover, in e-commerce, professional information sources can produce more positive attitudes than non-professional sources (Sundar et al., 2009).

This rapid assessment of information quality, motivated by source authority cues, is called the 'authority heuristic' and can directly influence individual judgment through heuristic processing (Reimer et al., 2004, p.72). Morris et al. (2012) found that official verification of a Twitter account is considered the most important credibility cue in that setting. However, in another study on online information credibility assessment, Eysenbach and Kohler (2002) showed that no participants paid attention to source credibility. This contradiction stimulated our research interest: in the context of academic social networking sites, what is the impact of authority cues on users' perception of information quality? Will users depend on content value or on authority cues when making decisions? We proposed that:

H3: Higher levels of information quality will be perceived from a high authority cue than from a low authority cue.

H4: There is an interaction effect between content value and source authority.

Peer cues

Another important type of context-related cue is the peer cue, which is derived from users' positive social attributes (Metzger et al., 2010). Hilligoss and Rieh (2008) found that information sources can be divided into familiar and unfamiliar, with users perceiving information from familiar sources as more credible. Peers can be considered proximal sources, and such proximity has been shown to have a positive impact on content quality assessments: individuals are more likely to evaluate content credibility based on proximal sources than on distal sources (Lee and Sundar, 2013). For example, a tweet from a peer would be given a higher quality appraisal than one from a stranger (Lin et al., 2016). Because individuals seek to spare cognitive effort, this kind of heuristic may effectively replace one's own decision when a trusted peer provides the advice (Metzger et al., 2010). Is there a peer effect on perceived information quality on academic social networking sites? We hypothesised that:

H5: Higher levels of information quality will be perceived from a peer than from a stranger.

Recommendation cues

In social media, additional context-related cues come by way of the collective opinion of other users. These are recommendation cues, also called bandwagon cues because of their ability to trigger a bandwagon heuristic. The common underlying logic of such cues is that 'if others think this is a good story, then I would think so too' (Sundar, 2008, p.82), based on the belief that people are likely to conform to others' opinions. When assessing online issues, bandwagon heuristics are considered effective cognitive shortcuts (Lin et al., 2016); moreover, there is already ample evidence that rankings, recommendations, and collective comments on social networking sites serve as important tools of information quality judgment (Metzger et al., 2010; Sundar et al., 2009). For instance, online forums often mark a best answer with a five-star rating, which, in the terms of social navigation research, indicates that many other users have found the answer relevant or helpful. Symbols and icons can represent the information quality, popularity, or attractiveness of an item, influencing people's ultimate decisions (Rudat and Buder, 2015).

In the question and answer section of academic social networking sites, buttons such as recommendation or thumbs-up are provided for each specific answer. The number of recommendations may affect decisions: Westerman et al. (2012) verified that having a large number of followers instils confidence in the collective judgment of trustworthiness. To explore whether the bandwagon effect has an impact on users' perceptions of information quality in our chosen setting, we posited that:

H6: Higher levels of information quality will be perceived from highly recommended sources than from less recommended sources.

We also sought to investigate the interactions among the three heuristic cues on academic social networking sites. Hence, we proposed the last hypothesis:

H7: There is an interaction effect among authority cues, peer cues, and recommendation cues.

Common setup of the two experiments

Materials

We chose the question and answer section of ResearchGate as our experimental domain. ResearchGate is one of the world's best-known academic social networks; it takes the form of an online community and helps researchers build personal research homepages, share publications, and communicate with peers (Thelwall and Kousha, 2015). We used this section as our experimental object because its resources are increasing exponentially and its users come from all disciplines around the world. Beyond the rich and active nature of these resources, the section's most important trait is its variety of social features, such as recommendations and numbers of followers, which suit our experimental purposes and allow many social elements to be integrated into the experimental framework. Furthermore, the question and answer section best reflects the interactivity of user-generated content and the social characteristics of users. Finally, the rich responses and the availability of users' personal information provide a strong context for research on authority cues, peer cues, content cues, and recommendation cues. Because answer quality is subjective, its perception may depend on complex factors, and a reasonable explanation of this perception must account for different combinations of cues.

To truly reflect users’ natural habits and accurately manipulate the variables, a mobile, mock ResearchGate site was developed (Figure 1). Materials consisted of question and answer threads populated entirely with real content from ResearchGate, including eight topics with two kinds of questions (resource acquisition questions and discussion acquisition questions). The interface was customised for different cues; real profile photos and names were hidden for privacy reasons.

Procedures of the main experiments

Before conducting the main experiments, we performed a pre-experiment with sixty-four undergraduates; as a result, some ambiguous words in the questionnaire were revised and the stability of our mock system was tested. In our two main experiments, we recruited graduate students from the same public university in China. Each subject received extracurricular credits for his or her participation. Before the experiments, students were asked to complete a short questionnaire on their demographics and their understanding of academic social networking sites.

The main experiments were conducted in a spacious, well-lit classroom. All instructions, requirements and precautions were displayed on the screen. Participants accessed the randomised experimental materials on the mock site by mobile phone (all materials were distributed using a random matching method to prevent sequence effects). We did not inform participants of the true intention of the experiment; instead, we simply stated that we wanted to test the functions of the question and answer community. Table 1 gives an overview of the main experiments.

Table 1: Overview of the main experiments

| Focus | Manipulation | Controlled for |
|---|---|---|
| Experiment 1: Content cue and authority cue under different question types | We manipulated the content cue (high vs. low content value) and the authority cue (high vs. low). The question type was either resource acquisition or discussion acquisition. | We controlled for the peer cue and the recommendation cue; neither was displayed on the page. |
| Experiment 2: Authority cue, peer cue and recommendation cue | We manipulated the authority cue (high vs. low), the peer cue (from a peer vs. from a stranger) and the recommendation cue (high vs. low). | We controlled for the content cue; all subjects received low content value material.(a) |

(a) In Experiment 2, we used low content value material because the findings of Experiment 1 indicated that high content value has a stronger effect on perceived information quality. Using low content value material would therefore seem to be the more conservative test of our hypotheses.
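The text states only that materials were distributed "using a random matching method" to prevent sequence effects. As an illustration, here is a minimal sketch of one common way to do this, assuming simple balanced randomisation: shuffle the participants, deal them round-robin into the between-subject conditions, and shuffle each participant's presentation order. The helper names, the seed and the 20 participants are hypothetical and do not describe the authors' actual procedure.

```python
import random
from collections import Counter
from itertools import product


def assign_balanced(participant_ids, conditions, seed=None):
    """Shuffle participants, then deal them round-robin into conditions so
    that group sizes differ by at most one (hypothetical helper)."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(ids)}


def presentation_order(materials, rng):
    """Return a shuffled copy of one participant's materials, so that no
    fixed sequence is shared across participants."""
    order = list(materials)
    rng.shuffle(order)
    return order


# Experiment 1's four between-subject cells: content value x authority cue.
conditions = list(product(["high content", "low content"],
                          ["high authority", "low authority"]))
groups = assign_balanced(range(20), conditions, seed=1)
print(Counter(groups.values()))  # each of the four cells holds 5 participants

rng = random.Random(2)
print(presentation_order(["resource acquisition", "discussion acquisition"], rng))
```

Dealing a shuffled list round-robin keeps cell sizes equal whenever the number of participants is a multiple of the number of cells, which plain independent random draws would not guarantee.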

Manipulation

Our research manipulated five variables. The first was the question type: drawing on the results of Fahy et al. (2001), we divided the questions into resource acquisition and discussion acquisition categories. The resource acquisition questions sought assistance in obtaining various resources and information (e.g., books, software). The discussion acquisition questions raised controversial or suggestive issues with no fixed answer criteria. We designed four different topics for each question type (see Table 2).

Table 2: Question topics used for each question type

| Question type | Question | Asker |
|---|---|---|
| Discussion acquisition | What is the difference between literature review, theoretical analysis and conceptual analysis? | Muhammad Kamaldeen Imam |
| Discussion acquisition | For investigating the present project, what methods of data collection should I employ? | Mary Zhang |
| Discussion acquisition | What is the best method for analyzing interviews? | Azam Nemati Chermahini |
| Discussion acquisition | How can we distinguish between mediator and moderator variable, theoretically? | Amer Ali Al-Atwi |
| Resource acquisition | Does anyone know the online free SPSS software for beginners learning? | George Duke Mukoro |
| Resource acquisition | Recommended references about optimal control. | Poom Jatunitanon |
| Resource acquisition | What is the "Bible" of statistics? | Plamen Akaliyski |
| Resource acquisition | Recommended statistics books to learn R? | Thomas Duda |

The second variable was content value. Here, we relied on experts' judgments as proxies, following the approach of a similar study (John et al., 2011). The experts each rated every answer as high or low value; their Cohen's kappa was 0.81, indicating good agreement.
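Cohen's kappa corrects the raw agreement between two raters for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of items on which the raters agree and p_e is the agreement expected from each rater's marginal label frequencies. A minimal sketch for two raters labelling answers as high or low value; the ten ratings below are invented for illustration and are not the study's data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[label] * count_b[label] for label in count_a) / n**2
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of ten answers; the raters disagree on one item only.
a = ["high", "high", "low", "low", "high", "low", "high", "low", "high", "low"]
b = ["high", "high", "low", "low", "high", "low", "high", "low", "low", "low"]
print(round(cohens_kappa(a, b), 2))  # 0.8
```

Here p_o = 0.9 and p_e = 0.5, so 90% raw agreement shrinks to κ = 0.8 once chance is removed. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.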

The remaining three variables reflect the other three types of cues. The authority cue was manipulated by whether the respondent held a doctorate or a master's degree: high authority was operationalised as possession of a doctorate. The peer cue was manipulated by presenting the respondent either as a member of the participant's peer group or as a stranger. The recommendation cue was operationalised as the number of followers of the answer: after a careful investigation of academic social networking sites, we assigned thirty followers to an answer in the high recommendation condition and one follower in the low recommendation condition.
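The three context-related cues therefore reduce to three binary settings on the mock answer page. A minimal sketch of how one such condition could be represented and rendered, assuming a plain-text header above each answer; the class name, field names and label wording are hypothetical, and the paper does not say exactly how peer status was displayed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CueCondition:
    """One combination of the three context-related cues (hypothetical)."""
    high_authority: bool       # doctorate (high) vs. master's degree (low)
    from_peer: bool            # respondent from the participant's peer group vs. a stranger
    high_recommendation: bool  # 30 followers (high) vs. 1 follower (low)


def answer_header(cond: CueCondition) -> str:
    """Render the cue values as a one-line header shown above an answer."""
    degree = "Doctorate" if cond.high_authority else "Master's degree"
    relation = "Peer" if cond.from_peer else "Stranger"
    followers = 30 if cond.high_recommendation else 1
    noun = "follower" if followers == 1 else "followers"
    return f"{degree} | {relation} | {followers} {noun}"


print(answer_header(CueCondition(high_authority=True, from_peer=False,
                                 high_recommendation=True)))
# Doctorate | Stranger | 30 followers
```

Keeping each condition as an immutable value makes it easy to enumerate every cue combination and to guarantee that only the manipulated fields differ between versions of the same answer.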

Dependent and control variables

The perceived information quality of the threads on the academic social networking sites was measured using a 5-point Likert-type scale modified from Lee et al. (2002) and Dedeke (2000). Participants were asked to indicate how well five attributes (accuracy, reliability, relevance, integrity, comprehensibility) described the information quality that they perceived.
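The text does not state how the five item ratings were combined into one score. Because the reported means all fall within the 1 to 5 range of the scale, averaging the items is one plausible scoring rule, and the sketch below assumes it. The function name and the example ratings are hypothetical.

```python
QUALITY_ITEMS = ("accuracy", "reliability", "relevance", "integrity", "comprehensibility")


def perceived_quality(ratings: dict) -> float:
    """Average one participant's five 1-5 Likert ratings into a single score
    (assumed scoring rule, not confirmed by the paper)."""
    for item in QUALITY_ITEMS:
        if not 1 <= ratings[item] <= 5:
            raise ValueError(f"{item} rating {ratings[item]} is outside 1-5")
    return sum(ratings[item] for item in QUALITY_ITEMS) / len(QUALITY_ITEMS)


example = {"accuracy": 4, "reliability": 4, "relevance": 5,
           "integrity": 3, "comprehensibility": 4}
print(perceived_quality(example))  # 4.0
```

A missing item raises `KeyError` rather than silently averaging fewer ratings, so incomplete questionnaires surface instead of shifting a participant's score.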

Five control variables were included in our data analysis. These were (1) sex, (2) age, (3) academic background, (4) academic social networking sites’ usage time, and (5) academic social networking sites’ usage type.

Participants

In all, 163 graduate students registered for our experiments, of whom 144 completed both Experiment 1 and Experiment 2 (65 males and 79 females). Participants' ages ranged from 22 to 26 (M = 23.63, SD = 1.02). The most prevalent academic backgrounds were management, economics, engineering, science and literature.

Experiment 1

Experiment design

Experiment 1 examined the effects of content value cues and authority cues on perceived information quality. To test hypotheses H1 to H4, we conducted a 2 × 2 × 2 mixed-design repeated measures analysis of variance (ANOVA), with question type (resource acquisition vs. discussion acquisition) as a within-subject factor, content value (high vs. low) and authority cue (high vs. low) as between-subject factors, and perceived information quality as the dependent variable. Because question type was the within-subject factor, the design yielded four treatment groups, and participants in each group read both kinds of questions. Details of the manipulation are described in the previous section on the experimental setup.

Data analysis

Table 3 presents descriptive statistics, with ANOVA results shown in Table 4. The ANOVA reveals a significant main effect of content cue, F(1, 140) = 248.53, p < 0.0005, partial η2 = 0.64, indicating that participants perceived answers with high content value (M = 3.80, SD = 0.86) as having higher information quality than answers with low content value (M = 2.30, SD = 0.77). Therefore, H1 was supported.

Likewise, a significant main effect was found for source authority, F(1, 140) = 16.86, p < 0.0005, partial η2 = 0.11. Participants rated information with high source authority (M = 3.25, SD = 0.68) as having higher quality than information with low source authority (M = 2.86, SD = 0.47). Thus, H3 was confirmed.

Table 3: Descriptive statistics for Experiment 1

| Source authority | Content value | Question type | M | SD | N |
|---|---|---|---|---|---|
| High | High | Discussion acquisition | 3.83 | 0.47 | 18 |
| High | High | Resource acquisition | 4.04 | 0.69 | 18 |
| High | Low | Discussion acquisition | 2.02 | 0.54 | 18 |
| High | Low | Resource acquisition | 3.09 | 1.18 | 18 |
| Low | High | Discussion acquisition | 3.57 | 0.76 | 18 |
| Low | High | Resource acquisition | 3.77 | 0.77 | 18 |
| Low | Low | Discussion acquisition | 1.76 | 0.60 | 18 |
| Low | Low | Resource acquisition | 2.34 | 0.74 | 18 |

Table 4: ANOVA results for Experiment 1

| Factor | Level | M | SD | F | p | Partial η² |
|---|---|---|---|---|---|---|
| Source authority | High | 3.25 | 0.68 | 16.86 | <0.0005 | 0.11 |
| | Low | 2.86 | 0.47 | | | |
| Content value | High | 3.80 | 0.86 | 248.53 | <0.0005 | 0.64 |
| | Low | 2.30 | 0.77 | | | |
| Question type | Discussion acquisition | 2.79 | 0.75 | 41.75 | <0.0005 | 0.23 |
| | Resource acquisition | 3.31 | 0.97 | | | |
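Partial η² can be recovered from any reported F statistic and its degrees of freedom, because η²p = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error) together give η²p = F·df_effect / (F·df_effect + df_error). A short consistency check on the Experiment 1 main effects, using the F values and F(1, 140) degrees of freedom reported above:

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


reported = {"content value": 248.53, "source authority": 16.86, "question type": 41.75}
for factor, f_value in reported.items():
    print(factor, round(partial_eta_squared(f_value, 1, 140), 2))
# content value 0.64
# source authority 0.11
# question type 0.23
```

The recovered values match the partial η² column of Table 4, which is a quick way to catch transcription errors in reported effect sizes.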

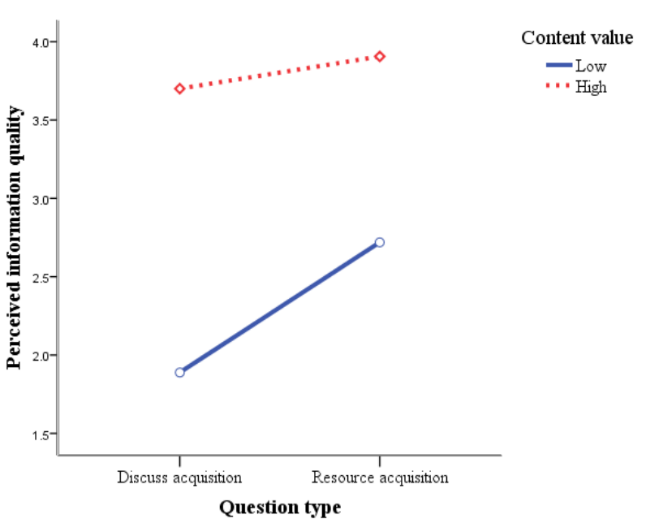

We also found a significant interaction effect between content value and question type, Wilks' Lambda = 0.90, F(1, 140) = 15.19, p < 0.0005, partial η2 = 0.10. As shown in Figure 2, the influence of content value varied by question type: for discussion acquisition questions, high content value led to a significantly higher perception of information quality than did low content value. Thus, H2 was supported. However, no interaction effect was observed between content value and authority cue, so H4 was not corroborated.

Figure 2: Interaction effects of content value and question type
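For an effect with a single hypothesis degree of freedom, such as the interaction of two two-level factors reported above, the multivariate test reduces to the univariate F, so Wilks' Λ = df_error / (df_error + F) and partial η² = 1 − Λ. A quick consistency check on the reported values for the content value × question type interaction:

```python
def wilks_lambda_single_df(f_value: float, df_error: int) -> float:
    """Wilks' lambda implied by F(1, df_error) for an effect with one hypothesis df."""
    return df_error / (df_error + f_value)


lam = wilks_lambda_single_df(15.19, df_error=140)
print(round(lam, 2), round(1 - lam, 2))  # 0.9 0.1
```

The implied Λ of 0.90 matches the reported Wilks' Lambda, and the corresponding partial η² is about 0.10, the same value that F / (F + df_error) gives for a one-df effect.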

Discussion

Experiment 1 investigated the impacts of content cues and authority cues on perceived information quality under different question types. First, results showed that high content value led to higher information quality perception than did low content value, especially for discussion acquisition questions. Previous research, however, has paid more attention to peripheral cues than to central cues. It is true that perceptual behaviour is related to the external stimuli provided by the information environment, but this behaviour is also influenced by users' own internal needs. As Metzger (2007) pointed out, the degree to which online information is scrutinised depends on individual ability and initial motivation. Users of academic social networking sites in particular are both motivated and purposeful, and are more sensitive to internal cues (Koriat et al., 2009). According to the dual-process model, such users are apt to take a more rigorous and systematic approach (Metzger, 2007). Examples of a content value effect outside academic social networking sites include the frequency with which high-content-value Twitter news items are forwarded (Rudat and Buder, 2015) and the large observed impact of a Website's content value on user stickiness (Weber and Roehl, 1999).

Secondly, our findings also suggest that users attend to source authority cues to save effort in information processing. Highly authoritative information sources lead users to perceive a higher level of information quality. This finding echoes many previous studies: for example, Fogg et al. (2003) believed that the display of qualifications and identities was crucial to enhancing the credibility of online information. Lin, Spence and Lachlan (2016) likewise found that information from professional organizations on the Chinese site Weibo has higher credibility. Most of the credibility literature has followed suit in describing source authority as a major criterion. This kind of judgment is based on the authority heuristic, a cognitive tendency that in its most reductive form amounts to the belief that authoritative people always say the right thing. No interaction effect between content value and source authority was found in Experiment 1, suggesting that central and peripheral cues operated independently in this context: when users are already highly knowledgeable, they are more likely to choose the central processing path, and vice versa.

Experiment 2

Experiment design

Experiment 2 examined the effects of authority, peer, and recommendation cues on perceived information quality. A three-way ANOVA was conducted to explore their main effects and interaction effects, with authority (high vs. low), peer (peer vs. stranger) and recommendation (high vs. low) cues as independent variables, and perceived information quality as the dependent variable. There were eight treatment groups. Because Experiment 2 was designed to examine the influence of peripheral cues, content was held constant: all participants received low content value material. (Again, see above for details of the manipulation.)
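In a fully crossed between-subjects design, every combination of factor levels is a separate cell, and the error degrees of freedom for the F tests equal the number of participants minus the number of cells. A minimal sketch, assuming that all 144 completers took part in Experiment 2 and that each saw exactly one of the eight conditions (the paper reports eight treatment groups but not the per-group allocation); the helper name is hypothetical.

```python
from itertools import product


def crossed_cells(factors: dict) -> list:
    """All combinations of factor levels in a fully crossed design."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


factors = {
    "authority": ("high", "low"),
    "peer": ("peer", "stranger"),
    "recommendation": ("high", "low"),
}
cells = crossed_cells(factors)
n_participants = 144
df_error = n_participants - len(cells)
print(len(cells), n_participants // len(cells), df_error)  # 8 18 136
```

Under these assumptions each cell holds 18 participants, and the error df of 136 is the value reported for the three-way interaction test, F(1, 136).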

Data analysis

The main-effect results are shown in Table 5. The analysis found a significant main effect for the peer cue, F(1, 136) = 29.19, p < 0.0005, partial η2 = 0.18. That is, higher levels of information quality were perceived in answers from a peer (M = 3.23, SD = 1.07) than in those from a stranger (M = 2.68, SD = 0.82). Therefore, H5 was supported. We also observed a significant main effect for the recommendation cue, F(1, 136) = 42.18, p < 0.0005, partial η2 = 0.24: answers marked with a high number of recommendations (M = 3.29, SD = 0.45) were perceived as having higher information quality than answers with few recommendations (M = 2.63, SD = 0.63). Hence, H6 was also confirmed.

Table 5: Main effects of source authority, peer, and recommendation cues on perceived information quality (experiment 2)

| Factor | Level | M | SD | F | p | Partial η2 |
|---|---|---|---|---|---|---|
| Source authority | High | 3.36 | 0.67 | 62.62 | <0.0005 | 0.32 |
| | Low | 2.56 | 0.52 | | | |
| Peer cue | With peer | 3.23 | 1.07 | 29.19 | <0.0005 | 0.18 |
| | Without peer | 2.68 | 0.82 | | | |
| Recommendation cue | High | 3.29 | 0.45 | 42.18 | <0.0005 | 0.24 |
| | Low | 2.63 | 0.63 | | | |
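In a factorial design, each main effect compares two marginal means, each averaged over the levels of the other two cues. The sketch below illustrates this under two stated assumptions: equal cell sizes (so the unweighted average of cell means equals the marginal mean), and a set of hypothetical cell means chosen purely for illustration. These values are not the study's data and do not reproduce Table 5; the helper names are likewise hypothetical.

```python
from statistics import mean

FACTORS = ("authority", "peer", "recommendation")

# Hypothetical cell means keyed by (authority, peer, recommendation).
# Illustrative values only; they are NOT the data reported in Table 5.
CELL_MEANS = {
    ("high", "with", "high"): 4.0,
    ("high", "with", "low"): 3.0,
    ("high", "without", "high"): 3.4,
    ("high", "without", "low"): 3.0,
    ("low", "with", "high"): 3.2,
    ("low", "with", "low"): 2.6,
    ("low", "without", "high"): 2.6,
    ("low", "without", "low"): 2.2,
}


def marginal_mean(cell_means, factor, level):
    """Unweighted mean of all cells at one level of a factor (assumes equal cell sizes)."""
    i = FACTORS.index(factor)
    return mean(m for cell, m in cell_means.items() if cell[i] == level)


def main_effect(cell_means, factor, level_a, level_b):
    """Difference between the marginal means of two levels of a factor."""
    return marginal_mean(cell_means, factor, level_a) - marginal_mean(cell_means, factor, level_b)


for factor, a, b in [("authority", "high", "low"),
                     ("peer", "with", "without"),
                     ("recommendation", "high", "low")]:
    print(f"{factor}: {a} - {b} = {main_effect(CELL_MEANS, factor, a, b):.2f}")
```

With unequal cell sizes, the marginal means would instead need to be weighted by the number of participants in each cell.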

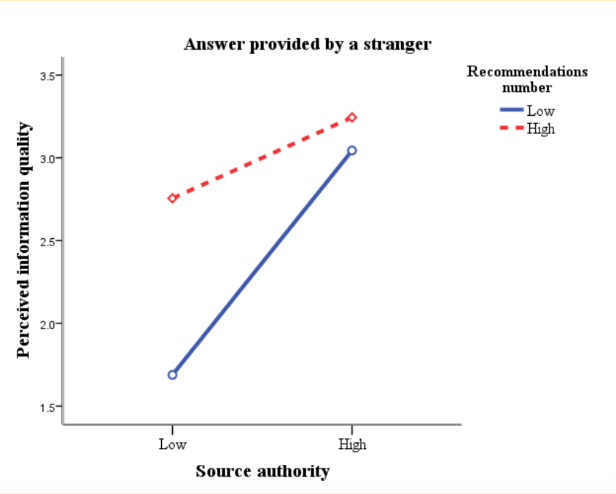

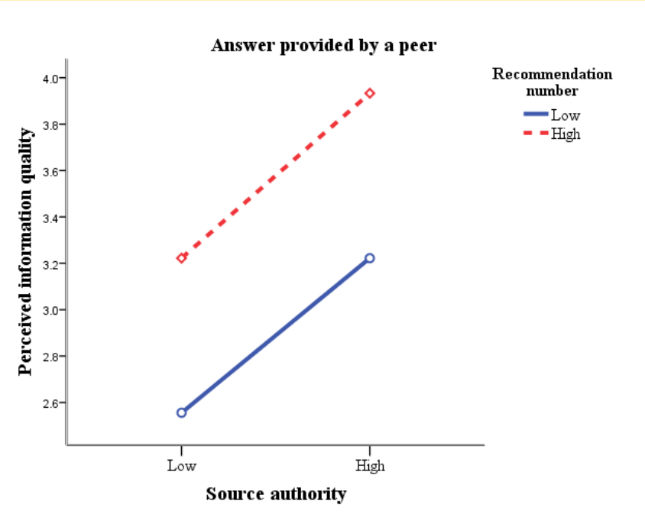

We then examined perceived information quality separately for each type of origin (peer or stranger). For answers provided by a stranger, the individual effects of authority and recommendation cues were both significant, as was their interaction (see Figure 3(a)). Likewise, for answers provided by a peer, authority and recommendation cues had significant effects, and a significant interaction was observed here as well (see Figure 3(b)). In brief, whether the answer was provided by a stranger or by a peer, there was a significant interaction between source authority and recommendation count. Although the two figures give an intuitive picture of the interaction among the three cues, a formal statistical test was necessary. The ANOVA showed that the three-way interaction was statistically significant: F(1, 136) = 5.01, p = 0.03, partial η2 = 0.04. Thus, H7 was supported.

Figure 3(a): Interaction effect of authority cue and recommendation cue (answers from a stranger)

Figure 3(b): Interaction effect of authority cue and recommendation cue (answers from a peer)
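The reported effect sizes can be checked against the F statistics. Because partial η2 = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), it follows that partial η2 = F·df_effect / (F·df_effect + df_error). A minimal Python sketch of this conversion follows; the function name `partial_eta_squared` is a hypothetical helper. Applied to the three-way interaction, F(1, 136) = 5.01, it gives approximately 0.036, which rounds to the reported 0.04.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom.

    Since SS_effect / SS_error = F * df_effect / df_error,
    partial eta^2 = F * df_effect / (F * df_effect + df_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Three-way interaction reported above: F(1, 136) = 5.01.
print(round(partial_eta_squared(5.01, 1, 136), 3))
```

The conversion depends on the error degrees of freedom of the model in which the effect was tested, so the same F value yields a different partial η2 under a different error term.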

Having established the significance of the three-way interaction, we examined the simple two-way interactions more closely. When source authority was low, the peer cue had a significant effect on highly recommended answers, F(1, 139) = 6.50, p = 0.01, and a marginal effect on answers with few recommendations, F(1, 139) = 2.98, p = 0.09. When source authority was high, the peer cue had a significant effect if the recommendation count was high, F(1, 139) = 10.29, p < 0.0005; otherwise, the effect was not significant.
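The simple-effects logic can be made concrete with cell means. The sketch below computes, from hypothetical cell means (illustrative values, not the study's data), the peer-minus-stranger difference within each authority × recommendation combination, and the three-way interaction contrast, that is, how much the peer × recommendation interaction differs between high and low authority. These are point estimates only; the F tests reported above additionally depend on within-cell variance and sample size. The helper names are hypothetical.

```python
from itertools import product

LEVELS = ("high", "low")

# Hypothetical cell means keyed by (authority, peer, recommendation).
# Illustrative values only; they are NOT the study's data.
CELL_MEANS = {
    ("high", "with", "high"): 4.0,
    ("high", "with", "low"): 3.0,
    ("high", "without", "high"): 3.4,
    ("high", "without", "low"): 3.0,
    ("low", "with", "high"): 3.2,
    ("low", "with", "low"): 2.6,
    ("low", "without", "high"): 2.6,
    ("low", "without", "low"): 2.2,
}


def simple_peer_effects(cell_means):
    """Peer-minus-stranger difference within each authority x recommendation cell."""
    return {
        (authority, rec): cell_means[(authority, "with", rec)]
        - cell_means[(authority, "without", rec)]
        for authority, rec in product(LEVELS, LEVELS)
    }


def three_way_contrast(cell_means):
    """Difference of differences of differences for a 2 x 2 x 2 design.

    The peer x recommendation interaction is computed at each authority level;
    the three-way contrast is how much that two-way interaction changes
    between high and low authority.
    """
    effects = simple_peer_effects(cell_means)
    two_way = {a: effects[(a, "high")] - effects[(a, "low")] for a in LEVELS}
    return two_way["high"] - two_way["low"]


for (authority, rec), diff in simple_peer_effects(CELL_MEANS).items():
    print(f"authority={authority}, recommendation={rec}: peer effect = {diff:+.2f}")
print(f"three-way contrast = {three_way_contrast(CELL_MEANS):+.2f}")
```

A non-zero three-way contrast means that the way the peer effect depends on recommendation count is itself different at the two authority levels.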

Discussion

We considered the influences of peripheral cues on perceived information quality in experiment 2. The main effects of all three peripheral cues were significant, indicating that users tend to consider contextual factors and that their perceptual behaviours are affected by both source familiarity and the opinions of others. Previous research, too, has found that people ascribe greater recognition to accepted alternatives than to unaccepted alternatives (Gigerenzer and Todd, 1999) and often perceive familiar sources as more trustworthy than unfamiliar sources (O’Keefe, 1990). Because people are more inclined to accept familiar sources, they tend to agree with answers provided by peers and to perceive those answers as having higher information quality.

Furthermore, previous studies have suggested that users judge the quality of online information in light of popularity cues (Fu and Sim, 2011). Our results confirm this bandwagon effect: users tend to imitate the behaviour or opinions of others in order to reduce cognitive load and improve the validity of their decisions (Shi et al., 2017).

Finally, our study found an interaction among authority, peer, and recommendation cues. More specifically, our examination of simple two-way interactions showed that when source authority was high, the peer cue had a significant effect only when the recommendation count was also high. In line with the 'cues cumulative effect' proposed by Sundar et al. (2009, p. 4234), the coexistence of more cues seems to strengthen their positive effect on perception. When the source of an answer is authoritative and the answer itself is highly recommended by others, such a cumulative effect is induced, and the positive effect of further cues (e.g., the peer cue) is enhanced. Our findings thus provide a noteworthy illustration of the complexity of cue selection.

Conclusions

In this study, we set out to develop a broader understanding of information quality perception by investigating several cues on which users depend. Drawing on the dual-process model, we theorised that users rely on both central and peripheral cues when making decisions. We performed two controlled experiments to test our seven hypotheses and discussed the results in depth. Our study has several implications, discussed below.

Theoretical contributions

Our approach offers a more complete understanding of users’ perception and judgment in an online context and extends the literature in two ways. First, we studied information quality from the perspective of users’ perception: rather than identifying the dimensions of information quality, as previous studies have done, we focused on how users actually perceive information quality, enriching research on information quality perception.

Secondly, few studies of online information quality judgment to date have examined the effects of cues comprehensively; most have focused only on peripheral cues. We incorporated both content cues and context-related cues into our research framework and conducted two experiments to analyse the influence of multiple cues and to explore the quantitative relationships among them. Our findings confirm the complexity of the individual’s perceptual process: even users with a high level of knowledge or motivation do not rely solely on content cues. Such individuals still employ context-related cues and tend to follow the crowd when they wish to reduce cognitive effort.

Practical and managerial implications

In the complex online information environment of academic social networking sites, the provision and presentation of information cues are important factors in determining users' perception of information quality. This study therefore also offers practical implications for interface and information system design. Effective systems for feedback, evaluation, and reputation assessment should attend not only to the relevant indicators of attention and design but also to the simultaneous presentation of different types of cues that appeal to the psychology of different users. In general, a platform's positioning and display strategy ultimately affects users’ perception of information quality, so the developers of response buttons should fully understand the cue characteristics that drive this perception.

Specifically, platform designers and service providers should focus on the human-computer interaction aspect of their work, optimising their product’s presentation according to the relative importance of cues and combining multiple information cue strategies to provide multi-channel references for decision-making, with the overall goal of reducing uncertainty in users' information quality judgments. Furthermore, understanding how users’ information quality perceptions arise can benefit course design and information literacy interventions. We believe that the influence of cues on users' perceptual behaviour is not restricted to academic social networking sites but is likely to extend to other social media platforms.

Future research and limitations

Our study is limited in some respects. First, our experimental sample consisted entirely of graduate students, which may limit the generalisability of the results; future research should therefore consider samples with more varied characteristics (e.g., educational background, age, professional knowledge). Secondly, although we applied a strict experimental manipulation, our method cannot fully explain the causal mechanism by which these cues exert their influence; future work could therefore use other research methods, such as post-experiment in-depth interviews, to obtain more convincing findings. Finally, our experimental system simulated an actual academic social networking site only to a limited extent, presenting a single mock ResearchGate page with variations that incorporated different cues. A future study could improve the simulation, for instance by allowing participants to scroll through the page to obtain more information. We believe this would enhance the validity of our work.

About the authors

Ning Zhang is a Professor at the School of Information, Guizhou University of Finance and Economics, Guiyang 550025, Guizhou Province, P.R. China. She received her PhD degree from Nanjing University, China, and her main research interests are Internet user behaviour, social media, information analysis, and information quality. She can be contacted at ningzhang@mail.gufe.edu.cn.

Qinjian Yuan is a Professor at the School of Information Management at Nanjing University, Nanjing 210023, Jiangsu Province, P.R. China. His main research interests are Internet user behaviour, the digital divide, and social media. His contact address is yuanqj@nju.edu.cn.

Xin Xiang is a PhD student at the School of Information Management at Nanjing University, Nanjing 210023, Jiangsu Province, P.R. China. His main research interests are Internet user behaviour, cybermetrics, and information analysis. His contact address is xiangx@mail.gufe.edu.cn.

Kuanchin Chen is a Professor of Computer Information Systems and John W. Snyder Faculty Fellow at Western Michigan University. He is a co-director of WMU’s Center for Business Analytics. His research interests include electronic business, analytics, social networking, project management, privacy and security, online behavioural issues, data mining and human computer interactions. He can be contacted at kc.chen@wmich.edu.

Acknowledgments

The authors appreciate the regional editor and the anonymous reviewers for their insightful comments and constructive suggestions. This work was supported by the Talent Introduction Program of Guizhou University of Finance and Economics (No. 2019YJ052).

References

- Briggs, P., Burford, B., De Angeli, A., & Lynch, P. (2002). Trust in online advice. Social Science Computer Review, 20(3), 321-332. https://doi.org/10.1177/089443930202000309

- Castillo, C., Mendoza, M., & Poblete, B. (2011). Information credibility on Twitter. In WWW'11: Proceedings of the 20th International Conference on World Wide Web, Hyderabad, India, March 2011 (pp. 675-684). Association for Computing Machinery. https://dl.acm.org/doi/10.1145/1963405.1963500

- Chaiken, S. (1980). Heuristic versus systematic information processing and the use of source versus message cues in persuasion. Journal of Personality and Social Psychology, 39(5), 752-766. https://doi.org/10.1037/0022-3514.39.5.752

- Choo, C. W. (2009). Information use and early warning effectiveness: perspectives and prospects. Journal of the Association for Information Science and Technology, 60(5), 1071-1082. https://doi.org/10.1002/asi.21038

- Chua, A. Y., & Banerjee, S. (2013). So fast so good: an analysis of answer quality and answer speed in community question-answering sites. Journal of the Association for Information Science and Technology, 64(10), 2058-2068. https://doi.org/10.1002/asi.22902

- Dedeke, A. (2000). A conceptual framework for developing quality measures for information systems. In B.D. Klein and D.F. Rossin, (Eds.). Fifth Conference on Information Quality (IQ 2000), MIT Sloan School of Management, Cambridge, MA, USA (pp. 126-128). MIT Press. https://bit.ly/3vCSmil. (Archived by the Internet Archive at https://bit.ly/3eMuZfr)

- Eysenbach, G. (2008). Credibility of health information and digital media: new perspective and implications for youth. In M.J. Metzger, and A.J. Flanagin, (Eds.). Digital media, youth, and credibility (pp. 123-154). MIT Press.

- Eysenbach, G., & Kohler, C. (2002). How do consumers search for and appraise health information on the World Wide Web? Qualitative study using focus groups, usability tests, and in-depth interviews. British Medical Journal, 324(7337), 573-577. https://doi.org/10.1136/bmj.324.7337.573

- Fahy, P. J., Crawford, G., & Ally, M. (2001). Patterns of interaction in a computer conference transcript. International Review of Research in Open & Distance Learning, 2(1), 1-24. https://doi.org/10.19173/irrodl.v2i1.36

- Fogg, B. J. (2003). Prominence-interpretation theory: explaining how people assess credibility online. In G. Cockton, P. Korhonen, (Eds.). CHI EA '03: CHI '03 Extended Abstracts on Human Factors in Computing Systems, Ft. Lauderdale, Florida, USA, April, 2003 (pp. 722-723). Association for Computing Machinery. https://dl.acm.org/doi/abs/10.1145/765891.765951

- Fogg, B. J., & Tseng, H. (1999). The elements of computer credibility. In G. M. Williams (Ed.). CHI '99: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Pittsburgh, Pennsylvania, USA, May, 1999 (pp. 80-87). Association for Computing Machinery. https://dl.acm.org/doi/10.1145/302979.303001

- Fogg, B. J., Soohoo, C., Danielson, D. R., Marable, L., Stanford, J., & Tauber, E. R. (2003). How do users evaluate the credibility of Web sites? A study with over 2,500 participants. In J. Arnowitz (Ed.). DUX '03: Proceedings of the 2003 Conference on Designing for User Experiences, San Francisco, California, June, 2003 (pp. 1-15). Association for Computing Machinery. https://dl.acm.org/doi/abs/10.1145/997078.997097

- Fu, W. W., & Sim, C. (2011). Aggregate bandwagon effect on online videos' viewership: value uncertainty, popularity cues, and heuristics. Journal of the Association for Information Science and Technology, 62(12), 2382-2395. https://doi.org/10.1002/asi.21641

- Ghasemaghaei, M., & Hassanein, K. (2016). A macro model of online information quality perceptions: a review and synthesis of the literature. Computers in Human Behavior, 55, 972-991. https://doi.org/10.1016/j.chb.2015.09.027

- Gigerenzer, G., & Todd, P. M. (1999). Simple heuristics that make us smart. Oxford University Press.

- Harper, F. M., Weinberg, J., Logie, J., & Konstan, J. A. (2010). Question types in social Q&A sites. First Monday, 15(7), 1-21. https://firstmonday.org/ojs/index.php/fm/article/view/2913 (Archived by the Internet Archive at https://bit.ly/3nIkoWL)

- Harper, F. M., Raban, D., Rafaeli, S., & Konstan, J. A. (2008). Predictors of answer quality in online Q&A sites. In CHI '08: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, April, 2008 (pp. 865-874). Association for Computing Machinery. https://dl.acm.org/doi/10.1145/1357054.1357191

- Hilligoss, B., & Rieh, S. Y. (2008). Developing a unifying framework of credibility assessment: Construct, heuristics, and interaction in context. Information Processing and Management, 44(4), 1467-1484. https://doi.org/10.1016/j.ipm.2007.10.001

- Horrigan, J. (2016). Libraries 2016. Pew Research Center. http://www.pewinternet.org/2016/09/09/2016/Libraries-2016. (Archived by the Internet Archive at https://bit.ly/3ueQqwd)

- John, B., Chua, A. Y., & Goh, D. H. (2011). What makes a high-quality user-generated answer? IEEE Internet Computing, 15(1), 66-71. https://doi.org/10.1109/MIC.2011.23

- Kadriu, A. (2013). Discovering value in academic social networks: a case study in ResearchGate. In V. Luzar-Stiffler, & I. Jarec (Eds), Proceedings of the International Conference on Information Technology Interfaces, ITI, Cavtat/Dubrovnik, Croatia, June 24-27, 2013 (pp. 57-62). IEEE. https://doi.org/10.2498/iti.2013.0566

- Kahn, B. K., Strong, D. M., & Wang, R. Y. (2002). Information quality benchmarks: product and service performance. Communications of the ACM, 45(4), 184-192. https://doi.org/10.1145/505999.506007

- Koriat, A., Ackerman, R., Lockl, K., & Schneider, W. (2009). The memorizing effort heuristic in judgments of learning: a developmental perspective. Journal of Experimental Child Psychology, 102(3), 265-279. https://doi.org/10.1016/j.jecp.2008.10.005

- Lang, A. (2000). The limited capacity model of mediated message processing. Journal of Communication, 50(1), 46-70. https://doi.org/10.1111/j.1460-2466.2000.tb02833.x

- Lee, J. Y., & Sundar, S. S. (2013). To tweet or to retweet? That is the question for health professionals on Twitter. Health Communication, 28(5), 509-524. https://doi.org/10.1080/10410236.2012.700391

- Lee, Y. W., Strong, D. M., Kahn, B. K., & Wang, R. Y. (2002). AIMQ: a methodology for information quality assessment. Information & Management, 40(2), 133-146. https://doi.org/10.1016/S0378-7206(02)00043-5

- Lin, J. J., & Katz, B. (2006). Building a reusable test collection for question answering. Journal of the Association for Information Science and Technology, 57(7), 851-861. https://doi.org/10.1002/asi.20348

- Lin, X., Spence, P. R., & Lachlan, K. A. (2016). Social media and credibility indicators: the effect of influence cues. Computers in Human Behavior, 63, 264-271. https://doi.org/10.1016/j.chb.2016.05.002

- Metzger, M. J. (2007). Making sense of credibility on the Web: models for evaluating online information and recommendations for future research. Journal of the Association for Information Science & Technology, 58(13), 2078-2091. https://doi.org/10.1002/asi.20672

- Metzger, M. J., & Flanagin, A. J. (2013). Credibility and trust of information in online environments: the use of cognitive heuristics. Journal of Pragmatics, 59, 210-220. https://doi.org/10.1016/j.pragma.2013.07.012

- Metzger, M. J., Flanagin, A. J., & Medders, R. B. (2010). Social and heuristic approaches to credibility evaluation online. Journal of Communication, 60(3), 413-439. https://doi.org/10.1111/j.1460-2466.2010.01488.x

- Morris, M. R., Counts, S., Roseway, A., Hoff, A., & Schwarz, J. (2012). Tweeting is believing? Understanding microblog credibility perceptions. In CSCW '12: Proceedings of the ACM 2012 conference on Computer Supported Cooperative Work, Seattle, Washington, USA, February, 2012 (pp. 441-450). Association for Computing Machinery. https://dl.acm.org/doi/10.1145/2145204.2145274

- Mussweiler, T., & Strack, F. (2000). The use of category and exemplar knowledge in the solution of anchoring tasks. Journal of Personality and Social Psychology, 78(6), 1038-1052. https://doi.org/10.1037/0022-3514.78.6.1038

- O’Keefe, D. J. (1990). Persuasion: theory and research. Sage Publications Inc.

- Pirolli, P., & Card, S. K. (1999). Information foraging. Psychological Review, 106(4), 643-675. https://doi.org/10.1037/0033-295X.106.4.643

- Reimer, T., Mata, R., & Stoecklin, M. (2004). The use of heuristics in persuasion: deriving cues on source expertise from argument quality. Current Research in Social Psychology, 10(6), 69-83. https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.409.6177&rep=rep1&type=pdf (Archived by the Internet Archive at https://bit.ly/3aY7S0t)

- Rieh, S. Y. (2001). Judgment of information quality and cognitive authority in the Web. Journal of the Association for Information Science & Technology, 53(2), 145-161. https://doi.org/10.1002/asi.10017

- Ross, W. T., & Creyer, E. H. (1992). Making inferences about missing information: the effects of existing information. Journal of Consumer Research, 19(1), 14-25. https://doi.org/10.1086/209282

- Rudat, A., & Buder, J. (2015). Making retweeting social: the influence of content and context information on sharing news in Twitter. Computers in Human Behavior, 46, 75-84. https://doi.org/10.1016/j.chb.2015.01.005

- Rudat, A., Buder, J., & Hesse, F. W. (2014). Audience design in Twitter: retweeting behavior between informational value and followers’ interests. Computers in Human Behavior, 35, 132-139. https://doi.org/10.1016/j.chb.2014.03.006

- Savolainen, R. (2009). Interpreting informational cues: an explorative study on information use among prospective homebuyers. Journal of the Association for Information Science and Technology, 60(11), 2244-2254. https://doi.org/10.1002/asi.21167

- Shi, J., Lai, K. K., Hu, P., & Chen, G. (2017). Understanding and predicting individual retweeting behavior: receiver perspectives. Applied Soft Computing, 60, 844-857. https://doi.org/10.1016/j.asoc.2017.08.044

- Simon, H. A. (1955). A behavioral model of rational choice. Quarterly Journal of Economics, 69(1), 99-118. https://doi.org/10.2307/1884852

- Sundar, S. S. (2008). The MAIN model: a heuristic approach to understanding technology effects on credibility. In M.J. Metzger & A. J. Flanagin, (Eds.). Digital Media, Youth, and Credibility (pp. 73-100). MIT Press.

- Sundar, S. S., Xu, Q., & Oeldorf-Hirsch, A. (2009). Authority vs. peer: How interface cues influence users. In CHI EA '09: CHI '09 Extended Abstracts on Human Factors in Computing Systems, Boston, MA, USA, April 2009 (pp. 4231-4236). Association for Computing Machinery. https://dl.acm.org/doi/10.1145/1520340.1520645

- Thelwall, M., & Kousha, K. (2015). ResearchGate: disseminating, communicating, and measuring scholarship? Journal of the Association for Information Science and Technology, 66(5), 876-889. https://doi.org/10.1002/asi.23236

- Toncar, M. F., & Munch, J. M. (2001). Consumer responses to tropes in print advertising. Journal of Advertising, 30(1), 55-65. https://doi.org/10.1080/00913367.2001.10673631

- Walther, J. B., & Jang, J. (2012). Communication processes in participatory Websites. Journal of Computer-Mediated Communication, 18(1), 2-15. https://doi.org/10.1111/j.1083-6101.2012.01592.x

- Wathen, C. N., & Burkell, J. (2002). Believe it or not: factors influencing credibility on the Web. Journal of the Association for Information Science and Technology, 53(2), 134-144. https://doi.org/10.1002/asi.10016

- Weber, K., & Roehl, W. S. (1999). Profiling people searching for and purchasing travel products on the World Wide Web. Journal of Travel Research, 37(3), 291-298. https://doi.org/10.1177/004728759903700311

- Westerman, D., Spence, P. R., & Van Der Heide, B. (2012). A social network as information: the effect of system generated reports of connectedness on credibility on Twitter. Computers in Human Behavior, 28(1), 199-206. https://doi.org/10.1016/j.chb.2011.09.001

- Wilson, P. (1983). Second-hand knowledge: an inquiry into cognitive authority. Greenwood Press.

- Wirth, W., Böcking, T., Karnowski, V., & von Pape, T. (2007). Heuristic and systematic use of search engines. Journal of Computer-Mediated Communication, 12(3), 778-800. https://doi.org/10.1111/j.1083-6101.2007.00350.x

- Yan, W., & Zhang, Y. (2018). Research universities on the ResearchGate social networking site: an examination of institutional differences, research activity level, and social networks formed. Journal of Informetrics, 12(1), 385-400. https://doi.org/10.1016/j.joi.2017.08.002

- Yang, J., Counts, S., Morris, M. R., & Hoff, A. (2013). Microblog credibility perceptions: comparing the USA and China. In CSCW '13: Proceedings of the 2013 Conference on Computer Supported Cooperative Work (pp. 575-586). Association for Computing Machinery. https://dl.acm.org/doi/10.1145/2441776.2441841